Hundreds of medical algorithms have been approved on the basis of limited clinical data. Scientists are debating who should test these tools and how best to do it.

Who is testing medical AI systems?

AI-based medical applications, such as the one Singh is developing, are often considered medical devices by drug regulators, including the US FDA and the UK Medicines and Healthcare products Regulatory Agency. Therefore, the standards for review and authorization of use are generally less stringent than those for pharmaceuticals. Only a small subset of devices—those that may pose a high risk to patients—require clinical trial data for approval.

Many people think the threshold is too low. When Gary Weissman, a critical care physician at the University of Pennsylvania in Philadelphia, reviewed FDA-approved AI devices in his field, he found that of the ten devices he identified, only three cited published data in their authorization. Only four mentioned a safety assessment, and none included a bias assessment, which analyzes whether the tool's results are fair to different patient groups. "The concern is that these devices can and do impact bedside care," he said. "Patient lives may depend on these decisions."

The lack of data leaves hospitals and health systems in a difficult position when deciding whether to use these technologies. In some cases, financial incentives also come into play. In the United States, for example, health insurance plans already reimburse hospitals for the use of certain medical AI devices, which makes those devices financially attractive. Institutions may also be inclined to adopt AI tools that promise cost savings, even if the tools do not necessarily improve patient care.

Ouyang said these incentives may discourage AI companies from investing in clinical trials. “For a lot of commercial businesses, you can imagine they’re going to work harder to make sure their AI tools are reimbursable,” he said.

The situation may differ in other markets. In the United Kingdom, for example, a government-funded national health plan might set higher evidence thresholds before medical centers can purchase specific products, said Xiaoxuan Liu, a clinical researcher at the University of Birmingham who studies responsible innovation in artificial intelligence. In that case, Liu said, “there is an incentive to conduct clinical trials.”

Once a hospital purchases an AI product, it does not need to conduct further testing and can start using the product immediately, as with other software. However, some institutions recognize that regulatory approval does not guarantee that a device will actually benefit patients, so they have chosen to test tools themselves. Currently, many of these efforts are run and funded by academic medical centers, Ouyang said.

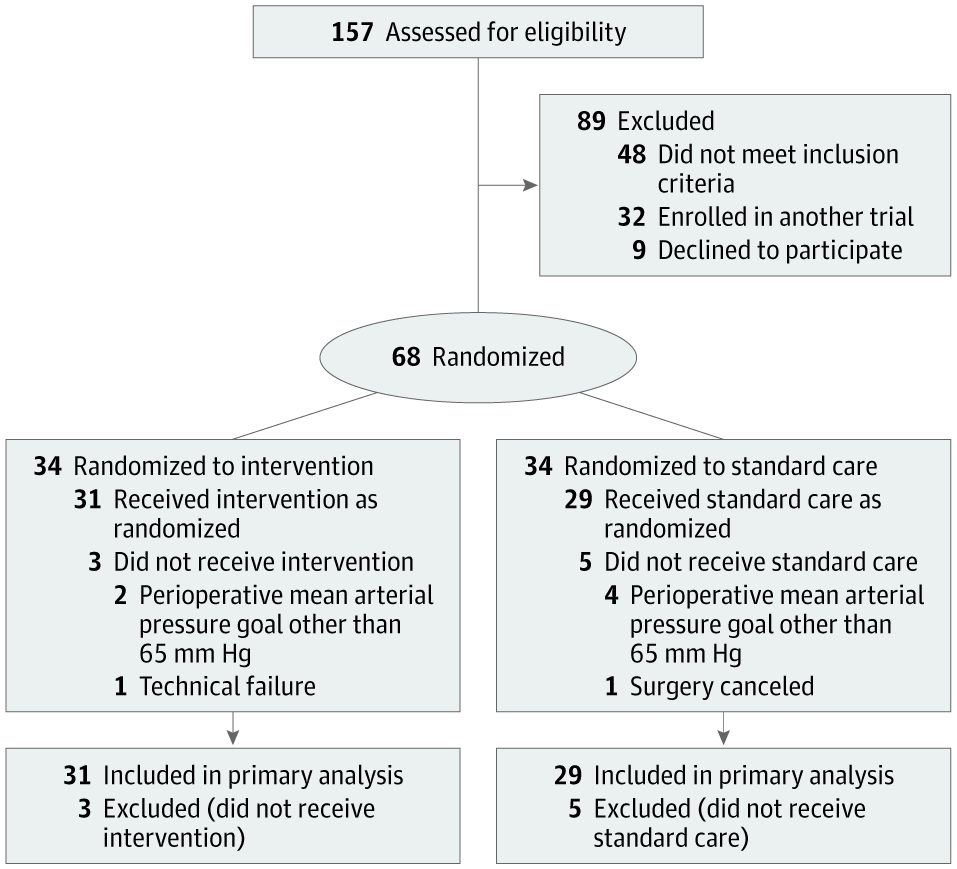

Alexander Vlaar, medical director of intensive care at the University Medical Center Amsterdam, and Denise Veelo, an anesthesiologist at the same institution, began one such effort in 2017. Their goal was to test an algorithm designed to predict low blood pressure during surgery. This condition, known as intraoperative hypotension, can lead to life-threatening complications such as heart-muscle damage, heart attack, acute kidney failure and even death.

The algorithm, developed by California-based Edwards Lifesciences, uses arterial waveform data — the red lines with peaks and troughs displayed on monitors in emergency departments or intensive care units. The method can predict hypotension minutes before it occurs, allowing for early intervention.
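The Edwards Lifesciences algorithm is proprietary, and the article does not describe how it works. To make the idea of "predicting hypotension minutes before it occurs" concrete, here is a deliberately simple sketch that is not the Edwards method: it averages each window of arterial pressure samples into a mean arterial pressure (MAP), fits a least-squares line to recent MAP values and extrapolates to when MAP would cross a threshold. The 65 mmHg threshold (a commonly used definition of intraoperative hypotension) and the function names `mean_arterial_pressure` and `minutes_until_hypotension` are assumptions for illustration.

```python
from typing import Optional, Sequence

# Assumed threshold: MAP below 65 mmHg is a commonly used definition of
# intraoperative hypotension. It is not taken from the Edwards algorithm.
HYPOTENSION_MAP_MMHG = 65.0


def mean_arterial_pressure(samples: Sequence[float]) -> float:
    """Approximate MAP as the average of evenly spaced arterial pressure
    samples (mmHg) covering one or more whole cardiac cycles."""
    if not samples:
        raise ValueError("need at least one pressure sample")
    return sum(samples) / len(samples)


def minutes_until_hypotension(
    map_history: Sequence[float], minutes_per_window: float = 1.0
) -> Optional[float]:
    """Hypothetical trend forecaster: fit a least-squares line to recent MAP
    values and extrapolate from the latest value to the threshold.

    Returns 0.0 if MAP is already at or below the threshold, or None if there
    is too little data or MAP is not falling."""
    if minutes_per_window <= 0:
        raise ValueError("minutes_per_window must be positive")
    n = len(map_history)
    if n == 0:
        return None
    current = map_history[-1]
    if current <= HYPOTENSION_MAP_MMHG:
        return 0.0
    if n < 2:
        return None
    # Time (minutes) of each MAP value, oldest first.
    xs = [i * minutes_per_window for i in range(n)]
    x_bar = sum(xs) / n
    y_bar = sum(map_history) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, map_history))
    slope = sxy / sxx  # change in MAP, mmHg per minute
    if slope >= 0:
        return None  # stable or rising: no crossing predicted
    return (HYPOTENSION_MAP_MMHG - current) / slope


if __name__ == "__main__":
    # One MAP value per minute, falling by 3 mmHg each minute.
    history = [80.0, 77.0, 74.0, 71.0]
    print(minutes_until_hypotension(history))  # 2.0
```

A straight-line trend is about the crudest forecaster possible; it is shown only to illustrate what an early warning "minutes ahead" means in terms of the waveform-derived data, not how a clinical-grade model reaches its prediction.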

Most medical AI tools currently help healthcare professionals with screening, diagnosis or treatment planning. Patients may not be aware that these technologies are being tested or used routinely in their care, and no country requires healthcare providers to disclose this.

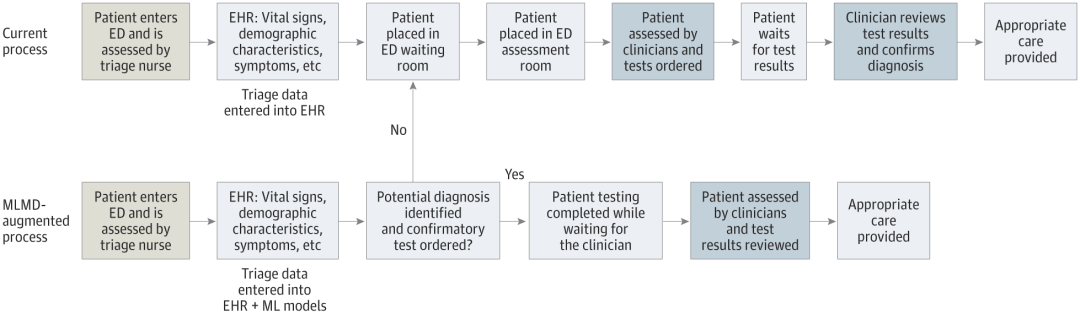

The debate continues over what patients should be told about artificial intelligence technology. Some of these apps have pushed the issue of patient consent into the spotlight for developers. That's the case with an artificial intelligence device that Singh's team is developing to streamline care for children in the SickKids emergency room.

What’s strikingly different about this technology is that it removes the clinician from the entire process, allowing the child (or their parent or guardian) to be the end user.

“What this tool does is take emergency triage data, make predictions and give parents direct approval, yes or no, on whether their child can be tested,” Singh said. This reduces the burden on clinicians and speeds up the whole process. But it also raises many unprecedented questions. Who is responsible if something goes wrong for the patient? Who pays if unnecessary tests are performed?

“We need to obtain informed consent from families in an automated way,” Singh said, and that consent must be reliable and authentic. “This can’t be like when you sign up for social media and there are 20 pages of fine print and you just click accept.”

While Singh and his colleagues wait for funding to start trials with patients, the team is working with legal experts and has engaged the country's regulatory agency, Health Canada, to review its proposals and consider the regulatory implications. For now, "the regulatory landscape is a bit like the Wild West," said Anna Goldenberg, a computer scientist and co-chair of the SickKids Children's Medical Artificial Intelligence Initiative.

Medical institutions adopt AI tools cautiously and test them independently.

Cost factors have prompted researchers and medical institutions to explore alternatives.

Large medical institutions can manage this more easily, while smaller institutions face greater challenges.

Mayo Clinic tests AI tools for use in community health-care settings.

The Coalition for Health AI established assurance laboratories to evaluate models.

Duke University proposes building in-house testing capacity to validate AI models locally.

Radiologist Nina Kottler stresses the importance of local validation.

Attention to human factors is needed to ensure that both the AI and its end users perform accurately.
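In its simplest form, local validation means rerunning a purchased model on a hospital's own labelled cases and checking whether its accuracy holds up. The sketch below assumes the hospital has the model's yes/no outputs, the true outcomes and the sensitivity and specificity the vendor reported; the names `Metrics`, `confusion_metrics`, `meets_reported` and `metrics_by_group`, and the 0.05 tolerance, are hypothetical choices, not any institution's actual protocol. Computing the same metrics per patient group is also a basic form of the bias assessment that Weissman found missing from FDA authorizations.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence


@dataclass
class Metrics:
    sensitivity: float  # true-positive rate
    specificity: float  # true-negative rate


def confusion_metrics(predicted: Sequence[bool], actual: Sequence[bool]) -> Metrics:
    """Sensitivity and specificity of binary predictions against ground truth."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    pairs = list(zip(predicted, actual))
    tp = sum(1 for p, a in pairs if p and a)
    fn = sum(1 for p, a in pairs if not p and a)
    tn = sum(1 for p, a in pairs if not p and not a)
    fp = sum(1 for p, a in pairs if p and not a)
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one positive and one negative case")
    return Metrics(tp / (tp + fn), tn / (tn + fp))


def meets_reported(local: Metrics, reported: Metrics, tolerance: float = 0.05) -> bool:
    """Hypothetical acceptance rule: local performance may fall short of the
    vendor-reported figures by at most `tolerance` on each metric."""
    return (
        local.sensitivity >= reported.sensitivity - tolerance
        and local.specificity >= reported.specificity - tolerance
    )


def metrics_by_group(
    predicted: Sequence[bool], actual: Sequence[bool], groups: Sequence[str]
) -> Dict[str, Metrics]:
    """The same metrics computed separately for each patient group.
    Raises ValueError if a group has no positive or no negative cases."""
    if not (len(predicted) == len(actual) == len(groups)):
        raise ValueError("all inputs must have the same length")
    indices: Dict[str, List[int]] = {}
    for i, group in enumerate(groups):
        indices.setdefault(group, []).append(i)
    return {
        group: confusion_metrics([predicted[i] for i in idx], [actual[i] for i in idx])
        for group, idx in indices.items()
    }


if __name__ == "__main__":
    predicted = [True, True, False, False]
    actual = [True, False, False, True]
    local = confusion_metrics(predicted, actual)
    print(local)  # Metrics(sensitivity=0.5, specificity=0.5)
    print(meets_reported(local, Metrics(0.9, 0.9)))  # False
```

A fixed tolerance keeps the example short; a real validation study would also account for sample size, for example with confidence intervals, before concluding that a tool underperforms locally.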

Source: https://www.nature.com/articles/d41586-024-02675-0
