How to create an AI chatbot using one API to access multiple LLMs

Originally published on the Streamlit blog by Liz Acosta

Remember how cool it was playing with an AI image generator for the first time? Those twenty million fingers and nightmare spaghetti-eating images were more than just amusing: they inadvertently revealed that (oops!) AI models are only as smart as we are. Like us, they also struggle to draw hands.

AI models have quickly become more sophisticated, but now there are so many of them. And – again – like us, some of them are better at certain tasks than others. Take text generation, for example. Even though Llama, Gemma, and Mistral are all LLMs, some of them are better at generating code while others are better at brainstorming or creative writing. They offer different advantages depending on the prompt, so it may make sense to include more than one model in your AI application.

But how do you integrate all these models into your app without duplicating code? How do you make your use of AI more modular and therefore easier to maintain and scale? That’s where an API can offer a standardized set of instructions for communicating across different technologies.

In this blog post, we’ll take a look at how to use Replicate with Streamlit to create an app that allows you to configure and prompt different LLMs with a single API call. And don’t worry – when I say “app,” I don’t mean having to spin up a whole Flask server or tediously configure your routes or worry about CSS. Streamlit’s got that covered for you.

Read on to learn:

- What Replicate is

- What Streamlit is

- How to build a demo Replicate chatbot Streamlit app

- And best practices for using Replicate

Don’t feel like reading? Here are some other ways to explore this demo:

- Find the code in the Streamlit Cookbook repo here

- Try out a deployed version of the app here

- Watch a video walkthrough from Replicate founding designer, Zeke Sikelianos, here

What is Replicate?

Replicate is a platform that enables developers to deploy, fine-tune, and access open-source AI models via a CLI, API, or SDK. The platform makes it easy to programmatically integrate AI capabilities into software applications.

Available models on Replicate

- Text: Models like Llama 3 can generate coherent and contextually relevant text based on input prompts.

- Image: Models like Stable Diffusion can generate high-quality images from text prompts.

- Speech: Models like whisper can convert speech to text while models like xtts-v2 can generate natural-sounding speech.

- Video: Models like animate-diff or variants of Stable Diffusion like videocrafter can generate and/or edit videos from text and image prompts, respectively.

Used together, these models let you develop multimodal apps on Replicate that accept input and generate output in various formats, whether text, image, speech, or video.

What is Streamlit?

Streamlit is an open-source Python framework to build highly interactive apps – in only a few lines of code. Streamlit integrates with all the latest tools in generative AI, such as any LLM, vector database, or various AI frameworks like LangChain, LlamaIndex, or Weights & Biases. Streamlit’s chat elements make it especially easy to interact with AI so you can build chatbots that “talk to your data.”

Combined with a platform like Replicate, Streamlit allows you to create generative AI applications without any of the app design overhead.

To learn more about how Streamlit biases you toward forward progress, check out this blog post.

To learn more about Streamlit, check out the 101 guide.

Try the app recipe: Replicate + Streamlit

But don’t take my word for it. Try out the app yourself or watch a video walkthrough and see what you think.

In this demo, you’ll spin up a Streamlit chatbot app with Replicate. The app uses a single API to access three different LLMs and adjust parameters such as temperature and top-p. These parameters influence the randomness and diversity of the AI-generated text, as well as the method by which tokens are selected.

What is model temperature? Temperature controls how the model selects tokens. A lower temperature makes the model more conservative, favoring common and “safe” words. Conversely, a higher temperature encourages the model to take more risks by selecting less probable tokens, resulting in more creative outputs.

What is top-p? Top-p, also known as “nucleus sampling,” is another method for adjusting randomness. It restricts sampling to the smallest set of most likely tokens whose combined probability reaches the top-p value. A higher top-p value therefore lets a broader, more diverse range of tokens be sampled, producing more varied outputs.
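To make these two knobs a little more concrete, here is a minimal sketch of how temperature and top-p are commonly applied to a model's next-token scores. It is not Replicate's or any particular model's implementation; the function names and the toy scores are made up for illustration.

```python
import math
from typing import Dict


def apply_temperature(logits: Dict[str, float], temperature: float) -> Dict[str, float]:
    """Turn raw token scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(value - peak) for tok, value in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def top_p_filter(probs: Dict[str, float], top_p: float) -> Dict[str, float]:
    """Keep the most probable tokens until their combined mass reaches top_p, then renormalize."""
    kept: Dict[str, float] = {}
    cumulative = 0.0
    for tok, p in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept[tok] = p
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(kept.values())
    return {tok: p / total for tok, p in kept.items()}


# Toy next-token scores (invented numbers, not taken from a real model).
toy_logits = {"the": 2.0, "a": 1.0, "banana": 0.1, "quasar": -1.0}

cold = apply_temperature(toy_logits, 0.5)  # low temperature: mass concentrates on "the"
hot = apply_temperature(toy_logits, 1.5)   # high temperature: mass spreads out
narrow = top_p_filter(apply_temperature(toy_logits, 1.0), 0.5)
wide = top_p_filter(apply_temperature(toy_logits, 1.0), 0.95)

print("P(the) at low vs. high temperature:", round(cold["the"], 3), round(hot["the"], 3))
print("Tokens kept at top_p=0.5 vs. 0.95:", sorted(narrow), sorted(wide))
```

With these toy numbers, the low temperature puts more probability on the top token than the high temperature does, and the larger top-p value leaves more candidate tokens in play.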

Prerequisites

- Python version >=3.8, !=3.9.7

- A Replicate API key (Please note that a payment method is required to access features beyond the free trial limits.)

To learn more about API keys, check out the blog post here.

Environment setup

Local setup

- Clone the Cookbook repo: git clone https://github.com/streamlit/cookbook.git

- From the Cookbook root directory, change directory into the Replicate recipe: cd recipes/replicate

- Add your Replicate API key to the .streamlit/secrets_template.toml file

- Update the filename from secrets_template.toml to secrets.toml: mv .streamlit/secrets_template.toml .streamlit/secrets.toml (To learn more about secrets handling in Streamlit, refer to the documentation here.)

- Create a virtual environment: python -m venv replicatevenv

- Activate the virtual environment: source replicatevenv/bin/activate

- Install the dependencies: pip install -r requirements.txt

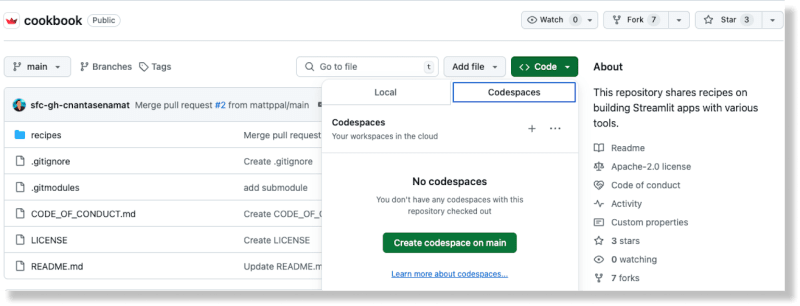

GitHub Codespaces setup

- From the Cookbook repo on GitHub, create a new codespace by selecting the Codespaces option from the Code button

- Once the codespace has been generated, add your Replicate API key to the recipes/replicate/.streamlit/secrets_template.toml file

- Update the filename from secrets_template.toml to secrets.toml (To learn more about secrets handling in Streamlit, refer to the documentation here.)

- From the Cookbook root directory, change directory into the Replicate recipe: cd recipes/replicate

- Install the dependencies: pip install -r requirements.txt

Run a text generation model with Replicate

- Create a file in the recipes/replicate directory called replicate_hello_world.py

- Add the following code to the file:

      import replicate
      import toml
      import os

      # Read the secrets from the secrets.toml file
      with open(".streamlit/secrets.toml", "r") as f:
          secrets = toml.load(f)

      # Create an environment variable for the Replicate API token
      os.environ['REPLICATE_API_TOKEN'] = secrets["REPLICATE_API_TOKEN"]

      # Run a model
      for event in replicate.stream("meta/meta-llama-3-8b",
                                    input={"prompt": "What is Streamlit?"},):
          print(str(event), end="")

- Run the script: python replicate_hello_world.py

You should see a printout of the text generated by the model.

To learn more about Replicate models and how they work, you can refer to their documentation here. At its core, a Replicate “model” refers to a trained, packaged, and published software program that accepts inputs and returns outputs.

In this particular case, the model is meta/meta-llama-3-8b and the input is "prompt": "What is Streamlit?". When you run the script, a call is made to the Replicate endpoint and the printed text is the output returned from the model via Replicate.
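The hello-world script relies on the REPLICATE_API_TOKEN environment variable being set before the call is made. If you want a clearer error when the token is missing, a small guard like the one below could run first. This is only a sketch: the helper name require_replicate_token is hypothetical, and the demo uses a stand-in dictionary with a placeholder value instead of your real environment.

```python
import os
from typing import Mapping


def require_replicate_token(env: Mapping[str, str] = os.environ) -> str:
    """Return the Replicate token from env, or raise a readable error if it is absent."""
    token = env.get("REPLICATE_API_TOKEN", "").strip()
    if not token:
        raise RuntimeError(
            "REPLICATE_API_TOKEN is not set; add it to .streamlit/secrets.toml "
            "or export it before running the script."
        )
    return token


# Demo with a stand-in environment (placeholder value, not a real token).
fake_env = {"REPLICATE_API_TOKEN": "placeholder-token"}
print("Token found:", bool(require_replicate_token(fake_env)))
```

In the script itself you would call require_replicate_token() with no arguments, right after the line that sets os.environ.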

Run the demo Replicate Streamlit chatbot app

To run the demo app, use the Streamlit CLI: streamlit run streamlit_app.py.

Running this command starts a local Streamlit server and serves the app on a localhost port (8501 by default). When you open that address in your browser, you should see the Streamlit app running.

You can use this app to prompt different LLMs via Replicate and produce generative text according to the configurations you provide.

A common API for multiple LLM models

Using Replicate means you can prompt multiple open-source LLMs with one API, which helps simplify AI integration into modern software flows.

This is accomplished in the following block of code:

    # Excerpt from inside a generator function: stream events from the selected model
    for event in replicate.stream(model,
                                  input={"prompt": prompt_str,
                                         "prompt_template": r"{prompt}",
                                         "temperature": temperature,
                                         "top_p": top_p,}):
        yield str(event)

The model, temperature, and top-p configurations are provided by the user via Streamlit’s input widgets. Streamlit’s chat elements make it easy to integrate chatbot features in your app. The best part is you don’t need to know JavaScript or CSS to implement and style these components – Streamlit provides all of that right out of the box.
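To see why one call shape is enough for several models, here is a rough sketch that wraps the loop above in a function and swaps only the model identifier. It is not the demo app's actual code. The names generate, build_input, and fake_stream are hypothetical, as are the default values 0.7 and 0.9 and the second model identifier. fake_stream is an offline stand-in so the example runs without a network call or API token.

```python
from typing import Any, Callable, Dict, Iterable, Iterator

# Any callable with the call shape stream_fn(model, input={...}) fits here,
# which is how replicate.stream is called in the excerpt above.
StreamFn = Callable[..., Iterable[Any]]


def build_input(prompt: str, temperature: float, top_p: float) -> Dict[str, Any]:
    """Assemble the same input dictionary for every model."""
    return {
        "prompt": prompt,
        "prompt_template": r"{prompt}",
        "temperature": temperature,
        "top_p": top_p,
    }


def generate(stream_fn: StreamFn, model: str, prompt: str,
             temperature: float = 0.7, top_p: float = 0.9) -> Iterator[str]:
    """Yield text chunks from whichever model identifier is passed in."""
    for event in stream_fn(model, input=build_input(prompt, temperature, top_p)):
        yield str(event)


def fake_stream(model: str, input: Dict[str, Any]) -> Iterator[str]:
    """Offline stand-in for a streaming call; echoes the model name and prompt."""
    yield f"[{model}] "
    yield input["prompt"].upper()


# Same function, different models: only the identifier changes.
for model_id in ("meta/meta-llama-3-8b", "example-org/hypothetical-model"):
    print("".join(generate(fake_stream, model_id, "hello")))
```

In a real app you would pass replicate.stream in place of fake_stream. Keeping the streaming function as a parameter also makes the wrapper easy to test offline.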

Replicate best practices

Use the best model for the prompt

Replicate provides an API endpoint to search for public models. You can also explore featured models and use cases on their website. This makes it easy to find the right model for your specific needs.

Different models have different performance characteristics. Use the appropriate model based on your needs for accuracy and speed.

Improve performance with webhooks, streaming, and image URLs

Replicate's output data is only available for an hour. Use webhooks to save the data to your own storage. You can also set up webhooks to handle asynchronous responses from models. This is crucial for building scalable applications.
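As a rough illustration of the "save it yourself" step, the sketch below handles the body of a webhook request. It assumes the body is a JSON prediction object with id, status, and output fields, and it uses an in-memory dictionary as a stand-in for real storage. The function name is hypothetical. The HTTP server and any request verification are left out.

```python
import json
from typing import Any, Dict, Optional


def save_prediction_output(body: bytes, store: Dict[str, Any]) -> Optional[str]:
    """Store the output of a finished prediction; return its id, or None if not finished."""
    payload = json.loads(body)
    if payload.get("status") != "succeeded":
        return None  # still running, failed, or canceled: nothing to persist yet
    prediction_id = payload["id"]
    store[prediction_id] = payload.get("output")
    return prediction_id


# Demo with a made-up payload (the id and output are invented for illustration).
storage: Dict[str, Any] = {}
example_body = json.dumps({
    "id": "abc123",
    "status": "succeeded",
    "output": ["Streamlit is ", "a Python framework."],
}).encode("utf-8")

stored_id = save_prediction_output(example_body, storage)
print(stored_id, storage[stored_id])
```

In practice the dictionary would be replaced by a database or object store, so the output outlives the one-hour window.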

Leverage streaming when possible. Some models support streaming, allowing you to get partial results as they are being generated. This is ideal for real-time applications.
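Here is a tiny sketch of what "partial results" can look like on the consuming side: each chunk is appended to the text so far, so a UI could redraw after every chunk. The list of chunks is a stand-in for a real model stream, and the helper name running_text is hypothetical.

```python
from typing import Iterable, Iterator


def running_text(chunks: Iterable[object]) -> Iterator[str]:
    """Yield the accumulated text after each incoming chunk."""
    text = ""
    for chunk in chunks:
        text += str(chunk)
        yield text


# Stand-in chunks; a real stream would come from the model.
for snapshot in running_text(["Stream", "lit ", "is ", "fun"]):
    print(snapshot)
```

In a Streamlit app you may not need this helper at all: Streamlit's st.write_stream accepts a generator of chunks directly, so a generator like the one in the common-API excerpt could likely be handed to it as is.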

Passing images as URLs performs better than uploading them as Base64-encoded data.
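One likely reason is payload size. The sketch below compares a short URL with the same image sent inline, assuming the inline form is a Base64 data URI. The bytes are a placeholder rather than a real image, the URL is hypothetical, and request size is only one of several factors that affect performance.

```python
import base64


def data_uri(raw: bytes, mime: str = "image/png") -> str:
    """Encode raw bytes as a Base64 data URI."""
    encoded = base64.b64encode(raw).decode("ascii")
    return f"data:{mime};base64,{encoded}"


raw_image = bytes(range(256)) * 12    # 3072 placeholder bytes standing in for an image file
inline = data_uri(raw_image)
url = "https://example.com/cat.png"   # hypothetical URL to the same image

print("raw bytes:", len(raw_image))
print("inline data URI characters:", len(inline))
print("URL characters:", len(url))
```

Base64 turns every 3 bytes into 4 characters, so the inline version is roughly a third larger than the file itself, while the URL stays a few dozen characters no matter how large the image is.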

Unlock the potential of AI with Streamlit

With Streamlit, months and months of app design work are streamlined to just a few lines of Python. It’s the perfect framework for showing off your latest AI inventions.

Get up and running fast with other AI recipes in the Streamlit Cookbook. (And don’t forget to show us what you’re building in the forum!)

Happy Streamlit-ing!
