TCP and UDP operate at the transport layer of the Internet Protocol Suite and are responsible for facilitating data transfer between devices over a network.

TCP (Transmission Control Protocol) is a connection-oriented protocol that establishes a reliable channel between sender and receiver.

It ensures that all data packets are delivered accurately and in the correct order, making it ideal for applications where data integrity is crucial.

UDP (User Datagram Protocol) is a connectionless protocol that sends data without establishing a dedicated end-to-end connection.

It does not guarantee the delivery or order of data packets, which reduces overhead and allows for faster transmission speeds.

This makes UDP suitable for applications where speed is more critical than reliability.

TCP is a connection-oriented protocol, which means it requires a formal connection to be established between the sender and receiver before any data transfer begins.

This setup process is known as the "three-way handshake."

In this handshake, the client sends a SYN, the server replies with a SYN-ACK, and the client answers with an ACK. Through this exchange the two sides agree on initial sequence numbers and advertise their receive window sizes.

Establishing this connection ensures that both parties are ready for communication, providing a reliable channel for data exchange.
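To make the sequence-number bookkeeping concrete, here is a minimal Python sketch that models the three handshake segments as plain data. It is a simulation, not a real TCP stack. The `Segment` class and the `three_way_handshake` function are illustrative names, and the only rule encoded is that a SYN consumes one sequence number, so each side acknowledges the other's ISN plus one (modulo 2^32).

```python
import secrets
from dataclasses import dataclass
from typing import List, Optional

MOD = 2 ** 32  # TCP sequence numbers are 32-bit and wrap around


@dataclass(frozen=True)
class Segment:
    flags: str          # "SYN", "SYN-ACK" or "ACK"
    seq: int            # sender's sequence number
    ack: Optional[int]  # next sequence number the sender expects (None if ACK flag unset)


def three_way_handshake(client_isn: int, server_isn: int) -> List[Segment]:
    """Return the three segments exchanged when a client opens a connection."""
    syn = Segment("SYN", seq=client_isn, ack=None)
    # The SYN consumes one sequence number, so the server acknowledges client_isn + 1.
    syn_ack = Segment("SYN-ACK", seq=server_isn, ack=(client_isn + 1) % MOD)
    # The client's final ACK likewise acknowledges server_isn + 1.
    ack = Segment("ACK", seq=(client_isn + 1) % MOD, ack=(server_isn + 1) % MOD)
    return [syn, syn_ack, ack]


if __name__ == "__main__":
    # Real stacks choose ISNs unpredictably; random 32-bit values stand in for that here.
    for seg in three_way_handshake(secrets.randbelow(MOD), secrets.randbelow(MOD)):
        print(seg)
```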

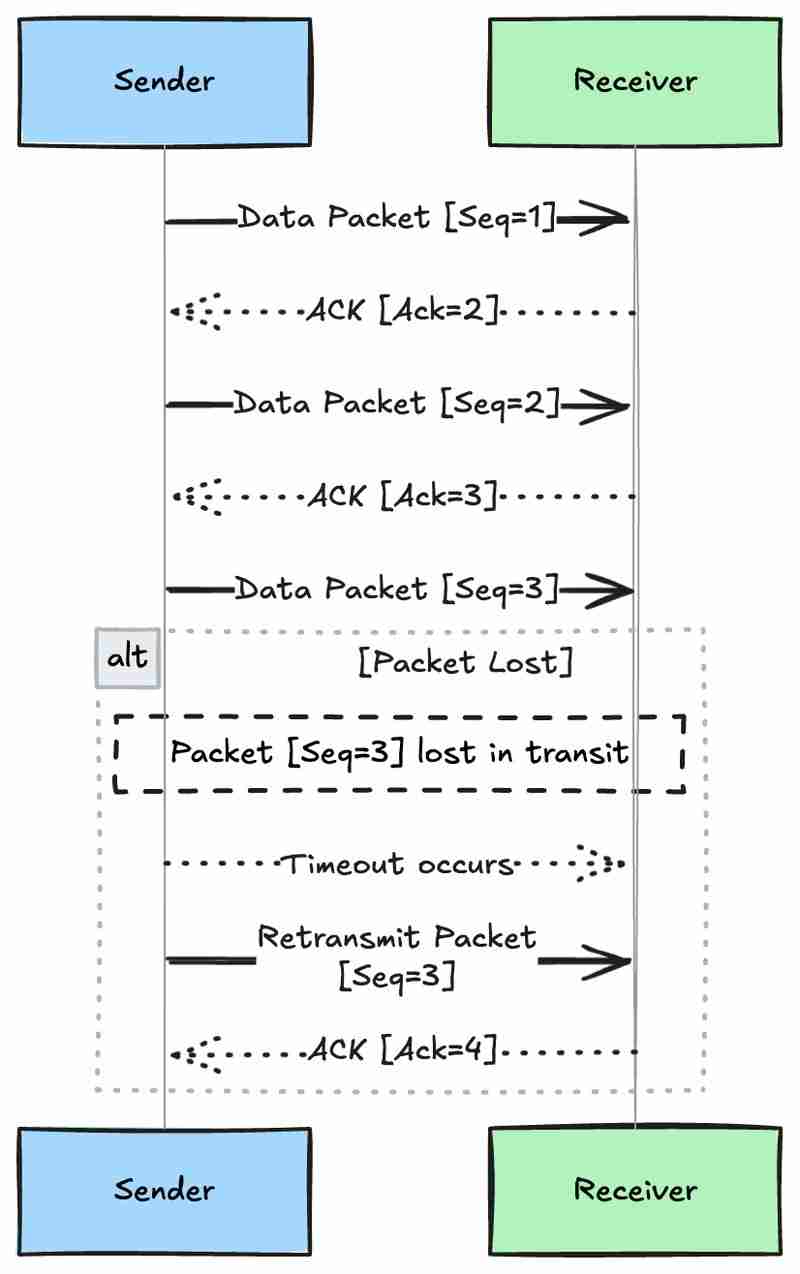

One of TCP's primary strengths is its ability to guarantee the reliable delivery of data packets in the exact order they were sent.

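It achieves this through sequencing and acknowledgment mechanisms:

Sequence Numbers: Every byte of data TCP sends is assigned a sequence number. This allows the receiver to reassemble packets in the correct order, even if they arrive out of sequence due to network routing.

Acknowledgments: The receiver sends acknowledgments (ACKs) for the data it has received. If the sender doesn't receive an acknowledgment within a certain timeframe, it assumes the packet was lost and retransmits it.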
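The receiver's side of this can be sketched in a few lines of Python. The simplifying assumptions are stated here and in the code: segments do not overlap, sequence-number wraparound is ignored, and `reassemble` is a hypothetical helper rather than any real stack's API. It delivers bytes strictly in order and returns the cumulative ACK number, that is, the next byte still missing. A gap holds back later data until the sender retransmits it.

```python
from typing import Dict, Tuple


def reassemble(segments: Dict[int, bytes], initial_seq: int) -> Tuple[bytes, int]:
    """Deliver in-order bytes and return (data, cumulative ACK number).

    `segments` maps each segment's starting sequence number to its payload,
    in whatever order the segments arrived. Simplified: no overlapping
    segments and no 32-bit sequence-number wraparound.
    """
    delivered = bytearray()
    next_seq = initial_seq
    # Keep consuming the segment that starts exactly where the data so far ends.
    while next_seq in segments:
        payload = segments[next_seq]
        if not payload:  # an empty payload would never advance next_seq
            break
        delivered += payload
        next_seq += len(payload)
    # next_seq is the first byte not yet received: this is what the receiver ACKs.
    return bytes(delivered), next_seq


if __name__ == "__main__":
    # Segments arrive out of order; the receiver still delivers "hello world".
    print(reassemble({1006: b"world", 1000: b"hello "}, 1000))
```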

TCP incorporates flow control and congestion control algorithms to manage data transmission efficiently:

Flow Control: TCP uses a sliding window protocol in which the receiver advertises the amount of data it can accept at one time (the window size).

The sender must respect this limit, ensuring smooth data flow and preventing buffer overflow at the receiver's end.

Congestion Control: TCP uses algorithms like Slow Start, Congestion Avoidance, Fast Retransmit, and Fast Recovery to adjust the rate of data transmission.

When packet loss or delays are detected (signs of potential congestion), the sender reduces its transmission rate to alleviate network strain.

Conversely, if the network is clear, TCP gradually increases the transmission rate to optimize throughput.
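The interplay between the two mechanisms can be illustrated with a toy Python model. This is a deliberate simplification of Reno-style behavior, counted in whole segments per round trip. Slow start doubles the congestion window (cwnd), congestion avoidance adds one segment, and a loss detected through duplicate ACKs halves it. Real stacks grow cwnd per ACK, temporarily inflate it during fast recovery, and reset it to one segment on a timeout. The usable window is the smaller of cwnd and the receiver's advertised window (rwnd), which is where flow control comes in. `simulate_window` is a hypothetical helper.

```python
from typing import List, Set


def simulate_window(rounds: int, loss_rounds: Set[int], rwnd: int,
                    initial_cwnd: int = 1, ssthresh: int = 64) -> List[int]:
    """Segments the sender may have in flight in each round trip (toy model)."""
    cwnd = initial_cwnd
    history = []
    for rtt in range(rounds):
        history.append(min(cwnd, rwnd))   # flow control: never exceed the receiver's window
        if rtt in loss_rounds:
            ssthresh = max(cwnd // 2, 2)  # multiplicative decrease on loss
            cwnd = ssthresh               # resume from the new threshold (simplified recovery)
        elif cwnd < ssthresh:
            cwnd *= 2                     # slow start: roughly doubles every RTT
        else:
            cwnd += 1                     # congestion avoidance: additive increase
    return history


if __name__ == "__main__":
    print(simulate_window(8, {4}, rwnd=100))  # congestion-limited
    print(simulate_window(8, {4}, rwnd=6))    # receiver-limited
```

With a large rwnd, the window grows exponentially, halves at the loss, and then climbs linearly. With a small rwnd, the receiver's limit caps the window no matter what cwnd does.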

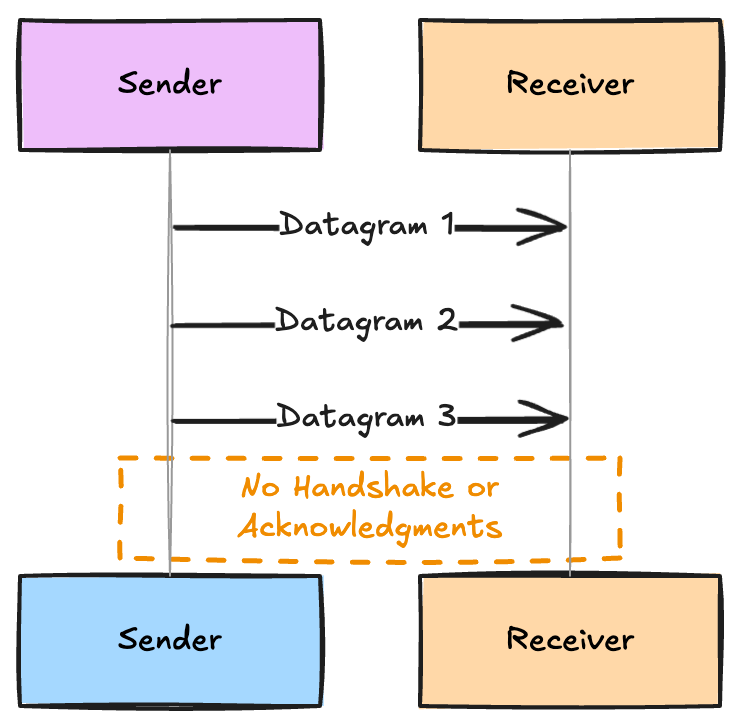

UDP is a connectionless protocol, meaning it does not require a dedicated end-to-end connection before data is transmitted.

Unlike TCP, which establishes a connection through a handshake process, UDP sends data packets, known as datagrams, directly to the recipient without any prior communication setup.

This lack of connection establishment reduces the initial delay and allows data transmission to begin immediately.

The sender does not wait for any acknowledgment from the receiver, making the communication process straightforward and efficient.

Immediate Transmission: Since there is no connection setup, data can be sent as soon as it is ready, which is crucial for time-sensitive applications.

No Handshake Process: Eliminates the overhead associated with establishing and terminating connections, reducing latency.

Stateless Communication: Each datagram is independent and carries all the addressing information it needs, so neither endpoint has to keep per-connection state. This simplifies the protocol and reduces resource usage on the communicating hosts.
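The absence of a handshake is easy to see with Python's standard `socket` module. In this minimal sketch, the sender simply addresses a datagram with `sendto` and the first packet on the wire already carries the payload; there is no `connect`/`accept` step. `udp_roundtrip` is an illustrative helper. It runs over the loopback interface (127.0.0.1), where the datagram is expected to arrive. On a real network that would not be guaranteed, which is why the receiver uses a timeout.

```python
import socket


def udp_roundtrip(message: bytes) -> bytes:
    """Send one datagram over loopback without any connection setup; return what arrived."""
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        receiver.bind(("127.0.0.1", 0))  # port 0: let the OS choose a free port
        receiver.settimeout(2.0)         # UDP promises no delivery, so never block forever
        addr = receiver.getsockname()
        # No handshake: the payload goes out immediately in a single datagram.
        sender.sendto(message, addr)
        data, _peer = receiver.recvfrom(65535)
        return data
    finally:
        sender.close()
        receiver.close()


if __name__ == "__main__":
    print(udp_roundtrip(b"hello over UDP"))
```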

UDP provides an "unreliable" service, which in networking terms means:

No Guarantee of Packet Delivery: Datagrams may be lost in transit without the sender being notified.

No Assurance of Order: Packets may arrive out of sequence, as UDP does not reorder them.

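No Error Correction: UDP's checksum lets the receiver detect and discard corrupted datagrams (the checksum is optional over IPv4 but mandatory over IPv6), but unlike TCP, UDP does not retransmit lost or corrupted packets.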

Reduced Overhead: By not tracking packet delivery or handling acknowledgments, UDP reduces the amount of additional data sent over the network.

Faster Transmission: Less processing is required by both sender and receiver, allowing for higher throughput and lower latency.

Application-Level Control: Some applications prefer to handle reliability and error correction themselves rather than relying on the transport protocol.
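As an example of application-level control, an application can put its own sequence number in each datagram so that it can notice the loss and reordering that UDP itself never reports. The 4-byte header format and the `encode`/`check_stream` names below are hypothetical choices for this sketch. The sketch also assumes numbering starts at 0. What the application does once it notices a gap (ignore it, interpolate, or request a resend) is up to the application.

```python
import struct
from typing import Iterable, List, Tuple

# Hypothetical application header: a 32-bit sequence number in network byte order.
HEADER = struct.Struct("!I")


def encode(seq: int, payload: bytes) -> bytes:
    return HEADER.pack(seq) + payload


def check_stream(datagrams: Iterable[bytes]) -> Tuple[List[int], List[int]]:
    """Return (missing, late) sequence numbers, assuming numbering starts at 0."""
    highest = -1
    received = set()
    late = []
    for dgram in datagrams:
        (seq,) = HEADER.unpack_from(dgram)
        if seq < highest:
            late.append(seq)  # arrived after a higher-numbered datagram: reordered
        highest = max(highest, seq)
        received.add(seq)
    # Any number below the highest seen that never arrived is treated as lost.
    missing = [s for s in range(highest + 1) if s not in received]
    return missing, late


if __name__ == "__main__":
    # Datagram 3 was lost and datagram 1 arrived after datagram 2.
    arrivals = [encode(s, b"data") for s in (0, 2, 1, 4)]
    print(check_stream(arrivals))
```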

The minimalistic design of UDP contributes to its low overhead:

Small Header Size: UDP's header is only 8 bytes long, compared to TCP's header of at least 20 bytes (up to 60 bytes with options). This smaller size means less data is sent with each packet, conserving bandwidth.

Simplified Processing: Fewer features mean less computational work for networking equipment and endpoints, which can improve performance, especially under high load.

Efficiency in High-Performance Applications: The reduced overhead makes UDP suitable for applications that need to send large volumes of data quickly and can tolerate some data loss.
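The 8-byte header contains just four 16-bit fields: source port, destination port, length, and checksum. The following Python sketch builds one for IPv4, computing the checksum over the pseudo-header (source and destination addresses, protocol 17, and UDP length) as RFC 768 describes. `build_udp_datagram` and `ones_complement_sum16` are illustrative names. The code only produces bytes and does not send anything.

```python
import socket
import struct


def ones_complement_sum16(data: bytes) -> int:
    """16-bit one's complement sum, as used by the Internet checksum."""
    if len(data) % 2:
        data += b"\x00"  # pad odd-length data with a zero byte
    total = 0
    for (word,) in struct.iter_unpack("!H", data):
        total += word
        total = (total & 0xFFFF) + (total >> 16)  # fold the carry back in
    return total


def build_udp_datagram(src_ip: str, dst_ip: str, src_port: int, dst_port: int,
                       payload: bytes) -> bytes:
    length = 8 + len(payload)  # the UDP header is always exactly 8 bytes
    header = struct.pack("!HHHH", src_port, dst_port, length, 0)  # checksum 0 while summing
    pseudo = (socket.inet_aton(src_ip) + socket.inet_aton(dst_ip)
              + struct.pack("!BBH", 0, 17, length))  # zero byte, protocol 17 (UDP), length
    checksum = ~ones_complement_sum16(pseudo + header + payload) & 0xFFFF
    if checksum == 0:
        checksum = 0xFFFF  # 0 means "no checksum", so an all-ones value is sent instead
    return struct.pack("!HHHH", src_port, dst_port, length, checksum) + payload


if __name__ == "__main__":
    print(build_udp_datagram("10.0.0.1", "10.0.0.2", 5000, 53, b"abc").hex())
```

A receiver verifies the datagram by summing the same pseudo-header plus the whole datagram, checksum included; an intact datagram sums to 0xFFFF.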

Requires Reliable Data Transfer for Page Rendering

Web browsing relies heavily on the accurate and complete transfer of data to render web pages correctly.

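HTTP and HTTPS have traditionally run over TCP to ensure that all the elements of a webpage (such as text, images, and scripts) are delivered reliably and in the correct order. HTTP/3 is the exception: it runs over QUIC, which implements its own reliability on top of UDP.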

TCP's error-checking and acknowledgment features guarantee that missing or corrupted packets are retransmitted, preventing broken images or incomplete content, which is essential for user experience and functionality.
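From an application's point of view, TCP presents a reliable, ordered byte stream, and the handshake and retransmissions happen inside the operating system. This minimal Python sketch sends an HTTP-like request in several chunks over a loopback TCP connection and reads it back in small 4-byte reads. `tcp_transfer` is an illustrative helper. The point is that the bytes arrive complete and in order, while the chunk boundaries the sender used are not preserved.

```python
import socket
import threading
from typing import Iterable


def tcp_transfer(chunks: Iterable[bytes], bufsize: int = 4) -> bytes:
    """Send chunks over a loopback TCP connection; return what the server read."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # port 0: the OS picks a free port
    server.listen(1)
    server.settimeout(5)           # avoid hanging forever if something goes wrong
    received = bytearray()

    def serve() -> None:
        conn, _ = server.accept()  # returns once the kernel has completed the handshake
        with conn:
            while True:
                data = conn.recv(bufsize)  # byte stream: recv returns whatever has arrived
                if not data:               # b"" means the client closed its side
                    break
                received.extend(data)

    worker = threading.Thread(target=serve, daemon=True)
    worker.start()
    with socket.create_connection(server.getsockname(), timeout=5) as client:
        for chunk in chunks:
            client.sendall(chunk)  # keeps sending until every byte is queued
    worker.join(timeout=5)
    server.close()
    return bytes(received)


if __name__ == "__main__":
    print(tcp_transfer([b"GET / ", b"HTTP/1.1\r\n", b"\r\n"]))
```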

Ensures Complete and Ordered Delivery of Messages

Email protocols like SMTP (Simple Mail Transfer Protocol) and IMAP (Internet Message Access Protocol) use TCP to provide reliable transmission of messages.

Emails often contain important information and attachments that must arrive intact.

TCP ensures that all parts of an email are received in the proper sequence without errors, maintaining the integrity of the communication and preventing data loss, which is crucial for personal and professional correspondence.

Prioritizes Speed Over Reliability to Reduce Latency

Applications like Voice over IP (VoIP) and video conferencing require minimal delay to facilitate smooth, real-time communication.

UDP is used because it allows data to be transmitted quickly without the overhead of establishing a connection or ensuring packet delivery.

UDP does not guarantee that all packets arrive or that they arrive in order, but the occasional lost packet usually causes only a brief glitch and does not significantly affect the overall conversation.

The priority is on reducing latency to maintain a natural flow of communication.

Tolerates Minor Data Loss for Continuous Playback

Live streaming, particularly low-latency live video or audio, often uses UDP to send data continuously to users.

The protocol's low overhead allows for a steady stream without the delays associated with acknowledgment and retransmission.

Minor packet losses may cause brief drops in quality that often go unnoticed by the user.

The key objective is to prevent buffering and interruptions, providing an uninterrupted viewing or listening experience.

UDP enables the service to prioritize continuous playback over perfect data accuracy.

Requires Fast Data Transmission for Real-Time Interaction

Online gaming demands rapid and continuous data exchange to reflect player actions instantaneously.

UDP is preferred because it provides low-latency communication, essential for responsive gameplay.

Players can experience real-time interactions without noticeable delays.

While some data packets may be lost, the game typically compensates by frequently updating the game state, ensuring a seamless experience.

The emphasis is on speed rather than absolute reliability to keep the gameplay fluid.
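The "frequent state updates" approach can be sketched as a receiver that applies only the newest snapshot. A lost snapshot is simply superseded by the next one, and a late one is discarded instead of being replayed. The 16-bit sequence numbers and the `SnapshotReceiver` class are hypothetical choices for illustration. Newness is compared with serial-number arithmetic in the style of RFC 1982, so the comparison keeps working after the counter wraps from 65535 back to 0.

```python
from typing import Optional

SEQ_BITS = 16
HALF = 1 << (SEQ_BITS - 1)
MASK = (1 << SEQ_BITS) - 1


def is_newer(a: int, b: int) -> bool:
    """Serial-number comparison: is `a` newer than `b`, allowing wraparound?"""
    return a != b and ((a - b) & MASK) < HALF


class SnapshotReceiver:
    """Keeps only the most recent game-state snapshot (hypothetical design)."""

    def __init__(self) -> None:
        self.last_seq: Optional[int] = None
        self.state: Optional[dict] = None

    def on_datagram(self, seq: int, state: dict) -> bool:
        """Apply the snapshot if it is newer than the current one; return whether it was applied."""
        if self.last_seq is not None and not is_newer(seq, self.last_seq):
            return False  # stale or duplicate: drop it, no retransmission needed
        self.last_seq, self.state = seq, state
        return True       # any snapshots lost before this one no longer matter
```

Usage: after snapshots 10 and 12 arrive, a late snapshot 11 is ignored, and the displayed state stays at 12.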

When choosing between TCP and UDP, it's essential to consider how each protocol's characteristics affect network performance.

Key factors include latency, throughput, reliability, and how these impact the application's functionality and user experience.

Acknowledgment Packets and Handshakes Can Introduce Delays

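TCP is designed for reliability and ordered data delivery, which introduces additional overhead:

Three-Way Handshake: Before any data is sent, TCP must establish a connection. This process involves exchanging SYN (synchronize) and ACK (acknowledge) packets, adding a full round trip of latency before the first request can be sent.

Acknowledgments and Retransmissions: If acknowledgments aren't received, TCP retransmits the data. While this guarantees delivery, it can cause delays, especially in high-latency networks or over long distances.

Flow and Congestion Control: TCP throttles its sending rate according to the receiver's advertised window and to signs of network congestion. While beneficial for network stability, these mechanisms can reduce throughput during periods of congestion, affecting application performance.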
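How long TCP waits before retransmitting is governed by its retransmission timeout (RTO), which RFC 6298 derives from measured round-trip times. The Python sketch below follows that RFC's formulas. The smoothed RTT and the RTT variation are updated with gains of 1/8 and 1/4, RTO = SRTT + max(G, 4 × RTTVAR), values are rounded up to 1 second, and the RTO doubles on each expiry. The optional 60-second cap is an assumption within what the RFC allows. The class name is illustrative. The 1-second floor and the doubling show why a single lost packet can stall a TCP connection for a noticeable time.

```python
from typing import Optional


class RtoEstimator:
    """Retransmission timeout computation following RFC 6298 (times in seconds)."""

    ALPHA = 1 / 8    # gain for the smoothed RTT (SRTT)
    BETA = 1 / 4     # gain for the RTT variation (RTTVAR)
    K = 4
    MIN_RTO = 1.0    # RFC 6298 recommends rounding the RTO up to 1 second
    MAX_RTO = 60.0   # an upper bound is optional but must be at least 60 seconds

    def __init__(self, clock_granularity: float = 0.001) -> None:
        self.g = clock_granularity
        self.srtt: Optional[float] = None
        self.rttvar: Optional[float] = None
        self.rto = 1.0  # initial RTO before any RTT has been measured

    def on_rtt_sample(self, r: float) -> float:
        if self.srtt is None or self.rttvar is None:
            self.srtt, self.rttvar = r, r / 2  # first measurement
        else:
            # RTTVAR is updated using the old SRTT; then SRTT itself is updated.
            self.rttvar = (1 - self.BETA) * self.rttvar + self.BETA * abs(self.srtt - r)
            self.srtt = (1 - self.ALPHA) * self.srtt + self.ALPHA * r
        rto = self.srtt + max(self.g, self.K * self.rttvar)
        self.rto = min(max(rto, self.MIN_RTO), self.MAX_RTO)
        return self.rto

    def on_timeout(self) -> float:
        self.rto = min(self.rto * 2, self.MAX_RTO)  # exponential backoff on each expiry
        return self.rto
```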

Reduced Overhead Leads to Lower Latency

UDP's design prioritizes speed and efficiency:

No Connection Establishment: UDP is connectionless, so it doesn't require a handshake before sending data.

This absence of initial setup reduces latency, allowing for immediate data transmission.

No Acknowledgments: UDP doesn't wait for acknowledgments or retransmit lost packets, eliminating the delays associated with these processes in TCP.

Minimal Protocol Overhead: With a smaller header size and fewer protocol mechanisms, UDP reduces the amount of additional data sent over the network, increasing throughput and decreasing latency.
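The latency and overhead differences can be put into rough numbers. This Python sketch assumes IPv4 and TCP headers without options (20 bytes each) and the fixed 8-byte UDP header. It counts round trips for a single request and response, with TCP paying one extra round trip for the handshake, and it ignores loss, TLS, and any TCP options. The helper names are illustrative.

```python
IPV4_HEADER = 20  # bytes, assuming no IP options
TCP_HEADER = 20   # bytes, minimum TCP header (no options)
UDP_HEADER = 8    # bytes, the UDP header is fixed-size


def header_overhead(payload: int, transport_header: int) -> float:
    """Fraction of each IPv4 packet taken up by IP plus transport headers."""
    headers = IPV4_HEADER + transport_header
    return headers / (headers + payload)


def time_to_first_response(rtt_ms: float, handshake: bool) -> float:
    """Time until one request/response completes: the handshake adds one RTT."""
    return rtt_ms * (2 if handshake else 1)


if __name__ == "__main__":
    for name, hdr in (("TCP", TCP_HEADER), ("UDP", UDP_HEADER)):
        print(name, f"{header_overhead(100, hdr):.1%} overhead for a 100-byte payload")
    print("TCP:", time_to_first_response(50, True), "ms at 50 ms RTT")
    print("UDP:", time_to_first_response(50, False), "ms at 50 ms RTT")
```

Small payloads magnify the header difference. For large payloads, both overheads shrink toward zero, and the extra handshake round trip is usually the more significant cost.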

Determining Whether Speed or Reliability Is Important

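Choosing between TCP and UDP depends on the specific needs of the application:

When Reliability Is Critical: Applications such as web browsing and email need every byte to arrive intact and in order. In these cases, TCP's reliability features are essential to ensure data integrity and order.

When Speed Is Critical: Real-time applications such as VoIP, video conferencing, live streaming, and online gaming prioritize low latency. Here, UDP's low overhead and faster transmission make it the preferred choice, even if some data packets are lost along the way.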

Employing Both Protocols Where Suitable

In some scenarios, a combination of both TCP and UDP can optimize performance:

For example, a video conferencing app could use UDP for the real-time audio and video streams to minimize latency, while using TCP for sending text messages or file transfers within the app to ensure reliable delivery.

This approach allows for low-latency communication with added reliability where needed, tailored specifically to the application's requirements.


V. Security Implications

When choosing between TCP and UDP, it's important to consider not only performance and reliability but also security implications. Each protocol has inherent vulnerabilities that can be exploited by malicious actors. Understanding these vulnerabilities and implementing appropriate mitigation techniques is crucial for maintaining network security.

Susceptibility to SYN Flooding Attacks

TCP's connection-oriented nature requires a three-way handshake (SYN, SYN-ACK, ACK) to establish a connection between a client and a server.

In a SYN flooding attack, an attacker exploits this mechanism by sending a large number of SYN requests to the server but never completing the handshake.

Specifically:

The attacker sends numerous SYN packets with spoofed source IP addresses.

The server responds with SYN-ACK packets and allocates resources for each half-open connection.

Since the final ACK from the client never arrives, these connections remain half-open, consuming the server's memory and processing power.

The result is that legitimate clients cannot establish connections because the server's resources are overwhelmed, leading to a denial-of-service (DoS) condition.

Implementation of SYN Cookies

SYN cookies are a server-side technique to mitigate SYN flooding attacks without requiring additional resources for half-open connections. Here's how they work:

When a SYN packet is received, instead of allocating resources, the server encodes the connection state (typically a coarse timestamp, the client's maximum segment size, and a cryptographic hash of the addresses, ports, and a server secret) into the initial sequence number (ISN) of its SYN-ACK packet.

If the client responds with an ACK packet (completing the handshake), that ACK acknowledges the server's ISN plus one. The server can therefore recover the cookie, verify it, and reconstruct the connection state before establishing the connection.

This approach allows the server to handle a large number of SYN requests without overloading its resources, as it doesn't need to keep track of half-open connections.
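The cookie idea can be sketched in Python, loosely following the classic layout of a 5-bit time counter, a 3-bit MSS index, and a 24-bit keyed hash packed into the 32-bit ISN. This is an illustration only. Real kernels use their own layouts and hash functions. The MSS table, the HMAC-SHA256 choice, binding the client's ISN into the hash, and the `make_cookie`/`check_cookie` names are all assumptions of this sketch. The server keeps only the secret and stores nothing per connection.

```python
import hashlib
import hmac
import os
import time
from typing import Optional

SECRET = os.urandom(16)              # per-server secret key (rotated periodically in practice)
MSS_TABLE = [536, 1220, 1440, 1460]  # hypothetical MSS values the cookie can encode


def _slot(now: Optional[float]) -> int:
    """5-bit counter that advances every 64 seconds."""
    return int((time.time() if now is None else now) // 64) % 32


def _mac(src: str, sport: int, dst: str, dport: int, client_isn: int, t: int) -> bytes:
    msg = f"{src}|{sport}|{dst}|{dport}|{client_isn}|{t}".encode()
    return hmac.new(SECRET, msg, hashlib.sha256).digest()[:3]  # keep 24 bits


def make_cookie(src: str, sport: int, dst: str, dport: int,
                client_isn: int, client_mss: int, now: Optional[float] = None) -> int:
    """Build the server's ISN for the SYN-ACK without storing per-connection state."""
    t = _slot(now)
    idx = 0
    for i, mss in enumerate(MSS_TABLE):  # largest table entry not above the client's MSS
        if mss <= client_mss:
            idx = i
    mac = int.from_bytes(_mac(src, sport, dst, dport, client_isn, t), "big")
    return (t << 27) | (idx << 24) | mac  # 5 bits time | 3 bits MSS index | 24 bits MAC


def check_cookie(src: str, sport: int, dst: str, dport: int,
                 ack_num: int, client_seq: int, now: Optional[float] = None) -> Optional[int]:
    """Validate the handshake's final ACK; return the recovered MSS, or None to drop it."""
    cookie = (ack_num - 1) % 2**32         # the ACK acknowledges cookie + 1
    client_isn = (client_seq - 1) % 2**32  # the client's SYN consumed one sequence number
    t, idx = cookie >> 27, (cookie >> 24) & 0x7
    current = _slot(now)
    if t not in (current, (current - 1) % 32) or idx >= len(MSS_TABLE):
        return None                        # expired cookie or invalid MSS index
    expected = _mac(src, sport, dst, dport, client_isn, t)
    if not hmac.compare_digest((cookie & 0xFFFFFF).to_bytes(3, "big"), expected):
        return None                        # forged cookie or mismatched connection
    return MSS_TABLE[idx]
```

One known trade-off, visible in the layout, is that only a few bits are available. That is why only a coarse MSS value survives the round trip.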

Use of Firewalls and Intrusion Prevention Systems

Firewalls and intrusion prevention systems (IPS) can be configured to detect and mitigate SYN flooding attacks:

Rate Limiting: Limiting the number of SYN packets from a single IP address or subnet can reduce the impact of an attack.

Thresholds and Alerts: Setting thresholds for normal SYN traffic and generating alerts when exceeded helps in early detection.

Filtering Spoofed IP Addresses: Implementing ingress and egress filtering to block packets with forged source IP addresses.
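Per-source rate limiting is commonly built on a token bucket. Here is a minimal Python sketch in which each source address may send `rate` SYNs per second, with bursts up to `burst`. The class name and parameters are hypothetical, and the clock is injectable so the behavior can be tested deterministically. Because SYN floods often use spoofed, constantly changing source addresses, per-source buckets work best alongside the aggregate thresholds and anti-spoofing filters listed above.

```python
import time
from collections import defaultdict
from typing import Callable, Dict


class SynRateLimiter:
    """Per-source token bucket for incoming SYNs (illustrative sketch)."""

    def __init__(self, rate: float, burst: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate, self.burst, self.clock = rate, burst, clock
        self.tokens: Dict[str, float] = defaultdict(lambda: burst)  # new sources start full
        self.last: Dict[str, float] = {}
        # A production filter would also evict idle entries to bound memory use.

    def allow(self, src_ip: str) -> bool:
        now = self.clock()
        elapsed = now - self.last.get(src_ip, now)
        self.last[src_ip] = now
        # Refill in proportion to elapsed time, never beyond the burst size.
        self.tokens[src_ip] = min(self.burst, self.tokens[src_ip] + elapsed * self.rate)
        if self.tokens[src_ip] >= 1:
            self.tokens[src_ip] -= 1
            return True
        return False  # over the limit: drop (or log) this SYN
```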

Timeout Adjustments

Adjusting the timeout period for half-open connections can free up resources more quickly:

Reducing SYN-RECEIVED Timeout: Decreasing the time the server waits for the final ACK before dropping the half-open connection.
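Why the timeout matters follows from Little's law: the average number of half-open connections equals the SYN arrival rate multiplied by how long each entry lingers. The Python sketch below applies that relationship. The attack rate and backlog size in the usage lines are hypothetical numbers chosen only to illustrate the arithmetic. Shortening the timeout buys headroom, but an attacker can cancel it out by sending faster, which is why SYN cookies remain the more robust defense.

```python
def half_open_occupancy(syn_rate_per_s: float, timeout_s: float) -> float:
    """Expected half-open entries by Little's law: arrival rate x time in the queue."""
    return syn_rate_per_s * timeout_s


def backlog_exhausted(syn_rate_per_s: float, timeout_s: float, backlog: int) -> bool:
    """True if spoofed SYNs alone are expected to fill the half-open backlog."""
    return half_open_occupancy(syn_rate_per_s, timeout_s) >= backlog


if __name__ == "__main__":
    # Hypothetical inputs: 2,000 spoofed SYNs per second against a 4,096-entry backlog.
    for timeout in (30, 2):
        print(timeout, "s timeout:", half_open_occupancy(2000, timeout), "half-open entries,",
              "exhausted" if backlog_exhausted(2000, timeout, 4096) else "not exhausted")
```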

Prone to Amplification Attacks Like DNS Amplification

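UDP's connectionless nature and lack of source-address validation make it susceptible to amplification attacks, in which an attacker multiplies the volume of traffic directed at a target, causing a distributed denial-of-service (DDoS). In a DNS amplification attack:

The attacker sends DNS queries to open resolvers with the source IP address spoofed to be the victim's address.

The queries are chosen to elicit responses much larger than the queries themselves.

The resolvers send these large responses to the victim, whose bandwidth is overwhelmed by traffic it never requested.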

Similar amplification attacks can exploit other UDP-based services like NTP (Network Time Protocol) and SSDP (Simple Service Discovery Protocol).
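The danger lies in the ratio between response size and request size. This short Python sketch computes the bandwidth amplification factor and the resulting traffic at the victim. The 60-byte query, 3,000-byte response, and 100 Mbit/s attacker bandwidth are hypothetical illustration values, not measurements, and IP/UDP header bytes are ignored.

```python
def amplification_factor(request_bytes: int, response_bytes: int) -> float:
    """Bytes reflected at the victim for every byte the attacker sends."""
    return response_bytes / request_bytes


def victim_traffic_mbps(attacker_mbps: float, request_bytes: int, response_bytes: int) -> float:
    """Traffic arriving at the victim when all of the attacker's queries are reflected."""
    return attacker_mbps * amplification_factor(request_bytes, response_bytes)


if __name__ == "__main__":
    # Hypothetical sizes: a 60-byte query that triggers a 3,000-byte response.
    print(amplification_factor(60, 3000), "x amplification")
    print(victim_traffic_mbps(100, 60, 3000), "Mbit/s at the victim from 100 Mbit/s of queries")
```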

Rate Limiting

Implementing rate limiting controls the flow of UDP traffic to and from the network.

Robust Filtering Mechanisms

Employing advanced filtering techniques to block malicious traffic.

Disabling Unused UDP Services

Reducing the attack surface by disabling UDP services that are not in use:

Closing Unnecessary Ports: Shutting down services running on UDP ports that are not essential.

Securing Open Services: For necessary services, implementing authentication and access controls to prevent abuse.

Using DNSSEC and Response Rate Limiting (RRL)

For DNS servers:

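DNSSEC (Domain Name System Security Extensions): Adds authentication to DNS responses, protecting resolvers against forged responses such as cache-poisoning attempts. DNSSEC does not prevent amplification by itself; its signed responses are larger, which can increase the amplification factor, so it should be paired with response rate limiting.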

Response Rate Limiting: Configuring DNS servers to limit the rate of responses to prevent participating in amplification attacks.
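The core of response rate limiting can be sketched in Python: identical-looking bursts of responses to one client network are counted per second, and once a limit is exceeded most responses are dropped. Every `slip`-th excess response is instead sent truncated (TC flag set), so a legitimate client, whose address was not spoofed, can retry over TCP. This is a simplified illustration. Real implementations such as BIND's RRL also key on the query name and response type. The class, its parameters, and the IPv4-only /24 grouping are assumptions of this sketch.

```python
import ipaddress
import time
from collections import defaultdict
from typing import Callable, Dict, Tuple


class ResponseRateLimiter:
    """Simplified DNS response rate limiting keyed by the client's IPv4 /24 network."""

    def __init__(self, responses_per_second: int, slip: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.limit, self.slip, self.clock = responses_per_second, slip, clock
        self.window: Dict[ipaddress.IPv4Network, Tuple[int, int]] = {}  # net -> (second, count)
        self.dropped: Dict[ipaddress.IPv4Network, int] = defaultdict(int)

    def decide(self, client_ip: str) -> str:
        """Return 'send', 'truncate' (reply with TC=1 so the client retries over TCP) or 'drop'."""
        net = ipaddress.ip_network(f"{client_ip}/24", strict=False)
        second = int(self.clock())
        start, count = self.window.get(net, (second, 0))
        if start != second:
            start, count = second, 0  # a new one-second window begins
        count += 1
        self.window[net] = (start, count)
        if count <= self.limit:
            return "send"
        self.dropped[net] += 1
        # Every `slip`-th excess response is truncated instead of silently dropped.
        if self.slip and self.dropped[net] % self.slip == 0:
            return "truncate"
        return "drop"
```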
