By fast programming I mean templates that are automatically inserted into the code and solve simple problems.

Loading a dataset from a file and filling the data array with labels are elementary operations that can be copied and pasted from one project to another.

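```python
# Mount Google Drive inside the Colab runtime
from google.colab import drive

drive.mount('/content/gdrive', force_remount=True)

# Copy the archive from Drive into the working directory and unpack it
!cp /content/gdrive/'My Drive'/data.zip .
!unzip data.zip
```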

Google Colab loads the required dataset into the runtime during the session. This only has to be done once; afterwards, errors in the model can be fixed without touching this piece of code.
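Because the copy-and-unzip step only needs to run once per session, it can be guarded so that re-running the cell does no extra work. The sketch below is a minimal standard-library version of that idea; it swaps the `!unzip` shell command for Python's `zipfile` module, and the function name `prepare_data` and the `data` extraction subdirectory are hypothetical (`!unzip` extracts into the current directory instead).

```python
import os
import shutil
import zipfile


def prepare_data(archive_src, workdir, extract_dir="data"):
    """Copy a .zip archive into workdir and extract it, skipping work already done.

    prepare_data and the default extract_dir are hypothetical names.
    Returns the path of the extraction directory.
    """
    local_zip = os.path.join(workdir, os.path.basename(archive_src))
    target = os.path.join(workdir, extract_dir)

    # Copy the archive only if it is not already in the working directory
    if not os.path.exists(local_zip):
        shutil.copy(archive_src, local_zip)

    # Extract only once: an existing target directory means the work is done
    if not os.path.isdir(target):
        with zipfile.ZipFile(local_zip) as zf:
            zf.extractall(target)
    return target
```

In Colab this would be called as, for example, `prepare_data("/content/gdrive/My Drive/data.zip", "/content")` after mounting Drive; a second call returns immediately.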

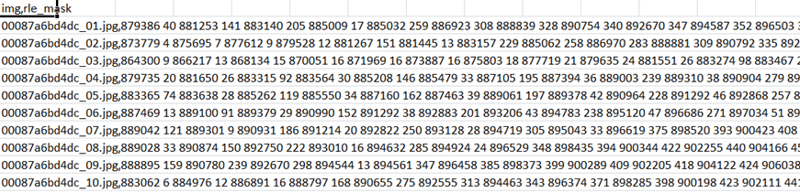

The dataset is extracted from the .zip file and parsed into labels (see image 1). What matters to us here is not the quality of individual images in the data array, but the average size of the downloaded files.
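The average file size mentioned above can be measured directly after extraction. A minimal sketch, assuming the images sit somewhere under one root directory; the function name and the list of image extensions are assumptions, not part of the original template.

```python
import os


def average_file_size(root, extensions=(".png", ".jpg", ".jpeg")):
    """Return the mean size in bytes of files under root with the given extensions.

    The extension list is an assumption; returns 0.0 if no files match.
    """
    sizes = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            # Case-insensitive match, so "photo.JPG" counts as well
            if name.lower().endswith(extensions):
                sizes.append(os.path.getsize(os.path.join(dirpath, name)))
    return sum(sizes) / len(sizes) if sizes else 0.0
```

Non-image files such as a labels CSV are skipped, so they do not distort the average.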

We also copy over the library imports that recur across projects:

```python
import keras
from keras.layers import (Activation, Concatenate, Conv2D, Dense, Dropout,
                          GlobalAveragePooling2D, Input, MaxPooling2D, UpSampling2D)
from keras.models import Model

# Input tensor for 256x256 RGB images
inp = Input(shape=(256, 256, 3))
```

The code that works with the data itself, however, is better written and checked against the actual data each time, since array sizes change from task to task. A quick check is to pull a single batch from the generator:

```python
# Take one batch of 16 samples from the generator and stop, to inspect x and y
for x, y in keras_generator(train_df, 16):
    break
```
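`keras_generator` and `train_df` are not defined in this article. The sketch below is a hypothetical, dependency-free stand-in that yields `(x, y)` batches from a list of `(image, label)` pairs, only to show why taking one batch and calling `break` is a cheap way to see what the model will receive. A real generator would load and preprocess the images (for example, from the rows of a DataFrame) instead of passing file names through.

```python
import random


def keras_generator(samples, batch_size, seed=None):
    """Hypothetical stand-in: endlessly yield (x, y) batches from (image, label) pairs.

    Like a Keras training generator it never stops on its own,
    so the caller has to break out of the loop.
    """
    if len(samples) < batch_size:
        raise ValueError("batch_size is larger than the number of samples")
    rng = random.Random(seed)
    order = list(samples)  # shuffle a copy, not the caller's list
    while True:
        rng.shuffle(order)
        # Drop the incomplete last batch so every batch has batch_size items
        for start in range(0, len(order) - batch_size + 1, batch_size):
            batch = order[start:start + batch_size]
            x = [image for image, _ in batch]
            y = [label for _, label in batch]
            yield x, y


# Take a single batch to inspect it, as in the loop above
samples = [(f"img_{i}.png", i % 2) for i in range(40)]
for x, y in keras_generator(samples, 16):
    break
print(len(x), len(y))  # 16 16
```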

We make sure that the number of labels matches the number of images in the sample. Otherwise, model training breaks down with errors.
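One way to make this check concrete is to compare the image files with the entries of the label table before training. A minimal sketch, assuming the labels are held in a dict that maps file names to classes (a hypothetical layout; names read from a CSV would work the same way).

```python
def check_labels(image_files, labels):
    """Compare image file names with a {file_name: label} mapping.

    Returns (unlabeled, missing): images without a label, and labels whose
    image is absent. Both lists are empty when the data is consistent.
    """
    images = set(image_files)
    label_names = set(labels)
    unlabeled = sorted(images - label_names)
    missing = sorted(label_names - images)
    return unlabeled, missing


images = ["a.png", "b.png", "c.png"]
labels = {"a.png": 0, "b.png": 1, "d.png": 1}
unlabeled, missing = check_labels(images, labels)
print(unlabeled, missing)  # ['c.png'] ['d.png']
```

Comparing names rather than only counts also catches the case where the totals agree but individual files and labels do not.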

We also keep track of declared variables. If a single entity goes by different names within one project, there is a risk of data conflicts.
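A simple way to keep one name per entity is to declare shared settings once and refer to them everywhere. A minimal sketch with a frozen dataclass; the class and field names are hypothetical, and the values only mirror the input shape `(256, 256, 3)`, the batch size of 16, and the `data.zip` archive used in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Config:
    """Single place for settings shared across the project (hypothetical fields)."""
    image_shape: tuple = (256, 256, 3)
    batch_size: int = 16
    archive_name: str = "data.zip"


CFG = Config()

# Every part of the project uses CFG.batch_size instead of its own bs / batch / n_batch
print(CFG.image_shape, CFG.batch_size)
```

Making the dataclass frozen means a stray assignment such as `CFG.batch_size = 32` raises an error instead of silently changing the value for the rest of the notebook.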

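```python
# Assemble the model from the input tensor and `result`, the output of the last layer
model = Model(inputs=inp, outputs=result)
```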

It is better to keep such snippets on GitHub for frequent reference: in a local file system, even familiar resources are easy to lose track of.

The above is the detailed content of Fast programming. For more information, please follow other related articles on the PHP Chinese website!