Llama 3 and GPT-4 are two of the most advanced large language models (LLMs) available to the public. Let’s see which LLM is better by comparing both models in terms of multimodality, context length, performance, and cost.

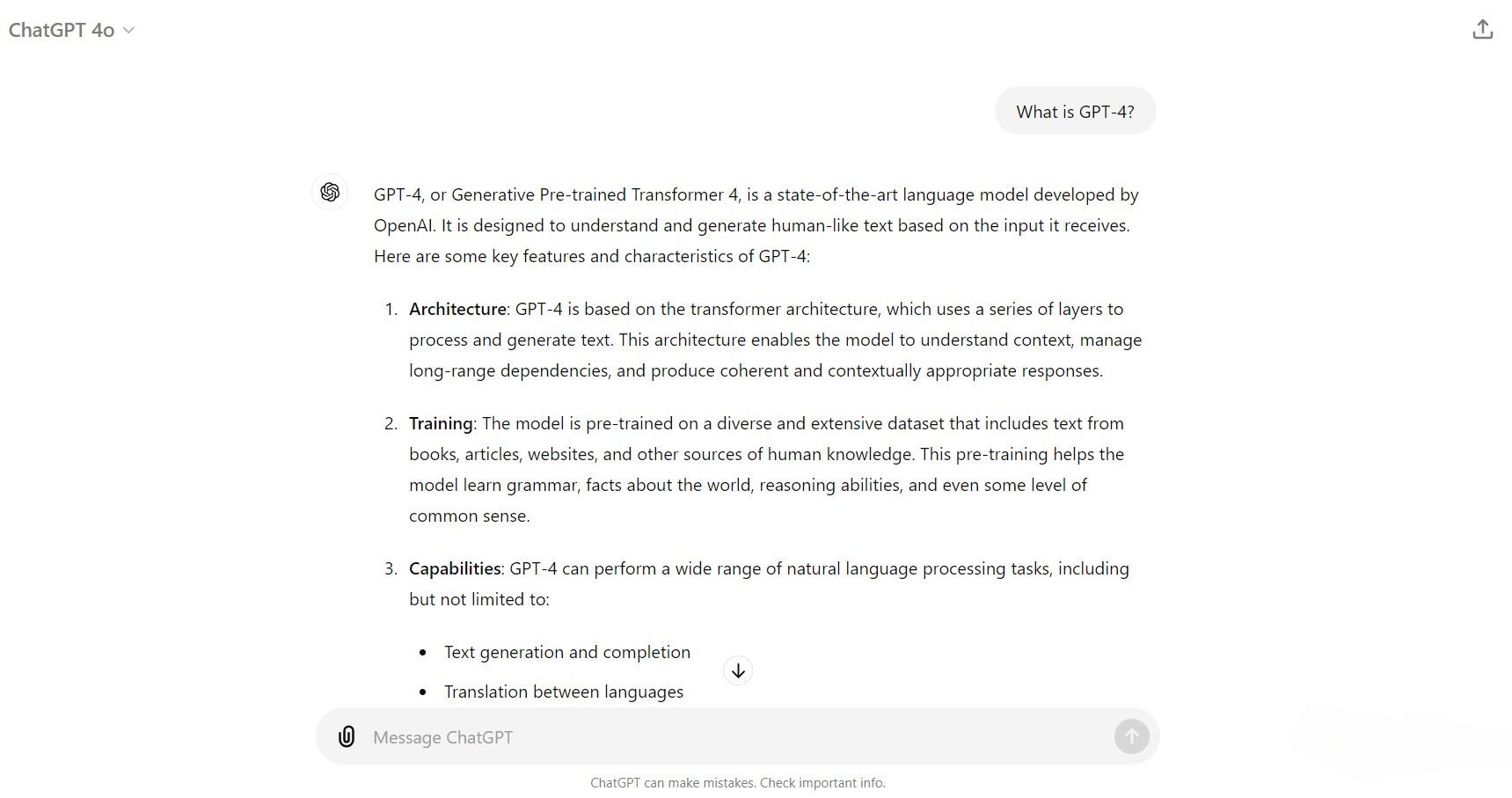

GPT-4 is the latest large language model (LLM) developed by OpenAI. It builds upon the foundations of the older GPT-3 models while using different training techniques and optimizations using a much larger dataset. This significantly increased the parameter size of GPT-4, which is rumored to have a combined total of 1.7 trillion parameters from its smaller expert models. With the new training, optimizations, and larger number of parameters, GPT-4 provides improvements in reasoning, problem-solving, context understanding, and better handling of nuanced instructions.

There are currently three variations of the model:

You can now access all three GPT-4 models by subscribing to OpenAI’s API services, interacting with ChatGPT, or through services such as Descript, Perplexity AI, and the various copilots from Microsoft.

Llama 3 is an open-source LLM developed by Meta AI (parent company of Facebook, Instagram, and WhatsApp), trained using a combination of supervised fine-tuning, rejection sampling, and policy optimization with a diverse dataset including millions of human-annotated examples. Its training focused on high-quality prompts and preference rankings, aiming to create a versatile and capable AI model.

There are currently two Llama 3 models available to the public: Llama 3 8B and Llama 3 70B. The “B” stands for billion, pointing to the model's parameter size. Meta is also training a Llama 3 400B model, expected to launch late in 2024.

You can access Llama 3 through Meta AI, its generative AI chatbot. Alternatively, you can run the LLMs locally on your computer by downloading Llama 3 models and loading them through Ollama, Open WebUI, or LM Studio.

The release of GPT-4o has finally delivered on the initial marketing of GPT-4 having multimodal capabilities. These multimodal features can now be accessed by interacting with ChatGPT using the GPT-4o model. As of June 2024, GPT-4o doesn’t have any integrated way of generating video and audio. However, it does have capabilities to generate text and images based on video and audio inputs.

Llama 3 is also planning to provide a multimodal model for the upcoming Llama 3 400B. It will most likely integrate similar technologies to CLIP (Contrast Language-Imager Pre-Training) to generate images using zero-shot learning techniques. But since Llama 400B is still in training, the only way for the 8B and 70B models to generate images is to use extensions such as the LLaVa, Visual-LLaMA, and LLaMA-VID. As of now, Llama 3 is purely a language-based model that can take text, image, and audio as inputs for generating text.

Context length refers to the amount of text a model can process at once. It is an important factor when considering an LLM’s capability as it dictates the amount of context the model can work with when interacting with users. In general, a higher context length makes an LLM better as it provides a higher level of coherence, continuity, and can reduce repetitions of errors during interactions.

| Training Data Description | Params | Context Length | GQA | Token Count | Knowledge Cutoff | |||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Llama 3 | Mix of publicly available online data | 8B | 8k | Yes | 15T | March, 2023 | |||||||||||||||||||||

| Llama 3 | Mix of publicly available online data | 70B | 8k | Yes | 15T | December, 2023 |

Llama 3 models feature a context length of effectively 8,000 tokens (approximately 6,400 words). This means a Llama 3 model will have a context memory of around 6,400 words within your interaction. Any words past the 8,000 token limit will be forgotten and will not provide any further context during the interaction.

| Description | Context Window | Training Data | ||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| GPT-4o | Multimodal flagship model, cheaper and faster than GPT-4 Turbo. | 128,000 tokens (API) | Up to Oct 2023 | ||||||||||||||||

| GPT-4-Turbo | Streamlined GPT-4 Turbo model with vision capabilities. | 128,000 tokens (API) | Up to Dec 2023 | ||||||||||||||||

| GPT-4 | First GPT-4 model | 8,192 tokens | Up to Sep 2021 |

In contrast, GPT-4 now supports a significantly larger context length of 32,000 tokens (around 25,600 words) for ChatGPT users and 128,000 tokens (around 102,400 words) for those using API endpoints. This gives GPT-4 models an edge in managing extensive conversations and the ability to read long documents or even through an entire book.

Let’s compare performance by looking at the Llama 3 April 18, 2024, benchmark report from Meta AI and the GPT-4 May 14, 2024, GitHub report by OpenAI. Here are the results:

| MMLU | GPQA | MATH | HumanEval | DROP | ||||||||||||||||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| GPT-4o | 88.7 | 53.6 | 76.6 | 90.2 | 83.4 | ||||||||||||||||||||||||||||||||||||

| GPT-4 Turbo | 86.5 | 49.1 | 72.2 | 87.6 | 85.4 | ||||||||||||||||||||||||||||||||||||

| Llama3 8B | 68.4 | 34.2 | 30.0 | 62.2 | 58.4 | ||||||||||||||||||||||||||||||||||||

| Llama3 70B | 82.0 | 39.5 | 50.4 | 81.7 | 79.7 | ||||||||||||||||||||||||||||||||||||

| Llama3 400B | 86.1 | 48.0 | 57.8 | 84.1 | 83.5 |

Here’s what each criterion evaluates:

The recent benchmarks highlight the performance difference between GPT-4 and Llama 3 models. Though the Llama 3 8B model seems to lag significantly behind, the 70B and 400B models provide lower but similar results to both GPT-4o and GPT-4 Turbo models in terms of academic and general knowledge, reading and comprehension, reasoning and logic, and coding. However, no Llama 3 model is yet to come close to the performance of GPT-4 in terms of pure mathematics.

Cost is a critical factor for many users. OpenAI's GPT-4o model is available to all ChatGPT users for free at a 16-message limit every 3 hours. If you need more, then you'll have to subscribe to ChatGPT Plus, which costs $20 USD per month to expand GPT-4o's message limit to 80 while also having access to the other GPT-4 models.

On the other hand, both Llama 3 8B and 70B models are free and open-source, which can be a significant advantage for developers and researchers looking for a cost-effective solution without compromising on performance.

GPT-4 models are widely accessible through OpenAI's ChatGPT generative AI chatbot and through its API. You can also use GPT-4 on Microsoft Copilot, which is one way you can use GPT-4 for free. This widespread availability ensures that users can easily leverage its capabilities across different use cases. In contrast, Llama 3 is an open-source project that provides model flexibility and encourages broader experimentation and collaboration within the AI community. This open-access approach can democratize AI technology, making it available to a much wider audience.

Although both models are readily available, GPT-4 is much easier to use because it is integrated into popular productivity tools and services. On the other hand, Llama 3 is mainly integrated into research and business platforms like Amazon Bedrock, Ollama, and DataBricks (except for Meta AI chat assist), which doesn't appeal to the larger market of non-technical users.

So, which LLM is better? I would have to say that GPT-4 is the better LLM. GPT-4 excels in multimodality with advanced capabilities in handling text, image, and audio inputs, while Llama 3's similar features are still in development. GPT-4 also offers a much larger context length and better performance and is widely accessible through popular tools and services, making it more user-friendly.

However, it's important to highlight that Llama 3 models have performed exceptionally well for a free and open-source project. As a result, Llama 3 remains a standout LLM, favored by researchers and businesses for its cost-free and open-source nature while providing impressive performance, flexibility, and reliable privacy features. While general consumers might not find immediate use for Llama 3, it remains the most viable option for many researchers and businesses.

In conclusion, although GPT-4 stands out for its advanced multimodal capabilities, larger context length, and seamless integration into widely used tools, Llama 3 offers a valuable alternative with its open-source nature, allowing for greater customization and cost savings. So, in terms of application, GPT-4 is ideal for those seeking ease of use and comprehensive features in a model, while Llama 3 is well-suited for developers and researchers looking for flexibility and adaptability.

The above is the detailed content of Llama 3 vs. GPT-4: Which Is Better?. For more information, please follow other related articles on the PHP Chinese website!