Caltech in PyTorch


*My post explains Caltech 101.

Caltech101() can load the Caltech 101 dataset, as described below:

*Memos:

- The 1st argument is root(Required-Type:str or pathlib.Path). *An absolute or relative path is possible.

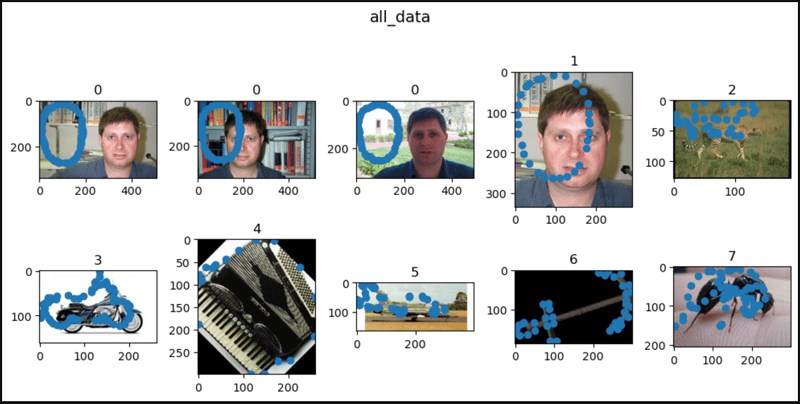

- The 2nd argument is target_type(Optional-Default:"category"-Type:str or tuple or list of str). *"category" and/or "annotation" can be set to it.

- The 3rd argument is transform(Optional-Default:None-Type:callable).

- The 4th argument is target_transform(Optional-Default:None-Type:callable).

- The 5th argument is download(Optional-Default:False-Type:bool):

*Memos:

- If it's True, the dataset is downloaded from the internet and extracted(unzipped) to root.

- If it's True and the dataset is already downloaded, it's extracted.

- If it's True and the dataset is already downloaded and extracted, nothing happens.

- It should be False if the dataset is already downloaded and extracted because it's faster.

- You can manually download and extract the dataset(101_ObjectCategories.tar.gz and Annotations.tar) from here to data/caltech101/.

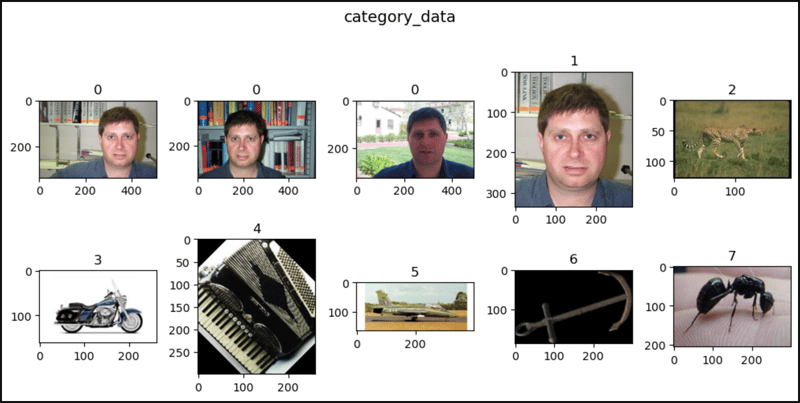

- The image indices are grouped by category: Faces(0) is 0~434, Faces_easy(1) is 435~869, Leopards(2) is 870~1069, Motorbikes(3) is 1070~1867, accordion(4) is 1868~1922, airplanes(5) is 1923~2722, anchor(6) is 2723~2764, ant(7) is 2765~2806, barrel(8) is 2807~2853, bass(9) is 2854~2907, etc.
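The memos above can be tied together in a minimal sketch. It is an illustration under stated assumptions, not the original post's code: `load_caltech101()`, `category_of()`, `CATEGORY_STARTS`, `CATEGORY_NAMES` and `LAST_LISTED_INDEX` are hypothetical names introduced here, `root="data"` is assumed from the manual-download path `data/caltech101/`, and the lookup covers only the ten categories whose index ranges are listed above.

```python
from bisect import bisect_right

# First image index of each category, copied from the memo above.
# Only the ten categories listed there are covered.
CATEGORY_STARTS = [0, 435, 870, 1070, 1868, 1923, 2723, 2765, 2807, 2854]
CATEGORY_NAMES = [
    "Faces", "Faces_easy", "Leopards", "Motorbikes", "accordion",
    "airplanes", "anchor", "ant", "barrel", "bass",
]
LAST_LISTED_INDEX = 2907  # last index the memo gives (end of "bass")


def category_of(index):
    """Return (label, name) for an image index covered by the memo."""
    if not 0 <= index <= LAST_LISTED_INDEX:
        raise IndexError(f"index {index} is outside the ranges listed in the memo")
    # The category is the last one whose first index is <= index.
    label = bisect_right(CATEGORY_STARTS, index) - 1
    return label, CATEGORY_NAMES[label]


def load_caltech101(root="data", download=False):
    """Build the dataset with the five documented arguments (hypothetical helper)."""
    # Imported lazily so category_of() works without torchvision installed.
    from torchvision.datasets import Caltech101

    return Caltech101(
        root=root,                # assumed: files live under data/caltech101/
        target_type="category",   # or "annotation", or a list of both
        transform=None,
        target_transform=None,
        download=download,        # keep False once downloaded and extracted
    )


if __name__ == "__main__":
    print(category_of(435))  # (1, 'Faces_easy')
```

If the dataset is already on disk, `load_caltech101()[435]` should return an image together with the label 1, which is what `category_of(435)` reports according to the ranges listed in the memo.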

