Concurrent programming is an approach that deals with executing multiple tasks at the same time. In Python, asyncio is a powerful tool for asynchronous programming. Built on coroutines, asyncio handles I/O-intensive tasks efficiently. This article introduces the basic principles and usage of asyncio.

For I/O operations, multithreading can greatly improve efficiency compared with a single thread. So why do we still need asyncio?

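Multithreading has many advantages and is widely used, but it also has limitations. A thread can be interrupted by the scheduler at almost any point, which makes race conditions possible. Switching between threads has its own cost. The number of threads cannot grow without limit, so when I/O operations are very heavy, multithreading may not keep up.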

It is precisely to solve these problems that asyncio emerged.

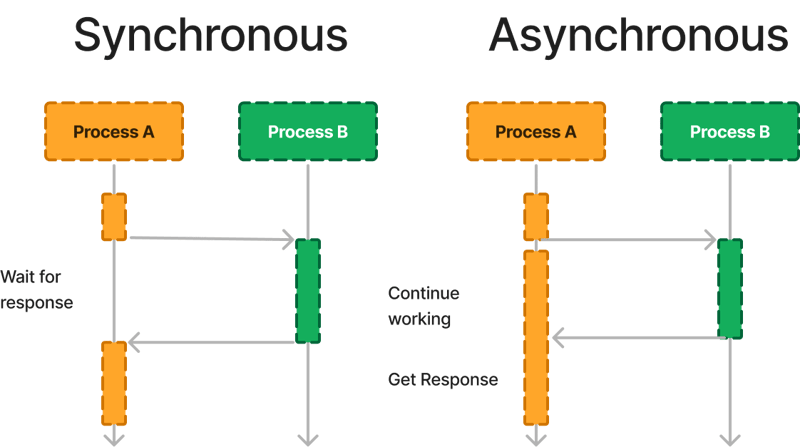

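Let's first distinguish between synchronous (sync) and asynchronous (async) execution. In synchronous code, operations run one after another, and each must finish before the next one starts. In asynchronous code, an operation that is waiting (for example, on the network) can hand control to other work and resume once its result is ready.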
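To make the distinction concrete, here is a minimal sketch that performs the same three waits twice: first synchronously (one after another), then asynchronously (overlapping). `time.sleep()` stands in for blocking I/O and `asyncio.sleep()` for non-blocking I/O. The function names and the 0.2-second delay are illustrative assumptions.

```python
import asyncio
import time

DELAY = 0.2  # seconds each simulated I/O operation takes (illustrative value)
COUNT = 3    # number of simulated operations

def wait_sync():
    # Synchronous: each wait blocks until it finishes, so the delays add up
    for _ in range(COUNT):
        time.sleep(DELAY)

async def wait_async():
    # Asynchronous: all waits are started together and overlap in time
    await asyncio.gather(*(asyncio.sleep(DELAY) for _ in range(COUNT)))

start = time.perf_counter()
wait_sync()
sync_elapsed = time.perf_counter() - start

start = time.perf_counter()
asyncio.run(wait_async())
async_elapsed = time.perf_counter() - start

print(f"sync:  {sync_elapsed:.2f} s")   # roughly COUNT * DELAY
print(f"async: {async_elapsed:.2f} s")  # roughly DELAY
```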

asyncio works through two mechanisms: coroutines and the event loop. Coroutines express the asynchronous operations, and the event loop schedules and runs them. Together they give asyncio an efficient asynchronous programming model.
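You can observe this scheduling directly. In the minimal sketch below, two coroutines append to a shared list. After each step they hand control back to the event loop with `await asyncio.sleep(0)`, so the loop alternates between them. The `worker` coroutine and its labels are hypothetical.

```python
import asyncio

async def worker(name, log, steps):
    for i in range(steps):
        log.append(f"{name}{i}")
        # Yield control to the event loop so another ready task can run
        await asyncio.sleep(0)

async def main():
    log = []
    # gather() wraps each coroutine in a Task and schedules both on the loop
    await asyncio.gather(worker("A", log, 3), worker("B", log, 3))
    return log

order = asyncio.run(main())
print(order)  # ['A0', 'B0', 'A1', 'B1', 'A2', 'B2']
```

Neither coroutine is interrupted from outside. They interleave only because each one reaches an `await`.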

Coroutines are a central concept in asyncio. They are lightweight execution units that can switch between tasks quickly, without the overhead of thread switching. A coroutine is defined with `async def`. Inside it, the `await` keyword suspends the coroutine until the awaited operation completes, after which it resumes.
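Calling a function defined with `async def` does not run its body. It only creates a coroutine object, which runs once an event loop drives it, for example through `asyncio.run()` or an `await`. Here is a minimal sketch using a hypothetical `add` coroutine:

```python
import asyncio
import inspect

async def add(a, b):
    await asyncio.sleep(0.1)  # suspend here; the event loop resumes us after 0.1 s
    return a + b

coro = add(1, 2)  # the body has not started yet; this is just a coroutine object
print(inspect.iscoroutine(coro))  # True

result = asyncio.run(coro)  # the event loop runs the coroutine to completion
print(result)  # 3
```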

Here is a simple example of using a coroutine for asynchronous programming:

```python
import asyncio

async def hello():
    print("Hello")
    await asyncio.sleep(1)  # Simulate a time-consuming operation
    print("World")

# asyncio.run() creates an event loop, runs the coroutine to completion, then closes the loop
asyncio.run(hello())
```

In this example, hello() is a coroutine defined with the async keyword. Inside the coroutine, await pauses its execution; here asyncio.sleep(1) simulates a time-consuming operation. asyncio.run() creates an event loop, runs the coroutine on it until it completes, and then closes the loop.

Older code often does the same in two steps: loop = asyncio.get_event_loop(), then loop.run_until_complete(hello()). However, calling get_event_loop() when no event loop is set is deprecated in recent Python versions, and asyncio.run() is the recommended entry point.

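asyncio is mainly used for I/O-intensive tasks such as network requests. It provides a set of APIs for asynchronous I/O, such as streams and subprocesses, which you combine with the await keyword to write asynchronous code. File reading and writing is a partial exception: asyncio has no asynchronous API for regular files, so file I/O is usually moved to a thread with asyncio.to_thread() or handled by a third-party library.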
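As an example of asyncio's own I/O API, here is a minimal sketch of a TCP echo server and client built on asyncio streams, `asyncio.start_server()` and `asyncio.open_connection()`. The handler name `handle_echo`, the message, and binding to 127.0.0.1 on an OS-assigned port are illustrative assumptions.

```python
import asyncio

async def handle_echo(reader, writer):
    # Read one line from the client and send it straight back
    data = await reader.readline()
    writer.write(data)
    await writer.drain()
    writer.close()
    await writer.wait_closed()

async def main():
    # Port 0 asks the OS for any free port
    server = await asyncio.start_server(handle_echo, "127.0.0.1", 0)
    port = server.sockets[0].getsockname()[1]

    async with server:  # closes the server when the block exits
        reader, writer = await asyncio.open_connection("127.0.0.1", port)
        writer.write(b"hello asyncio\n")
        await writer.drain()
        reply = await reader.readline()  # wait for the echoed line
        writer.close()
        await writer.wait_closed()
    return reply

reply = asyncio.run(main())
print(reply)  # b'hello asyncio\n'
```

Every network wait in this code is an `await`, so while one connection is waiting, the same event loop is free to serve others.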

Here is a simple example that uses asyncio for asynchronous network requests:

```python
import asyncio

import aiohttp  # third-party library: pip install aiohttp

async def fetch(session, url):
    # Send a GET request and wait for the response body without blocking the event loop
    async with session.get(url) as response:
        return await response.text()

async def main():
    # Create one ClientSession and reuse it for every request
    async with aiohttp.ClientSession() as session:
        html = await fetch(session, 'https://www.example.com')
        print(html)

# Create an event loop, run main() to completion, then close the loop
asyncio.run(main())
```

In this example, we use the aiohttp library for network requests. The coroutine fetch() sends an asynchronous GET request with session.get() and uses await to wait for the response body. The coroutine main() creates a ClientSession object, which can be reused across requests, then calls fetch() to get the web page content and prints it.

Note: we use aiohttp instead of the requests library because requests is blocking. Each call holds the thread until the response arrives, so calling it inside a coroutine stalls the whole event loop. aiohttp, by contrast, is built on asyncio and yields control while it waits. To get the full benefit of asyncio, you often need libraries designed to work with it.
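To see why a blocking library hurts, this minimal sketch runs a "heartbeat" coroutine alongside a blocking call. `time.sleep()` stands in for a blocking function such as a requests call, which is an assumption for illustration. Called directly inside a coroutine, it freezes the event loop. Wrapped in `asyncio.to_thread()` (Python 3.9+), it runs in a worker thread and the loop keeps going.

```python
import asyncio
import time

def blocking_io():
    # Stand-in for a blocking library call
    time.sleep(0.5)

async def heartbeat(ticks):
    # Records a tick roughly every 0.1 s, but only while the event loop is free
    for _ in range(5):
        ticks.append(time.perf_counter())
        await asyncio.sleep(0.1)

async def run(offload):
    ticks = []
    hb = asyncio.create_task(heartbeat(ticks))
    await asyncio.sleep(0)  # let the heartbeat record its first tick
    if offload:
        await asyncio.to_thread(blocking_io)  # runs in a thread; loop stays responsive
    else:
        blocking_io()  # blocks the whole event loop
    await hb
    return ticks

def largest_gap(ticks):
    return max(b - a for a, b in zip(ticks, ticks[1:]))

blocked = asyncio.run(run(offload=False))
offloaded = asyncio.run(run(offload=True))
print(f"largest gap, direct blocking call: {largest_gap(blocked):.2f} s")
print(f"largest gap, asyncio.to_thread:    {largest_gap(offloaded):.2f} s")
```

`asyncio.to_thread()` is a workaround for the occasional blocking call. For frequent I/O, a library built for asyncio remains the better fit.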

asyncio also provides mechanisms for running multiple tasks concurrently, such as asyncio.gather() and asyncio.wait(). The following example uses asyncio.gather() to run two coroutines concurrently:

```python
import asyncio

async def task1():
    print("Task 1 started")
    await asyncio.sleep(1)  # simulate 1 second of I/O
    print("Task 1 finished")

async def task2():
    print("Task 2 started")
    await asyncio.sleep(2)  # simulate 2 seconds of I/O
    print("Task 2 finished")

async def main():
    # Run both coroutines concurrently and wait until both are done
    await asyncio.gather(task1(), task2())

asyncio.run(main())
```

In this example, we define two coroutines, task1() and task2(), both of which simulate time-consuming operations. The coroutine main() runs them concurrently with asyncio.gather() and waits for both to finish. Because the two waits overlap, the program takes about 2 seconds instead of the 3 seconds that running them one after another would take.
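asyncio.wait(), the other mechanism mentioned above, takes tasks and returns two sets: done and pending. This is useful when you want to react to the first result instead of waiting for all of them. Here is a minimal sketch; the `job` coroutine and its delays are hypothetical.

```python
import asyncio

async def job(name, delay):
    await asyncio.sleep(delay)
    return name

async def main():
    # asyncio.wait() expects tasks (or futures), not bare coroutines
    tasks = [
        asyncio.create_task(job("fast", 0.1)),
        asyncio.create_task(job("slow", 1.0)),
    ]
    done, pending = await asyncio.wait(tasks, return_when=asyncio.FIRST_COMPLETED)
    first = [t.result() for t in done]

    # Cancel whatever is still running and wait for the cancellation to finish
    for t in pending:
        t.cancel()
    await asyncio.gather(*pending, return_exceptions=True)
    return first, len(pending)

first, still_pending = asyncio.run(main())
print(first, still_pending)  # ['fast'] 1
```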

In actual projects, should we choose multithreading or asyncio? One experienced developer summed it up vividly:

```python
if io_bound:
    if io_slow:
        print('Use Asyncio')
    else:
        print('Use multi-threading')
elif cpu_bound:
    print('Use multi-processing')
```

The last branch covers CPU-bound work. In the standard CPython build, only one thread executes Python bytecode at a time because of the global interpreter lock. As a result, neither threads nor single-threaded asyncio speeds up pure-Python computation, while separate processes can use several CPU cores. Consider this task: given a list as input, calculate for each element the sum of the squares of all integers from 0 to that element.

Processing the elements one after another, the program reports: Calculation takes 16.00943413000002 seconds

With a process pool, it reports: Calculation takes 7.314132894999999 seconds

In this improved code, we use concurrent.futures.ProcessPoolExecutor to create a process pool, then use the executor.map() method to submit the tasks and collect the results. Note that executor.map() returns an iterator, so if you need the results, convert them to a list or iterate over them.

A further parallel implementation reports: Calculation takes 5.024221667 seconds
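A minimal sketch of the sequential and ProcessPoolExecutor approaches to this calculation might look like the following. The function names, the inclusive upper bound, and the input sizes are illustrative assumptions rather than the code behind the timings above. Your numbers will depend on your machine and core count.

```python
import time
from concurrent.futures import ProcessPoolExecutor

def sum_of_squares(n):
    # Sum of i*i for every integer i from 0 to n inclusive
    return sum(i * i for i in range(n + 1))

def calculate_sequential(numbers):
    return [sum_of_squares(n) for n in numbers]

def calculate_parallel(numbers):
    # Each element is handled by a worker process; map() yields results in input order
    with ProcessPoolExecutor() as executor:
        return list(executor.map(sum_of_squares, numbers))

# The guard is required where worker processes re-import this module ("spawn" start method)
if __name__ == "__main__":
    numbers = [1_000_000 + x for x in range(8)]  # illustrative input sizes

    start = time.perf_counter()
    sequential = calculate_sequential(numbers)
    print(f"Sequential: {time.perf_counter() - start:.2f} seconds")

    start = time.perf_counter()
    parallel = calculate_parallel(numbers)
    print(f"ProcessPoolExecutor: {time.perf_counter() - start:.2f} seconds")

    assert sequential == parallel
```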

concurrent.futures.ProcessPoolExecutor and the multiprocessing module both implement multi-process concurrency in Python, but they work at different levels of abstraction.

In summary, concurrent.futures.ProcessPoolExecutor is a high-level interface that wraps the underlying multi-process machinery and suits simple task parallelization. multiprocessing is lower-level and offers more control and flexibility, which suits scenarios that need fine-grained control over processes. If you only need to parallelize simple tasks, ProcessPoolExecutor keeps the code short. If you need lower-level control and inter-process communication, use the multiprocessing library.
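For contrast, here is a minimal sketch of the lower-level style that multiprocessing allows. It uses explicit Process objects that send results back through a Queue, which gives you control over each process's lifecycle and over the communication channel. The `worker` function and the input values are hypothetical. For plain parallel mapping, ProcessPoolExecutor or multiprocessing.Pool is simpler.

```python
import multiprocessing as mp

def sum_of_squares(n):
    # Sum of i*i for every integer i from 0 to n inclusive
    return sum(i * i for i in range(n + 1))

def worker(index, n, queue):
    # Compute in the child process and report (index, result) to the parent
    queue.put((index, sum_of_squares(n)))

def calculate_with_processes(numbers):
    queue = mp.Queue()
    processes = [
        mp.Process(target=worker, args=(i, n, queue))
        for i, n in enumerate(numbers)
    ]
    for p in processes:
        p.start()
    # Read one message per process before joining, so no child is left blocked on the queue
    results = dict(queue.get() for _ in processes)
    for p in processes:
        p.join()
    # Messages arrive in completion order, so restore the input order
    return [results[i] for i in range(len(numbers))]

if __name__ == "__main__":
    print(calculate_with_processes([10, 100, 1000]))  # [385, 338350, 333833500]
```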

Unlike multithreading, asyncio runs in a single thread, yet its event loop lets it run many different tasks concurrently. Each task decides when to give up control (at an await), so you have more control over task switching than with threads, which the operating system can switch at any moment.

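Tasks in asyncio are not interrupted at arbitrary points: a task is suspended only where it awaits. Code between two awaits therefore runs without interference, which eliminates the races caused by preemption. However, state that a task reads before an await and writes after it can still be changed by another task in between.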
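One caveat is worth illustrating. A task can be suspended at any `await`, so a read-modify-write that spans an `await` can still interleave with other tasks. In this minimal sketch, the `Counter` class and the `asyncio.sleep(0)` inside the update are illustrative assumptions. Without a lock, updates are lost; with `asyncio.Lock`, they are all kept.

```python
import asyncio

class Counter:
    def __init__(self):
        self.value = 0

async def increment(counter, lock=None):
    async def update():
        current = counter.value
        await asyncio.sleep(0)  # suspension point between the read and the write
        counter.value = current + 1

    if lock is None:
        await update()
    else:
        async with lock:  # only one task at a time may run the update
            await update()

async def run(use_lock, n=100):
    counter = Counter()
    lock = asyncio.Lock() if use_lock else None
    await asyncio.gather(*(increment(counter, lock) for _ in range(n)))
    return counter.value

unsafe = asyncio.run(run(use_lock=False))
safe = asyncio.run(run(use_lock=True))
print(unsafe, safe)  # 1 100
```

In the unlocked run, all 100 tasks read 0 before any of them writes, so every write stores 1.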

In scenarios with heavy I/O especially, asyncio runs more efficiently than multithreading. Switching between asyncio tasks costs much less than switching between threads, and a program can run far more asyncio tasks at once than it can practically run threads.
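To illustrate the point about task counts, this minimal sketch starts 10,000 tasks that each wait 0.5 seconds. Because they all wait concurrently on a single thread, the whole batch finishes in roughly 0.5 seconds rather than 10,000 × 0.5 seconds. The count and delay are arbitrary choices for illustration.

```python
import asyncio
import time

async def task(i):
    await asyncio.sleep(0.5)  # simulated slow I/O
    return i

async def main(n):
    # Each coroutine becomes a lightweight task on the same event loop
    return await asyncio.gather(*(task(i) for i in range(n)))

start = time.perf_counter()
results = asyncio.run(main(10_000))
elapsed = time.perf_counter() - start
print(f"{len(results)} tasks finished in {elapsed:.2f} seconds")
```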

However, it should be noted that in many cases, using asyncio requires the support of specific third-party libraries, such as aiohttp in the previous example. And if the I/O operations are fast and not heavy, using multithreading can also effectively solve the problem.

Finally, let me introduce the ideal platform for deploying Flask/FastAPI: Leapcell.

Leapcell is a cloud computing platform specifically designed for modern distributed applications. Its pay-as-you-go pricing model ensures no idle costs, meaning users only pay for the resources they actually use.


Leapcell Twitter: https://x.com/LeapcellHQ

The above is the detailed content of High-Performance Python: Asyncio. For more information, please follow other related articles on the PHP Chinese website!