API Rate Limiting in Node.js: Strategies and Best Practices

Modern web applications rely heavily on APIs, and that reliance calls for robust safeguards. Rate limiting, which caps how many requests a client may make within a defined time window, is essential for keeping an API stable, secure, and scalable.

This article explores advanced rate-limiting techniques and best practices within a Node.js environment, utilizing popular tools and frameworks.

The Importance of Rate Limiting

Rate limiting protects your API from misuse, denial-of-service (DoS) attacks, and accidental overloads. Its main benefits are:

- Enhanced Security: Preventing brute-force attacks.

- Improved Performance: Ensuring equitable resource distribution.

- Sustained Stability: Preventing server crashes due to overwhelming requests.

Let's examine advanced methods for effective Node.js implementation.

1. Building a Node.js API with Express

We begin by creating a basic Express API:

const express = require('express');

const app = express();

app.get('/api', (req, res) => {
  res.send('Welcome!');
});

const PORT = process.env.PORT || 3000;

app.listen(PORT, () => console.log(`Server running on port ${PORT}`));

This forms the base for applying rate-limiting mechanisms.

2. Basic Rate Limiting with express-rate-limit

The express-rate-limit package simplifies rate-limiting implementation:

npm install express-rate-limit

Configuration:

const rateLimit = require('express-rate-limit');

const limiter = rateLimit({
  windowMs: 15 * 60 * 1000, // 15 minutes
  max: 100, // 100 requests per IP per window
  message: 'Too many requests. Please try again later.'
});

app.use('/api', limiter);

Limitations of Basic Rate Limiting

- One policy applies to every route under the mounted path.

- Limited flexibility for diverse API endpoints.
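One way around both limitations is to give each route its own policy. The sketch below is dependency-free and uses a plain fixed-window counter, which is the general idea behind simple in-memory limiters and not express-rate-limit's actual implementation. The names `createFixedWindowLimiter`, `policies`, and `checkRequest`, as well as the routes and limits, are hypothetical.

```javascript
// Fixed-window counter: each key gets `max` requests per `windowMs`.
// `now` is injectable so the behaviour can be checked without waiting.
function createFixedWindowLimiter({ windowMs, max, now = Date.now }) {
  const hits = new Map(); // key -> { count, windowStart }

  return function check(key) {
    const t = now();
    let entry = hits.get(key);
    // Start a fresh window on first sight or once the old window has elapsed
    if (!entry || t - entry.windowStart >= windowMs) {
      entry = { count: 0, windowStart: t };
      hits.set(key, entry);
    }
    entry.count += 1;
    return {
      allowed: entry.count <= max,
      remaining: Math.max(0, max - entry.count),
      resetMs: entry.windowStart + windowMs - t,
    };
  };
}

// Hypothetical per-route policies: strict for login, looser for reads.
const policies = {
  '/api/login': createFixedWindowLimiter({ windowMs: 60 * 1000, max: 5 }),
  '/api/items': createFixedWindowLimiter({ windowMs: 60 * 1000, max: 100 }),
};

// Returns the limiter's verdict for a route; routes without a policy are allowed.
function checkRequest(route, ip) {
  const limiter = policies[route];
  return limiter ? limiter(ip) : { allowed: true, remaining: Infinity, resetMs: 0 };
}
```

In an Express app, a middleware could call `checkRequest(req.path, req.ip)` and answer with HTTP 429 whenever `allowed` is false.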

To address these limitations, let's explore more advanced approaches.

3. Distributed Rate Limiting with Redis

In-memory rate limiting is insufficient for multi-server API deployments, because each server process keeps its own counters. Redis, a high-performance in-memory data store, provides a scalable solution for distributed rate limiting.
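A toy simulation makes the problem visible. In the sketch below (all names are hypothetical, and no real network or Redis is involved), two "servers" each enforce a limit of 3 with their own counters, so a client that alternates between them gets 6 requests through. Sharing one counter store, which is the role Redis plays, restores the intended limit.

```javascript
// A limiter that counts requests per key in whatever store it is given.
// (Window expiry is omitted to keep the focus on where the counters live.)
function makeLimiter(store, max) {
  return function allow(key) {
    const count = (store.get(key) || 0) + 1;
    store.set(key, count);
    return count <= max;
  };
}

// Sends `n` requests from one client, alternating between servers (round-robin).
function countAccepted(servers, n) {
  let accepted = 0;
  for (let i = 0; i < n; i++) {
    if (servers[i % servers.length]('client-1')) accepted += 1;
  }
  return accepted;
}

const MAX = 3;

// Per-process memory: every server has its own Map.
const isolated = [makeLimiter(new Map(), MAX), makeLimiter(new Map(), MAX)];

// Shared store: both servers read and write the same Map (a stand-in for Redis).
const sharedStore = new Map();
const shared = [makeLimiter(sharedStore, MAX), makeLimiter(sharedStore, MAX)];

console.log(countAccepted(isolated, 10)); // 6: each server allowed 3
console.log(countAccepted(shared, 10));   // 3: the limit holds across servers
```

With a real shared store the increment also has to be atomic, since several servers may update the same key at once.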

Installation

npm install ioredis rate-limiter-flexible

Redis-Based Rate Limiting

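const { RateLimiterRedis } = require('rate-limiter-flexible');
const Redis = require('ioredis');

// Connects to localhost:6379 by default
const redisClient = new Redis();

const rateLimiter = new RateLimiterRedis({
  storeClient: redisClient,
  keyPrefix: 'middleware',
  points: 100, // Requests allowed per key
  duration: 60, // Per 60-second window
  blockDuration: 300, // Block the key for 5 minutes once the limit is exceeded
});

app.use(async (req, res, next) => {
  try {
    await rateLimiter.consume(req.ip); // Consumes 1 point for this IP
    next();
  } catch (err) {
    // A store failure rejects with an Error; an exceeded limit rejects with a result object
    if (err instanceof Error) return next(err);
    res.set('Retry-After', String(Math.ceil(err.msBeforeNext / 1000)));
    res.status(429).send('Too many requests.');
  }
});

Benefits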

- Supports distributed architectures.

- Endpoint-specific customization, by creating a separate limiter with its own keyPrefix for each route.

4. Fine-Grained Control with API Gateways

API Gateways (e.g., AWS API Gateway, Kong, NGINX) offer infrastructure-level rate limiting:

- Per-API Key Limits: Differentiated limits for various user tiers.

- Regional Rate Limits: Geographic-based limit customization.

AWS API Gateway Example:

- Enable Usage Plans.

- Configure throttling limits and quotas.

- Assign API keys for user-specific limits.

5. Advanced Rate Limiting: The Token Bucket Algorithm

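The token bucket algorithm offers a flexible and efficient approach, allowing traffic bursts while maintaining average request limits. Each client has a bucket that holds up to a fixed number of tokens and refills at a steady rate; every request spends one token, and requests are rejected while the bucket is empty.

Implementation

// Minimal in-memory token bucket per client IP (illustrative values, no eviction of idle buckets).
// Register it before the routes of the Express app from section 1.
const CAPACITY = 10; // Maximum burst size
const REFILL_PER_SEC = 1; // Sustained rate: 1 request per second
const buckets = new Map(); // ip -> { tokens, last }

app.use((req, res, next) => {
  const now = Date.now();
  const bucket = buckets.get(req.ip) || { tokens: CAPACITY, last: now };
  // Refill for the elapsed time, capped at the bucket capacity
  const refill = ((now - bucket.last) / 1000) * REFILL_PER_SEC;
  bucket.tokens = Math.min(CAPACITY, bucket.tokens + refill);
  bucket.last = now;
  buckets.set(req.ip, bucket);
  if (bucket.tokens < 1) return res.status(429).send('Too many requests.');
  bucket.tokens -= 1; // Spend one token for this request
  next();
});

6. Monitoring and Alerting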

Effective rate limiting requires robust monitoring. Tools like Datadog or Prometheus track:

- Request rates.

- Rejected requests (HTTP 429).

- API performance metrics.
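As a rough illustration of what gets tracked, the sketch below keeps in-process counters for total and rejected requests and derives a rejection ratio. It is a stand-in, not a Datadog or Prometheus integration: `createRateLimitMetrics` and its method names are hypothetical, and a real setup would export these values through the monitoring tool's own client library.

```javascript
// In-process counters for rate-limit outcomes, grouped by route.
function createRateLimitMetrics() {
  const byRoute = new Map(); // route -> { total, rejected }

  return {
    // Call once per finished request with the response status code.
    record(route, statusCode) {
      const m = byRoute.get(route) || { total: 0, rejected: 0 };
      m.total += 1;
      if (statusCode === 429) m.rejected += 1;
      byRoute.set(route, m);
    },
    // Snapshot including the share of requests answered with HTTP 429.
    snapshot(route) {
      const m = byRoute.get(route) || { total: 0, rejected: 0 };
      const rejectionRatio = m.total === 0 ? 0 : m.rejected / m.total;
      return { ...m, rejectionRatio };
    },
  };
}

const metrics = createRateLimitMetrics();
[200, 200, 429, 200].forEach((status) => metrics.record('/api', status));
console.log(metrics.snapshot('/api')); // { total: 4, rejected: 1, rejectionRatio: 0.25 }
```

In Express, `record` could be called from a `res.on('finish', ...)` handler, where `res.statusCode` holds the final status. A rising rejection ratio on one route suggests either abuse or a limit that is set too low.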

7. Performance Comparison

| Strategy | Latency Overhead | Complexity | Scalability |
|---|---|---|---|
| In-Memory | Low | Simple | Limited |
| Redis-Based | Moderate | Moderate | High |
| API Gateway | Minimal | Complex | Very High |

Best Practices

- Utilize Redis or API Gateways for distributed environments.

- Implement tiered rate limits based on user plans.

- Provide clear error messages (including Retry-After headers).

- Continuously monitor and adjust limits based on traffic patterns.
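Two of these practices, tiered limits and Retry-After headers, can be sketched without any library. The plan names, numbers, and function names below are hypothetical; the only fixed parts are the 429 status code and the `Retry-After` header, whose value here is a whole number of seconds.

```javascript
// Hypothetical plans: requests allowed per minute for each user tier.
const PLAN_LIMITS = { free: 60, pro: 600, enterprise: 6000 };

// Unknown or missing plans fall back to the most restrictive tier.
function limitForPlan(plan) {
  return Object.prototype.hasOwnProperty.call(PLAN_LIMITS, plan)
    ? PLAN_LIMITS[plan]
    : PLAN_LIMITS.free;
}

// Builds the pieces of a 429 response from the time left until the window resets.
function buildRejection(plan, msUntilReset) {
  // Round up so the client never retries too early; report at least 1 second
  const retryAfterSeconds = Math.max(1, Math.ceil(msUntilReset / 1000));
  return {
    status: 429,
    headers: { 'Retry-After': String(retryAfterSeconds) },
    body: {
      error: 'Too many requests',
      message: `Limit of ${limitForPlan(plan)} requests per minute reached. Retry in ${retryAfterSeconds} s.`,
    },
  };
}

console.log(limitForPlan('pro'));                   // 600
console.log(limitForPlan('unknown'));               // 60
console.log(buildRejection('free', 12300).headers); // { 'Retry-After': '13' }
```

In an Express handler, a result `r` from `buildRejection` maps to `res.status(r.status).set(r.headers).json(r.body)`.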

Conclusion

Effective API rate limiting is crucial for maintaining the performance, security, and reliability of your Node.js applications. By leveraging tools like Redis, implementing sophisticated algorithms, and employing thorough monitoring, you can build scalable and resilient APIs.

The above is the detailed content of API Rate Limiting in Node.js: Strategies and Best Practices. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1359

1359

52

52

Replace String Characters in JavaScript

Mar 11, 2025 am 12:07 AM

Replace String Characters in JavaScript

Mar 11, 2025 am 12:07 AM

Detailed explanation of JavaScript string replacement method and FAQ This article will explore two ways to replace string characters in JavaScript: internal JavaScript code and internal HTML for web pages. Replace string inside JavaScript code The most direct way is to use the replace() method: str = str.replace("find","replace"); This method replaces only the first match. To replace all matches, use a regular expression and add the global flag g: str = str.replace(/fi

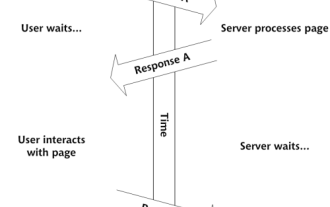

Build Your Own AJAX Web Applications

Mar 09, 2025 am 12:11 AM

Build Your Own AJAX Web Applications

Mar 09, 2025 am 12:11 AM

So here you are, ready to learn all about this thing called AJAX. But, what exactly is it? The term AJAX refers to a loose grouping of technologies that are used to create dynamic, interactive web content. The term AJAX, originally coined by Jesse J

How do I create and publish my own JavaScript libraries?

Mar 18, 2025 pm 03:12 PM

How do I create and publish my own JavaScript libraries?

Mar 18, 2025 pm 03:12 PM

Article discusses creating, publishing, and maintaining JavaScript libraries, focusing on planning, development, testing, documentation, and promotion strategies.

How do I optimize JavaScript code for performance in the browser?

Mar 18, 2025 pm 03:14 PM

How do I optimize JavaScript code for performance in the browser?

Mar 18, 2025 pm 03:14 PM

The article discusses strategies for optimizing JavaScript performance in browsers, focusing on reducing execution time and minimizing impact on page load speed.

How do I debug JavaScript code effectively using browser developer tools?

Mar 18, 2025 pm 03:16 PM

How do I debug JavaScript code effectively using browser developer tools?

Mar 18, 2025 pm 03:16 PM

The article discusses effective JavaScript debugging using browser developer tools, focusing on setting breakpoints, using the console, and analyzing performance.

jQuery Matrix Effects

Mar 10, 2025 am 12:52 AM

jQuery Matrix Effects

Mar 10, 2025 am 12:52 AM

Bring matrix movie effects to your page! This is a cool jQuery plugin based on the famous movie "The Matrix". The plugin simulates the classic green character effects in the movie, and just select a picture and the plugin will convert it into a matrix-style picture filled with numeric characters. Come and try it, it's very interesting! How it works The plugin loads the image onto the canvas and reads the pixel and color values: data = ctx.getImageData(x, y, settings.grainSize, settings.grainSize).data The plugin cleverly reads the rectangular area of the picture and uses jQuery to calculate the average color of each area. Then, use

How to Build a Simple jQuery Slider

Mar 11, 2025 am 12:19 AM

How to Build a Simple jQuery Slider

Mar 11, 2025 am 12:19 AM

This article will guide you to create a simple picture carousel using the jQuery library. We will use the bxSlider library, which is built on jQuery and provides many configuration options to set up the carousel. Nowadays, picture carousel has become a must-have feature on the website - one picture is better than a thousand words! After deciding to use the picture carousel, the next question is how to create it. First, you need to collect high-quality, high-resolution pictures. Next, you need to create a picture carousel using HTML and some JavaScript code. There are many libraries on the web that can help you create carousels in different ways. We will use the open source bxSlider library. The bxSlider library supports responsive design, so the carousel built with this library can be adapted to any

How to Upload and Download CSV Files With Angular

Mar 10, 2025 am 01:01 AM

How to Upload and Download CSV Files With Angular

Mar 10, 2025 am 01:01 AM

Data sets are extremely essential in building API models and various business processes. This is why importing and exporting CSV is an often-needed functionality.In this tutorial, you will learn how to download and import a CSV file within an Angular