(Ampere Computing article series: Accelerating the Cloud, Part 1)

Traditionally, deploying web applications has meant running large monolithic applications on x86-based servers in a company's enterprise data center. Migrating applications to the cloud eliminates the need to over-provision the data center, because cloud resources can be allocated based on real-time demand. At the same time, moving to the cloud also means a transition to componentized applications (also known as microservices). This approach allows applications to scale easily to tens of thousands or even millions of users.

With a cloud-native approach, applications run entirely in the cloud and take advantage of its unique capabilities. For example, with a distributed architecture, developers can scale seamlessly by creating more instances of an application component rather than running an ever-larger application, much like adding another application server without having to add another database. Many large organizations (such as Netflix and Wikipedia) have taken distributed architecture to the next level by breaking applications into standalone microservices. This simplifies design, deployment, and load balancing at scale. For more details on decomposing monolithic applications, see The Phoenix Project, and for best practices in developing cloud-native applications, see The Twelve-Factor App.

The traditional x86 server is built on a general-purpose architecture developed mainly for personal computing platforms, where users need to run many different types of desktop applications at the same time on a single CPU. To provide this flexibility, the x86 architecture implements advanced features and capacity that are useful for desktop applications but that many cloud applications do not need. Companies running applications on an x86-based cloud still pay for these features, even when they don't use them.

To improve utilization, x86 processors use hyperthreading to let one core run two threads. While hyperthreading makes fuller use of a core's capacity, it also lets one thread affect the performance of the other when the core's resources are oversubscribed. Specifically, whenever the two threads compete for the same resource, the operation incurs significant and unpredictable latency. It is hard to optimize an application when you don't know, and cannot control, which other application it will share a core with. Hyperthreading is a bit like trying to pay bills and watch a sports game at the same time. The bills take longer to finish, and you don't really enjoy the game. It is better to separate and isolate the tasks, either by finishing the bills first and then focusing on the game, or by splitting the tasks between two people (one of whom is not a football fan).

Hyperthreading also expands an application's attack surface, because the application running on the other thread may be malware attempting a side-channel attack. Keeping applications on different threads isolated from each other introduces overhead and additional latency at the processor level.

To improve efficiency and simplify design, developers need cloud resources designed to process their specific data efficiently (not everyone else's data). To this end, an efficient cloud-native platform accelerates the types of operations that are typical of cloud-native applications. To improve overall performance, cloud-native processors provide more cores that are optimized for executing microservices, rather than larger cores that need hyperthreading to run increasingly complex desktop applications. This results in more consistent and deterministic latency, supports transparent scaling, and avoids many of the security issues posed by hyperthreading, because applications are naturally isolated when each runs on its own core.

To accelerate cloud-native applications, Ampere has developed the Altra and Altra Max 64-bit cloud-native processors. With up to 128 cores on a single IC, they offer unprecedented density: a single 1U chassis with two sockets can accommodate up to 256 cores.
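The density figures quoted above reduce to simple arithmetic. The short Python sketch below works through it. The per-socket and per-chassis numbers come from the text, while the rack-level figure rests on a hypothetical count of 1U slots (`RACK_UNITS_AVAILABLE`) chosen purely for illustration; real racks are usually limited by power and cooling well before they run out of physical space.

```python
CORES_PER_SOCKET = 128     # maximum core count quoted in the text
SOCKETS_PER_CHASSIS = 2    # dual-socket 1U chassis, as described in the text
RACK_UNITS_AVAILABLE = 40  # ASSUMPTION: hypothetical rack space left for 1U servers


def cores_per_chassis(cores_per_socket: int, sockets: int) -> int:
    """Total cores in one chassis."""
    return cores_per_socket * sockets


def cores_per_rack(chassis_cores: int, rack_units: int) -> int:
    """Total cores if every available rack unit holds one 1U chassis."""
    return chassis_cores * rack_units


chassis_cores = cores_per_chassis(CORES_PER_SOCKET, SOCKETS_PER_CHASSIS)
rack_cores = cores_per_rack(chassis_cores, RACK_UNITS_AVAILABLE)

print(f"cores per 1U chassis: {chassis_cores}")
print(f"cores per rack ({RACK_UNITS_AVAILABLE} x 1U, hypothetical): {rack_cores}")
```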

Ampere Altra and Ampere Altra Max cores are designed around the Arm Instruction Set Architecture (ISA). Whereas the x86 architecture was originally designed for general-purpose desktops, Arm grew out of a tradition of embedded applications, where deterministic behavior and power efficiency matter more. Building on this foundation, Ampere processors are designed for applications in which power consumption and core density are important design considerations. Overall, Ampere processors provide an extremely efficient foundation for many cloud-native applications, enabling high performance, predictable and consistent responsiveness, and higher power efficiency.

For developers, the fact that Ampere processors implement the Arm ISA means that an extensive ecosystem of software and tooling is already available for development. In the second part of this series, we will cover how developers can seamlessly migrate existing applications to the Ampere cloud-native platforms offered by leading cloud service providers (CSPs) and start accelerating their cloud operations right away.

A key advantage of running on a cloud-native platform is lower latency, which leads to more consistent and predictable performance. For example, the microservice approach is fundamentally different from today's monolithic cloud applications. It is therefore not surprising that optimizing for quality of service and utilization efficiency also requires a fundamentally different approach.

Microservices break large tasks down into smaller components. Because each microservice can be specialized, it can be more efficient, for example by achieving higher cache utilization between operations than a more general monolithic application that tries to perform every necessary task. However, even though microservices typically use fewer compute resources per component, the latency requirements at each layer are much stricter than for a typical cloud application. In other words, each microservice gets only a small share of the latency budget available to the application as a whole.

Predictable and consistent latency is critical from an optimization perspective because, when the responsiveness of each microservice varies as much as it does on a hyperthreaded x86 architecture, the worst-case latency of the application is the sum of the worst cases of all its microservices combined. The good news is that this also means even small improvements in the latency of individual microservices can add up to a significant improvement overall.
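The way per-service worst cases accumulate can be illustrated with a small Python sketch. It assumes a request that passes through its microservices one after another (a serial call chain), so latencies simply add up. The service names, the latency values, and the 20 ms budget are all hypothetical numbers chosen for illustration, not measurements from any platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceLatency:
    name: str
    typical_ms: float     # latency on an uncontended core (hypothetical value)
    worst_case_ms: float  # latency under resource contention (hypothetical value)


def chain_latency(services):
    """Return (typical, worst-case) end-to-end latency for a serial call chain."""
    typical = sum(s.typical_ms for s in services)
    worst = sum(s.worst_case_ms for s in services)
    return typical, worst


# Hypothetical request path whose services show large latency variation.
jittery = [
    ServiceLatency("gateway", 2.0, 6.0),
    ServiceLatency("auth", 3.0, 9.0),
    ServiceLatency("catalog", 5.0, 15.0),
    ServiceLatency("pricing", 4.0, 12.0),
]

# The same services, assuming the worst case stays within 25% of typical.
steady = [ServiceLatency(s.name, s.typical_ms, s.typical_ms * 1.25) for s in jittery]

BUDGET_MS = 20.0  # hypothetical end-to-end latency budget

for label, chain in (("jittery", jittery), ("steady", steady)):
    typical, worst = chain_latency(chain)
    print(f"{label}: typical={typical:.1f} ms, worst case={worst:.1f} ms, "
          f"within budget={worst <= BUDGET_MS}")
```

Both chains have the same typical latency, but only the one with tightly bounded worst cases stays inside the budget, which is why consistency per service matters as much as average speed.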

Figure 1 illustrates the performance advantages of running a typical cloud application on a cloud-native platform such as Ampere Altra Max compared with Intel Ice Lake and AMD Milan. Ampere Altra Max provides not only higher performance but also higher performance per watt. The figure also shows that Ampere Altra Max has an edge over Intel Ice Lake (13%) in delivering the stable performance that cloud-native applications require.

Figure 1: Cloud-native platforms such as Ampere Altra Max provide superior performance, power efficiency, and latency compared to Intel Ice Lake and AMD Milan.

Even though CSPs are the ones who manage the power consumption of their data centers, many developers are aware that the public and company stakeholders are increasingly concerned about how companies address sustainability. In 2022, cloud data centers were estimated to account for 80% of total data center power consumption [1]. According to 2019 data, data center power consumption is expected to double by 2030.

Sustainability is clearly crucial to the long-term growth of the cloud, and the cloud industry must start adopting more energy-efficient technologies. Reducing power consumption also reduces operating costs. In any case, companies that take the lead in reducing their carbon footprint today will be prepared when such measures become mandatory.

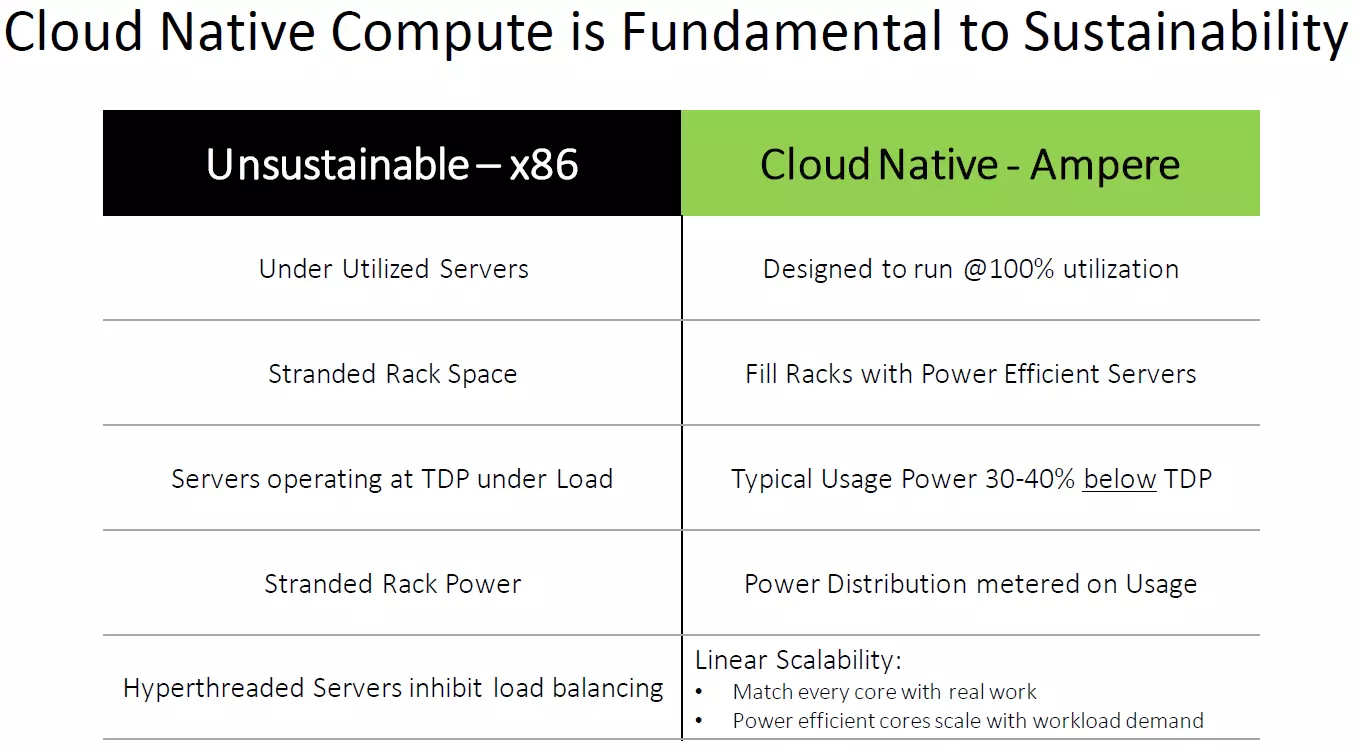

Table 1: Advantages of cloud-native processing using Ampere cloud-native platform compared to traditional x86 clouds.

Cloud-native technologies such as Ampere's allow CSPs to keep increasing the compute density of their data centers (see Table 1). At the same time, cloud-native platforms offer compelling performance, price, and power benefits, allowing developers to reduce day-to-day operating costs while improving performance.

In the second part of this series, we will detail what you need to redeploy existing applications to a cloud-native platform and speed up your operations.

Please check out the Ampere Computing Developer Center for more relevant content and the latest news.
