Introducing the Natural Language Toolkit (NLTK)

Natural language processing (NLP) is the automatic or semi-automatic processing of human language. NLP is closely related to linguistics and has links to research in cognitive science, psychology, physiology, and mathematics. In the computer science domain in particular, NLP is related to compiler techniques, formal language theory, human-computer interaction, machine learning, and theorem proving. This Quora question shows the different advantages of NLP.

In this tutorial I'm going to walk you through an interesting Python platform for NLP called the Natural Language Toolkit (NLTK). Before we see how to work with this platform, let me first tell you what NLTK is.

What Is NLTK?

The Natural Language Toolkit (NLTK) is a platform used for building programs for text analysis. The platform was originally released by Steven Bird and Edward Loper in conjunction with a computational linguistics course at the University of Pennsylvania in 2001. There is an accompanying book for the platform called Natural Language Processing with Python.

Installing NLTK

Let's now install NLTK to start experimenting with natural language processing. It will be fun!

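Installing NLTK is very simple. I'm using Windows 10, so I install it from my Command Prompt, typically with pip install nltk. Once NLTK is installed, we can start with tokenization: splitting a text into sentences with the sent_tokenize() method, or into words with the word_tokenize() method.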

Consider the following text.

"Python is a very high-level programming language. Python is interpreted."

Let's pass this text through the word_tokenize() method, which splits it into individual words.

from nltk.tokenize import word_tokenize

text = "Python is a very high-level programming language. Python is interpreted."
print(word_tokenize(text))

Here is the output:

['Python', 'is', 'a', 'very', 'high-level', 'programming', 'language', '.', 'Python', 'is', 'interpreted', '.']

As you can see from the output, punctuation marks are also considered to be words.
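If the punctuation tokens are not wanted, they can be filtered out after tokenizing. The following is a minimal sketch in plain Python, not an NLTK feature: the helper name drop_punctuation is hypothetical, and the token list is copied from the output above rather than computed.

```python
# Tokens copied from the word_tokenize() output shown above.
tokens = ['Python', 'is', 'a', 'very', 'high-level', 'programming',
          'language', '.', 'Python', 'is', 'interpreted', '.']


def drop_punctuation(tokens):
    """Keep only tokens that contain at least one letter or digit."""
    return [token for token in tokens if any(ch.isalnum() for ch in token)]


words_only = drop_punctuation(tokens)
print(words_only)
```

Checking for at least one alphanumeric character, instead of comparing against a fixed list of punctuation marks, keeps hyphenated words such as high-level intact.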

Stop Words

Sometimes we need to filter out words that carry little information so that the text is easier for the computer to process. In natural language processing (NLP), such words are called stop words. They contribute little to the meaning of a text, so we would like to remove them.

NLTK provides us with some stop words to start with. To see those words, use the following script:

from nltk.corpus import stopwords

# The language name is given in lowercase
print(set(stopwords.words('english')))

Running the script prints the English stop words that ship with NLTK.

Here we printed a set (an unordered collection of items) of English stop words. If you are working with another language, for example German, pass its name instead:

from nltk.corpus import stopwords

print(set(stopwords.words('german')))

How can we remove the stop words from our own text? The example below shows how we can perform this task:

from nltk.corpus import stopwords
from nltk.tokenize import word_tokenize

text = 'In this tutorial, I\'m learning NLTK. It is an interesting platform.'
stop_words = set(stopwords.words('english'))
words = word_tokenize(text)

# Keep only the tokens that are not in the stop word set
new_sentence = []

for word in words:
    if word not in stop_words:
        new_sentence.append(word)

print(new_sentence)

The script prints the list of tokens that remain after the stop words have been filtered out.

So what the word_tokenize() function does is:

Tokenize a string to split off punctuation other than periods
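One detail of the stop word script above is worth noting: the check word not in stop_words is case-sensitive, and the stop words NLTK provides are lowercase, so capitalized tokens such as In and It are not filtered out. The sketch below shows a case-insensitive variant in plain Python. The function name remove_stop_words is hypothetical, the stop word set is a small hand-picked stand-in for stopwords.words('english'), and the token list is what word_tokenize() is assumed to produce for the sample sentence.

```python
def remove_stop_words(tokens, stop_words):
    """Return the tokens whose lowercase form is not a stop word."""
    return [token for token in tokens if token.lower() not in stop_words]


# Assumed tokenization of the sample sentence (not computed here).
tokens = ['In', 'this', 'tutorial', ',', 'I', "'m", 'learning', 'NLTK', '.',
          'It', 'is', 'an', 'interesting', 'platform', '.']

# Hand-picked stand-in for set(stopwords.words('english')).
sample_stop_words = {'in', 'this', 'i', 'it', 'is', 'an'}

filtered = remove_stop_words(tokens, sample_stop_words)
print(filtered)
```

In a real script, the set built from stopwords.words('english') would be passed in place of sample_stop_words.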

Searching

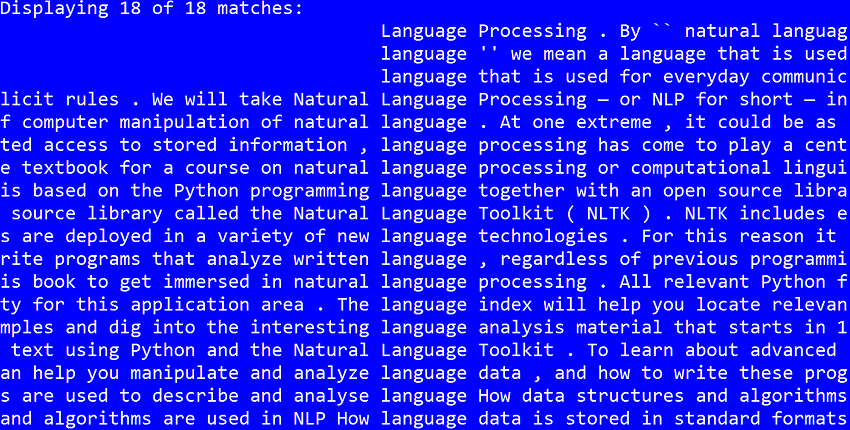

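Let's say we have the following text file (download the text file from Dropbox). We would like to search for the word language. We can do this with NLTK as follows:

import nltk

# NLTK.txt is a placeholder name for the downloaded text file
with open('NLTK.txt', 'r') as file:
    read_file = file.read()

# Tokenize the file contents and wrap the tokens in an nltk.Text object
text = nltk.Text(nltk.word_tokenize(read_file))
text.concordance('language')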

In which case you will get the following output:

Notice that concordance() prints every occurrence of the word language, along with some surrounding context. Before that, as shown in the script above, we tokenize the contents of the file and then convert them into an nltk.Text object.
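To make the idea of a concordance concrete, here is a minimal keyword-in-context sketch in plain Python. It is not NLTK's implementation: the function name keyword_in_context and the window parameter are hypothetical, and the tokens are the ones from the tokenization example earlier in this tutorial.

```python
def keyword_in_context(tokens, keyword, window=3):
    """Return (left, match, right) tuples for each occurrence of keyword.

    Matching ignores case; window is the number of tokens kept on each side.
    """
    keyword = keyword.lower()
    hits = []
    for i, token in enumerate(tokens):
        if token.lower() == keyword:
            left = ' '.join(tokens[max(0, i - window):i])
            right = ' '.join(tokens[i + 1:i + 1 + window])
            hits.append((left, token, right))
    return hits


tokens = ['Python', 'is', 'a', 'very', 'high-level', 'programming',
          'language', '.', 'Python', 'is', 'interpreted', '.']

for left, match, right in keyword_in_context(tokens, 'language'):
    # Right-align the left context so the matches line up in a column.
    print(f'{left:>30} {match} {right}')
```

Each hit keeps a few tokens on either side of the match, which is the same kind of context that concordance() displays.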

I just want to note that the first time I ran the program, I got an error that seems to be related to the encoding the console uses.

What I did to solve this issue was to run this command in my console before running the program: chcp 65001.
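If changing the console code page is not an option, a possible workaround on the Python side is to replace the characters the console cannot display before printing. This is only a sketch, and it assumes the error comes from printing characters that the console encoding cannot represent. The helper name safe_for_console is hypothetical.

```python
import sys


def safe_for_console(text, encoding):
    """Replace characters the given encoding cannot represent with '?'."""
    return text.encode(encoding, errors='replace').decode(encoding)


sample = 'caf\u00e9 na\u00efve'

# An ASCII-only console cannot show the accented letters.
print(safe_for_console(sample, 'ascii'))

# UTF-8 can represent every character, so the text is unchanged.
print(safe_for_console(sample, 'utf-8') == sample)

# In practice, use the encoding of the current console (fall back to UTF-8).
console_encoding = getattr(sys.stdout, 'encoding', None) or 'utf-8'
print(safe_for_console(sample, console_encoding))
```

The trade-off is that unsupported characters are lost in the output, whereas chcp 65001 lets the console display them.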

The Gutenberg Corpus

As mentioned in Wikipedia:

Project Gutenberg (PG) is a volunteer effort to digitize and archive cultural works, to "encourage the creation and distribution of eBooks". It was founded in 1971 by Michael S. Hart and is the oldest digital library. Most of the items in its collection are the full texts of public domain books. The project tries to make these as free as possible, in long-lasting, open formats that can be used on almost any computer. As of 3 October 2015, Project Gutenberg reached 50,000 items in its collection.

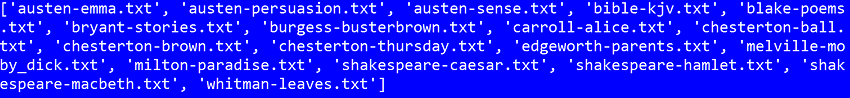

NLTK contains a small selection of texts from Project Gutenberg. To see the included files from Project Gutenberg, we do the following:

from nltk.corpus import gutenberg

# List the Project Gutenberg files that ship with NLTK
print(gutenberg.fileids())

The output of the above script will be as follows:

If we want to find the number of words for the text file bryant-stories.txt for instance, we can do the following:

from nltk.corpus import gutenberg

# Count the word tokens in one of the included files
words = gutenberg.words('bryant-stories.txt')
print(len(words))

The above script should return the following number of words: 55563.
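For a rough idea of what such a count involves, the sketch below counts words in a raw string using only the standard library. It is an illustration, not the way NLTK counts: the function name count_words and the regular expression are assumptions, and the totals it gives will differ from NLTK's, since this sketch skips punctuation and keeps hyphenated words together.

```python
import re
from collections import Counter


def count_words(raw_text):
    """Return the total number of words and a per-word frequency table."""
    # A word is a run of word characters, optionally joined by hyphens.
    words = re.findall(r"\w+(?:-\w+)*", raw_text.lower())
    return len(words), Counter(words)


sample = "Python is a very high-level programming language. Python is interpreted."
total, frequencies = count_words(sample)

print(total)
print(frequencies.most_common(2))
```

The frequency table gives a quick view of which words dominate a text, which is a common next step after counting them.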

Conclusion

As we have seen in this tutorial, the NLTK platform provides us with a powerful tool for working with natural language processing (NLP). I have only scratched the surface in this tutorial. If you would like to go deeper into using NLTK for different NLP tasks, you can refer to NLTK's accompanying book: Natural Language Processing with Python.

This post has been updated with contributions from Esther Vaati. Esther is a software developer and writer for Envato Tuts+.
