DeepChecks Tutorial: Automating Machine Learning Testing

This tutorial explores DeepChecks for data validation and machine learning model testing, and leverages GitHub Actions for automated testing and artifact creation. We'll cover machine learning testing principles, DeepChecks functionality, and a complete automated workflow.

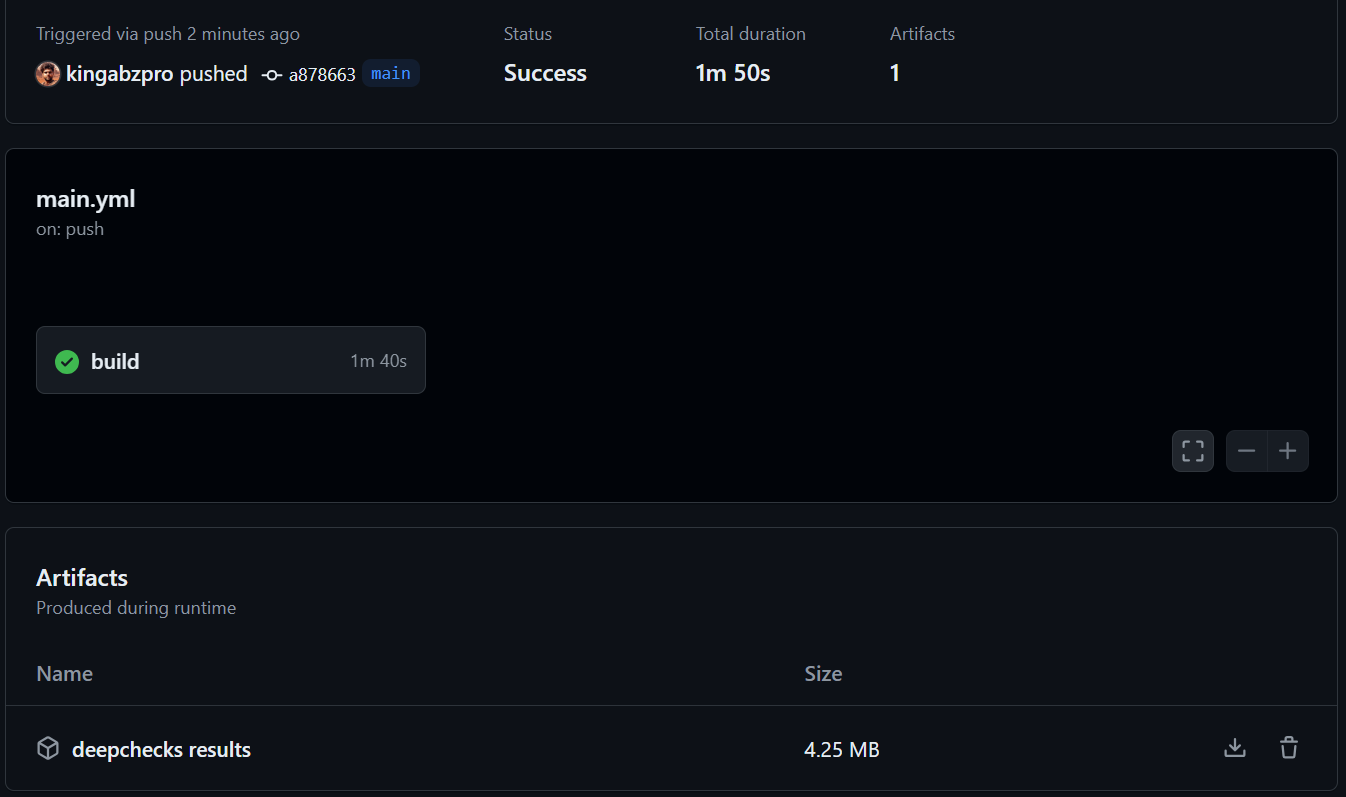

Image by Author

Understanding Machine Learning Testing

Effective machine learning requires rigorous testing that goes beyond a single accuracy score. A model should also be assessed for fairness, robustness, and alignment with AI ethics, which means checking for bias, examining false positives and false negatives, and measuring performance metrics and throughput. Common techniques include data validation, cross-validation, F1-score calculation, confusion matrix analysis, and drift detection for both data and predictions. Splitting the data into training, validation, and test sets is crucial for reliable model evaluation, and automating the whole process is key to building dependable AI systems.
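To make two of the metrics above concrete, here is a minimal sketch in plain Python that builds binary confusion-matrix counts and derives the F1-score from them. The function names and the toy labels are illustrative assumptions and are not part of DeepChecks; in practice a library such as scikit-learn would typically provide these metrics.

```python
from collections import Counter


def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, FP, FN and TN for a binary classification task."""
    counts = Counter()
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            counts["tp" if actual == positive else "fp"] += 1
        else:
            counts["fn" if actual == positive else "tn"] += 1
    return counts


def f1_score(counts):
    """F1 is the harmonic mean of precision and recall."""
    tp, fp, fn = counts["tp"], counts["fp"], counts["fn"]
    if tp == 0:
        return 0.0  # without true positives the score is defined as 0 here
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Toy labels (illustrative only): 1 = loan not fully paid, 0 = fully paid
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

counts = confusion_counts(y_true, y_pred)
print(dict(counts))       # 3 TP, 3 TN, 1 FN, 1 FP
print(f1_score(counts))   # 0.75
```

Looking at the four counts separately shows where the errors come from, which a single accuracy number hides.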

For beginners, the Machine Learning Fundamentals with Python skill track provides a solid foundation.

DeepChecks, an open-source Python library, simplifies comprehensive machine learning testing. It offers built-in checks for model performance, data integrity, and distribution, supporting continuous validation for reliable model deployment.

Getting Started with DeepChecks

Install DeepChecks using pip:

pip install deepchecks --upgrade -q

Data Loading and Preparation (Loan Dataset)

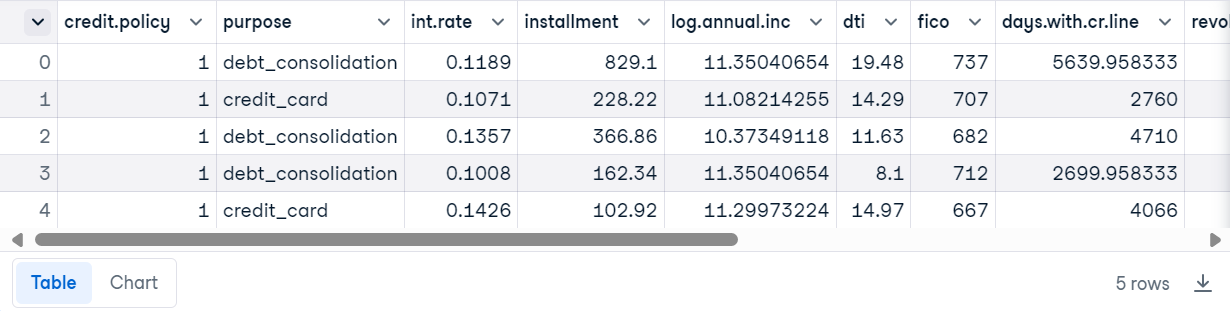

We'll use the Loan Data dataset from DataCamp.

import pandas as pd
loan_data = pd.read_csv("loan_data.csv")
loan_data.head()

Create a DeepChecks dataset:

from sklearn.model_selection import train_test_split
from deepchecks.tabular import Dataset
label_col = 'not.fully.paid'
deep_loan_data = Dataset(loan_data, label=label_col, cat_features=["purpose"])

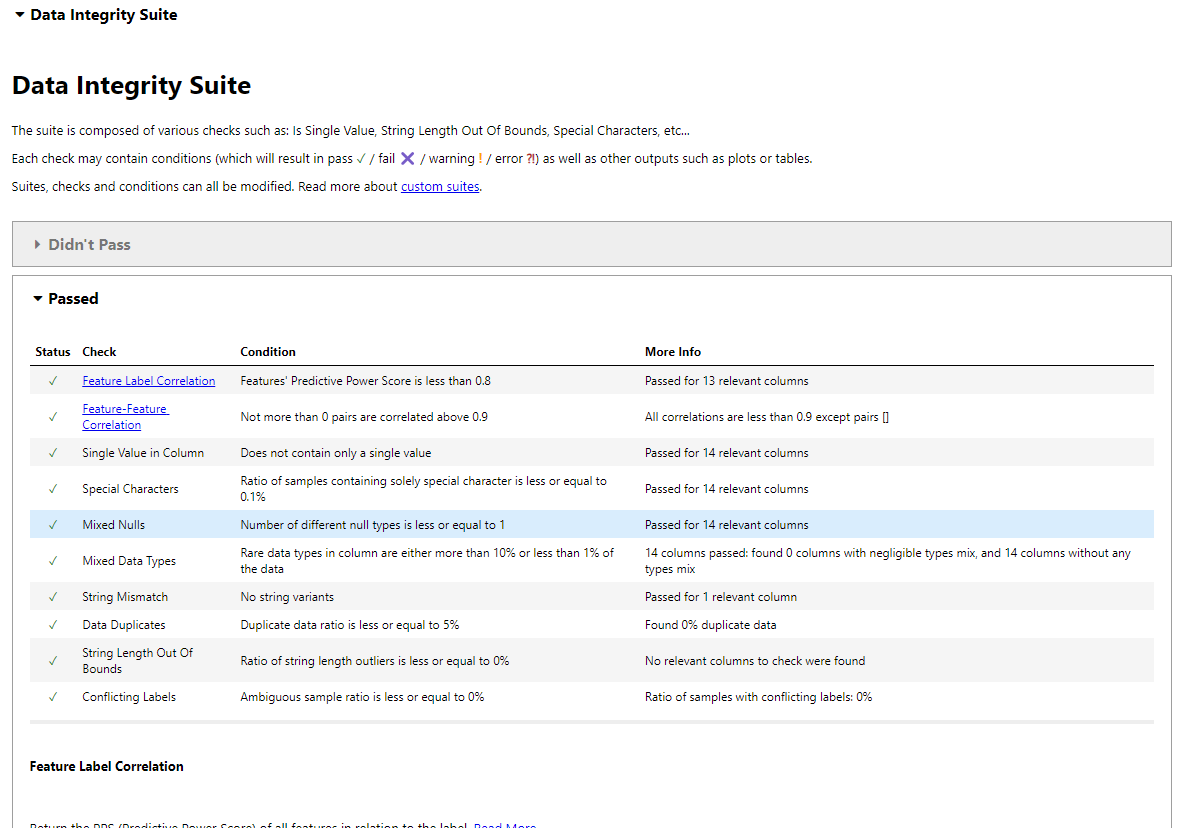

Data Integrity Testing

DeepChecks' data integrity suite performs automated checks.

from deepchecks.tabular.suites import data_integrity
integ_suite = data_integrity()
suite_result = integ_suite.run(deep_loan_data)
suite_result.show_in_iframe()  # Use show_in_iframe for DataLab compatibility

This generates a report covering: Feature-Label Correlation, Feature-Feature Correlation, Single Value Checks, Special Character Detection, Null Value Analysis, Data Type Consistency, String Mismatches, Duplicate Detection, String Length Validation, Conflicting Labels, and Outlier Detection.

Save the report:

suite_result.save_as_html()
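In an automated setting it often helps to give the report a fixed file name and to turn the suite outcome into a pass/fail signal. The sketch below assumes a recent DeepChecks version in which the suite result exposes `save_as_html(file=...)` and `passed()`; the helper name `save_report_and_check` and the default file name are hypothetical.

```python
from pathlib import Path


def save_report_and_check(suite_result, report_path="data_integrity.html"):
    """Save the suite report to a fixed path and return the pass/fail status.

    `suite_result` is expected to be the object returned by `suite.run(...)`.
    """
    report_path = Path(report_path)
    # Make sure the target folder exists before writing the report
    report_path.parent.mkdir(parents=True, exist_ok=True)
    # Passing a file name avoids relying on the library's default output name
    suite_result.save_as_html(file=str(report_path))
    # passed() is assumed to report whether the suite's conditions were met
    return suite_result.passed()
```

A script could then exit with a non-zero status when the helper returns `False`, so that a failing data check is visible to whatever runs the script.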

Individual Test Execution

For efficiency, run individual tests:

from deepchecks.tabular.checks import IsSingleValue, DataDuplicates
result = IsSingleValue().run(deep_loan_data)
print(result.value)  # Unique value counts per column
result = DataDuplicates().run(deep_loan_data)
print(result.value)  # Duplicate sample count

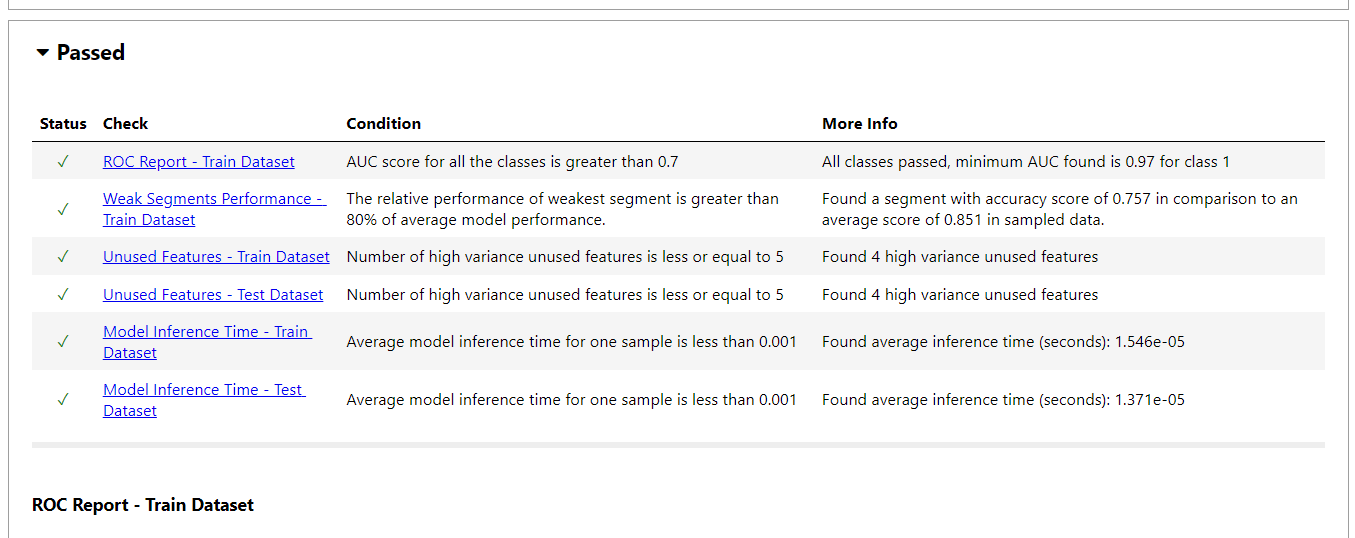

Model Evaluation with DeepChecks

We'll train an ensemble model (Logistic Regression, Random Forest, Gaussian Naive Bayes) and evaluate it using DeepChecks.


The model evaluation report includes: ROC curves, weak segment performance, unused feature detection, train-test performance comparison, prediction drift analysis, simple model comparisons, model inference time, confusion matrices, and more.
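The training code is not shown above, so the following is only a sketch of how such an ensemble could be built and passed to the model evaluation suite. It assumes scikit-learn's `VotingClassifier` with soft voting (so that class probabilities are available), integer-encodes the `purpose` column, and uses hypothetical helper names, split sizes, and hyperparameters; the author's actual code may differ.

```python
from sklearn.ensemble import RandomForestClassifier, VotingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB


def build_ensemble(random_state=1):
    """Voting ensemble of the three model types named in the text."""
    return VotingClassifier(
        estimators=[
            ("lr", LogisticRegression(max_iter=10000, random_state=random_state)),
            ("rf", RandomForestClassifier(n_estimators=50, random_state=random_state)),
            ("gnb", GaussianNB()),
        ],
        voting="soft",  # assumption: soft voting exposes predict_proba
    )


def evaluate_model(loan_data, label_col="not.fully.paid", cat_col="purpose"):
    """Train the ensemble and run the DeepChecks model evaluation suite."""
    # Imported here so build_ensemble stays usable without DeepChecks installed
    from deepchecks.tabular import Dataset
    from deepchecks.tabular.suites import model_evaluation

    data = loan_data.copy()
    # scikit-learn estimators need numeric input, so encode the text column
    data[cat_col] = data[cat_col].astype("category").cat.codes

    train_df, test_df = train_test_split(
        data, test_size=0.25, stratify=data[label_col], random_state=0
    )
    model = build_ensemble()
    model.fit(train_df.drop(columns=[label_col]), train_df[label_col])

    train_ds = Dataset(train_df, label=label_col, cat_features=[cat_col])
    test_ds = Dataset(test_df, label=label_col, cat_features=[cat_col])
    return model_evaluation().run(train_ds, test_ds, model)
```

Calling `evaluate_model(loan_data).show_in_iframe()` would then render the report in the same way as the data integrity suite.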

The suite result can also be exported as JSON output for programmatic use.

Individual tests can be run here as well, for example Label Drift, which compares the label distribution of the training set with that of the test set.

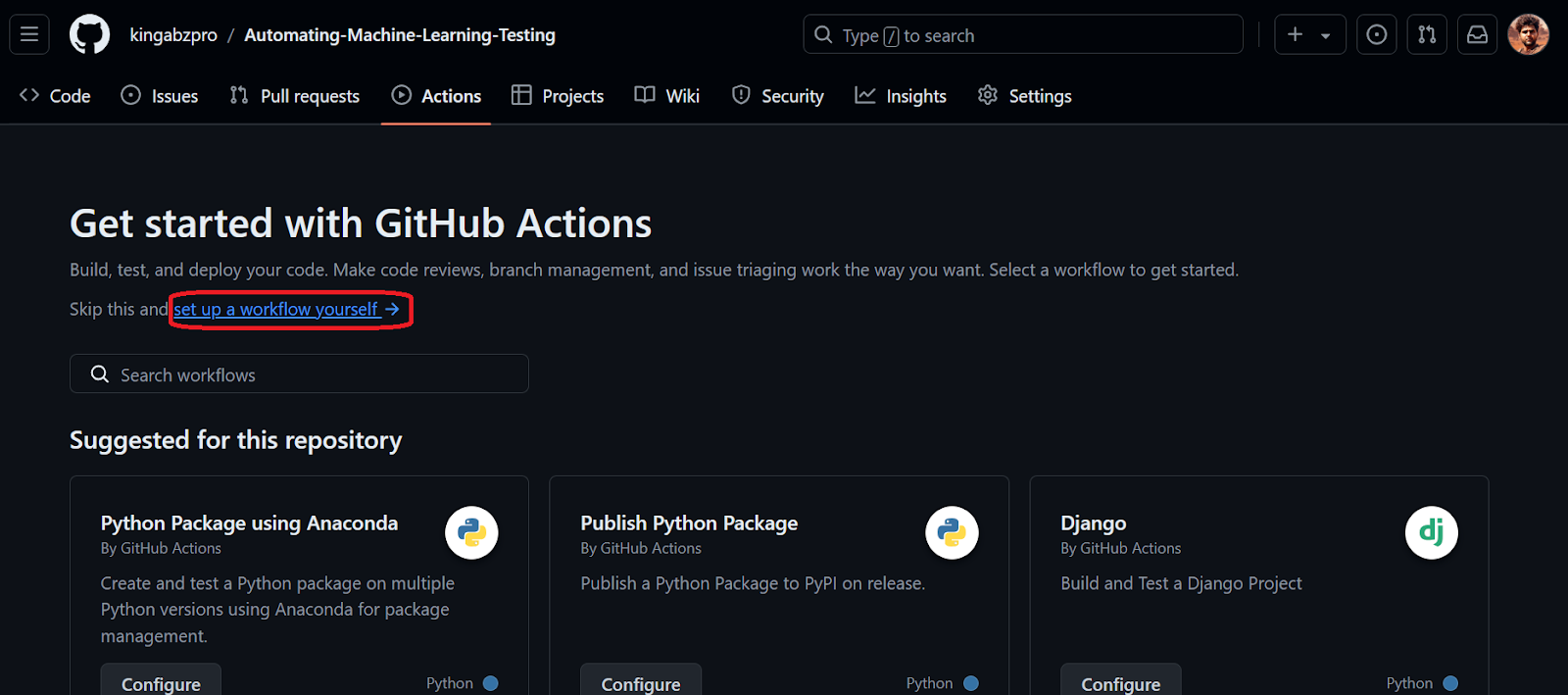
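The code for the JSON export and the Label Drift check is not shown, so here is a hedged sketch of both. It assumes a recent DeepChecks version where the suite result offers `to_json()` and where the drift check is exposed as `LabelDrift` in `deepchecks.tabular.checks` (older releases used a different name). `train_ds` and `test_ds` stand for the training and test `Dataset` objects, and both helper names are hypothetical.

```python
import json
from pathlib import Path


def save_suite_json(suite_result, path="model_evaluation.json"):
    """Write the suite result to disk as JSON and return the parsed content."""
    payload = suite_result.to_json()  # assumed to return a JSON string
    Path(path).write_text(payload, encoding="utf-8")
    return json.loads(payload)


def label_drift(train_ds, test_ds):
    """Run only the label drift check on a train/test Dataset pair."""
    # Imported lazily so the JSON helper works without DeepChecks installed
    from deepchecks.tabular.checks import LabelDrift

    result = LabelDrift().run(train_ds, test_ds)
    return result.value  # the check's raw result, including the drift score
```

Running a single check like this is much faster than the full suite, which makes it a reasonable fit for quick iterations.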

Automating with GitHub Actions

This section outlines a GitHub Actions workflow that automates data validation and model testing. The process involves creating a repository, adding the data and Python scripts (data_validation.py, train_validation.py), and configuring a workflow file (main.yml) that executes these scripts and saves the results as artifacts. Refer to the kingabzpro/Automating-Machine-Learning-Testing repository for a complete example. The workflow uses the actions/checkout, actions/setup-python, and actions/upload-artifact actions.
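As one possible shape for `data_validation.py`, the sketch below loads the CSV, runs the data integrity suite, and writes the HTML report into a folder that a later `actions/upload-artifact` step could pick up. The `results/` folder, the report file name, and the function names are assumptions for illustration and may not match the referenced repository.

```python
from pathlib import Path


def prepare_report_path(out_dir="results", filename="data_validation.html"):
    """Create the output folder if needed and return the report path."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    return out_dir / filename


def run_data_validation(csv_path="loan_data.csv", out_dir="results"):
    """Run the data integrity suite on the loan data and save an HTML report."""
    # Third-party imports are kept local so the path helper has no dependencies
    import pandas as pd
    from deepchecks.tabular import Dataset
    from deepchecks.tabular.suites import data_integrity

    loan_data = pd.read_csv(csv_path)
    dataset = Dataset(loan_data, label="not.fully.paid", cat_features=["purpose"])
    suite_result = data_integrity().run(dataset)

    report_path = prepare_report_path(out_dir)
    suite_result.save_as_html(str(report_path))
    return report_path
```

The script would end with a call to `run_data_validation()`, and the workflow would point its artifact upload step at the same output folder.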

Conclusion

Automating machine learning testing using DeepChecks and GitHub Actions significantly improves efficiency and reliability. Early detection of issues enhances model accuracy and fairness. This tutorial provides a practical guide to implementing this workflow, enabling developers to build more robust and trustworthy AI systems. Consider the Machine Learning Scientist with Python career track for further development in this field.
