What is Compute Labs? Compute Labs ecosystem and token economy full analysis

Compute Labs: Unlocking the Future of Artificial Intelligence Computing

Abstract: Compute Labs aims to change how people invest in artificial intelligence (AI). By combining real-world assets (RWA) with blockchain technology, it has built a decentralized ecosystem in which individual and institutional investors can invest in and use high-performance computing resources. This article covers the core functions, value proposition, security measures, and investment potential of Compute Labs.

Compute Labs Introduction:

Compute Labs is building a financial ecosystem that integrates AI and blockchain technologies. By tokenizing computing resources such as GPUs and developing computing derivatives, it creates new investment opportunities and addresses key challenges in the AI field. The platform runs on the Solana blockchain, with plans to expand to other blockchains such as NEAR to improve efficiency and interoperability.

Core team and investors:

Compute Labs' team has experience in finance and blockchain and is led by CEO Albert Zhang and CTO Xingfan Xia. The project has received investment from well-known institutions such as Protocol Labs and Symbolic Capital, which signals investor confidence in its development potential.

Compute Labs' Value Proposition:

Compute Labs' core value lies in its Computing Resource Tokenization Protocol (CTP). CTP converts physical GPUs into tradable digital assets (GNFTs), thereby improving liquidity and accessibility of computing resources. The AI-Fi ecosystem combines cutting-edge AI infrastructure with decentralized finance (DeFi) technology to provide users with innovative financial tools and investment products.

Detailed explanation of the ecosystem:

-

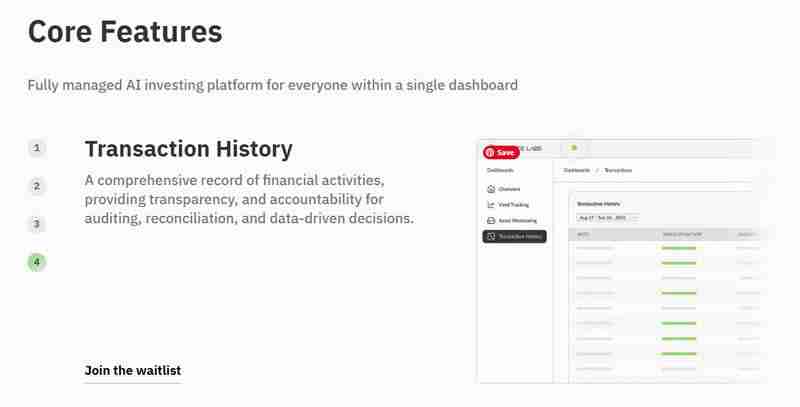

Compute Vaults (Compute Repository): Convert GPU resources to GNFTs, allowing users to hold and profit from AI computing power. The system provides functions such as information dashboard, income tracking, asset monitoring and transaction records.

-

AI-Fi Ecosystem: Connect AI computing resources and financial tools to enable investors to trade computing power like stocks. $AIFI tokens are at the heart of this ecosystem.

-

GNFT and COMPUTE Tokens: GNFT represents a single computing asset, while COMPUTE tokens are used for transactions within the ecosystem.

-

Computing derivatives: Financial instruments based on basic computing assets, such as GPU ETFs.

Application scenarios and market opportunities:

Compute Labs aims to solve the challenges faced by the AI market, including high costs, supply chain bottlenecks and market frictions. The solutions include:

- GPU tokenization for scalable AI investment: Gives investors a more convenient channel for investing in AI computing resources.

- Monetizing computing power to accelerate AI innovation: Gives AI companies a low-cost way to acquire computing resources.

- Maximizing AI computing revenue: Generates additional revenue for partners that operate AI data centers, through GPU hosting and the RWA asset repository.

Security Measures:

Compute Labs states that it is committed to platform security and has taken a number of measures, including data encryption, security audits and penetration testing, smart contract security, and a commitment to open source.

Investment Potential and Conclusion:

Compute Labs' model has considerable growth potential and could reshape how AI is invested in. However, investors still need to evaluate the risks carefully. Compute Labs recently completed a seed funding round, which supports its market prospects.

Compute Labs has brought new vitality to the AI field through its unique technology and business model and is expected to become an important force in promoting the development of AI.

The above is the detailed content of What is Compute Labs? Compute Labs ecosystem and token economy full analysis. For more information, please follow other related articles on the PHP Chinese website!
