Batch Normalization: Theory and TensorFlow Implementation

Deep neural network training often faces hurdles like vanishing/exploding gradients and internal covariate shift, slowing training and hindering learning. Normalization techniques offer a solution, with batch normalization (BN) being particularly prominent. BN accelerates convergence, improves stability, and enhances generalization in many deep learning architectures. This tutorial explains BN's mechanics, its mathematical underpinnings, and TensorFlow/Keras implementation.

Normalization in machine learning rescales input features to a common range or distribution, using methods such as min-max scaling, z-score normalization, and log transformations. This can reduce the influence of outliers, improve convergence, and make features comparable. Without it, features with larger numeric ranges can dominate the learning process and lead to suboptimal model performance; with it, each feature contributes on a similar scale and the model can identify meaningful patterns more effectively.
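As a minimal sketch of the rescaling methods named above, the NumPy snippet below applies min-max scaling, z-score normalization, and a log transformation to a small made-up feature matrix. The function names and sample values are illustrative assumptions, not part of any particular library.

```python
import numpy as np

def min_max_scale(x):
    """Rescale each column to the [0, 1] range."""
    lo, hi = x.min(axis=0), x.max(axis=0)
    return (x - lo) / (hi - lo)

def z_score(x):
    """Shift each column to zero mean and scale it to unit variance."""
    return (x - x.mean(axis=0)) / x.std(axis=0)

def log_transform(x):
    """Compress large non-negative values; log1p maps zero to zero."""
    return np.log1p(x)

# Hypothetical data: column 0 is in the thousands, column 1 is below ten.
features = np.array([[1000.0, 1.0],
                     [2000.0, 3.0],
                     [4000.0, 5.0]])

scaled = min_max_scale(features)
standardized = z_score(features)
logged = log_transform(features)
```

After either of the first two transforms, both columns live on a comparable scale even though their raw ranges differ by three orders of magnitude.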

Deep learning training challenges include:

- Internal covariate shift: The distribution of each layer's inputs changes during training as the parameters of earlier layers are updated, so later layers must keep adapting to a moving target.

- Vanishing/exploding gradients: Gradients become too small or large during backpropagation, hindering effective weight updates.

- Initialization sensitivity: Initial weights heavily influence training; poor initialization can lead to slow or failed convergence.

Batch normalization tackles these by normalizing activations within each mini-batch, stabilizing training and improving model performance.
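To make the initialization sensitivity concrete, here is a small NumPy sketch that pushes a random batch through a stack of linear layers with ReLU. The depth, width, and weight scales are arbitrary assumptions chosen for illustration, and the `normalize` flag applies only a plain per-feature standardization over the batch, without any learnable parameters.

```python
import numpy as np

def final_activation_std(weight_scale, normalize, depth=20, width=64, seed=0):
    """Return the standard deviation of activations after `depth` layers."""
    rng = np.random.default_rng(seed)
    h = rng.standard_normal((128, width))           # batch of 128 examples
    for _ in range(depth):
        # Random weights; weight_scale controls how good the initialization is.
        w = rng.standard_normal((width, width)) * weight_scale / np.sqrt(width)
        z = h @ w
        if normalize:
            # Standardize each feature using statistics of the current batch.
            z = (z - z.mean(axis=0)) / np.sqrt(z.var(axis=0) + 1e-5)
        h = np.maximum(z, 0.0)                      # ReLU
    return h.std()

too_small = final_activation_std(0.5, normalize=False)
too_large = final_activation_std(4.0, normalize=False)
small_normalized = final_activation_std(0.5, normalize=True)
large_normalized = final_activation_std(4.0, normalize=True)
```

In this sketch the unnormalized activations should shrink toward zero or blow up depending on the weight scale, while the normalized runs stay on a similar order of magnitude for both scales.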

Batch normalization normalizes a layer's activations within a mini-batch during training. It calculates the mean and variance of activations for each feature, then normalizes using these statistics. Learnable parameters (γ and β) scale and shift the normalized activations, allowing the model to learn the optimal activation distribution.

Source: Yintai Ma and Diego Klabjan.

BN is typically applied after a layer's linear transformation (e.g., matrix multiplication in fully connected layers or convolution in convolutional layers) and before the non-linear activation function (e.g., ReLU). Key components are mini-batch statistics (mean and variance), normalization, and scaling/shifting with learnable parameters.

BN addresses internal covariate shift by keeping the inputs to subsequent layers on a more stable scale. This typically allows higher learning rates, faster convergence, and less sensitivity to initialization. It also has a regularizing effect: because each example is normalized with statistics that depend on the other examples in its mini-batch, the activations carry a small amount of noise, which can reduce overfitting.

Mathematics of Batch Normalization:

BN operates differently during training and inference.

Training:

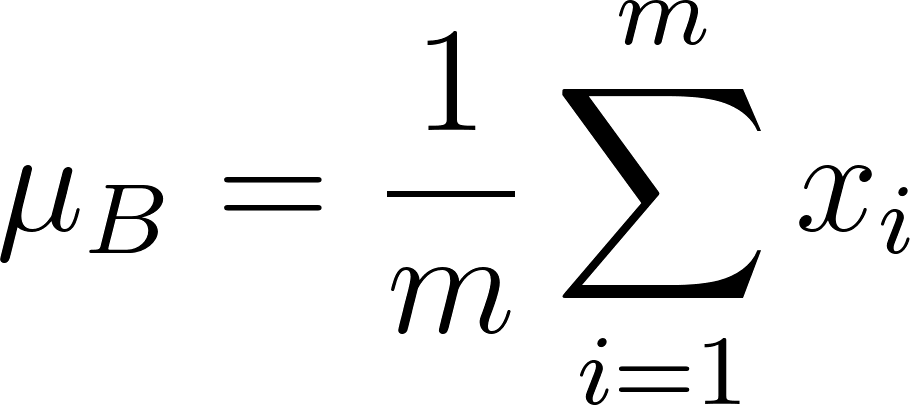

- Normalization: The mean ($\mu_B$) and variance ($\sigma_B^2$) are calculated for each feature in a mini-batch:

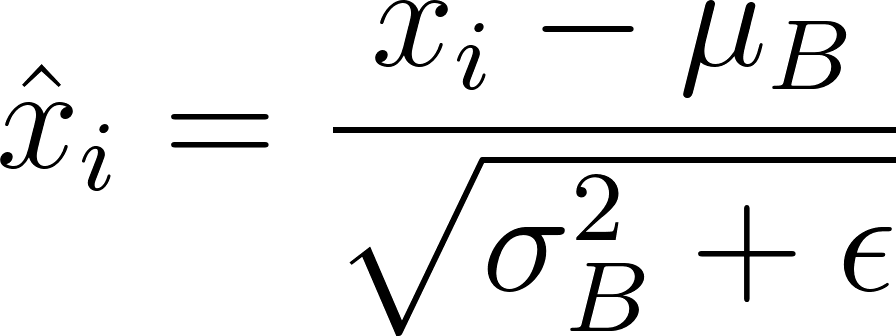

Activations ($x_i$) are normalized:

$$\hat{x}_i = \frac{x_i - \mu_B}{\sqrt{\sigma_B^2 + \epsilon}}$$

($\epsilon$ is a small constant for numerical stability).

- Scaling and Shifting: Learnable parameters $\gamma$ and $\beta$ scale and shift the normalized activations $\hat{x}_i$:

$$y_i = \gamma\,\hat{x}_i + \beta$$
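The training-time steps above can be sketched in NumPy as follows. This is a forward pass only, for inputs of shape (batch, features); the function name, the default `eps`, and the sample data are assumptions for illustration rather than TensorFlow's internal implementation.

```python
import numpy as np

def batch_norm_train(x, gamma, beta, eps=1e-5):
    """Training-mode batch norm for inputs of shape (batch, features)."""
    mu = x.mean(axis=0)                     # per-feature mini-batch mean
    var = x.var(axis=0)                     # per-feature (biased) mini-batch variance
    x_hat = (x - mu) / np.sqrt(var + eps)   # normalize
    y = gamma * x_hat + beta                # scale and shift
    return y, mu, var

rng = np.random.default_rng(0)
x = rng.normal(loc=5.0, scale=3.0, size=(32, 4))   # hypothetical activations
gamma = np.ones(4)    # common initial value: no rescaling
beta = np.zeros(4)    # common initial value: no shift

y, mu, var = batch_norm_train(x, gamma, beta)
```

With `gamma` at one and `beta` at zero, each output feature has approximately zero mean and unit variance over the batch; training then adjusts the two parameters if a different distribution works better.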

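Inference: Batch statistics are replaced with running statistics (running mean and variance) calculated during training using a moving average (momentum factor $\alpha$). Written in the Keras convention, where $\alpha$ is the weight on the existing running value (some libraries instead define momentum as the weight on the new batch statistic):

$$\mu_{\text{run}} \leftarrow \alpha\,\mu_{\text{run}} + (1 - \alpha)\,\mu_B, \qquad \sigma^2_{\text{run}} \leftarrow \alpha\,\sigma^2_{\text{run}} + (1 - \alpha)\,\sigma_B^2$$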

These running statistics and the learned γ and β are used for normalization during inference.
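The difference between the two modes can be sketched with a small NumPy class. The class name, the data, and the momentum value of 0.9 are assumptions for illustration; the update weights the existing running value by the momentum, which is one common convention.

```python
import numpy as np

class SimpleBatchNorm:
    """Illustrative batch norm layer for inputs of shape (batch, features)."""

    def __init__(self, num_features, momentum=0.99, eps=1e-5):
        self.gamma = np.ones(num_features)
        self.beta = np.zeros(num_features)
        self.running_mean = np.zeros(num_features)
        self.running_var = np.ones(num_features)
        self.momentum = momentum
        self.eps = eps

    def __call__(self, x, training):
        if training:
            # Use statistics of the current mini-batch and update the averages.
            mean, var = x.mean(axis=0), x.var(axis=0)
            m = self.momentum
            self.running_mean = m * self.running_mean + (1 - m) * mean
            self.running_var = m * self.running_var + (1 - m) * var
        else:
            # Use the statistics accumulated during training.
            mean, var = self.running_mean, self.running_var
        x_hat = (x - mean) / np.sqrt(var + self.eps)
        return self.gamma * x_hat + self.beta

rng = np.random.default_rng(0)
bn = SimpleBatchNorm(num_features=3, momentum=0.9)
for _ in range(200):
    batch = rng.normal(loc=2.0, scale=0.5, size=(64, 3))
    bn(batch, training=True)

# Inference works even for a single example, because no batch statistics are needed.
single = np.array([[2.0, 2.0, 2.0]])
out = bn(single, training=False)
```

Because inference relies only on stored statistics, the output for a given input no longer depends on which other examples happen to share its batch.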

TensorFlow Implementation:

```python
import tensorflow as tf
from tensorflow import keras

# Load and preprocess MNIST data (as described in the original text)
# ...

# Define the model architecture.
# Each BatchNormalization layer sits between the linear transformation
# (Conv2D/Dense without an activation) and its ReLU, as described above.
model = keras.Sequential([
    keras.Input(shape=(28, 28, 1)),
    keras.layers.Conv2D(32, (3, 3)),
    keras.layers.BatchNormalization(),
    keras.layers.Activation('relu'),
    keras.layers.Conv2D(64, (3, 3)),
    keras.layers.BatchNormalization(),
    keras.layers.Activation('relu'),
    keras.layers.MaxPooling2D((2, 2)),
    keras.layers.Flatten(),
    keras.layers.Dense(128),
    keras.layers.BatchNormalization(),
    keras.layers.Activation('relu'),
    keras.layers.Dense(10, activation='softmax')
])

# Compile and train the model (as described in the original text)
# ...
```

Implementation Considerations:

- Placement: After linear transformations and before activation functions.

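- Batch Size: Larger batch sizes give more accurate estimates of the batch mean and variance; very small batches make these estimates noisy.

- Regularization: The noise in the batch statistics gives BN a mild regularization effect, which may reduce the need for other regularizers such as dropout.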
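The batch-size point can be checked numerically. The sketch below, with arbitrary batch sizes and sample counts chosen as assumptions, draws many mini-batches from a standard normal distribution and measures how much the batch mean fluctuates from one batch to the next.

```python
import numpy as np

def batch_mean_spread(batch_size, num_batches=2000, seed=0):
    """Standard deviation of the batch mean across many random batches."""
    rng = np.random.default_rng(seed)
    batches = rng.standard_normal((num_batches, batch_size))
    return batches.mean(axis=1).std()

spread_small = batch_mean_spread(batch_size=4)
spread_large = batch_mean_spread(batch_size=256)
```

The spread should shrink roughly as one over the square root of the batch size, so the batch of 4 yields a far noisier mean than the batch of 256. That same noise is the source of the regularization effect.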

Limitations and Challenges:

- Non-convolutional architectures: BN is less effective in RNNs and transformers, where sequence lengths vary and layer normalization is the more common choice.

- Small batch sizes: Less reliable batch statistics.

- Computational overhead: Increased memory and training time.

Mitigating Limitations: Adaptive batch normalization, virtual batch normalization, and hybrid normalization techniques can address some limitations.

Variants and Extensions: Layer normalization, group normalization, instance normalization, batch renormalization, and weight normalization offer alternatives or improvements depending on the specific needs.
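Several of these variants differ mainly in which axes the mean and variance are computed over. The NumPy sketch below illustrates that for a channels-last image tensor; the tensor shape, the group count, and the helper name `standardize` are assumptions, and the learnable scale and shift parameters are omitted for brevity.

```python
import numpy as np

def standardize(x, axes, eps=1e-5):
    """Normalize x to zero mean and unit variance over the given axes."""
    mean = x.mean(axis=axes, keepdims=True)
    var = x.var(axis=axes, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 5, 5, 6))   # (batch N, height H, width W, channels C)

batch_norm = standardize(x, axes=(0, 1, 2))     # per channel, across batch and space
layer_norm = standardize(x, axes=(1, 2, 3))     # per sample, across all features
instance_norm = standardize(x, axes=(1, 2))     # per sample and channel, across space

# Group norm: split the 6 channels into 2 groups of 3 and normalize
# each group separately for every sample.
grouped = x.reshape(8, 5, 5, 2, 3)
group_norm = standardize(grouped, axes=(1, 2, 4)).reshape(x.shape)
```

Only the batch norm variant reduces over the batch axis. The other three give the same result for a sample regardless of what else is in the batch, which is why they are often preferred when batches are small.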

Conclusion: Batch normalization is a powerful technique for improving deep neural network training. Keep its benefits, implementation details, and limitations in mind, and consider its variants when batch statistics are unreliable in your projects.
