GPTCache Tutorial: Enhancing Efficiency in LLM Applications

GPTCache is an open-source caching framework for large language model (LLM) applications such as ChatGPT. It stores LLM responses so they can be reused for similar queries. Before calling the LLM, the application checks the cache for a relevant response, which saves time and API costs.

This guide explores how GPTCache works and how you can use it effectively in your projects.

What is GPTCache?

GPTCache is a caching system designed to improve the performance and efficiency of applications built on large language models (LLMs) like GPT-3. It stores previously generated responses so the same work does not have to be repeated.

When a similar query comes up again, the application can return the cached response instead of having the LLM generate a new one from scratch.

Unlike traditional caches, which only match identical requests, GPTCache uses semantic caching: it matches queries by their meaning (intent) rather than their exact wording. Because more incoming queries can be answered from previously stored results, this improves cache hit rates and reduces the server's workload.

Benefits of Using GPTCache

The main idea behind GPTCache is to store and reuse the responses an LLM has already generated, rather than recomputing them for every request. Doing so has several benefits:

Cost savings on LLM API calls

Most hosted LLM APIs charge per request based on the number of tokens processed. GPTCache reduces the number of LLM API calls by serving previously generated responses for similar queries, so every cache hit is a request you don't pay for.
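To make the savings concrete, here is a back-of-the-envelope estimate. It assumes a flat price per call and free cache lookups; the request count, hit rate, and price in the usage line are hypothetical numbers, not GPTCache benchmarks.

```python
from __future__ import annotations


def estimate_llm_cost(requests: int, hit_rate: float, cost_per_call: float) -> tuple[float, float]:
    """Return (cost without cache, cost with cache) under a flat per-call price.

    Simplifying assumptions: cache hits cost nothing, and every miss triggers
    exactly one LLM call.
    """
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    without_cache = requests * cost_per_call
    llm_calls = requests * (1.0 - hit_rate)  # only cache misses reach the LLM
    with_cache = llm_calls * cost_per_call
    return without_cache, with_cache


# Hypothetical workload: 10,000 requests, 30% served from cache, $0.002 per call.
before, after = estimate_llm_cost(10_000, 0.30, 0.002)
print(f"without cache: ${before:.2f}, with cache: ${after:.2f}")
```

Real pricing is per token, so treat this as a rough model: the saving scales with the hit rate and with how long the cached answers are.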

Improved response time and efficiency

Retrieving a response from the cache is substantially faster than generating it from scratch by querying the LLM, so response times improve. Serving requests from the cache also reduces the load on the LLM service, freeing capacity for other requests.

Enhanced user experience through faster application performance

Suppose you're researching questions for your content, and every question takes ages for the AI to answer. One reason is that most LLM services enforce rate limits: a maximum number of requests within a set period. Exceeding these limits blocks further requests until the limit resets, which interrupts the service.

ChatGPT can reach its response-generation limit

To avoid these issues, GPTCache caches previous answers to similar questions. When you ask something, it first checks the cache and, on a hit, returns the stored answer without sending a request to the LLM. As a result, you get your response much faster, and the request does not count against the LLM's rate limit.

Simply put, by leveraging cached responses, GPTCache ensures LLM-based applications become responsive and efficient—just like you'd expect from any modern tool.

Setting Up GPTCache

Here’s how you can install GPTCache directly:

Installation and configuration

Install the GPTCache package from PyPI by running `pip install gptcache`.

Next, import GPTCache's cache object into your application with `from gptcache import cache` and initialize it with `cache.init()`.

That’s it, and you’re done!

Integration with LLMs

You can integrate GPTCache with LLMs through its LLM adapter. At the time of writing, it supports two adapters:

- OpenAI

- LangChain

Here’s how you can integrate it with both adapters:

GPTCache with OpenAI ChatGPT API

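To integrate GPTCache with OpenAI, import the cache object (`from gptcache import cache`) and the OpenAI adapter (`from gptcache.adapter import openai`), then call `cache.init()` to initialize the cache and `cache.set_openai_key()` to load your API key.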

Before you run the example code, check whether the OPENAI_API_KEY environment variable is set by running echo $OPENAI_API_KEY.

If it is not set, you can set it with export OPENAI_API_KEY=YOUR_API_KEY on Unix/Linux/macOS systems or set OPENAI_API_KEY=YOUR_API_KEY on Windows systems.
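If you'd rather fail fast from Python than discover a missing key at request time, a small check like the one below works. The helper name require_openai_key is hypothetical; it only reads the environment variable and does not call GPTCache or OpenAI.

```python
import os


def require_openai_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Hypothetical helper: return the API key from the environment or raise a clear error."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"{var_name} is not set; export it (Unix/Linux/macOS) or set it (Windows) first."
        )
    return key
```

Call it once at startup, before initializing the cache, so a misconfigured environment is reported immediately.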

Then, if you ask ChatGPT the exact same question twice, the answer to the second request is retrieved from the cache instead of asking ChatGPT again.
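Conceptually, this exact-match behavior is a cache-aside lookup keyed on the question text. The sketch below reproduces the idea offline, with a stand-in function in place of ChatGPT; ExactMatchCache and fake_llm are hypothetical names, not part of GPTCache's API.

```python
from typing import Callable, Dict


class ExactMatchCache:
    """Hypothetical cache-aside wrapper: reuse the stored answer for an identical question."""

    def __init__(self, llm: Callable[[str], str]) -> None:
        self.llm = llm
        self.store: Dict[str, str] = {}
        self.llm_calls = 0  # how many requests actually reached the LLM

    def ask(self, question: str) -> str:
        if question in self.store:       # cache hit: no LLM call
            return self.store[question]
        self.llm_calls += 1              # cache miss: call the LLM once
        answer = self.llm(question)
        self.store[question] = answer    # save it for the next identical question
        return answer


def fake_llm(question: str) -> str:
    # Stand-in for a real ChatGPT request.
    return f"answer to: {question}"


cache = ExactMatchCache(fake_llm)
cache.ask("what is GitHub?")
cache.ask("what is GitHub?")   # served from the cache
print(cache.llm_calls)         # 1
```

Note that "what's GitHub" would still be a miss here, because the key is the literal text; that gap is what semantic caching addresses.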

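GPTCache can also return cached answers for questions that are similar rather than identical. To enable this similar-search mode, initialize the cache with an embedding function, a data manager backed by a vector store, and a similarity evaluation strategy instead of the defaults.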

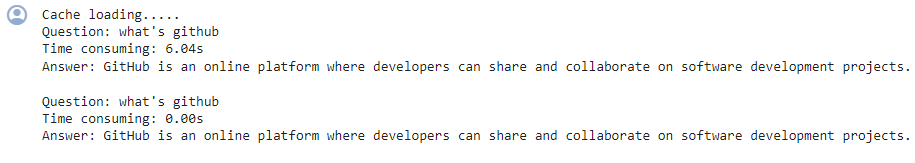

Here’s what you will see in the output:

The second time, GPT took nearly 0 seconds to answer the same question
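To try the similar-search idea without an API key, here is a toy sketch. It deliberately swaps in Python's difflib character-level similarity for the embeddings GPTCache uses, and a slow fake function for ChatGPT; SimilarQuestionCache, slow_fake_llm, and the 0.8 threshold are hypothetical.

```python
import difflib
import time
from typing import Callable, List, Optional, Tuple


class SimilarQuestionCache:
    """Hypothetical similar-search cache: reuse an answer when a stored question is close enough.

    Uses difflib's character-level ratio instead of embeddings, purely for illustration.
    """

    def __init__(self, llm: Callable[[str], str], threshold: float = 0.8) -> None:
        self.llm = llm
        self.threshold = threshold
        self.entries: List[Tuple[str, str]] = []  # (question, answer) pairs

    def _best_match(self, question: str) -> Optional[Tuple[float, str]]:
        best: Optional[Tuple[float, str]] = None
        for stored_q, answer in self.entries:
            score = difflib.SequenceMatcher(None, question.lower(), stored_q.lower()).ratio()
            if best is None or score > best[0]:
                best = (score, answer)
        return best

    def ask(self, question: str) -> Tuple[str, bool]:
        """Return (answer, served_from_cache)."""
        match = self._best_match(question)
        if match is not None and match[0] >= self.threshold:
            return match[1], True
        answer = self.llm(question)
        self.entries.append((question, answer))
        return answer, False


def slow_fake_llm(question: str) -> str:
    time.sleep(0.2)  # simulate network and generation latency
    return f"answer to: {question}"


cache = SimilarQuestionCache(slow_fake_llm)
for q in ["what is GitHub", "what is github?", "how do I bake bread"]:
    start = time.time()
    answer, hit = cache.ask(q)
    print(f"{q!r}: hit={hit}, {time.time() - start:.2f}s")
```

The second question differs only in case and punctuation, so it is answered from the cache almost instantly, while the unrelated third question goes to the (fake) LLM.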

GPTCache with LangChain

If you want to use a different LLM, try the LangChain adapter, which lets GPTCache sit in front of LLMs you call through LangChain.

Learn how to build LLM applications with LangChain.

Using GPTCache in Your Projects

Let's look at how GPTCache can support your projects.

Basic operations

Exact-match caching is often ineffective for LLM applications: LLM queries are complex and vary widely in wording, so identical repeats are rare and the cache hit rate stays low.

To overcome this limitation, GPTCache adopts semantic caching strategies. Semantic caching matches queries that are similar or related in meaning, not only identical ones, which increases the probability of cache hits and improves overall caching efficiency.

GPTCache leverages embedding algorithms to convert queries into numerical representations called embeddings. These embeddings are stored in a vector store, enabling efficient similarity searches. This process allows GPTCache to identify and retrieve similar or related queries from the cache storage.
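The embed, store, and search loop can be sketched in plain Python. This uses a toy hashing embedding (words hashed into a fixed-size vector) and a brute-force cosine search; toy_embedding and InMemoryVectorStore are hypothetical stand-ins for the real embedding models and vector stores (such as FAISS) that GPTCache plugs in.

```python
import math
import re
import zlib
from typing import List, Tuple


def toy_embedding(text: str, dim: int = 256) -> List[float]:
    """Hypothetical embedding: hash each lowercase word into one of `dim` buckets, then L2-normalize."""
    vec = [0.0] * dim
    for word in re.findall(r"[a-z0-9']+", text.lower()):
        vec[zlib.crc32(word.encode("utf-8")) % dim] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


class InMemoryVectorStore:
    """Brute-force vector store: keeps (vector, payload) pairs and ranks them by cosine similarity."""

    def __init__(self) -> None:
        self.items: List[Tuple[List[float], str]] = []

    def add(self, vector: List[float], payload: str) -> None:
        self.items.append((vector, payload))

    def search(self, query: List[float], top_k: int = 1) -> List[Tuple[float, str]]:
        # All vectors are unit length, so the dot product equals cosine similarity.
        scored = [(sum(a * b for a, b in zip(query, vec)), payload) for vec, payload in self.items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:top_k]


store = InMemoryVectorStore()
for cached_question in ["what is github", "how do I reset my password"]:
    store.add(toy_embedding(cached_question), cached_question)

print(store.search(toy_embedding("what is GitHub used for"), top_k=1))
```

A real embedding model captures meaning rather than shared words, and a real vector store uses approximate nearest-neighbor indexes instead of scanning every entry, but the lookup flow is the same.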

With its modular design, you can customize semantic cache implementations according to your requirements.

However, a semantic cache can produce false hits (a cached answer returned for a query it does not actually fit) and misses (a suitable cached answer that is not found). To monitor this, GPTCache provides three performance metrics:

- Hit ratio measures a cache's success rate in fulfilling requests. Higher values indicate better performance.

- Latency indicates the time taken to retrieve data from the cache, where lower is better.

- Recall is the proportion of queries served by the cache out of all queries that should have been served from the cache. Higher percentages reflect better accuracy.
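As an illustration, here is how the three metrics could be computed from a request log. The log format is a hypothetical assumption: each record says whether the cache returned an answer, whether it should have (according to some ground-truth labeling), and how long the lookup took. GPTCache's own tooling may record these differently.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class RequestRecord:
    hit: bool          # the cache returned an answer
    should_hit: bool   # ground truth: a suitable answer existed in the cache
    latency_s: float   # time spent on the cache lookup, in seconds


def cache_metrics(log: List[RequestRecord]) -> Dict[str, float]:
    """Hypothetical metric calculation over a labeled request log."""
    total = len(log)
    hits = sum(r.hit for r in log)
    should = sum(r.should_hit for r in log)
    correct_hits = sum(r.hit and r.should_hit for r in log)
    return {
        "hit_ratio": hits / total if total else 0.0,
        "avg_latency_s": mean(r.latency_s for r in log) if log else 0.0,
        "recall": correct_hits / should if should else 0.0,
    }


log = [
    RequestRecord(hit=True, should_hit=True, latency_s=0.01),
    RequestRecord(hit=True, should_hit=False, latency_s=0.02),   # false hit
    RequestRecord(hit=False, should_hit=True, latency_s=0.03),   # missed opportunity
    RequestRecord(hit=False, should_hit=False, latency_s=0.02),
]
print(cache_metrics(log))
```

Notice that a false hit raises the hit ratio without improving recall, which is why it helps to track both.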

Advanced features

All basic data elements like the initial queries, prompts, responses, and access timestamps are stored in a 'data manager.' GPTCache currently supports the following cache storage options:

- SQLite

- MySQL

- PostgreSQL

NoSQL databases are not supported yet, but support is planned.
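To picture what a data manager keeps, here is a minimal store built on Python's built-in sqlite3 module. The table layout and the SQLiteDataManager class are hypothetical; GPTCache's real data manager uses its own schema and pairs the SQL storage with a vector store for the embeddings.

```python
import sqlite3
import time
from typing import Optional


class SQLiteDataManager:
    """Hypothetical store for question/answer pairs plus creation and last-access timestamps."""

    def __init__(self, path: str = ":memory:") -> None:
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            """CREATE TABLE IF NOT EXISTS cache_entries (
                   id INTEGER PRIMARY KEY AUTOINCREMENT,
                   question TEXT NOT NULL UNIQUE,
                   answer TEXT NOT NULL,
                   created_at REAL NOT NULL,
                   last_access REAL NOT NULL
               )"""
        )

    def save(self, question: str, answer: str) -> None:
        now = time.time()
        with self.conn:  # commits the transaction on success
            self.conn.execute(
                "INSERT OR REPLACE INTO cache_entries (question, answer, created_at, last_access) "
                "VALUES (?, ?, ?, ?)",
                (question, answer, now, now),
            )

    def get(self, question: str) -> Optional[str]:
        row = self.conn.execute(
            "SELECT id, answer FROM cache_entries WHERE question = ?", (question,)
        ).fetchone()
        if row is None:
            return None
        with self.conn:  # record the access time, which eviction policies can use
            self.conn.execute(
                "UPDATE cache_entries SET last_access = ? WHERE id = ?", (time.time(), row[0])
            )
        return row[1]


dm = SQLiteDataManager()
dm.save("what is GitHub", "A code hosting platform.")
print(dm.get("what is GitHub"))
print(dm.get("unknown question"))
```

Passing a file path instead of ":memory:" would make the cache survive restarts.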

Using the eviction policies

GPTCache can also remove data from cache storage once it reaches a specified size limit or entry count. To manage the cache size, you can use either a Least Recently Used (LRU) eviction policy or a First In, First Out (FIFO) approach.

- The LRU eviction policy evicts the least recently accessed items.

- The FIFO eviction policy discards the items that have been in the cache the longest, regardless of how recently they were accessed.
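The difference between the two policies shows up clearly in a small sketch built on collections.OrderedDict. BoundedCache is a hypothetical illustration, not GPTCache's eviction implementation; with LRU, a read moves an entry to the back of the queue, while FIFO ignores reads.

```python
from collections import OrderedDict
from typing import Hashable, Optional


class BoundedCache:
    """Hypothetical fixed-capacity cache with either 'LRU' or 'FIFO' eviction."""

    def __init__(self, capacity: int, policy: str = "LRU") -> None:
        if policy not in ("LRU", "FIFO"):
            raise ValueError("policy must be 'LRU' or 'FIFO'")
        self.capacity = capacity
        self.policy = policy
        self.data: OrderedDict = OrderedDict()  # front = next to be evicted

    def get(self, key: Hashable) -> Optional[str]:
        if key not in self.data:
            return None
        if self.policy == "LRU":
            self.data.move_to_end(key)  # mark as most recently used
        return self.data[key]

    def put(self, key: Hashable, value: str) -> None:
        if key in self.data:
            self.data[key] = value  # FIFO keeps the original insertion position
            if self.policy == "LRU":
                self.data.move_to_end(key)
            return
        if len(self.data) >= self.capacity:
            self.data.popitem(last=False)  # evict the entry at the front
        self.data[key] = value


for policy in ("LRU", "FIFO"):
    c = BoundedCache(capacity=2, policy=policy)
    c.put("q1", "a1")
    c.put("q2", "a2")
    c.get("q1")          # touch q1
    c.put("q3", "a3")    # forces one eviction
    print(policy, list(c.data))  # LRU ['q1', 'q3'], then FIFO ['q2', 'q3']
```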

Evaluating response performance

GPTCache uses an ‘evaluation’ function to assess whether a cached response addresses a user query. To do so, it takes three inputs:

- user's request for data

- cached data being evaluated

- user-defined parameters (if any)
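A minimal sketch of such an evaluation function follows. Its three parameters mirror the three inputs listed above; the word-overlap (Jaccard) scoring and the min_score parameter are assumptions made for illustration, not the scoring GPTCache uses.

```python
import re
from typing import Any, Dict, Optional, Set


def _tokens(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def evaluate(
    request: Dict[str, Any],
    cached: Dict[str, Any],
    params: Optional[Dict[str, Any]] = None,
) -> bool:
    """Hypothetical check of whether `cached` is good enough to answer `request`.

    request: the user's query, e.g. {"question": "..."}
    cached:  the stored entry being evaluated, e.g. {"question": "...", "answer": "..."}
    params:  optional user-defined settings; only "min_score" is used here
    """
    params = params or {}
    min_score = params.get("min_score", 0.5)
    a, b = _tokens(request["question"]), _tokens(cached["question"])
    if not a or not b:
        return False
    score = len(a & b) / len(a | b)  # Jaccard similarity of the two word sets
    return score >= min_score


req = {"question": "How do I reset my password?"}
candidate = {"question": "how can I reset my password", "answer": "Use the reset link."}
print(evaluate(req, candidate))                     # default threshold
print(evaluate(req, candidate, {"min_score": 0.8}))  # stricter user-defined threshold
```

Raising min_score trades fewer false hits for more misses.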

Two other settings are also useful:

- ‘log_time_func’ lets you record and report the duration of expensive operations, such as generating ‘embeddings’ or performing cache ‘searches.’ This helps you monitor performance.

- With ‘similarity_threshold,’ you can set how similar two embedding vectors (high-dimensional representations of text data) must be to count as a match.
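The two ideas can be pictured as a timing hook that reports how long a named step took, and a threshold check on the cosine similarity of two embeddings. The log_time context manager, is_similar function, and 0.8 default are hypothetical; GPTCache configures these through its own settings, whose exact form and defaults may differ.

```python
import math
import time
from contextlib import contextmanager
from typing import Callable, Iterator, List, Sequence


@contextmanager
def log_time(step: str, report: Callable[[str, float], None]) -> Iterator[None]:
    """Hypothetical timing hook: pass (step name, elapsed seconds) to `report`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        report(step, time.perf_counter() - start)


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def is_similar(u: Sequence[float], v: Sequence[float], similarity_threshold: float = 0.8) -> bool:
    """Treat two embeddings as a match when their cosine similarity reaches the threshold."""
    return cosine(u, v) >= similarity_threshold


timings: List[tuple] = []
with log_time("search", lambda step, secs: timings.append((step, secs))):
    match = is_similar([1.0, 0.0, 1.0], [0.9, 0.1, 1.0])
print(match, timings)
```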

GPTCache Best Practices and Troubleshooting

Now that you know how GPTCache functions, here are some best practices and tips to ensure you reap its benefits.

Optimizing GPTCache performance

There are several steps you can take to optimize the performance of GPTCache, as outlined below.

1. Clarify your prompts

How you prompt your LLM affects how well GPTCache works, so keep your phrasing consistent to increase your chances of hitting the cache.

For example, use consistent phrasing like "I can't log in to my account." This way, GPTCache recognizes similar issues, such as "Forgot my password" or "Account login problems," more efficiently.
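One lightweight way to enforce consistent phrasing is to normalize prompts before they reach the cache, so trivial differences in case, punctuation, or spacing don't create separate entries. normalize_prompt is a hypothetical pre-processing step, not a GPTCache built-in; it helps exact matches, while genuinely different wordings such as "Forgot my password" still rely on semantic matching.

```python
import re
import string

_PUNCT = str.maketrans("", "", string.punctuation)


def normalize_prompt(prompt: str) -> str:
    """Hypothetical normalizer: lowercase, strip ASCII punctuation, collapse whitespace."""
    text = prompt.lower().translate(_PUNCT)
    return re.sub(r"\s+", " ", text).strip()


print(normalize_prompt("  I can't log in   to my account! "))  # i cant log in to my account
```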

2. Use the built-in tracking metrics

Monitor built-in metrics like hit ratio, recall, and latency to analyze your cache’s performance. A higher hit ratio indicates that the cache more effectively serves requested content from stored data, helping you understand its effectiveness.

3. Scaling GPTCache for LLM applications with large user bases

To scale GPTCache for larger LLM applications, implement a shared cache approach that utilizes the same cache for user groups with similar profiles. Create user profiles and classify them to identify similar user groups.

Using a shared cache for users in the same profile group improves cache efficiency and scalability.

This is because users within the same profile group tend to ask related queries that can be answered from cached responses. However, you must use suitable user profiling and classification techniques to group users accurately and get the full benefit of shared caching.
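Here is a minimal sketch of a shared cache keyed by profile group. The classify_user rule (grouping by plan and language) and the GroupedCache class are hypothetical placeholders for whatever profiling and classification technique you actually use.

```python
from collections import defaultdict
from typing import Callable, Dict, Optional


def classify_user(profile: Dict[str, str]) -> str:
    # Hypothetical rule: group users by subscription plan and language.
    return f"{profile.get('plan', 'free')}:{profile.get('lang', 'en')}"


class GroupedCache:
    """One shared cache per user group; users in the same group reuse each other's answers."""

    def __init__(self, classify: Callable[[Dict[str, str]], str]) -> None:
        self.classify = classify
        self.caches: Dict[str, Dict[str, str]] = defaultdict(dict)

    def get(self, profile: Dict[str, str], question: str) -> Optional[str]:
        return self.caches[self.classify(profile)].get(question)

    def put(self, profile: Dict[str, str], question: str, answer: str) -> None:
        self.caches[self.classify(profile)][question] = answer


cache = GroupedCache(classify_user)
alice = {"plan": "pro", "lang": "en"}
bob = {"plan": "pro", "lang": "en"}
carol = {"plan": "free", "lang": "de"}
cache.put(alice, "how do I export data?", "Use the Export button.")
print(cache.get(bob, "how do I export data?"))    # same group: reuses Alice's answer
print(cache.get(carol, "how do I export data?"))  # different group: None
```

Keeping groups separate also stops answers tailored to one audience (for example, a different language) from leaking to another.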

Troubleshooting common GPTCache issues

If you’re struggling with GPTCache, there are several steps you can take to troubleshoot the issues.

1. Cache invalidation

GPTCache relies on up-to-date cache responses. If the underlying LLM's responses or the user's intent changes over time, the cached responses may become inaccurate or irrelevant.

To avoid this, set expiration times for cached entries based on how often the underlying answers are expected to change, and refresh the cache regularly.
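Here is a minimal sketch of per-entry expiration, assuming a fixed time-to-live (TTL) chosen to match how often the underlying answers change. The TTLCache class and its injectable clock are hypothetical; the clock parameter only makes the behavior easy to demonstrate.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class TTLCache:
    """Hypothetical cache whose entries expire `ttl_seconds` after they are stored."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock
        self.entries: Dict[str, Tuple[str, float]] = {}  # question -> (answer, expires_at)

    def put(self, question: str, answer: str) -> None:
        self.entries[question] = (answer, self.clock() + self.ttl)

    def get(self, question: str) -> Optional[str]:
        item = self.entries.get(question)
        if item is None:
            return None
        answer, expires_at = item
        if self.clock() >= expires_at:
            del self.entries[question]  # stale: drop it so the next call refreshes from the LLM
            return None
        return answer


# Usage with a fake clock, so expiry can be shown without waiting an hour.
now = [0.0]
cache = TTLCache(ttl_seconds=3600, clock=lambda: now[0])
cache.put("latest release?", "v1.2")
now[0] = 1800.0
print(cache.get("latest release?"))  # still fresh
now[0] = 3600.0
print(cache.get("latest release?"))  # expired, so None
```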

2. Over-reliance on cached responses

While GPTCache can improve efficiency, over-reliance on cached responses can lead to inaccurate information if the cache is not invalidated properly.

To guard against this, have your application occasionally retrieve fresh responses from the LLM, even for similar queries. This keeps responses accurate, which matters most for critical or time-sensitive information.
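One simple way to do this is to bypass the cache for a small random fraction of hits and overwrite the stored entry with the fresh answer. The answer_with_refresh function and the refresh_rate parameter are hypothetical; for critical or time-sensitive queries, you could set the rate to 1.0 so those queries always go to the LLM.

```python
import random
from typing import Callable, Dict, List, Optional


def answer_with_refresh(
    question: str,
    cache: Dict[str, str],
    llm: Callable[[str], str],
    refresh_rate: float = 0.05,
    rng: Optional[random.Random] = None,
) -> str:
    """Hypothetical helper: serve from cache, but re-query the LLM for a `refresh_rate` fraction of hits."""
    rng = rng or random.Random()
    if question in cache and rng.random() >= refresh_rate:
        return cache[question]  # normal cache hit
    answer = llm(question)      # cache miss, or a deliberate refresh
    cache[question] = answer    # keep the stored entry current
    return answer


calls: List[str] = []


def fake_llm(q: str) -> str:
    calls.append(q)
    return f"fresh answer #{len(calls)}"


store: Dict[str, str] = {}
rng = random.Random(42)
for _ in range(100):
    answer_with_refresh("exchange rate today?", store, fake_llm, refresh_rate=0.1, rng=rng)
print(len(calls))  # one initial miss plus the randomly chosen refreshes
```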

3. Ignoring cache quality

The quality and relevance of the cached response impact the user experience. So, you should use evaluation metrics to assess the quality of cached responses before serving them to users.

By understanding these potential pitfalls and their solutions, you can ensure that GPTCache effectively improves the performance and cost-efficiency of your LLM-powered applications—without compromising accuracy or user experience.

Wrap-up

GPTCache is a powerful tool for optimizing the performance and cost-efficiency of LLM applications. Proper configuration, monitoring, and cache evaluation strategies are required to ensure you get accurate and relevant responses.

If you’re new to LLMs, these resources might help:

- Developing large language models

- Building LLM applications with LangChain and GPT

- Training an LLM with PyTorch

- Using LLM with cohere API

- Developing LLM applications with LangChain

FAQs

How do you initialize the cache to run GPTCache and import the OpenAI API?

Import openai from gptcache.adapter (`from gptcache.adapter import openai`) and initialize the cache with `cache.init()`. With its default arguments, `cache.init()` sets up the data manager for exact-match caching.

What happens if you ask ChatGPT the same question twice?

GPTCache stores the previous responses in the cache and retrieves the answer from the cache instead of making a new request to the API. So, the answer to the second question will be obtained from the cache without requesting ChatGPT again.
