Kimi k1.5: A Generative AI Reasoning Model Reshaping the Landscape

Recent advances in reinforcement learning (RL) and large language models (LLMs) have led to Kimi k1.5, a model that aims to push generative AI reasoning significantly forward. This article examines Kimi k1.5's key features, innovations, and potential impact, drawing on the accompanying research report.


What is Kimi k1.5?

Kimi k1.5 represents a substantial step forward in scaling RL with LLMs. Rather than relying on more complex techniques such as Monte Carlo tree search, it uses a streamlined approach built on autoregressive prediction and RL. It is also designed to handle multimodal (text and vision) tasks, and it performs strongly on benchmarks such as MathVista and LiveCodeBench.

Kimi k1.5 Training

Kimi k1.5's training is a multi-stage process designed to enhance reasoning through RL and multimodal integration:

Pretraining: The model is pretrained on a vast, high-quality multimodal dataset encompassing text (English, Chinese, code, math, general knowledge) and visual data, rigorously filtered for relevance and diversity.

Supervised Fine-Tuning (SFT): This stage has two phases: vanilla SFT on roughly 1 million examples spanning a range of tasks, and long chain-of-thought (long-CoT) SFT, which trains the model to produce extended, multi-step reasoning.

Reinforcement Learning (RL): A carefully curated prompt set drives the RL training. The model learns to generate solutions through a sequence of reasoning steps, guided by a reward model evaluating response accuracy. Online mirror descent optimizes the policy.
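To make "online mirror descent" concrete, here is a simplified sketch, not Kimi k1.5's actual training code. It assumes a toy tabular policy over a handful of candidate responses to a single prompt (the real model is a neural network trained from sampled responses). With a KL-divergence regularizer that keeps the new policy close to the current one, each mirror-descent step has a closed-form solution: reweight the current policy by exp(r/τ) and renormalize. The function name `mirror_descent_step` and the toy rewards are hypothetical.

```python
import math


def mirror_descent_step(policy, rewards, tau):
    """One KL-regularized policy update over a fixed set of candidate responses.

    Solves  max_pi  E_pi[r] - tau * KL(pi || policy),  whose closed-form
    solution is  pi(y) = policy(y) * exp(r(y) / tau) / Z.
    """
    # Shift rewards by their max before exponentiating for numerical stability;
    # the shift cancels out in the normalization.
    r_max = max(rewards)
    weights = [p * math.exp((r - r_max) / tau) for p, r in zip(policy, rewards)]
    z = sum(weights)  # normalizer Z
    return [w / z for w in weights]


# Hypothetical toy setup: three candidate answers to one prompt,
# with a reward of 1.0 only for the correct (third) answer.
policy = [0.5, 0.3, 0.2]
rewards = [0.0, 0.0, 1.0]

for step in range(3):
    policy = mirror_descent_step(policy, rewards, tau=1.0)
    print(step, [round(p, 3) for p in policy])
```

The temperature τ acts as a step size: a smaller τ moves probability toward high-reward responses more aggressively, while a larger τ keeps each update closer to the previous policy.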

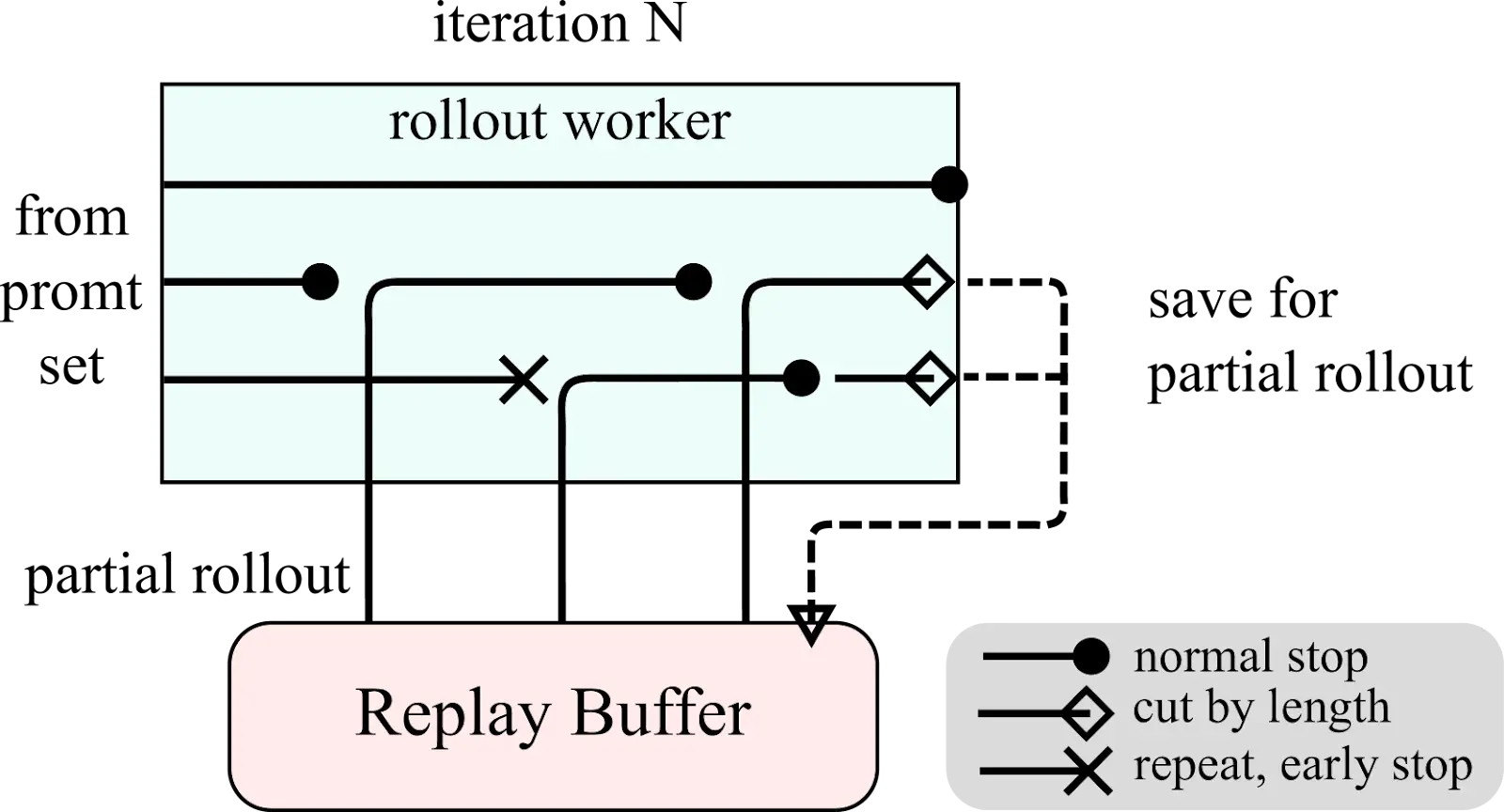

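Partial Rollouts: To handle long-context rollouts efficiently during RL training, Kimi k1.5 uses partial rollouts: generation that does not finish within an iteration is saved and continued in a later iteration instead of being restarted.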
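The idea can be illustrated with a toy simulation. This sketch assumes a fixed per-iteration token budget for each trajectory and a plain list serving as the buffer of saved partial rollouts; `Trajectory`, `decode`, and `rollout_iteration` are hypothetical names, and `decode` only appends placeholder tokens instead of running a model.

```python
from dataclasses import dataclass, field


@dataclass
class Trajectory:
    """A (possibly unfinished) response being generated for one prompt."""
    prompt_id: int
    target_len: int  # hypothetical: number of tokens this response ends up needing
    tokens: list = field(default_factory=list)

    @property
    def done(self) -> bool:
        return len(self.tokens) >= self.target_len


def decode(traj: Trajectory, budget: int) -> None:
    """Stand-in for model decoding: append at most `budget` placeholder tokens."""
    n = min(budget, traj.target_len - len(traj.tokens))
    traj.tokens.extend(["<tok>"] * n)


def rollout_iteration(new_trajs, buffer, budget):
    """Run one RL iteration with a fixed per-trajectory token budget.

    Saved partial rollouts in `buffer` are resumed first, then new prompts
    are started. Finished trajectories are returned; unfinished ones are
    saved back into `buffer` so a later iteration can continue them.
    """
    work = list(buffer) + list(new_trajs)
    buffer.clear()
    finished = []
    for traj in work:
        decode(traj, budget)
        (finished if traj.done else buffer).append(traj)
    return finished


buffer = []
it1 = rollout_iteration([Trajectory(0, 3), Trajectory(1, 10)], buffer, budget=4)
print([t.prompt_id for t in it1], [len(t.tokens) for t in buffer])  # [0] [4]
it2 = rollout_iteration([], buffer, budget=4)
print([t.prompt_id for t in it2], [len(t.tokens) for t in buffer])  # [] [8]
it3 = rollout_iteration([], buffer, budget=4)
print([t.prompt_id for t in it3], [len(t.tokens) for t in buffer])  # [1] []
```

Capping each iteration's generation means one very long response no longer stalls the whole batch; its unfinished segment simply waits in the buffer for the next iteration.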

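Length Penalty & Sampling: A length penalty discourages overly long responses and encourages concise answers. Curriculum sampling starts training on easier tasks and gradually moves to harder ones, while prioritized sampling focuses training on problems the model still struggles to solve.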
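The following sketch shows one way a length penalty and prioritized sampling could be implemented. The length reward gives each of several responses sampled for the same prompt a value between +0.5 and -0.5 according to where its length falls between the shortest and longest sample, and never rewards a wrong answer for being short. This exact formula, the function names, and the choice to weight problems by (1 - success rate) are assumptions for illustration, not details given in this article.

```python
import random


def length_rewards(lengths, correct):
    """Length-based reward for k sampled responses to the same prompt.

    Shorter responses get up to +0.5 and longer ones down to -0.5, scaled
    between the shortest and longest sample. Incorrect responses can only be
    penalized (never rewarded) for their length.
    """
    min_len, max_len = min(lengths), max(lengths)
    if max_len == min_len:  # all samples equally long: no length signal
        return [0.0] * len(lengths)
    rewards = []
    for n, ok in zip(lengths, correct):
        lam = 0.5 - (n - min_len) / (max_len - min_len)
        rewards.append(lam if ok else min(0.0, lam))
    return rewards


def prioritized_sample(problems, success_rates, k, rng=random):
    """Sample k problems, favoring those the model still tends to fail.

    Each problem is weighted by (1 - success rate), so problems the model
    always solves are never drawn.
    """
    weights = [1.0 - s for s in success_rates]
    return rng.choices(problems, weights=weights, k=k)


# Hypothetical example: three responses of different lengths to one prompt.
print(length_rewards([100, 200, 300], [True, True, False]))  # [0.5, 0.0, -0.5]

# Hypothetical example: problem "b" is never solved, "a" always is.
rng = random.Random(0)
print(prioritized_sample(["a", "b"], [1.0, 0.0], k=3, rng=rng))  # ['b', 'b', 'b']
```

In practice the length reward would be added to (or combined with) the correctness reward, so that among correct answers the shorter ones are preferred.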

Evaluation & Iteration: Continuous evaluation against benchmarks guides iterative model updates.

Kimi k1.5 System Overview & Partial Rollout Diagrams:

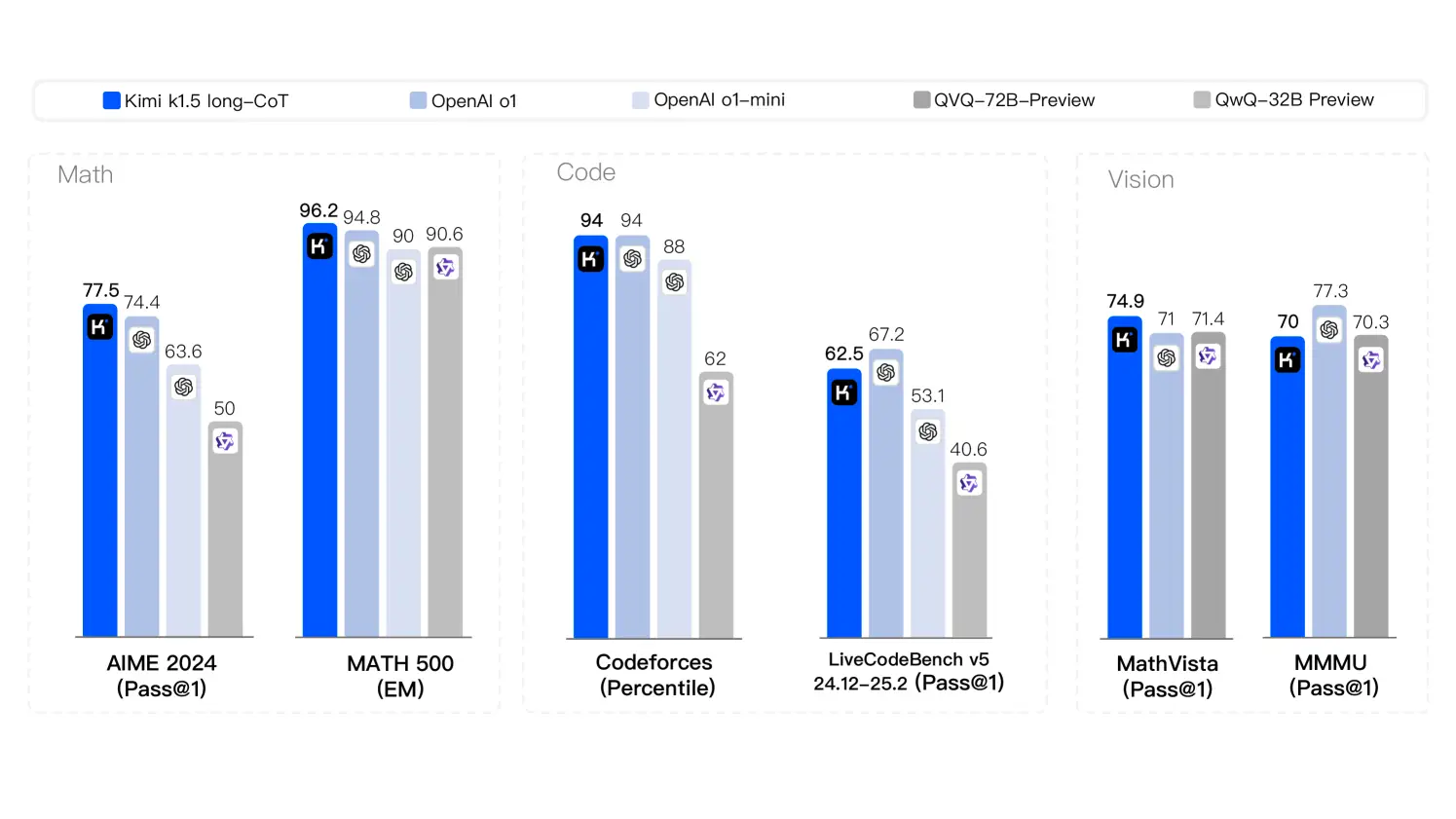

Kimi k1.5 Benchmarks

Kimi k1.5 demonstrates state-of-the-art performance across diverse tasks:

Reasoning Strategies Diagram:

Kimi k1.5 Key Innovations

Kimi k1.5 vs. DeepSeek R1

Kimi k1.5 and DeepSeek R1 take different approaches to building reasoning LLMs. Kimi k1.5 is distinguished by its streamlined RL framework, its multimodal training, and its long-context handling. These differences matter most on complex, context-heavy tasks.

Accessing Kimi k1.5 via API

API access requires registering on Kimi's management console. The following Python snippet shows an example API call:

```python
# ... (API key setup and message preparation) ...

stream = client.chat.completions.create(
    model="kimi-k1.5-preview",
    messages=messages,
    temperature=0.3,
    stream=True,
    max_tokens=8192,
)

# ... (streaming response handling) ...
```

Conclusion

Kimi k1.5 represents a significant advancement in generative AI reasoning, simplifying RL design while achieving state-of-the-art results. Its innovations in context scaling and multimodal data handling position it as a leading model with broad implications across various industries.
