Unlocking RAG's Potential with ModernBERT

ModernBERT: A Powerful and Efficient NLP Model

ModernBERT is a modernized successor to the original BERT encoder, offering better performance and efficiency across many natural language processing (NLP) tasks. It combines recent architectural improvements with updated training methods, giving machine learning developers a more capable encoder. Its context length of 8,192 tokens, 16 times BERT's 512-token limit, lets it handle tasks such as long-document retrieval and code understanding that shorter-context encoders must split into pieces. Together with faster inference and lower memory usage, this makes ModernBERT a strong choice for NLP applications ranging from search engines to AI-powered coding environments.

Key Features and Advancements

ModernBERT's gains over BERT come from several key changes:

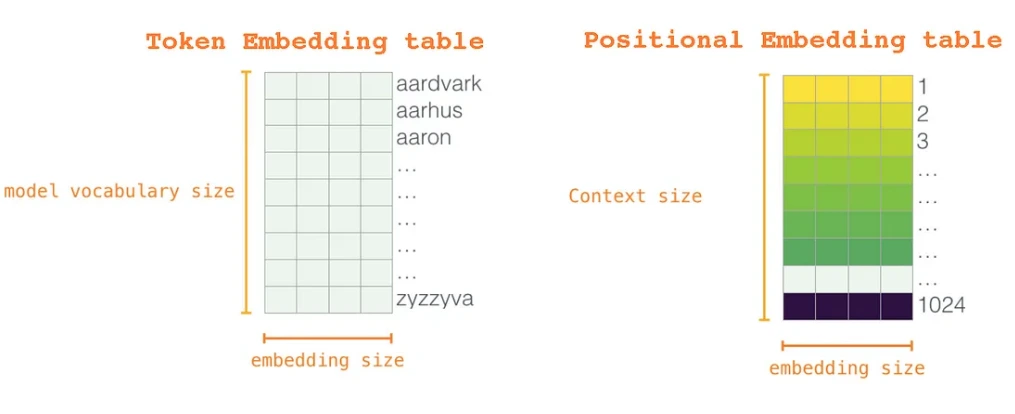

- Rotary Positional Embeddings (RoPE): Replace BERT's learned absolute positional embeddings. RoPE encodes position by rotating query and key vectors, so attention scores depend on the relative distance between tokens, which helps the model scale to longer sequences (up to 8,192 tokens). Absolute positional embeddings, by contrast, are learned only up to a fixed maximum length (512 in BERT) and do not extend beyond it.

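- GeGLU Activation Function: A gated linear unit (GLU) variant that uses GELU (Gaussian Error Linear Unit) as its gate: one linear projection of the input is passed through GELU and multiplied element-wise with a second projection. The gate gives the feed-forward layers finer control over information flow than BERT's plain GELU.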

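- Alternating Attention Mechanism: Every third layer uses global attention over the full sequence, while the remaining layers use local sliding-window attention in which each token attends only to its 128 nearest tokens. Because the cost of local attention grows linearly rather than quadratically with sequence length, this speeds up processing of long inputs, while the global layers preserve long-range context.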

- Flash Attention 2 Integration: Computes attention in tiles without materializing the full attention matrix, which reduces memory usage and speeds up processing, particularly for long sequences.

- Extensive Training Data: Trained on 2 trillion tokens from diverse sources, including code and scientific literature, which contributes to its strong performance on code-related tasks.
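To make the RoPE bullet above concrete, here is a minimal pure-Python sketch of the rotation RoPE applies to a query or key vector. It rotates each consecutive pair of dimensions by an angle proportional to the token's position, then checks the key property: the query/key dot product depends only on the distance between the two positions, not on where they sit in the sequence. The function names and toy vectors are illustrative, and `base=10000` is the value from the original RoPE formulation; ModernBERT's own configuration may use different values.

```python
import math


def rope_rotate(vec, position, base=10000.0):
    """Apply a rotary positional encoding to one query or key vector.

    Each consecutive pair (vec[i], vec[i + 1]) is rotated by the angle
    position * base ** (-i / d), so low dimensions rotate quickly and
    high dimensions rotate slowly.
    """
    d = len(vec)
    if d % 2 != 0:
        raise ValueError("RoPE needs an even vector dimension")
    out = []
    for i in range(0, d, 2):
        theta = position * base ** (-i / d)
        cos_t, sin_t = math.cos(theta), math.sin(theta)
        x0, x1 = vec[i], vec[i + 1]
        # Standard 2-D rotation of the pair (x0, x1) by theta.
        out.append(x0 * cos_t - x1 * sin_t)
        out.append(x0 * sin_t + x1 * cos_t)
    return out


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


q = [0.3, -1.2, 0.8, 0.5]  # toy query vector
k = [1.0, 0.4, -0.7, 0.2]  # toy key vector

# Same offset (3 positions) at two very different absolute positions.
near = dot(rope_rotate(q, 5), rope_rotate(k, 2))
far = dot(rope_rotate(q, 105), rope_rotate(k, 102))
print(math.isclose(near, far, abs_tol=1e-9))  # True: the score depends only on the offset
```

Because each pair is only rotated, vector norms are unchanged; position enters the attention score purely through the angle between rotated queries and keys.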
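The GeGLU bullet can be sketched the same way. The block below is a minimal illustration of the gating formula GeGLU(x) = GELU(W_gate x) multiplied element-wise by (W_value x), using plain Python lists. The weight matrices are hypothetical toy values, and the helper names are ours; a real feed-forward layer would follow this step with a down projection back to the model width.

```python
import math


def gelu(x):
    """Exact GELU: x * Phi(x), where Phi is the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def matvec(matrix, vec):
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]


def geglu(x, w_gate, w_value):
    """GeGLU(x) = GELU(W_gate x) * (W_value x), element-wise.

    The GELU branch acts as a smooth gate that scales the linear value branch.
    """
    gate = [gelu(g) for g in matvec(w_gate, x)]
    value = matvec(w_value, x)
    return [g * v for g, v in zip(gate, value)]


# Hypothetical toy weights: 3 input features -> 2 hidden units.
w_gate = [[1.0, 0.0, 0.0],
          [0.0, -1.0, 0.0]]
w_value = [[2.0, 0.0, 0.0],
           [0.0, 0.0, 3.0]]
x = [1.5, 2.0, 1.0]
print(geglu(x, w_gate, w_value))
```

Both hidden units receive the same value (3.0). The first unit's gate is positive (GELU(1.5) is about 1.40), so its value is kept and scaled up; the second unit's gate pre-activation is -2.0, and GELU(-2.0) is about -0.05, which nearly zeroes that unit's output.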
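The cost argument behind alternating attention can be checked with simple counting. This sketch assumes the schedule described above, with global attention in layers 0, 3, 6, ... and a 128-token local window (taken here as 64 tokens on each side of the query), plus a 22-layer model, which we understand to be ModernBERT-base's depth. All of these are parameters, and `keys_visible` and `attention_pairs` are hypothetical helpers, not part of any library. The code counts how many query/key pairs are scored across all layers, a rough proxy for attention compute.

```python
def keys_visible(i, seq_len, layer_idx, window=128, global_every=3):
    """Number of key positions that query token i can attend to in one layer.

    Assumed schedule: layers 0, 3, 6, ... are global; all others use a
    sliding window of `window` tokens centred on the query (half on each side).
    """
    if layer_idx % global_every == 0:
        return seq_len  # global layer: the whole sequence
    half = window // 2
    return min(seq_len - 1, i + half) - max(0, i - half) + 1


def attention_pairs(seq_len, num_layers, window=128, global_every=3):
    """Total (query, key) pairs scored across all layers: a rough proxy for attention cost."""
    return sum(
        keys_visible(i, seq_len, layer, window, global_every)
        for layer in range(num_layers)
        for i in range(seq_len)
    )


seq_len, num_layers = 8192, 22               # full context; 22 layers assumed for the base model
all_global = seq_len * seq_len * num_layers  # every layer attends to every token
alternating = attention_pairs(seq_len, num_layers)
print(f"all-global:  {all_global:,} pairs")
print(f"alternating: {alternating:,} pairs ({alternating / all_global:.1%} of all-global)")
```

Under these assumptions the 14 local layers contribute only about 15 million of roughly 552 million scored pairs, so total attention work falls to about 37% of an all-global model; the remaining cost comes almost entirely from the global layers.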

ModernBERT vs. BERT: A Comparison

| Feature | ModernBERT | BERT |
|---|---|---|
| Context Length | 8,192 tokens | 512 tokens |
| Positional Embeddings | Rotary Positional Embeddings (RoPE) | Learned absolute positional embeddings |
| Activation Function | GeGLU | GELU |
| Training Data | 2 trillion tokens (diverse sources including code) | BooksCorpus and English Wikipedia (about 3.3 billion words) |
| Model Sizes | Base (149M parameters), Large (395M parameters) | Base (110M parameters), Large (340M parameters) |
| Speed & Efficiency | Faster training and inference | Slower, especially with longer sequences |
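The context-length row has a practical consequence for long-document retrieval: a document longer than the encoder's window must be split into overlapping chunks, each embedded separately. The hypothetical helper below counts the chunks needed at a fixed stride. The 64-token overlap is an arbitrary illustrative choice, and the count ignores the few positions taken by special tokens such as [CLS] and [SEP].

```python
import math


def num_chunks(doc_tokens, window, overlap=0):
    """Number of fixed-size windows needed to cover a document.

    Windows start every (window - overlap) tokens; the last one may be partial.
    """
    if not 0 <= overlap < window:
        raise ValueError("overlap must be in [0, window)")
    if doc_tokens <= window:
        return 1
    stride = window - overlap
    return math.ceil((doc_tokens - window) / stride) + 1


for tokens in (400, 6_000, 20_000):
    bert = num_chunks(tokens, window=512, overlap=64)
    modern = num_chunks(tokens, window=8_192, overlap=64)
    print(f"{tokens:>6,} tokens: BERT {bert:>2} chunks, ModernBERT {modern} chunk(s)")
```

For a 6,000-token document, for instance, a 512-token window with 64 tokens of overlap needs 14 chunks, while an 8,192-token window covers it in one, so the whole document can be embedded with its full context intact.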

Practical Applications

ModernBERT is well suited to a range of applications:

- Long-Document Retrieval: Well suited to long documents such as legal texts or scientific papers, which fit into far fewer 8,192-token windows than 512-token ones.

- Hybrid Semantic Search: Enhances search engines by understanding both text and code queries.

- Contextual Code Analysis: Facilitates tasks such as bug detection and code optimization.

- Code Retrieval: Well suited to AI-powered IDEs and code indexing solutions.

- Retrieval Augmented Generation (RAG) Systems: Serves as the retriever, supplying relevant context that helps the generator produce more accurate and relevant responses.
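In a RAG system, the retrieval step ranks stored document embeddings by their similarity to the query embedding. The sketch below shows that ranking with cosine similarity and a brute-force loop. The three-dimensional vectors and document names are hypothetical stand-ins for real ModernBERT embeddings, which have hundreds of dimensions, and a vector database such as Weaviate performs the same kind of search with an index rather than scanning every document.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec, doc_vecs, k=2):
    """Return (doc_id, score) pairs for the k documents most similar to the query."""
    scored = [(doc_id, cosine(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical toy embeddings standing in for encoder output.
docs = {
    "contract_clause": [0.9, 0.1, 0.0],
    "python_snippet": [0.1, 0.9, 0.2],
    "lab_report": [0.2, 0.1, 0.9],
}
query = [0.15, 0.85, 0.3]  # e.g. an embedded code question
print(top_k(query, docs, k=2))
```

The query vector points mostly along the same axis as `python_snippet`, so that document ranks first; in a real pipeline the top-ranked passages become the context handed to the generator.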

Python Implementation (RAG System Example)

This section outlines a simplified RAG system that uses ModernBERT to generate embeddings and Weaviate to store and retrieve them. It requires several libraries, a Hugging Face account with an access token, a suitable dataset, and an OpenAI API key for the generation step. The complete code is omitted here for brevity; its role is to show how ModernBERT handles embedding generation and retrieval within a RAG pipeline.
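Since the pipeline code is not shown, here is a hedged sketch of two steps around retrieval, written in plain Python so it runs without any libraries. First, an encoder like ModernBERT outputs one vector per token, so a single document embedding needs a pooling step; mean pooling over non-padding tokens is assumed here, and the original pipeline may pool differently. With Hugging Face `transformers`, the per-token vectors would come from `last_hidden_state` of `AutoModel.from_pretrained("answerdotai/ModernBERT-base")` and the mask from the tokenizer's `attention_mask`. Second, the retrieved passages are assembled into a prompt for the generator; the template is a hypothetical example.

```python
def mean_pool(token_vectors, attention_mask):
    """Average the vectors of real tokens (mask == 1), ignoring padding (mask == 0)."""
    dim = len(token_vectors[0])
    summed = [0.0] * dim
    count = 0
    for vec, keep in zip(token_vectors, attention_mask):
        if keep:
            count += 1
            for d in range(dim):
                summed[d] += vec[d]
    if count == 0:
        raise ValueError("attention_mask contains no real tokens")
    return [s / count for s in summed]


def build_prompt(question, passages):
    """Assemble retrieved passages and the question into one prompt (hypothetical template)."""
    context = "\n\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )


# Toy per-token vectors standing in for model output; the last row is padding.
tokens = [[1.0, 2.0], [3.0, 4.0], [9.0, 9.0]]
mask = [1, 1, 0]
print(mean_pool(tokens, mask))  # [2.0, 3.0]
print(build_prompt("What does RoPE encode?", ["RoPE rotates query and key vectors by position."]))
```

Masking matters because padded batches would otherwise pull every embedding toward the padding vectors; numbering the passages lets the generator cite which context it used.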

Conclusion

ModernBERT represents a substantial advance in encoder models, combining stronger performance with improved efficiency. Its ability to handle long sequences and its diverse training data make it a versatile tool for many applications, and techniques such as RoPE, GeGLU, and alternating attention make it a strong candidate for complex NLP and code-related tasks.
