What is Deep Learning? A Tutorial for Beginners

Deep Learning Demystified: A Comprehensive Guide

Deep learning, a powerful subset of machine learning, empowers computers to learn from examples, mirroring human learning. Imagine teaching a computer to identify cats – instead of explicitly defining features, you show it countless cat images. The computer autonomously identifies common patterns and learns to recognize cats. This is the core principle of deep learning.

Technically, deep learning leverages artificial neural networks, inspired by the human brain's structure. These networks comprise interconnected nodes (neurons) arranged in layers, processing information sequentially. The more layers, the "deeper" the network, enabling the learning of increasingly complex patterns and the execution of sophisticated tasks.

The Brain-Inspired Architecture of Neural Networks

From Machine Learning to Deep Learning: A Paradigm Shift

Machine learning, itself a branch of artificial intelligence (AI), enables computers to learn from data and make decisions without being explicitly programmed for each task. It encompasses various techniques that allow systems to recognize patterns, predict outcomes, and improve performance over time. Deep learning extends machine learning by automating steps, such as feature extraction, that previously required human expertise.

Deep learning distinguishes itself through the use of neural networks with multiple layers (a common rule of thumb is three or more). These networks are loosely modeled on the human brain and learn from vast datasets.

The Crucial Role of Feature Engineering

Feature engineering involves selecting, transforming, or creating the most relevant variables (features) from raw data for use in machine learning models. For instance, in weather prediction, raw data might include temperature, humidity, and wind speed. Feature engineering determines which variables are most predictive and transforms them (e.g., converting Fahrenheit to Celsius) for optimal model performance.
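As a rough illustration of the transformations described above, the sketch below converts temperatures from Fahrenheit to Celsius and then standardizes them. The function names and the sample readings are made up for this example; real pipelines usually rely on a library rather than hand-written helpers.

```python
def fahrenheit_to_celsius(temp_f):
    """Convert a temperature from Fahrenheit to Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0


def standardize(values):
    """Rescale values to zero mean and unit variance (z-scores)."""
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    std = variance ** 0.5
    return [(v - mean) / std for v in values]


# Hypothetical raw readings (made-up numbers, for illustration only).
raw_temps_f = [50.0, 68.0, 86.0]

temps_c = [fahrenheit_to_celsius(t) for t in raw_temps_f]
temps_scaled = standardize(temps_c)

print(temps_c)
print(temps_scaled)
```

Standardizing puts features measured on different scales (temperature, humidity, wind speed) on a comparable footing, which many models handle better than raw values.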

Traditional machine learning often necessitates manual and time-consuming feature engineering, requiring domain expertise. A key advantage of deep learning is its ability to automatically learn relevant features from raw data, minimizing manual intervention.

The Significance of Deep Learning

Deep learning's dominance stems from several key advantages:

- Unstructured Data Handling: Deep learning models can work directly with unstructured data such as images, audio, and text, whereas many traditional models expect structured, tabular input. This saves time and resources that would otherwise go into standardizing the data.

- Large Data Processing: GPUs enable deep learning models to process massive datasets at remarkable speeds.

- High Accuracy: Deep learning often achieves state-of-the-art accuracy in computer vision, natural language processing (NLP), and audio processing, provided enough training data is available.

- Automated Pattern Recognition: Unlike many models requiring human intervention, deep learning models automatically detect diverse patterns.

This guide delves into deep learning's core concepts, preparing you for a career in AI. For practical exercises, consider our "Introduction to Deep Learning in Python" course.

Fundamental Deep Learning Concepts

Before exploring deep learning algorithms and applications, understanding its foundational concepts is crucial. This section introduces the building blocks: neural networks, deep neural networks, and activation functions.

Neural Networks

Deep learning's core is the artificial neural network, a computational model inspired by the human brain. These networks consist of interconnected nodes ("neurons") that collaboratively process information and make decisions. Similar to the brain's specialized regions, neural networks have layers dedicated to specific functions.

Deep Neural Networks

A "deep" neural network is distinguished by its multiple layers between input and output. This depth allows for the learning of highly complex features and more accurate predictions. The depth is the source of deep learning's name and its power in solving intricate problems.

Activation Functions

Activation functions act as decision-makers in a neural network, determining how strongly each neuron's signal passes to the next layer. These functions introduce non-linearity, which is what allows the network to learn complex relationships in data rather than only straight-line ones.

How Deep Learning Functions

Deep learning uses feature extraction to recognize the features that examples with the same label have in common, and it learns decision boundaries that separate one class from another. In a cat/dog classifier, the model extracts features such as eye shape, face structure, and body shape, then uses them to assign each image to a class.

Deep learning models are built on deep neural networks. A simple neural network has an input layer, a hidden layer, and an output layer. Deep learning models have multiple hidden layers, which lets them learn more complex patterns, although adding layers does not by itself guarantee higher accuracy.

A Simple Neural Network Illustration

The input layer receives raw data and passes it to the nodes of the hidden layer. Hidden layers transform the data step by step into representations that are increasingly useful for predicting the target. The output layer uses the final hidden representation to select the most probable label.
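The flow from input layer to hidden layer to output layer can be sketched in a few lines of plain Python. This is a minimal illustration, not a trained model: the weights and biases below are hand-picked, hypothetical values, and the helper names (`dense`, `forward`) are chosen only for this example.

```python
import math


def relu(x):
    """Rectified linear unit: pass positive values, zero out negatives."""
    return max(0.0, x)


def sigmoid(x):
    """Squash a number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(weights . inputs + bias) per neuron."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(total))
    return outputs


# Hypothetical, hand-picked parameters (a trained network would learn these).
hidden_weights = [[0.5, -0.5], [1.0, 1.0]]   # 2 inputs -> 2 hidden neurons
hidden_biases = [0.0, -1.0]
output_weights = [[1.0, -1.0]]               # 2 hidden neurons -> 1 output
output_biases = [0.0]


def forward(inputs):
    """Input layer -> hidden layer (ReLU) -> output layer (sigmoid)."""
    hidden = dense(inputs, hidden_weights, hidden_biases, relu)
    output = dense(hidden, output_weights, output_biases, sigmoid)
    return output[0]


probability = forward([2.0, 1.0])
print(round(probability, 4))  # -> 0.1824
```

In a binary classifier, the sigmoid output can be read as the probability of one of the two labels. Frameworks such as TensorFlow, Keras, and PyTorch provide equivalent layers with learned weights.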

Artificial Intelligence, Machine Learning, and Deep Learning: The Hierarchy

Addressing a common question: Is deep learning a form of artificial intelligence? The answer is yes. Deep learning is a subset of machine learning, which in turn is a subset of AI.

The Relationship Between AI, ML, and DL

AI aims to create intelligent machines that mimic or surpass human intelligence. AI systems use machine learning and deep learning methods to accomplish tasks that humans would otherwise perform. Deep learning is not a single algorithm but a family of neural-network methods, and it underlies many of today's most capable AI systems.

Applications of Deep Learning

Deep learning powers numerous applications, from Netflix movie recommendations to Amazon warehouse management systems.

Computer Vision

Computer vision (CV) is used in self-driving cars for object detection and collision avoidance, as well as face recognition, pose estimation, image classification, and anomaly detection.

Face Recognition Powered by Deep Learning

Automatic Speech Recognition (ASR)

ASR is ubiquitous in smartphones, where assistants are activated by voice commands like "Hey, Google" or "Hi, Siri." Related deep learning tasks in speech and audio include text-to-speech, audio classification, and voice activity detection.

Speech Pattern Recognition

Generative AI

Generative AI creates synthetic art, text, video, and music. Examples include generated artwork such as the CryptoPunks NFTs and OpenAI's GPT-4 model, which powers ChatGPT.

Generative Art

Translation

Deep learning facilitates language translation, photo-to-text translation (OCR), and text-to-image translation.

Language Translation

Time Series Forecasting

Deep learning is used to forecast market crashes, stock prices, and weather patterns, which makes it valuable to finance and other industries, although such forecasts remain uncertain.

Time Series Forecasting

Automation and Robotics

Deep learning automates tasks, such as warehouse management and robotic control, even enabling AI to outperform human players in video games.

Robotic Arm Controlled by Deep Learning

Customer Feedback Analysis

Deep learning processes customer feedback and powers chatbot applications for seamless customer service.

Customer Feedback Analysis

Biomedical Applications

Deep learning aids in cancer detection, drug development, anomaly detection in medical imaging, and medical equipment assistance.

Analyzing DNA Sequences

Deep Learning Models: A Taxonomy

This section explores various deep learning models and their functionalities.

Supervised Learning

Supervised learning uses labeled datasets to train models for classification or prediction. The dataset includes features and target labels, allowing the algorithm to learn by minimizing the difference between predicted and actual labels. This includes classification and regression problems.

Classification

Classification algorithms categorize data based on extracted features. Examples include ResNet50 (image classification) and BERT (text classification).

Classification

Regression

Regression models predict continuous outcomes by learning the relationship between input and output variables. They are used for predictive analysis, weather forecasting, and stock market prediction. RNNs and LSTMs are commonly used for regression on sequential data such as time series.

Linear Regression

Unsupervised Learning

Unsupervised learning algorithms identify patterns in unlabeled datasets and group similar items into clusters. Deep learning models learn these hidden patterns without human-provided labels, and they are often used in recommendation systems. Applications include species grouping, medical imaging, and market research. Deep embedded clustering is one such model.
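To make the idea of grouping unlabeled data concrete, here is a minimal sketch of k-means clustering on one-dimensional points. Note the substitution: k-means is a classical algorithm, not a deep learning model such as deep embedded clustering, and it is used here only because it fits in a few lines. The data points and starting centroids are made up for the example.

```python
def k_means_1d(points, centroids, iterations=10):
    """Cluster 1-D points by alternating assignment and centroid updates."""
    clusters = []
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for point in points:
            nearest = min(range(len(centroids)),
                          key=lambda i: abs(point - centroids[i]))
            clusters[nearest].append(point)
        # Update step: move each centroid to the mean of its cluster.
        centroids = [
            sum(cluster) / len(cluster) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return centroids, clusters


# Hypothetical unlabeled data with two obvious groups.
points = [1.0, 1.2, 0.8, 5.0, 5.2, 4.8]
centroids, clusters = k_means_1d(points, centroids=[1.0, 4.8])

print(centroids)
print(clusters)
```

No labels are supplied at any point; the two groups emerge purely from the distances between points.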

Clustering of Data

Reinforcement Learning (RL)

RL involves agents learning behaviors from an environment through trial and error, maximizing rewards. RL is used in automation, self-driving cars, game playing, and rocket landing.
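The trial-and-error loop can be sketched with tabular Q-learning on a toy problem. Everything below is an assumption made for illustration: a four-state corridor where the agent starts at state 0 and earns a reward of 1 for reaching state 3, purely random exploration, and hand-picked values for the learning rate and discount factor. Deep reinforcement learning replaces the table with a neural network.

```python
import random

N_STATES = 4         # states 0..3; state 3 is the goal
ACTIONS = [-1, +1]   # action index 0 = move left, index 1 = move right


def step(state, action_index):
    """Toy environment: move along the corridor, reward 1.0 at the goal."""
    next_state = min(max(state + ACTIONS[action_index], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def train_q_table(episodes=500, alpha=0.5, gamma=0.9, max_steps=100, seed=0):
    """Tabular Q-learning with a purely random behavior policy."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            action = rng.randrange(2)  # explore at random (off-policy)
            next_state, reward, done = step(state, action)
            # Q-learning target: reward plus discounted best future value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


q_table = train_q_table()
# Greedy action for each non-terminal state (1 means "move right").
greedy_policy = [row.index(max(row)) for row in q_table[:-1]]
print(greedy_policy)  # -> [1, 1, 1]
```

Even though the agent acts randomly while learning, the learned values favor moving right in every state, because that path maximizes the discounted reward.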

Reinforcement Learning Framework

Generative Adversarial Networks (GANs)

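GANs train two neural networks against each other: a generator that produces synthetic samples and a discriminator that tries to tell them apart from real data. Over time the generator learns to produce samples that resemble the training data. GANs are used for generating synthetic art, video, music, and text.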

Generative Adversarial Network Framework

Graph Neural Networks (GNNs)

GNNs operate directly on graph structures (nodes connected by edges). They are used in large dataset analysis, recommendation systems, and computer vision, for tasks such as node classification, link prediction, and clustering.
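A core idea behind many GNNs is message passing: each node updates its features using the features of its neighbors. The sketch below shows one heavily simplified step that averages a node's own feature with those of its neighbors. The graph, the feature values, and the function name are hypothetical, and real GNN layers additionally apply learned weights and a non-linearity.

```python
def mean_aggregate(features, neighbors):
    """One simplified message-passing step: average self and neighbor features."""
    updated = []
    for node, own_feature in enumerate(features):
        messages = [features[n] for n in neighbors[node]] + [own_feature]
        updated.append(sum(messages) / len(messages))
    return updated


# Hypothetical path graph: 0 -- 1 -- 2, with one scalar feature per node.
neighbors = {0: [1], 1: [0, 2], 2: [1]}
features = [1.0, 2.0, 3.0]

updated_features = mean_aggregate(features, neighbors)
print(updated_features)  # -> [1.5, 2.0, 2.5]
```

Stacking several such steps lets information travel further across the graph, one hop per layer.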

A Directed Graph

A Graph Network

Natural Language Processing (NLP) and Deep Learning

NLP uses deep learning to enable computers to understand human language in the form of text and speech. Transfer learning enhances NLP by taking a model pretrained on large amounts of general text and fine-tuning it on a small amount of task-specific data to achieve high performance.

Subcategories of NLP

Advanced Deep Learning Concepts

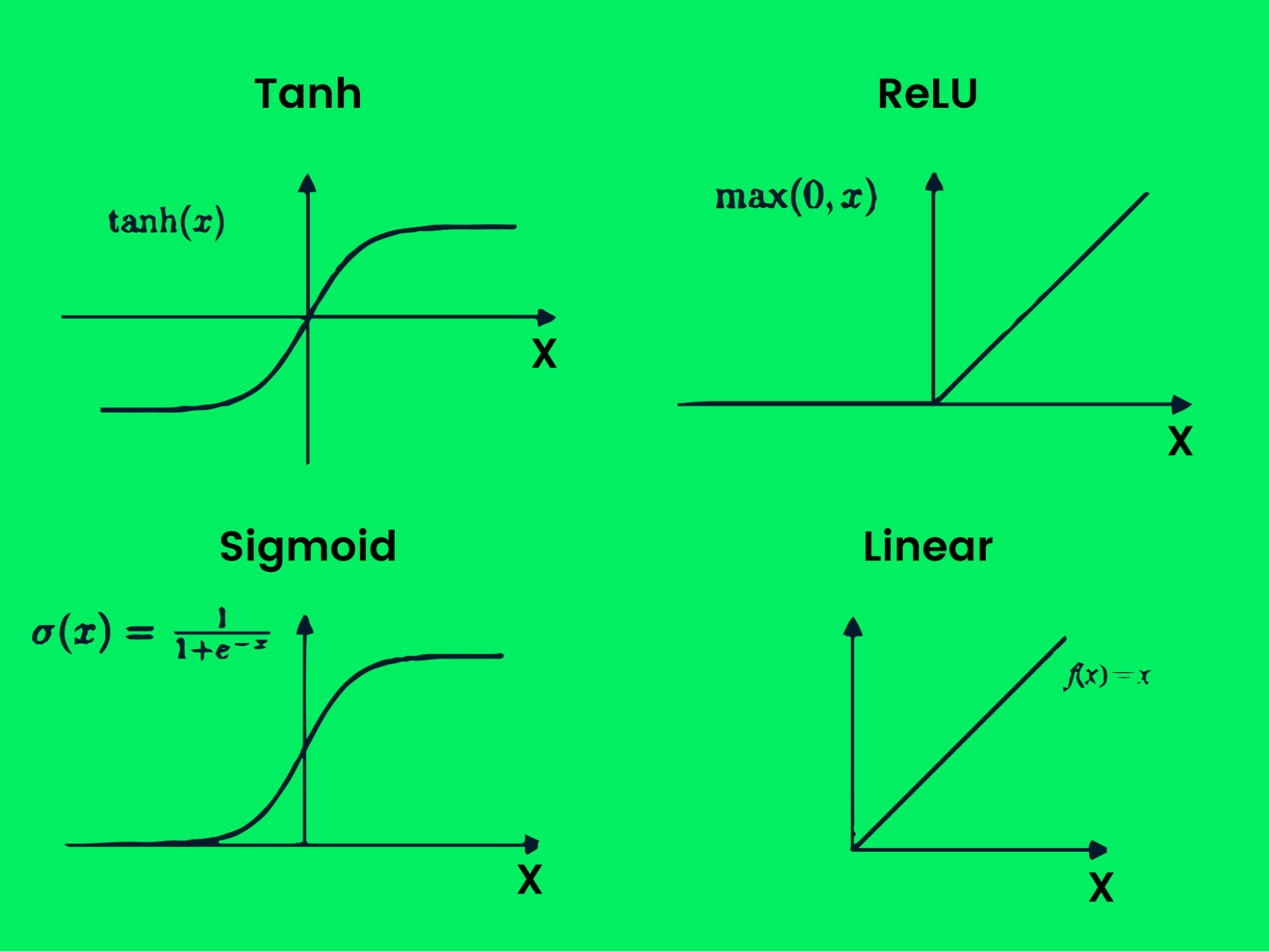

Activation Functions

Activation functions transform a neuron's weighted input into its output. They introduce non-linearity, which lets networks learn non-linear decision boundaries. Examples include Tanh, ReLU, Sigmoid, Linear, Softmax, and Swish.
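Several of the activation functions named above are short enough to write out directly. The definitions below follow the standard formulas and use only Python's `math` module; deep learning frameworks ship optimized versions of the same functions.

```python
import math


def sigmoid(x):
    """Map any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x):
    """Map any real number into (-1, 1)."""
    return math.tanh(x)


def relu(x):
    """Keep positive values, replace negatives with zero."""
    return max(0.0, x)


def swish(x):
    """Swish: the input scaled by its own sigmoid."""
    return x * sigmoid(x)


def softmax(values):
    """Turn a list of scores into probabilities that sum to 1."""
    largest = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - largest) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


probabilities = softmax([1.0, 2.0, 3.0])
print(sigmoid(0.0), relu(-3.0), swish(0.0))
print(probabilities)
```

Sigmoid and softmax are typical choices for output layers in classification, while ReLU is a common default for hidden layers.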

Activation Function Graph

Loss Function

The loss function measures the difference between actual and predicted values, tracking model performance. Examples include binary cross-entropy, categorical hinge, mean squared error, Huber, and sparse categorical cross-entropy.
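Two of the losses listed above, mean squared error and binary cross-entropy, can be written in a few lines. This is a plain-Python sketch for illustration; the small `eps` clipping constant is an assumption added to avoid taking the logarithm of zero.

```python
import math


def mean_squared_error(y_true, y_pred):
    """Average squared difference between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Average negative log-likelihood for 0/1 labels and predicted probabilities."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip to keep log() finite
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(y_true)


print(mean_squared_error([1.0, 2.0], [1.0, 4.0]))             # -> 2.0
print(binary_cross_entropy([1, 0], [0.9, 0.1]))               # confident and correct
print(binary_cross_entropy([1, 0], [0.1, 0.9]))               # confident and wrong
```

The second cross-entropy value is much larger than the first, which reflects how this loss penalizes confident wrong predictions heavily.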

Backpropagation

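Backpropagation computes the gradient of the loss function with respect to each weight by applying the chain rule backward through the network, from the output layer to the input layer. An optimizer then uses these gradients to adjust the weights and reduce the loss, improving model accuracy.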
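The chain rule at the heart of backpropagation can be traced by hand on a tiny network. The sketch below assumes a hypothetical two-weight model, h = tanh(w1 * x) followed by y = w2 * h, with a squared-error loss, and checks the hand-derived gradients against a numerical estimate. The weights, input, and target are arbitrary illustrative values.

```python
import math


def forward(w1, w2, x):
    """Tiny network: hidden h = tanh(w1 * x), output y = w2 * h."""
    h = math.tanh(w1 * x)
    y = w2 * h
    return h, y


def loss_fn(w1, w2, x, target):
    """Squared error between the network output and the target."""
    _, y = forward(w1, w2, x)
    return (y - target) ** 2


def backward(w1, w2, x, target):
    """Apply the chain rule from the loss back to each weight."""
    h, y = forward(w1, w2, x)
    dloss_dy = 2.0 * (y - target)               # d(loss)/d(y)
    dloss_dw2 = dloss_dy * h                    # y = w2 * h
    dloss_dh = dloss_dy * w2                    # pass gradient to hidden unit
    dloss_dw1 = dloss_dh * (1.0 - h ** 2) * x   # tanh'(z) = 1 - tanh(z)^2
    return dloss_dw1, dloss_dw2


def numerical_gradient(w1, w2, x, target, eps=1e-6):
    """Central-difference estimate of the same gradients, used as a check."""
    g1 = (loss_fn(w1 + eps, w2, x, target) - loss_fn(w1 - eps, w2, x, target)) / (2 * eps)
    g2 = (loss_fn(w1, w2 + eps, x, target) - loss_fn(w1, w2 - eps, x, target)) / (2 * eps)
    return g1, g2


analytic = backward(0.5, -1.5, 2.0, 1.0)
numeric = numerical_gradient(0.5, -1.5, 2.0, 1.0)
print(analytic)
print(numeric)
```

The two results agree to several decimal places. Frameworks automate exactly this bookkeeping for networks with millions of weights.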

Stochastic Gradient Descent

Stochastic gradient descent minimizes the loss function by iteratively updating the weights using gradients computed on small random batches of samples rather than on the full dataset, which makes each update much cheaper.
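As a minimal sketch of mini-batch stochastic gradient descent, the code below fits a line y = w * x + b to synthetic data generated from y = 2x + 1. The data, the function name, and the default settings (learning rate 0.1, batch size 4, 200 epochs) are assumptions chosen for this illustration.

```python
import random


def sgd_linear_regression(xs, ys, learning_rate=0.1, batch_size=4,
                          epochs=200, seed=0):
    """Fit y = w * x + b by mini-batch SGD on the mean squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the samples in a new random order
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            grad_w, grad_b = 0.0, 0.0
            for i in batch:
                error = (w * xs[i] + b) - ys[i]
                grad_w += 2.0 * error * xs[i]   # d(error^2)/dw
                grad_b += 2.0 * error           # d(error^2)/db
            # Step against the average gradient of this batch.
            w -= learning_rate * grad_w / len(batch)
            b -= learning_rate * grad_b / len(batch)
    return w, b


# Synthetic, noise-free data from the line y = 2x + 1.
xs = [i / 10 for i in range(20)]
ys = [2.0 * x + 1.0 for x in xs]

w, b = sgd_linear_regression(xs, ys)
print(round(w, 3), round(b, 3))  # -> 2.0 1.0
```

Each update looks at only four samples, yet the parameters still settle on the line that generated the data.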

Hyperparameters

Hyperparameters are settings chosen before training rather than learned from the data, such as learning rate, batch size, and number of epochs. They strongly affect model performance and are usually tuned by experiment.
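The effect of one hyperparameter, the learning rate, can be seen on a toy problem. The sketch below runs gradient descent on f(w) = w^2, whose minimum is at w = 0; the function, starting point, and the two learning rates are arbitrary choices for illustration.

```python
def gradient_descent(learning_rate, steps=50, start=5.0):
    """Minimize f(w) = w**2 by gradient descent; the gradient is 2 * w."""
    w = start
    for _ in range(steps):
        gradient = 2.0 * w
        w -= learning_rate * gradient
    return w


w_small_lr = gradient_descent(learning_rate=0.1)   # shrinks toward 0
w_large_lr = gradient_descent(learning_rate=1.1)   # overshoots and diverges

print(w_small_lr)
print(w_large_lr)
```

With the smaller learning rate the value approaches the minimum, while the larger one overshoots on every step and moves further away, which is why the learning rate is usually among the first hyperparameters to tune.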

Popular Deep Learning Algorithms

Convolutional Neural Networks (CNNs)

CNNs are designed for grid-like data such as images. They slide small learned filters across the input to detect local patterns, which makes them excel at visual pattern recognition.
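The core operation of a CNN, sliding a small filter over an image, can be sketched in plain Python. The image and the edge-detecting kernel below are made up for the example, and the function implements what deep learning libraries call convolution (technically cross-correlation) without padding or stride.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image (no padding, stride 1)."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    output = []
    for row in range(out_h):
        output_row = []
        for col in range(out_w):
            total = 0.0
            for i in range(kernel_h):
                for j in range(kernel_w):
                    total += image[row + i][col + j] * kernel[i][j]
            output_row.append(total)
        output.append(output_row)
    return output


# Hypothetical 3x4 image: dark (0) on the left, bright (1) on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hypothetical kernel that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d_valid(image, kernel)
print(feature_map)  # -> [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The output is non-zero only where the edge sits. In a real CNN the kernel values are learned during training rather than written by hand.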

Convolutional Neural Network Architecture

Recurrent Neural Networks (RNNs)

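RNNs handle sequential data by carrying a hidden state from one time step to the next, so each output depends on both the current input and what came before. This makes them useful for time series analysis and NLP.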
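A minimal sketch of the recurrence, assuming a single scalar hidden state and hand-picked weights (`w_input`, `w_hidden`, and `bias` are hypothetical names and values for this example):

```python
import math


def rnn_forward(inputs, w_input, w_hidden, bias, initial_state=0.0):
    """Run a one-unit RNN over a sequence and return the state at every step."""
    state = initial_state
    states = []
    for x in inputs:
        # The new state mixes the current input with the previous state.
        state = math.tanh(w_input * x + w_hidden * state + bias)
        states.append(state)
    return states


states = rnn_forward([1.0, 0.0, 0.0], w_input=1.0, w_hidden=0.5, bias=0.0)
print(states)
```

Only the first input is non-zero, yet the later states stay above zero: the network "remembers" the earlier input through its hidden state, with the memory fading at each step.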

Recurrent Neural Network Architecture

Long Short-Term Memory Networks (LSTMs)

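LSTMs are advanced RNNs that mitigate the vanishing gradient problem. They use gates to control what is written to, kept in, and read from a separate cell state, which helps them retain long-term dependencies in sequential data.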
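The gating mechanism can be sketched for a single scalar LSTM cell. The code follows the standard LSTM equations, but the parameter layout (a dictionary of `(w_x, w_h, b)` tuples) and all numeric values are assumptions made for this illustration.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, params):
    """One step of a scalar LSTM cell; params maps gate name -> (w_x, w_h, b)."""
    def gate(name, activation):
        w_x, w_h, b = params[name]
        return activation(w_x * x + w_h * h_prev + b)

    forget_gate = gate("forget", sigmoid)      # how much old memory to keep
    input_gate = gate("input", sigmoid)        # how much new content to write
    output_gate = gate("output", sigmoid)      # how much memory to expose
    candidate = gate("candidate", math.tanh)   # proposed new content

    c = forget_gate * c_prev + input_gate * candidate
    h = output_gate * math.tanh(c)
    return h, c


# Hypothetical parameters: forget gate almost fully open, input gate almost shut.
params = {
    "forget": (0.0, 0.0, 10.0),
    "input": (0.0, 0.0, -10.0),
    "output": (0.0, 0.0, 0.0),
    "candidate": (1.0, 0.0, 0.0),
}

h, c = lstm_step(x=1.0, h_prev=0.0, c_prev=0.7, params=params)
print(h, c)
```

With these settings the cell state stays very close to its previous value of 0.7 regardless of the input, which illustrates how an LSTM can carry information across many time steps.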

LSTM Architecture

Deep Learning Frameworks: A Comparison

Several deep learning frameworks exist, each with strengths and weaknesses. Here are some of the most popular:

TensorFlow (TF)

TensorFlow is an open-source library for creating deep learning applications, supporting CPU, GPU, and TPU. It includes TensorBoard for experiment analysis and integrates Keras for easier development.

Keras

Keras is a user-friendly neural network API that runs on multiple backends (including TensorFlow), facilitating rapid experimentation.

PyTorch

PyTorch is known for its flexibility and ease of use, popular among researchers. It uses tensors for fast computation and supports GPU and TPU acceleration.

Conclusion

This guide provided a comprehensive overview of deep learning, covering its core concepts, applications, models, and frameworks. To further your learning, consider our Deep Learning in Python Track or Deep Learning with Keras in R courses.
