This blog post walks through building a web-searching AI agent with LangChain and Llama 3.3, Meta's 70-billion-parameter large language model. The agent queries external knowledge sources such as ArXiv and Wikipedia so that its answers draw on retrieved material rather than on the model alone.

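This tutorial covers Llama 3.3, LangChain, the core components and workflow of the web-searching agent, and the environment setup and configuration needed to run it.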

This article is part of the Data Science Blogathon.

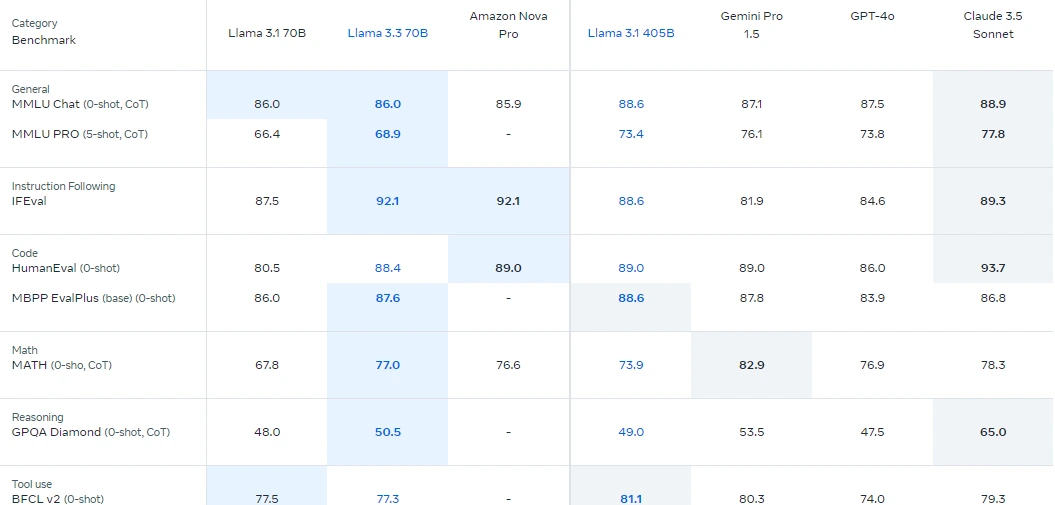

Understanding Llama 3.3

Llama 3.3 is a 70-billion-parameter, instruction-tuned LLM from Meta that is designed for text-based tasks. It improves on earlier releases (Llama 3.1 70B and Llama 3.2 90B) and is cost-effective to run, which makes it a compelling choice for an agent. In certain areas it even rivals larger models.

Introducing LangChain

LangChain is an open-source framework for developing LLM-powered applications. It simplifies LLM integration, allowing for the creation of sophisticated AI solutions.

LangChain Key Features:

Core Components of the Web-Searching Agent

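Our agent uses Llama 3.3 as the LLM, LangChain to connect the model to its tools, ArXiv and Wikipedia as data sources, and Streamlit for the chat interface.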

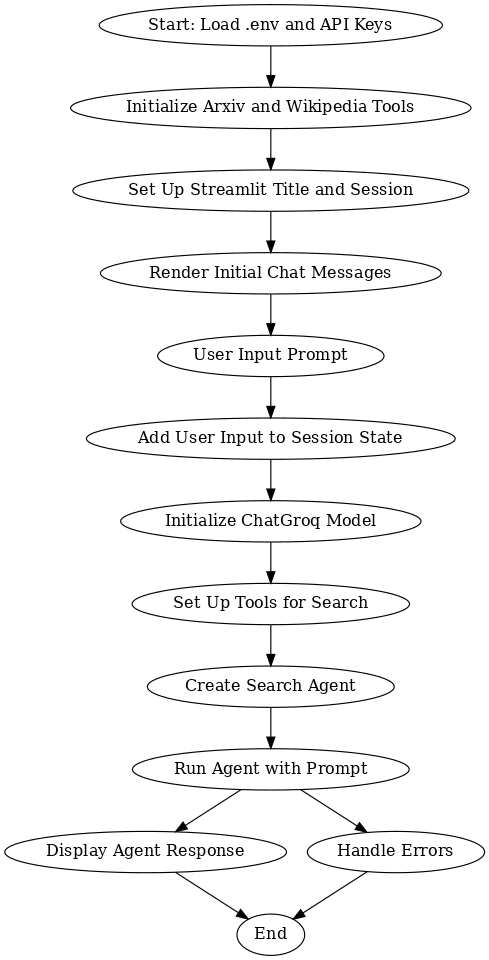

Workflow Diagram

This diagram illustrates the interaction between the user, the LLM, and the data sources (ArXiv, Wikipedia). It shows how user queries are processed, information is retrieved, and responses are generated. Error handling is also incorporated.
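
The flow described above can be sketched in plain Python. This is only an illustration under stated assumptions, not the article's implementation: the two search functions are stubs rather than real ArXiv or Wikipedia calls, and `choose_tool` is a hypothetical keyword rule standing in for the decision that the LLM makes in the real agent.

```python
from typing import Callable, Dict


# Stub data sources standing in for the real ArXiv and Wikipedia lookups.
def search_arxiv(query: str) -> str:
    return f"[arxiv] papers matching '{query}'"


def search_wikipedia(query: str) -> str:
    if not query.strip():
        raise ValueError("empty query")
    return f"[wikipedia] summary for '{query}'"


TOOLS: Dict[str, Callable[[str], str]] = {
    "arxiv": search_arxiv,
    "wikipedia": search_wikipedia,
}


def choose_tool(query: str) -> str:
    """Hypothetical routing rule; in the real agent the LLM makes this choice."""
    research_words = ("paper", "arxiv", "research")
    lowered = query.lower()
    return "arxiv" if any(word in lowered for word in research_words) else "wikipedia"


def answer(query: str) -> str:
    """Route the query, retrieve context, and build a reply with error handling."""
    tool_name = choose_tool(query)
    try:
        context = TOOLS[tool_name](query)
    except Exception as exc:  # report tool failures instead of crashing the chat
        return f"Sorry, the {tool_name} lookup failed: {exc}"
    return f"Answer based on {context}"


print(answer("recent research on attention mechanisms"))
print(answer("   "))
```

Keeping the tools in a dictionary means a new data source can be added by registering one more function, which mirrors the modular design the article describes.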

Environment Setup and Configuration

This section details setting up the development environment, installing dependencies, and configuring API keys. It includes code snippets for creating a virtual environment, installing packages, and setting up a .env file for secure API key management. The code examples demonstrate importing necessary libraries, loading environment variables, and configuring ArXiv and Wikipedia tools. The Streamlit app setup, including handling user input and displaying chat messages, is also covered. Finally, the code shows how to initialize the LLM, tools, and the search agent, and how to generate and display the assistant's response, including error handling. Example outputs are also provided.
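
As a rough sketch of the configuration step, the snippet below reads API keys from a `.env` file and then builds the ArXiv and Wikipedia tools. Several things here are assumptions rather than the article's code: `load_env_file` is a simplified standard-library stand-in for what a package such as `python-dotenv` would normally do, the key name `LLM_API_KEY` is hypothetical, and the result limits passed to the wrappers are arbitrary example values. `build_tools` relies on the `langchain-community`, `arxiv`, and `wikipedia` packages being installed, so its imports are deferred until it is called.

```python
import os
import tempfile
from pathlib import Path


def load_env_file(path):
    """Parse KEY=VALUE lines from a .env file into os.environ.

    Simplified stand-in for python-dotenv: skips blank lines and # comments,
    strips surrounding quotes, and never overrides variables that are already set.
    """
    loaded = {}
    for raw in Path(path).read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        key = key.strip()
        value = value.strip().strip("'\"")
        loaded[key] = value
        os.environ.setdefault(key, value)
    return loaded


def build_tools(top_k_results=1, doc_content_chars_max=500):
    """Create the ArXiv and Wikipedia tools (limits are example values)."""
    # Deferred imports: only needed when the tools are actually built.
    from langchain_community.tools import ArxivQueryRun, WikipediaQueryRun
    from langchain_community.utilities import ArxivAPIWrapper, WikipediaAPIWrapper

    arxiv = ArxivQueryRun(
        api_wrapper=ArxivAPIWrapper(
            top_k_results=top_k_results,
            doc_content_chars_max=doc_content_chars_max,
        )
    )
    wikipedia = WikipediaQueryRun(
        api_wrapper=WikipediaAPIWrapper(
            top_k_results=top_k_results,
            doc_content_chars_max=doc_content_chars_max,
        )
    )
    return [arxiv, wikipedia]


# Demo with a throwaway .env file; LLM_API_KEY is a hypothetical key name.
with tempfile.TemporaryDirectory() as tmp:
    env_path = Path(tmp) / ".env"
    env_path.write_text("# demo file\nLLM_API_KEY='demo-key'\n", encoding="utf-8")
    loaded = load_env_file(env_path)
print(loaded)
```

In a real project the `.env` file would sit next to the app and be excluded from version control, and the list returned by `build_tools()` would be handed to the agent together with the LLM.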

Conclusion

This project showcases the power of combining LLMs like Llama 3.3 with external knowledge sources using LangChain. The modular design allows for easy expansion and adaptation to various domains. Streamlit simplifies the creation of interactive user interfaces.
