The F-Beta Score: A Comprehensive Guide to Model Evaluation in Machine Learning

In machine learning and statistical modeling, accurately assessing model performance is crucial. Accuracy is a common metric, but it often falls short on imbalanced datasets because it does not capture the trade-off between precision and recall. The F-Beta Score is a more flexible evaluation metric that lets you prioritize either precision or recall depending on the task. This article explains the F-Beta Score, its calculation, its applications, and its implementation in Python.

What is the F-Beta Score?

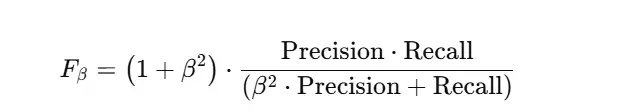

The F-Beta Score summarizes a model's output in a single number that combines precision and recall. The F1 Score is the harmonic mean of the two and weights them equally; the F-Beta Score generalizes it with a β parameter that sets how much weight recall receives relative to precision (β > 1 favors recall, β < 1 favors precision).
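To make the role of β concrete, here is a minimal sketch that evaluates the standard F-beta formula for a fixed precision and recall at three β values. The helper name `f_beta` and the precision/recall figures (0.9 and 0.5) are illustrative assumptions, not values from a real model.

```python
def f_beta(precision: float, recall: float, beta: float) -> float:
    """Weighted harmonic mean of precision and recall (standard F-beta formula)."""
    if precision == 0 and recall == 0:
        return 0.0  # convention chosen for this sketch: no true positives means a score of 0
    b2 = beta ** 2
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# Hypothetical model: very precise, but it misses half of the positives.
precision, recall = 0.9, 0.5

for beta in (0.5, 1.0, 2.0):
    print(f"beta={beta}: F-beta={f_beta(precision, recall, beta):.3f}")
# beta=0.5: F-beta=0.776
# beta=1.0: F-beta=0.643
# beta=2.0: F-beta=0.549
```

Because this hypothetical model is stronger on precision than on recall, its score falls as β grows and recall counts for more.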

When to Use the F-Beta Score

The F-Beta Score is particularly useful in scenarios demanding a careful balance or prioritization of precision and recall. Here are some key situations:

Imbalanced Datasets: In datasets with a skewed class distribution (e.g., fraud detection, medical diagnosis), accuracy can be misleading. The F-Beta Score allows you to adjust β to emphasize recall (fewer missed positives) or precision (fewer false positives), aligning with the cost associated with each type of error.

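Domain-Specific Prioritization: Different applications have varying tolerances for different types of errors. For example, a disease-screening test usually favors recall, because a missed case is costly, while a spam filter usually favors precision, because a legitimate message sent to the spam folder is costly.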

Optimizing the Precision-Recall Trade-off: The F-Beta Score provides a single metric to guide the optimization process, allowing for targeted improvements in either precision or recall.

Cost-Sensitive Tasks: When the costs of false positives and false negatives differ significantly, the F-Beta Score helps choose the optimal balance.
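The imbalanced-data point can be illustrated with a small sketch. The data below is hypothetical (95 negatives, 5 positives) and the "model" is hard-coded to find only one of the five positives; the example only assumes scikit-learn's `accuracy_score` and `fbeta_score`.

```python
import numpy as np
from sklearn.metrics import accuracy_score, fbeta_score

# Hypothetical imbalanced labels: 95 negatives followed by 5 positives.
y_true = np.array([0] * 95 + [1] * 5)

# Hard-coded predictions: one positive is caught, four are missed, no false alarms.
y_pred = np.zeros(100, dtype=int)
y_pred[95] = 1

acc = accuracy_score(y_true, y_pred)
f2 = fbeta_score(y_true, y_pred, beta=2)      # recall-weighted
f05 = fbeta_score(y_true, y_pred, beta=0.5)   # precision-weighted

print(f"Accuracy: {acc:.2f}")   # Accuracy: 0.96
print(f"F2:       {f2:.3f}")    # F2:       0.238
print(f"F0.5:     {f05:.3f}")   # F0.5:     0.556
```

Accuracy looks strong because the negatives dominate, while the recall-weighted F2 exposes the four missed positives. Precision is perfect here (no false positives), so F0.5 is more forgiving; which of the two is the right lens depends on which error is more expensive.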

Calculating the F-Beta Score

The F-Beta Score is calculated using the precision and recall derived from a confusion matrix:

| | Predicted Positive | Predicted Negative |
|---|---|---|
| Actual Positive | True Positive (TP) | False Negative (FN) |
| Actual Negative | False Positive (FP) | True Negative (TN) |
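The counts in the table give precision and recall, which then combine into the score. These are the standard definitions, written out here because the text refers to them without stating them:

$$
\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN}
$$

$$
F_\beta = (1 + \beta^2)\,\frac{\text{Precision} \cdot \text{Recall}}{\beta^2 \cdot \text{Precision} + \text{Recall}} = \frac{(1 + \beta^2)\,TP}{(1 + \beta^2)\,TP + \beta^2\,FN + FP}
$$

Setting β = 1 recovers the F1 Score. In the count form, β² multiplies the false negatives, which is why a larger β penalizes missed positives more heavily. True negatives do not appear in the formula at all.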

Practical Applications of the F-Beta Score

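The F-Beta Score is applied in domains where false positives and false negatives carry different costs, such as the fraud detection and medical diagnosis settings mentioned earlier.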
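One common way to put the score to work is choosing a decision threshold. The sketch below is a hedged illustration: the labels, predicted probabilities, candidate thresholds, and the helper `best_threshold` are all hypothetical, and in practice the threshold would be selected on a validation set rather than on the data used for reporting.

```python
import numpy as np
from sklearn.metrics import fbeta_score

# Hypothetical true labels and predicted probabilities from some classifier.
y_true = np.array([0, 0, 0, 0, 1, 0, 1, 0, 1, 1])
y_prob = np.array([0.05, 0.10, 0.20, 0.30, 0.35, 0.45, 0.55, 0.60, 0.80, 0.90])


def best_threshold(y_true, y_prob, beta, thresholds):
    """Return (threshold, score) with the highest F-beta among the candidates."""
    scores = [
        fbeta_score(y_true, (y_prob >= t).astype(int), beta=beta)
        for t in thresholds
    ]
    i = int(np.argmax(scores))
    return thresholds[i], float(scores[i])


thresholds = [0.25, 0.50, 0.75]  # illustrative candidates only

for beta in (0.5, 1.0, 2.0):
    t, score = best_threshold(y_true, y_prob, beta, thresholds)
    print(f"beta={beta}: best threshold={t}, F-beta={score:.3f}")
# beta=0.5: best threshold=0.75, F-beta=0.833
# beta=1.0: best threshold=0.5, F-beta=0.750
# beta=2.0: best threshold=0.25, F-beta=0.870
```

On this toy data, a recall-heavy β pushes the preferred threshold down (more positives flagged), and a precision-heavy β pushes it up.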

Python Implementation

The scikit-learn library provides a straightforward way to calculate the F-Beta Score:

    from sklearn.metrics import fbeta_score, precision_score, recall_score, confusion_matrix
    import numpy as np

    # Example data: true labels and model predictions (1 = positive class)
    y_true = np.array([1, 0, 1, 1, 0, 1, 0, 0, 1, 0])
    y_pred = np.array([1, 0, 1, 0, 0, 1, 0, 1, 1, 0])

    # Calculate scores
    precision = precision_score(y_true, y_pred)
    recall = recall_score(y_true, y_pred)
    f1 = fbeta_score(y_true, y_pred, beta=1)      # equal weight
    f2 = fbeta_score(y_true, y_pred, beta=2)      # favors recall
    f05 = fbeta_score(y_true, y_pred, beta=0.5)   # favors precision

    print(f"Precision: {precision:.2f}")
    print(f"Recall: {recall:.2f}")
    print(f"F1 Score: {f1:.2f}")
    print(f"F2 Score: {f2:.2f}")
    print(f"F0.5 Score: {f05:.2f}")

    # Confusion matrix. scikit-learn orders rows and columns by label value,
    # so for 0/1 labels the layout is [[TN, FP], [FN, TP]].
    conf_matrix = confusion_matrix(y_true, y_pred)
    print("\nConfusion Matrix:")
    print(conf_matrix)

Conclusion

The F-Beta Score is a powerful tool for evaluating machine learning models, particularly when dealing with imbalanced datasets or situations where the cost of different types of errors varies. Its flexibility in weighting precision and recall makes it adaptable to a wide range of applications. By choosing β to match the relative cost of false negatives and false positives, you get an evaluation that reflects what actually matters in your application.

Frequently Asked Questions

Q1: What is the F-Beta Score used for? A1: To evaluate model performance by balancing precision and recall based on application needs.

Q2: How does β affect the F-Beta Score? A2: β > 1 weights recall more heavily; β < 1 weights precision more heavily; β = 1 weights them equally.

Q3: Is the F-Beta Score suitable for imbalanced datasets? A3: Yes. Unlike accuracy, it does not count true negatives, so a large negative majority class cannot inflate the score.

Q4: How is the F-Beta Score different from the F1 Score? A4: The F1 Score is a special case of the F-Beta Score with β = 1.

Q5: Can I calculate the F-Beta Score without a library? A5: Yes, but libraries like scikit-learn simplify the process.
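As a rough sketch of the library-free route, the function below counts TP, FP, and FN directly and applies the count form of the standard formula, F_β = (1 + β²)·TP / ((1 + β²)·TP + β²·FN + FP). The name `fbeta_from_labels` is hypothetical, the function assumes binary labels with 1 as the positive class, and returning 0.0 when there are no positives at all is a choice made for this sketch.

```python
def fbeta_from_labels(y_true, y_pred, beta=1.0):
    """F-beta for binary labels (1 = positive), computed from raw counts."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    b2 = beta ** 2
    denom = (1 + b2) * tp + b2 * fn + fp
    return (1 + b2) * tp / denom if denom else 0.0


# Same labels as the scikit-learn example in this article.
y_true = [1, 0, 1, 1, 0, 1, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 0, 1, 0, 1, 1, 0]

for beta in (0.5, 1, 2):
    print(f"beta={beta}: {fbeta_from_labels(y_true, y_pred, beta):.2f}")
# beta=0.5: 0.80
# beta=1: 0.80
# beta=2: 0.80
```

All three scores coincide because this example has TP = 4, FP = 1, FN = 1, so precision and recall are both 0.8; β only changes the result when precision and recall differ.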
