Containers Vs Virtual Machines: A Detailed Comparison

Containers and virtual machines are both virtualization technologies that can be used to deploy and manage applications. However, they have different strengths and weaknesses, and the best choice for a particular application depends on a number of factors.

Containers are lightweight and efficient, which makes them ideal for deploying microservices-based applications. They are also portable, so they can easily be moved from one environment to another. However, containers provide less isolation than virtual machines because they share the host's kernel, and running many of them at scale usually requires extra tooling such as an orchestrator.

Virtual machines are more heavyweight than containers, but they provide a higher level of isolation and security. They are also easier to manage with traditional tooling, since they are created and administered through a hypervisor and its management tools. However, virtual machines are more resource-intensive than containers, and they can be less portable.

In this guide, we will discuss the key differences between containers and virtual machines in more detail. We will also provide some guidance on when to use each technology.


What is Virtualization?

Every tool or platform is created to solve a particular problem, so you first have to understand the problem and the solution that virtualization provides.

Virtualization is the process of creating a virtual version of a hardware resource, such as a server, storage device, network, or even an entire operating system.

Before virtualization, you had to buy physical hardware, including drives, RAM, a CPU, a GPU, and so on, for every operating system you wanted to install.

You then had to install the operating system on that hardware, harden it according to your own requirements or industry standards, and hand it over to the development team. This process consumed a lot of time and resources.

Virtualization was created to address this issue. It lets you use the physical resources of a single machine to create multiple machines, called virtual machines (VMs).

This allows multiple instances of the resource to run on the same physical hardware, which can improve efficiency and save costs.

The following image will give you a good idea of installing operating systems with and without virtualization.

Benefits of Virtualization

Virtualization offers many benefits and solves a number of problems, including:

- Underutilized hardware: Virtualization allows multiple VMs to share the resources of a single physical machine. This can help to improve utilization and reduce costs.

- Hardware consolidation: Virtualization can be used to consolidate multiple physical servers into VMs running on a single physical host. This can free up space in the data center and reduce power consumption.

- Flexibility: Virtualization makes it easy to create and deploy new VMs. This can help to improve IT agility and responsiveness.

- Disaster recovery: Virtualization can be used to create disaster recovery copies of VMs. This can help to protect data and applications in the event of a disaster.

- Security: Virtualization can help to improve security by isolating VMs from each other. This can help to prevent malware from spreading from one VM to another.

- Scalability: Virtualization makes it easy to scale your IT infrastructure up or down as needed. This can help you to save costs and improve efficiency.

- Testing and development: Virtualization can be used to create isolated environments for testing and development. This can help to improve the quality of your applications and reduce the risk of errors.

- Training: Virtualization can be used to create training environments for employees. This can help to improve skills and knowledge and reduce the time it takes to onboard new employees.

- Infrastructure & Application automation.

- Faster deployment.

- Cost effective.

What is a Hypervisor in Virtualization?

A hypervisor is software that is installed either on top of your operating system or directly on the hardware. It lets you create multiple virtual machines by abstracting the underlying physical resources and allocating them to each virtual machine.
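To make the idea of allocation concrete, here is a toy Python model of what a hypervisor does when you create VMs: it tracks the host's physical CPUs and memory and hands a slice of them to each VM. The `Host` and `VM` classes are hypothetical illustrations, not any hypervisor's API. The sketch also refuses to overcommit, whereas real hypervisors such as KVM or ESXi can overcommit CPU and memory.

```python
from dataclasses import dataclass, field


@dataclass
class VM:
    name: str
    vcpus: int
    memory_mb: int


@dataclass
class Host:
    """Toy model of a physical host whose CPUs and RAM a hypervisor hands out to VMs."""
    cpus: int
    memory_mb: int
    vms: list = field(default_factory=list)

    def free_cpus(self) -> int:
        return self.cpus - sum(vm.vcpus for vm in self.vms)

    def free_memory_mb(self) -> int:
        return self.memory_mb - sum(vm.memory_mb for vm in self.vms)

    def create_vm(self, vm: VM) -> bool:
        # Reject the VM if it does not fit in the remaining capacity
        # (no overcommit in this simplified model).
        if vm.vcpus > self.free_cpus() or vm.memory_mb > self.free_memory_mb():
            return False
        self.vms.append(vm)
        return True


host = Host(cpus=8, memory_mb=16384)
print(host.create_vm(VM("web", vcpus=2, memory_mb=4096)))  # True
print(host.create_vm(VM("db", vcpus=4, memory_mb=8192)))   # True
print(host.create_vm(VM("big", vcpus=4, memory_mb=2048)))  # False: only 2 vCPUs left
print(host.free_cpus(), host.free_memory_mb())             # 2 4096
```

The third VM is rejected even though enough memory is left, because the first two VMs already hold 6 of the 8 physical CPUs.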

There are two types of hypervisor:

- Type 1 or Bare-Metal Hypervisor,

- Type 2 Hypervisor.

Type 1 or Bare-Metal Hypervisors

This type of hypervisor is installed directly on the physical hardware, so there is no need to install an operating system first.

The hypervisor interacts directly with the hardware and allocates resources to the virtual machines you create. This type of hypervisor is most commonly used in enterprise data centers.

Some of the popular type 1 hypervisors are

- VMware vSphere / ESXi,

- Microsoft Hyper-V,

- Kernel Based Virtual Machine (KVM),

- Red Hat Enterprise Virtualization (RHEV),

- Citrix XenServer.

Type 2 Hypervisors

This type of hypervisor is used mostly on your personal computers to create virtual machines. Type 2 hypervisors come as software that needs to be installed on top of an operating system.

Type 2 hypervisors are generally slower than Type 1 hypervisors, because they have to go through the host operating system to reach the physical resources, whereas Type 1 hypervisors interact with the physical resources directly.

Some of the popular type 2 hypervisors are

- Oracle VirtualBox,

- VMware Workstation,

- Parallels Desktop.

Which Hypervisor to Choose?

There are many factors to be considered before choosing the right hypervisor solution.

FREEWARE

If you are looking for a free hypervisor to quickly spin up some virtual machines for testing, Oracle VirtualBox and VMware Workstation are good choices. VirtualBox is available for Windows, Linux, and macOS; VMware Workstation runs on Windows and Linux, and VMware's equivalent on macOS is VMware Fusion.

If you are using Linux as your base operating system, KVM is the preferred choice. Because KVM is built into the Linux kernel, it generally outperforms VirtualBox, VMware Workstation, and other Type 2 hypervisors.
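Before choosing KVM, you can check whether a Linux host can actually use it. This is a minimal sketch that assumes an x86 machine: KVM needs the CPU's hardware virtualization extension (the `vmx` flag for Intel VT-x or `svm` for AMD-V in `/proc/cpuinfo`) and the `/dev/kvm` device created by the KVM kernel module. The function names are hypothetical, and on ARM hosts the CPU flags differ, so this check does not apply there.

```python
import os
from typing import Optional


def cpu_virt_extension(cpuinfo_text: str) -> Optional[str]:
    """Return 'vmx' (Intel VT-x) or 'svm' (AMD-V) if a 'flags' line lists it, else None."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            flags = line.split(":", 1)[1].split()
            if "vmx" in flags:
                return "vmx"
            if "svm" in flags:
                return "svm"
    return None


def kvm_ready(cpuinfo_path: str = "/proc/cpuinfo", kvm_device: str = "/dev/kvm") -> bool:
    """True if the CPU advertises hardware virtualization and the KVM device node exists."""
    try:
        with open(cpuinfo_path) as f:
            text = f.read()
    except OSError:
        return False  # not Linux, or /proc is unavailable
    return cpu_virt_extension(text) is not None and os.path.exists(kvm_device)


print("KVM usable:", kvm_ready())
```

The same CPU check is often done from a shell with `grep -E 'vmx|svm' /proc/cpuinfo`. Inside a VM without nested virtualization enabled, the flags are usually hidden and the check reports False.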

PRODUCTION WORKLOAD

In most cases, companies choose Type 1 hypervisors for production deployments. You can purchase Type 1 hypervisor licenses that also include vendor support.

If you run into issues, the vendor's support team will help you fix them. This is not the case with freeware hypervisors.

With freeware hypervisors, you have to rely on community support or product documentation to fix issues.

What is a Virtual Machine?

A virtual machine (VM) is a software-based computer that runs on top of a physical machine. It has its own operating system, memory, storage, and network resources.

The physical machine is called the host machine, and the virtual machine is called the guest machine.

For example, say you buy a server or a laptop and install your favorite operating system. You can then install a virtualization tool (e.g. Oracle VirtualBox) and create one or more guest operating systems (VMs) without buying dedicated hardware for each one.

The following image shows my current virtualization setup. I am running my favorite Linux distribution, Pop!_OS, as my base operating system, with VirtualBox as the virtualization solution and Fedora XFCE running as a virtual machine.

Here,

- Pop!_OS is the Host machine,

- Fedora XFCE is the Guest machine (i.e. Virtual Machine),

- Oracle VirtualBox is the Hypervisor.

Role of Virtualization in Cloud Computing

Virtualization plays a crucial role in cloud computing. The resources you spin up on a cloud platform are created virtually in the cloud provider's data center. For example, let's look at AWS, one of the most popular cloud platforms on the market.

Most current EC2 instance types run on the AWS Nitro System, whose hypervisor is based on KVM (older instance generations used Xen). When you launch an EC2 instance, AWS creates a virtual machine on that hypervisor. In the cloud, automation plays a major role alongside virtualization.

In traditional data centers, virtual machines are often created manually, but in the cloud everything happens in a minute or so with the help of automation.

For end users working with IaaS, PaaS, or SaaS environments, the main advantage of virtualization on a cloud platform is that we do not need to worry about physical hardware or about setting up Type 1 or Type 2 hypervisors. All we care about is spinning up an EC2 instance or any other service quickly.
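As an illustration of that automation, the sketch below launches an EC2 instance with boto3, the AWS SDK for Python, through its `run_instances` call. The AMI ID, region, instance type, and Name tag are placeholder assumptions. Calling `launch_instance` requires boto3 and valid AWS credentials and may incur charges, so only the parameter builder runs without an AWS account.

```python
def ec2_launch_params(ami_id: str, instance_type: str = "t3.micro", name: str = "demo-vm") -> dict:
    """Build keyword arguments for EC2 RunInstances: one instance with a Name tag."""
    return {
        "ImageId": ami_id,
        "InstanceType": instance_type,
        "MinCount": 1,
        "MaxCount": 1,
        "TagSpecifications": [
            {"ResourceType": "instance", "Tags": [{"Key": "Name", "Value": name}]}
        ],
    }


def launch_instance(ami_id: str, region: str = "us-east-1", instance_type: str = "t3.micro") -> str:
    """Launch the VM and return its instance ID (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the parameter builder works without boto3 installed

    ec2 = boto3.client("ec2", region_name=region)
    response = ec2.run_instances(**ec2_launch_params(ami_id, instance_type))
    return response["Instances"][0]["InstanceId"]


params = ec2_launch_params("ami-0123456789abcdef0")  # hypothetical AMI ID
print(params["InstanceType"], params["MinCount"], params["MaxCount"])
```

In practice the same request is usually expressed declaratively with an infrastructure-as-code tool, but the underlying API call is the same idea: describe the VM, and the provider's automation creates it on the virtualized infrastructure.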

Virtualization in the cloud is not limited to servers. Storage, networks, CPUs, GPUs, desktops, and more are also virtualized. As you know, the cloud offers three main service types.

- Infrastructure as a Service (IaaS) - You spin up a virtual machine (EC2), set up storage (S3), configure the network (VPC), and install applications on the EC2 instance. EC2, S3, and VPC are all virtualized services.

- Software as a Service (SaaS) - A good example of a SaaS product on AWS is the Snowflake data warehouse, which is also available on Azure and GCP. In the SaaS model, the product is completely abstracted, so you cannot tell what type of virtualization is applied to the underlying infrastructure.

- Platform as a Service (PaaS) - In the PaaS model, you have flexibility in choosing the workload type, and the underlying resources are created on a virtualized platform. A good example of a PaaS product on AWS is Amazon EMR (formerly Elastic MapReduce).

What are Containers?

Containers are a simpler, lighter-weight way to build and deploy modern applications. They work well with whatever DevOps stack you choose, and they are most commonly used to build applications with a microservices architecture.

Unlike virtual machines, containers do not include a full operating system. A container is packaged with only the libraries and services the application needs to run.

Containers use the host operating system's kernel to access hardware resources, and they are isolated from one another through the kernel's namespaces and cgroups.

Just as virtualization needs a hypervisor, working with containers requires a container management tool such as Docker or Podman.

The most popular container management solution is Docker, which can run both test applications and production workloads.

So how do you set up containers?

- Install an operating system on physical hardware or on a virtual machine. Linux is the preferred choice.

- Install a container management tool on top of the operating system. In this example, assume it is Docker running on Linux.

- Build your own container image or pull one from a registry.

- Launch the container.

The last two steps can be done either manually or through DevOps CI/CD pipelines and automation tools such as Ansible or shell scripts.

Below is a sample image where I pulled the latest Python image from Docker Hub and launched a container from it. I set up the Python environment I needed with just two commands, which was faster than installing Python directly on my main machine.

So far, I have given you a simplified explanation of containers and container management tools. Now let's dive into two technical aspects of containerization: container runtimes, and namespaces and cgroups.
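Assuming the two commands were `docker pull` followed by `docker run` (the screenshot is not reproduced here, so the exact flags are an assumption), the sketch below builds those commands and, if you call `run_all`, executes them through the Docker CLI using Python's `subprocess` module. `-it` attaches an interactive terminal, so the official `python` image drops you into a Python prompt, and `--rm` removes the container when you exit.

```python
import shutil
import subprocess


def docker_commands(image: str = "python", tag: str = "latest") -> list:
    """Return the CLI invocations: pull the image, then start an interactive container."""
    ref = f"{image}:{tag}"
    return [
        ["docker", "pull", ref],
        # -it: interactive terminal; --rm: delete the container when it exits
        ["docker", "run", "-it", "--rm", ref],
    ]


def run_all(commands: list) -> None:
    """Run each command in order; check=True raises on the first non-zero exit code."""
    if shutil.which("docker") is None:
        raise RuntimeError("docker CLI not found on PATH")
    for cmd in commands:
        subprocess.run(cmd, check=True)


# Print the commands; call run_all(docker_commands()) to actually execute them.
for cmd in docker_commands():
    print(" ".join(cmd))
```

Pinning a specific tag (for example `docker_commands("python", "3.12-slim")`) instead of `latest` makes the environment reproducible across machines.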

Container Runtimes

Container runtimes are responsible for spinning up your containers, allocating required resources, setting up namespaces, unpacking the image, managing the image, etc.

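There are high-level and low-level container runtimes. Low-level runtimes talk to the kernel to set up resource allocation and process isolation, while high-level runtimes handle tasks such as pulling and managing images and then call a low-level runtime to start the container.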

In 2015, industry leaders founded the Open Container Initiative (OCI) with the primary goal of creating open standards for container formats and runtimes.

runc, which Docker donated to the OCI, is the reference implementation of the OCI runtime specification, and many container tools on the market run containers through runc or another OCI-compliant runtime. This shared standard is why container solutions are often cross-compatible: for example, Podman can run images built with Docker.

- Low-Level Container Runtime - runc, crun, runhcs

- High-Level Container Runtime - containerd, CRI-O, and engines such as Docker and Podman
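To see what a low-level runtime works with, here is a Python sketch that builds a trimmed-down OCI runtime configuration (`config.json`), the file runc reads from a bundle directory next to the unpacked root filesystem. The field names follow the OCI runtime specification, but the chosen values are assumptions, and a real file generated with `runc spec` contains more settings (mounts, capabilities, hostname), so treat this as illustrative rather than as a config guaranteed to run.

```python
import json


def oci_config(args: list, memory_limit_bytes: int, cpu_quota_us: int,
               cpu_period_us: int = 100000) -> dict:
    """Build a minimal OCI runtime-spec config of the kind runc consumes."""
    return {
        "ociVersion": "1.0.2",
        "process": {
            "terminal": False,
            "user": {"uid": 0, "gid": 0},
            "args": args,  # the command the container runs
            "cwd": "/",
        },
        # Unpacked image filesystem, relative to the bundle directory
        "root": {"path": "rootfs", "readonly": True},
        "linux": {
            # Each entry asks the runtime to create a new namespace of that type
            "namespaces": [{"type": t} for t in ("pid", "network", "ipc", "uts", "mount")],
            # The runtime translates these into cgroup limits for the container
            "resources": {
                "memory": {"limit": memory_limit_bytes},
                "cpu": {"quota": cpu_quota_us, "period": cpu_period_us},
            },
        },
    }


config = oci_config(["python3", "--version"],
                    memory_limit_bytes=256 * 1024 * 1024, cpu_quota_us=50000)
print(json.dumps(config, indent=2))
```

The `namespaces` and `resources` sections are where the runtime's two kernel building blocks, namespaces and cgroups, show up in the container's configuration.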

Namespace and CGroups

Namespaces and cgroups are the Linux kernel features that make containerization possible.

Namespace

Namespaces are a kernel feature that isolates processes from one another. The Linux kernel offers several types of namespace. For example, a user namespace has its own set of user and group IDs that are assigned to the processes inside it.

Another example is the PID (process ID) namespace, which assigns process IDs to the processes inside it. PIDs in two different PID namespaces are independent of each other, so the first process in each container can have PID 1.

Together, these features let each container run in its own namespaces, which prevents conflicts between processes in different containers.
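You can inspect namespaces directly: each entry in `/proc/<pid>/ns` is a symbolic link whose target, such as `pid:[4026531836]`, identifies the namespace the process belongs to. The Python sketch below, built around a hypothetical `namespace_ids` helper, prints them for the current process. If you run it on the host and again inside a container, the identifiers for types such as `pid`, `mnt`, and `net` should differ by default, showing that the container has its own namespaces. On systems without `/proc` it returns an empty dict.

```python
import os


def namespace_ids(pid: str = "self") -> dict:
    """Map each namespace type of a process to its identifier, e.g. 'pid:[4026531836]'."""
    ns_dir = f"/proc/{pid}/ns"
    if not os.path.isdir(ns_dir):
        return {}  # not Linux, or no such process
    ids = {}
    for name in sorted(os.listdir(ns_dir)):
        try:
            ids[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # some entries can be unreadable without privileges
    return ids


for ns_type, ident in namespace_ids().items():
    print(f"{ns_type:20} {ident}")
```

Two processes share a namespace of a given type exactly when their link targets match, which is how tools decide whether, for example, a container was started with the host's network namespace.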

CGroups

A cgroup (control group) is a kernel feature that lets you limit the amount of resources (RAM, CPU, disk I/O, network) that a specific process or group of processes can use.

When you create a container, the low-level runtime configures the kernel's cgroups so that the container is allocated only the specified amount of resources and no more.
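On a host using cgroup v2, these limits are plain text files. For example, `docker run --cpus=0.5 --memory=256m` typically results in `cpu.max` containing `50000 100000` (a 50 ms quota per 100 ms period, i.e. half a CPU) and `memory.max` containing `268435456`. The sketch below parses those two files; the helper names are hypothetical, and on cgroup v1 systems the files have different names, so `current_limits` simply skips them. Run inside a container with Docker's default cgroup v2 setup, `current_limits()` should report the container's own limits.

```python
from typing import Optional


def parse_cpu_max(text: str) -> Optional[float]:
    """Turn a cgroup v2 cpu.max value ("<quota> <period>") into a number of CPUs.

    Returns None when the quota is "max", meaning no CPU limit is set.
    """
    quota, period = text.split()
    if quota == "max":
        return None
    return int(quota) / int(period)


def parse_memory_max(text: str) -> Optional[int]:
    """Turn a cgroup v2 memory.max value into bytes; None means unlimited ("max")."""
    value = text.strip()
    return None if value == "max" else int(value)


def current_limits(cgroup_dir: str = "/sys/fs/cgroup") -> dict:
    """Read cpu.max and memory.max from cgroup_dir, skipping files that do not exist."""
    limits = {}
    for filename, parser in (("cpu.max", parse_cpu_max), ("memory.max", parse_memory_max)):
        try:
            with open(f"{cgroup_dir}/{filename}") as f:
                limits[filename] = parser(f.read())
        except OSError:
            continue  # e.g. cgroup v1 host, non-Linux system, or the host's root cgroup
    return limits


print(parse_cpu_max("50000 100000"))    # 0.5
print(parse_memory_max("268435456\n"))  # 268435456
print(current_limits())
```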

Containers in DevOps

Most software development approaches now focus on Agile and DevOps for incremental and quicker deployment of applications.

DevOps is a set of tools, frameworks, and best practices to help deliver the product in incremental chunks. You can use any set of tools to form your DevOps stack to build and deploy your application.

Microservices is another popular approach in software development, where you build independent services that communicate with one another over the network through APIs. Containers are central to microservices implementations: each service is typically deployed in its own container.

For example, say you are building a front-end application and are currently working on its authentication service, which lets users create accounts or log in with existing ones.

In this case, you can deploy the authentication service to a Docker container that communicates with a backend database running in a separate container.

A typical flow for deploying an application with DevOps looks like this:

- Plan a sprint to create or change an application.

- Create or modify code on your local machine.

- Push the changes to a central Git repository (GitHub, Bitbucket, GitLab, etc.).

- Run a CI/CD pipeline that builds the application and generates the necessary artifacts (.jar, .whl, etc.).

- Pull the container base image from a central registry (for example, Docker Hub or GitHub Container Registry).

- Build a new container image from the base image that includes the generated artifact.

- Deploy the container to a lower environment for testing.

- Deploy the container to production.
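Most CI/CD tools run these stages in order and stop at the first failure, so a broken build never reaches production. The sketch below models only that fail-fast behavior; the stage names and the lambdas standing in for real build and deploy steps are hypothetical, not the API of any particular CI/CD product.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[Tuple[str, str]]:
    """Run stages in order and stop at the first failure (fail-fast)."""
    report = []
    for name, step in stages:
        ok = step()
        report.append((name, "passed" if ok else "failed"))
        if not ok:
            break  # later stages, such as the production deploy, never run
    return report


# Hypothetical stand-ins for real build, image, and deploy steps.
stages = [
    ("build artifact", lambda: True),
    ("build image from base", lambda: True),
    ("deploy to test environment", lambda: False),  # simulate failing tests
    ("deploy to production", lambda: True),
]
for name, status in run_pipeline(stages):
    print(f"{name}: {status}")
```

Because the test deployment fails, the report stops after three stages and the production deployment is never attempted.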

What is Container Orchestration?

Container solutions like Docker, Podman, and CRI-O offer tools to pull images, launch one or more containers, and start and stop containers.

This works well when a single user manages containers on one host. In the real world, however, container deployments involve many more concerns:

- Container availability.

- Scalability - Increasing or decreasing container count.

- Scalability - Increasing or decreasing container resources.

- Load balancing.

- Redundancy.

- Logging.

We cannot handle all of these manually when working with real-life applications, so we need a tool that takes care of them automatically.

Orchestration tools communicate with the underlying container solutions, such as Docker, and manage the life cycle of each container.

The core functions of an orchestration tool include:

- Monitor the health of a running container.

- Launch a new container when a container becomes unavailable.

- Scale up or down the container count based on the incoming workload.

- Act as a load balancer and distribute traffic across containers.

- Additional security.

- Automated rollouts and rollbacks during deployments.

- Communication between different containers in the network.
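At the heart of most orchestrators is a reconciliation (control) loop: compare the desired state with what is actually running, then act to close the gap. Kubernetes controllers work this way, for instance. The toy Python model below shows the idea for two of the functions listed above, replacing unhealthy containers and scaling to a desired count. The `Cluster` class and the `web-N` names are hypothetical; a real orchestrator would start containers through a container runtime and run this loop continuously.

```python
import itertools
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Cluster:
    """Toy stand-in for the containers an orchestrator manages for one service."""
    running: List[str] = field(default_factory=list)
    healthy: Dict[str, bool] = field(default_factory=dict)
    _ids: itertools.count = field(default_factory=lambda: itertools.count(1))

    def start(self) -> str:
        name = f"web-{next(self._ids)}"
        self.running.append(name)
        self.healthy[name] = True
        return name

    def stop(self, name: str) -> None:
        self.running.remove(name)
        self.healthy.pop(name, None)


def reconcile(cluster: Cluster, desired: int) -> None:
    """One pass of a control loop: move the actual state toward the desired state."""
    # Remove containers that failed their health check...
    for name in [n for n in cluster.running if not cluster.healthy[n]]:
        cluster.stop(name)
    # ...then start or stop containers until the replica count matches.
    while len(cluster.running) < desired:
        cluster.start()
    while len(cluster.running) > desired:
        cluster.stop(cluster.running[-1])


cluster = Cluster()
reconcile(cluster, desired=3)     # starts web-1, web-2, web-3
cluster.healthy["web-2"] = False  # simulate a crashed container
reconcile(cluster, desired=3)     # stops web-2 and starts web-4 in its place
reconcile(cluster, desired=2)     # scales down by stopping the newest, web-4
print(cluster.running)            # ['web-1', 'web-3']
```

Because each pass only looks at the current state and the target, running the loop repeatedly is safe: once the cluster matches the desired state, a pass changes nothing.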

There are some popular container orchestration engines like Docker Swarm, Kubernetes, and Apache Mesos.

Among these three, Kubernetes is the most popular and advanced solution, and enterprises use it to deploy highly scalable and highly available applications.

Most cloud providers offer managed Kubernetes as a PaaS solution (for example, Amazon EKS, Azure Kubernetes Service, and Google Kubernetes Engine), tightly integrated with their native DevOps stacks for seamless application prototyping, development, and deployment.

Containers Vs Virtual Machines

Here's a comparison between containers and virtual machines:

| Aspect | Containers | Virtual Machines |
|---|---|---|
| Isolation | Lightweight isolation from the host and other containers. | Strong isolation; each VM behaves like a separate physical machine. |
| Resource Efficiency | Shares the host OS kernel; uses less memory and disk space. | Requires a separate OS for each VM; consumes more resources. |
| Startup Speed | Very fast startup times. | Slower startup because the guest OS has to boot. |
| Performance | Near-native performance with lower overhead. | Slightly higher overhead, which may affect performance. |
| Portability | Highly portable, because the image bundles the application with its dependencies (a compatible host kernel is still required). | Less portable, because images are larger and include a full OS. |
| Scalability | Easy to scale, because containers share host resources. | Scaling can be resource-intensive because each VM runs its own OS. |
| Isolation Type | Process-level isolation using namespaces and cgroups. | Full machine-level isolation. |
| Use Cases | Microservices, DevOps, and cloud-native apps. | Legacy applications and workloads that need a different OS. |
| Management | Managed with orchestration tools such as Kubernetes. | Managed with virtualization management tools. |
| Density | Many containers can run on a single host. | Fewer VMs per host because each consumes more resources. |
| Security | Weaker isolation because containers share the host kernel, which poses potential security challenges. | Strong isolation and better security for each VM. |

Keep in mind that the choice between containers and virtual machines depends on the specific use case and requirements of your project.

Frequently Asked Questions: Containers and Virtual Machines

Q: What are containers and virtual machines?

A: Containers are lightweight, standalone, executable software packages that bundle an application with its dependencies. Virtual machines (VMs) are software-based emulations of physical computers that run their own operating systems and applications.

Q: How do containers differ from virtual machines?

A: Containers share the host OS kernel and resources, which results in lower overhead and faster performance. Virtual machines run separate OS instances and have stronger isolation, which can lead to higher resource consumption and slower performance.

Q: What's the key benefit of containers?

A: Containers offer excellent portability and consistency across environments, which makes deploying and scaling applications easier. They are ideal for microservices architectures and cloud-native applications.

Q: When should I use virtual machines?

A: Virtual machines suit scenarios that require stronger isolation, such as legacy applications, workloads that need a different operating system, and cases where security is a top concern. They provide a more traditional approach to virtualization.

Q: Which is more resource-efficient, containers or virtual machines?

A: Containers are more resource-efficient because they share the host's kernel and resources. This enables higher density and better hardware utilization than virtual machines, which each require a separate OS instance.

Q: Are containers or virtual machines more secure?

A: Virtual machines offer stronger security through full isolation, which helps keep a vulnerability in one VM from affecting others. Containers share the host kernel, which can create security risks if they are not managed properly.

Q: What tools are used to manage containers and virtual machines?

A: Containers are often managed with orchestration tools such as Kubernetes and Docker Swarm. Virtual machines are managed with virtualization tools such as VMware, VirtualBox, and Hyper-V.

Q: Can containers and virtual machines work together?

A: Yes, containers and virtual machines can complement each other. For instance, you can run containers inside virtual machines for added isolation, or to run different types of applications in distinct environments.

Q: Which platforms support containers and virtual machines?

A: Both are supported on Windows, Linux, and macOS. Docker, Podman, Kubernetes, and Docker Swarm are popular for containers, while KVM, VMware, VirtualBox, and Hyper-V are well known for virtual machines. Note that on macOS and Windows, Docker Desktop runs Linux containers inside a lightweight virtual machine.

Q: Which technology is better for scaling applications?

A: Containers are generally better suited for scaling applications because they are lightweight, start quickly, and share resources efficiently. They are designed to work seamlessly with container orchestration platforms such as Kubernetes.

My Recommendation for Beginners

If you're new to the world of software and looking for the right virtualization and containerization tools to begin with, my suggestion is to start with VirtualBox for creating virtual machines and Docker for handling containers. Both tools are available for Windows, Linux, and macOS.

There is also a popular tool called Vagrant that lets you spin up virtual machines in VirtualBox and other providers in an automated way.

This removes the overhead of installing an operating system in the VM by hand. Instead, you create a Vagrant configuration file (a Vagrantfile) and run a single command, `vagrant up`, to provision the VM.
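As a rough sketch of that workflow, the Python snippet below writes a minimal Vagrantfile (Vagrant's configuration file, which uses Ruby syntax) for a VirtualBox VM. The box name `ubuntu/jammy64`, the hostname, and the memory and CPU values are example assumptions; `vb.memory` is given in megabytes. After writing the file, running `vagrant up` in the same directory would download the box if needed and boot the VM.

```python
import tempfile
from pathlib import Path

# Vagrantfiles use Ruby syntax; the {placeholders} are filled in by str.format.
VAGRANTFILE_TEMPLATE = """Vagrant.configure("2") do |config|
  config.vm.box = "{box}"
  config.vm.hostname = "{hostname}"
  config.vm.provider "virtualbox" do |vb|
    vb.memory = {memory_mb}
    vb.cpus = {cpus}
  end
end
"""


def write_vagrantfile(directory: str, box: str = "ubuntu/jammy64", hostname: str = "dev-vm",
                      memory_mb: int = 2048, cpus: int = 2) -> Path:
    """Render a minimal VirtualBox-backed Vagrantfile into `directory` and return its path."""
    path = Path(directory) / "Vagrantfile"
    path.write_text(VAGRANTFILE_TEMPLATE.format(
        box=box, hostname=hostname, memory_mb=memory_mb, cpus=cpus))
    return path


# Demo: render into a temporary directory and show the result.
with tempfile.TemporaryDirectory() as tmp:
    rendered = write_vagrantfile(tmp).read_text()
print(rendered)
```

In day-to-day use you would normally just keep the Vagrantfile in version control; generating it is only useful when you need many similar VMs with different sizes.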

We have reached the end of the article and by now you should have a fair idea about virtual machines and containers.

Conclusion

In summary, containers are a good choice for applications that need to be lightweight and portable, while virtual machines are a good choice for applications that need strong isolation and security.

The choice ultimately depends on your specific needs, with containers optimizing resource usage and virtual machines ensuring strong separation.

Learning these two technologies will deepen your domain knowledge and improve your job opportunities in IT infrastructure, cloud computing, software development, DevOps, and modern application deployment.

By mastering containers and virtual machines, individuals can position themselves as valuable assets in a variety of roles related to system administration, software engineering, cloud architecture, and more.

The above is the detailed content of Containers Vs Virtual Machines: A Detailed Comparison. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1379

1379

52

52

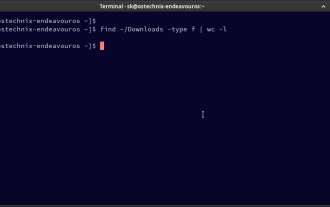

How To Count Files And Directories In Linux: A Beginner's Guide

Mar 19, 2025 am 10:48 AM

How To Count Files And Directories In Linux: A Beginner's Guide

Mar 19, 2025 am 10:48 AM

Efficiently Counting Files and Folders in Linux: A Comprehensive Guide Knowing how to quickly count files and directories in Linux is crucial for system administrators and anyone managing large datasets. This guide demonstrates using simple command-l

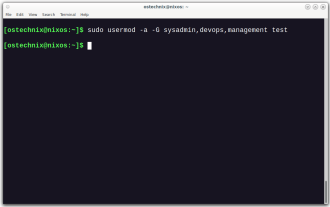

How To Add A User To Multiple Groups In Linux

Mar 18, 2025 am 11:44 AM

How To Add A User To Multiple Groups In Linux

Mar 18, 2025 am 11:44 AM

Efficiently managing user accounts and group memberships is crucial for Linux/Unix system administration. This ensures proper resource and data access control. This tutorial details how to add a user to multiple groups in Linux and Unix systems. We

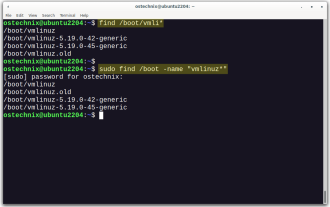

How To List Or Check All Installed Linux Kernels From Commandline

Mar 23, 2025 am 10:43 AM

How To List Or Check All Installed Linux Kernels From Commandline

Mar 23, 2025 am 10:43 AM

Linux Kernel is the core component of a GNU/Linux operating system. Developed by Linus Torvalds in 1991, it is a free, open-source, monolithic, modular, and multitasking Unix-like kernel. In Linux, it is possible to install multiple kernels on a sing

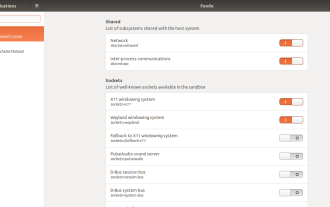

How To Easily Configure Flatpak Apps Permissions With Flatseal

Mar 22, 2025 am 09:21 AM

How To Easily Configure Flatpak Apps Permissions With Flatseal

Mar 22, 2025 am 09:21 AM

Flatpak application permission management tool: Flatseal User Guide Flatpak is a tool designed to simplify Linux software distribution and use. It safely encapsulates applications in a virtual sandbox, allowing users to run applications without root permissions without affecting system security. Because Flatpak applications are located in this sandbox environment, they must request permissions to access other parts of the operating system, hardware devices (such as Bluetooth, network, etc.) and sockets (such as pulseaudio, ssh-auth, cups, etc.). This guide will guide you on how to easily configure Flatpak with Flatseal on Linux

How To Type Indian Rupee Symbol In Ubuntu Linux

Mar 22, 2025 am 10:39 AM

How To Type Indian Rupee Symbol In Ubuntu Linux

Mar 22, 2025 am 10:39 AM

This brief guide explains how to type Indian Rupee symbol in Linux operating systems. The other day, I wanted to type "Indian Rupee Symbol (₹)" in a word document. My keyboard has a rupee symbol on it, but I don't know how to type it. After

What is the Linux best used for?

Apr 03, 2025 am 12:11 AM

What is the Linux best used for?

Apr 03, 2025 am 12:11 AM

Linux is best used as server management, embedded systems and desktop environments. 1) In server management, Linux is used to host websites, databases, and applications, providing stability and reliability. 2) In embedded systems, Linux is widely used in smart home and automotive electronic systems because of its flexibility and stability. 3) In the desktop environment, Linux provides rich applications and efficient performance.

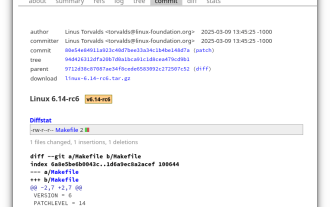

Linux Kernel 6.14 RC6 Released

Mar 24, 2025 am 10:21 AM

Linux Kernel 6.14 RC6 Released

Mar 24, 2025 am 10:21 AM

Linus Torvalds has released Linux Kernel 6.14 Release Candidate 6 (RC6), reporting no significant issues and keeping the release on track. The most notable change in this update addresses an AMD microcode signing issue, while the rest of the updates

LocalSend - The Open-Source Airdrop Alternative For Secure File Sharing

Mar 24, 2025 am 09:20 AM

LocalSend - The Open-Source Airdrop Alternative For Secure File Sharing

Mar 24, 2025 am 09:20 AM

If you're familiar with AirDrop, you know it's a popular feature developed by Apple Inc. that enables seamless file transfer between supported Macintosh computers and iOS devices using Wi-Fi and Bluetooth. However, if you're using Linux and missing o