What are the best practices for database schema design to improve performance?

When designing a database schema to improve performance, several best practices should be considered:

- Normalization: Normalize your database to reduce data redundancy and improve data integrity. This involves organizing data into tables in such a way that each piece of data is stored in one place and one place only. However, be mindful of over-normalization, which can lead to complex queries and decreased performance.

- Denormalization: In some cases, denormalization can be beneficial for read-heavy operations. By duplicating data across tables, you can reduce the need for complex joins, thereby improving query performance. The key is to balance normalization and denormalization based on your specific use case.

- Indexing: Proper indexing is crucial for performance. Create indexes on columns that are frequently used in WHERE clauses, JOIN conditions, and ORDER BY statements. However, too many indexes can slow down write operations, so it's important to strike a balance.

- Partitioning: For large databases, partitioning can help manage and query data more efficiently. By dividing a table into smaller, more manageable parts based on a specific key, you can improve query performance and simplify maintenance tasks.

- Use of Appropriate Data Types: Choose the smallest data type that safely accommodates your data. Narrower columns mean smaller rows and smaller indexes, so more of them fit in memory. For example, in MySQL an INT takes 4 bytes and a BIGINT takes 8, so use INT when the values will always fit within its range.

- Avoiding Unnecessary Columns: Only include columns that your application actually needs. Unused columns widen every row, which increases table size and means each page read from disk holds fewer rows.

- Optimizing JOIN Operations: Design your schema to minimize the number of JOIN operations required. When JOINs are necessary, ensure that the columns used in the JOIN conditions are indexed.

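- Regular Maintenance: Regularly update statistics and rebuild indexes so the query optimizer has current information to work with. In MySQL, ANALYZE TABLE refreshes index statistics and OPTIMIZE TABLE rebuilds a table and its indexes. Stale statistics can lead the optimizer to choose poor execution plans.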

By following these best practices, you can design a database schema that not only meets your data integrity needs but also performs efficiently.
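
Several of the practices above (normalization, compact data types, and an index on the JOIN column) can be sketched together in a few lines. This is only an illustration: it uses Python's built-in sqlite3 module as a stand-in for MySQL so that it runs anywhere, and the `customers` and `orders` tables and their columns are hypothetical.

```python
import sqlite3

# In-memory SQLite stands in for MySQL here (an assumption for portability).
conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Normalized: each customer's name is stored exactly once.
    CREATE TABLE customers (
        id   INTEGER PRIMARY KEY,
        name TEXT NOT NULL
    );
    CREATE TABLE orders (
        id          INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(id),
        total_cents INTEGER NOT NULL  -- compact integer column for money
    );
    -- Index the JOIN column so lookups by customer avoid scanning all orders.
    CREATE INDEX idx_orders_customer ON orders (customer_id);
""")

conn.executemany(
    "INSERT INTO customers (id, name) VALUES (?, ?)",
    [(1, "Ada"), (2, "Grace")],
)
conn.executemany(
    "INSERT INTO orders (customer_id, total_cents) VALUES (?, ?)",
    [(1, 1500), (1, 2500), (2, 999)],
)

# One JOIN is enough to recombine the normalized data.
totals = conn.execute("""
    SELECT c.name, SUM(o.total_cents)
    FROM customers AS c
    JOIN orders AS o ON o.customer_id = c.id
    GROUP BY c.id, c.name
    ORDER BY c.id
""").fetchall()
print(totals)
```

In MySQL the same design would typically use explicit types such as INT or INT UNSIGNED instead of SQLite's generic INTEGER.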

What indexing strategies can significantly enhance database query performance?

Indexing is a powerful tool for enhancing database query performance. Here are some strategies that can significantly improve performance:

- Primary and Unique Indexes: Define a primary key on every table; MySQL creates the primary key index automatically. Additionally, create unique indexes on columns that must contain unique values. These indexes not only enforce data integrity but also speed up queries that filter on these columns.

- Composite Indexes: Use composite indexes when queries frequently filter on multiple columns. A composite index on columns (A, B, C) can speed up queries that filter on A, on A and B, or on A, B, and C, because the index is usable for any leftmost prefix of its columns. It generally will not help queries that filter only on B or only on C.

- Covering Indexes: A covering index includes all the columns needed to satisfy a query. This means the database engine can retrieve all the required data from the index itself without having to look up the actual table, significantly speeding up the query.

- Clustered Indexes: A clustered index determines the physical order of rows in a table, so a table can have only one. It benefits range queries and queries that retrieve rows in index order. In MySQL's InnoDB engine, the primary key serves as the clustered index when one is defined.

- Non-Clustered Indexes: These indexes do not affect the physical order of the data. They let the engine locate matching rows without a full table scan and are particularly useful for columns used in WHERE clauses and JOIN conditions.

- Indexing on Frequently Used Columns: Identify columns that are frequently used in WHERE clauses, JOIN conditions, and ORDER BY statements, and create indexes on these columns. However, be cautious not to over-index, as this can slow down write operations.

- Partial Indexes: Some databases, such as PostgreSQL and SQLite, support partial indexes that cover only the rows matching a condition (SQL Server calls these filtered indexes). They can improve queries that only need a small portion of the data while keeping the index small. MySQL does not support partial indexes of this kind.

- Regular Index Maintenance: Regularly rebuild and reorganize indexes to ensure they remain efficient. Over time, indexes can become fragmented, which can degrade performance.

By implementing these indexing strategies, you can significantly enhance the performance of your database queries.
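
The composite, covering, and partial index behavior described above can be observed by asking the query planner which access path it would choose. The sketch below again assumes Python's built-in sqlite3 as a stand-in: SQLite's `EXPLAIN QUERY PLAN` plays the role of MySQL's `EXPLAIN`, the exact plan text varies between SQLite versions, and the `events` table and the `plan` helper are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE events (
        id      INTEGER PRIMARY KEY,
        a       INTEGER NOT NULL,
        b       INTEGER NOT NULL,
        c       INTEGER NOT NULL,
        user_id INTEGER NOT NULL,
        status  TEXT    NOT NULL,
        payload TEXT
    );
    -- Composite index: usable for filters on (a), (a, b), or (a, b, c).
    CREATE INDEX idx_abc ON events (a, b, c);
    -- Partial index: only rows with status = 'open' are indexed.
    CREATE INDEX idx_open_user ON events (user_id) WHERE status = 'open';
""")

def plan(sql):
    """Return the planner's textual description of each step for `sql`."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return [row[3] for row in rows]  # column 3 holds the detail text

# Filters on a leftmost prefix (a, b): the composite index is searched.
prefix_plan = plan("SELECT payload FROM events WHERE a = 1 AND b = 2")

# Filters only on b: no leftmost prefix, so the table is scanned.
no_prefix_plan = plan("SELECT payload FROM events WHERE b = 2")

# Every referenced column is in the index: the table itself is never read.
covering_plan = plan("SELECT c FROM events WHERE a = 1 AND b = 2")

# The query repeats the index's condition, so the partial index applies.
partial_plan = plan(
    "SELECT payload FROM events WHERE status = 'open' AND user_id = 7"
)

for name, steps in [("prefix", prefix_plan), ("no prefix", no_prefix_plan),
                    ("covering", covering_plan), ("partial", partial_plan)]:
    print(name, "->", steps)
```

In MySQL, the equivalent check is to run `EXPLAIN` on each query and look at the `key` column; a covering index shows up as "Using index" in the `Extra` column.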

How can normalization and denormalization be balanced to optimize database performance?

Balancing normalization and denormalization is crucial for optimizing database performance. Here's how you can achieve this balance:

- Understand Your Workload: The first step is to understand your database workload. If your application is read-heavy, denormalization might be beneficial to reduce the number of JOIN operations. Conversely, if your application is write-heavy, normalization might be more appropriate to minimize data redundancy and improve data integrity.

- Identify Performance Bottlenecks: Use query analysis tools to identify performance bottlenecks. If certain queries are slow due to multiple JOINs, consider denormalizing the data to improve performance. Conversely, if data integrity issues are causing problems, normalization might be necessary.

- Use Hybrid Approaches: In many cases, a hybrid approach works best. You can normalize your data to a certain extent and then denormalize specific parts of the schema that are critical for performance. For example, you might keep your core data normalized but denormalize certain frequently accessed fields to improve read performance.

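- Materialized Views: Materialized views can be a good compromise between normalization and denormalization. They store the result of a query physically and refresh it periodically or on demand. This allows you to maintain a normalized schema while still benefiting from the read performance of denormalization, at the cost of results that may be slightly stale between refreshes. PostgreSQL and Oracle support materialized views natively; MySQL does not, so the usual substitute there is a summary table refreshed by a scheduled job or triggers.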

- Data Warehousing: For analytical workloads, consider using a data warehouse with a denormalized schema. This can significantly improve query performance for reporting and analytics, while keeping your transactional database normalized.

- Regular Monitoring and Tuning: Continuously monitor your database performance and be prepared to adjust your normalization/denormalization strategy as your application evolves. What works well today might not be optimal tomorrow.

By carefully balancing normalization and denormalization based on your specific use case and workload, you can optimize your database performance without compromising data integrity.
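
As a rough sketch of the hybrid approach, the example below keeps a normalized `orders` table as the source of truth and maintains a denormalized summary table that is rebuilt on demand, in the spirit of a hand-rolled materialized view. It assumes Python's built-in sqlite3 as a stand-in for MySQL; the table names and the `refresh_customer_totals` function are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Normalized source of truth.
    CREATE TABLE orders (
        id          INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL,
        total_cents INTEGER NOT NULL
    );
    -- Denormalized read model: one precomputed row per customer.
    CREATE TABLE customer_totals (
        customer_id INTEGER PRIMARY KEY,
        order_count INTEGER NOT NULL,
        total_cents INTEGER NOT NULL
    );
""")

def refresh_customer_totals(conn):
    """Rebuild the summary table from the source table in one transaction."""
    with conn:  # commits on success, rolls back on error
        conn.execute("DELETE FROM customer_totals")
        conn.execute("""
            INSERT INTO customer_totals (customer_id, order_count, total_cents)
            SELECT customer_id, COUNT(*), SUM(total_cents)
            FROM orders
            GROUP BY customer_id
        """)

def read_totals(conn):
    """Cheap read path: no aggregation or JOIN at query time."""
    return conn.execute(
        "SELECT customer_id, order_count, total_cents "
        "FROM customer_totals ORDER BY customer_id"
    ).fetchall()

conn.executemany(
    "INSERT INTO orders (customer_id, total_cents) VALUES (?, ?)",
    [(1, 1500), (1, 2500), (2, 999)],
)
refresh_customer_totals(conn)
before = read_totals(conn)

# A new write lands in the normalized table only...
conn.execute("INSERT INTO orders (customer_id, total_cents) VALUES (2, 1)")
stale = read_totals(conn)   # ...so the summary is stale until refreshed.

refresh_customer_totals(conn)
after = read_totals(conn)
print(before, stale, after)
```

The trade-off is visible in the `stale` read: queries against the summary table are cheap, but they only reflect the data as of the last refresh, so the refresh interval has to match how fresh the application needs its reads to be.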

What tools or software can help in analyzing and improving database schema design for better performance?

Several tools and software can help in analyzing and improving database schema design for better performance. Here are some of the most effective ones:

- Database Management Systems (DBMS): Most modern DBMS, such as MySQL, PostgreSQL, and Oracle, come with built-in tools for analyzing and optimizing database performance. For example, MySQL's EXPLAIN statement can help you understand how queries are executed and identify potential performance issues.

- Query Analyzers: Tools like SQL Server Management Studio (SSMS) for Microsoft SQL Server, pgAdmin for PostgreSQL, and Oracle SQL Developer for Oracle databases provide query analysis features. These tools can help you identify slow queries and suggest optimizations.

- Database Profiling Tools: Tools like New Relic, Datadog, and Dynatrace can monitor your database performance in real-time. They provide insights into query performance, resource usage, and other metrics that can help you identify and resolve performance bottlenecks.

- Schema Design and Modeling Tools: Tools like ER/Studio, Toad Data Modeler, and DbDesigner 4 can help you design and model your database schema. These tools often include features for analyzing the impact of schema changes on performance.

- Index Tuning Tools: Tools like SQL Server's Database Engine Tuning Advisor and Oracle's SQL Access Advisor can analyze your workload and recommend index changes to improve performance.

- Performance Monitoring and Diagnostics Tools: Tools like SolarWinds Database Performance Analyzer and Redgate SQL Monitor provide comprehensive monitoring and diagnostics capabilities. They can help you identify performance issues and suggest optimizations.

- Database Migration and Optimization Tools: Tools like AWS Database Migration Service and Google Cloud's Database Migration Service can help you migrate your database to the cloud and optimize its performance. These services often include features for analyzing and improving schema design.

- Open-Source Tools: Open-source tools like pgBadger for PostgreSQL and pt-query-digest for MySQL can help you analyze query logs and identify performance issues. These tools are often free and can be customized to meet your specific needs.

By leveraging these tools and software, you can gain valuable insights into your database schema design and make informed decisions to improve performance.
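
The basic workflow these tools support (inspect the plan, change the schema, inspect again) can be tried on a small scale. The sketch below assumes Python's built-in sqlite3 and its `EXPLAIN QUERY PLAN` statement as a stand-in for MySQL's `EXPLAIN`; the `users` table, the query, and the `plan` helper are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT NOT NULL, name TEXT)"
)

QUERY = "SELECT name FROM users WHERE email = 'ada@example.com'"

def plan(sql):
    """Return the planner's textual description of each step for `sql`."""
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql)]

# Step 1: inspect the plan. With no index on email, the table is scanned.
plan_before = plan(QUERY)

# Step 2: apply the schema change the plan suggests.
conn.execute("CREATE INDEX idx_users_email ON users (email)")

# Step 3: inspect again to confirm the planner now uses the index.
plan_after = plan(QUERY)

print("before:", plan_before)
print("after: ", plan_after)
```

With MySQL, the same loop would use `EXPLAIN` on the real query and a check of the `type` and `key` columns before and after adding the index.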

When might a full table scan be faster than using an index in MySQL?

Apr 09, 2025 am 12:05 AM

When might a full table scan be faster than using an index in MySQL?

Apr 09, 2025 am 12:05 AM

Full table scanning may be faster in MySQL than using indexes. Specific cases include: 1) the data volume is small; 2) when the query returns a large amount of data; 3) when the index column is not highly selective; 4) when the complex query. By analyzing query plans, optimizing indexes, avoiding over-index and regularly maintaining tables, you can make the best choices in practical applications.

Explain InnoDB Full-Text Search capabilities.

Apr 02, 2025 pm 06:09 PM

Explain InnoDB Full-Text Search capabilities.

Apr 02, 2025 pm 06:09 PM

InnoDB's full-text search capabilities are very powerful, which can significantly improve database query efficiency and ability to process large amounts of text data. 1) InnoDB implements full-text search through inverted indexing, supporting basic and advanced search queries. 2) Use MATCH and AGAINST keywords to search, support Boolean mode and phrase search. 3) Optimization methods include using word segmentation technology, periodic rebuilding of indexes and adjusting cache size to improve performance and accuracy.

Can I install mysql on Windows 7

Apr 08, 2025 pm 03:21 PM

Can I install mysql on Windows 7

Apr 08, 2025 pm 03:21 PM

Yes, MySQL can be installed on Windows 7, and although Microsoft has stopped supporting Windows 7, MySQL is still compatible with it. However, the following points should be noted during the installation process: Download the MySQL installer for Windows. Select the appropriate version of MySQL (community or enterprise). Select the appropriate installation directory and character set during the installation process. Set the root user password and keep it properly. Connect to the database for testing. Note the compatibility and security issues on Windows 7, and it is recommended to upgrade to a supported operating system.

Difference between clustered index and non-clustered index (secondary index) in InnoDB.

Apr 02, 2025 pm 06:25 PM

Difference between clustered index and non-clustered index (secondary index) in InnoDB.

Apr 02, 2025 pm 06:25 PM

The difference between clustered index and non-clustered index is: 1. Clustered index stores data rows in the index structure, which is suitable for querying by primary key and range. 2. The non-clustered index stores index key values and pointers to data rows, and is suitable for non-primary key column queries.

MySQL: Simple Concepts for Easy Learning

Apr 10, 2025 am 09:29 AM

MySQL: Simple Concepts for Easy Learning

Apr 10, 2025 am 09:29 AM

MySQL is an open source relational database management system. 1) Create database and tables: Use the CREATEDATABASE and CREATETABLE commands. 2) Basic operations: INSERT, UPDATE, DELETE and SELECT. 3) Advanced operations: JOIN, subquery and transaction processing. 4) Debugging skills: Check syntax, data type and permissions. 5) Optimization suggestions: Use indexes, avoid SELECT* and use transactions.

The relationship between mysql user and database

Apr 08, 2025 pm 07:15 PM

The relationship between mysql user and database

Apr 08, 2025 pm 07:15 PM

In MySQL database, the relationship between the user and the database is defined by permissions and tables. The user has a username and password to access the database. Permissions are granted through the GRANT command, while the table is created by the CREATE TABLE command. To establish a relationship between a user and a database, you need to create a database, create a user, and then grant permissions.

Can mysql and mariadb coexist

Apr 08, 2025 pm 02:27 PM

Can mysql and mariadb coexist

Apr 08, 2025 pm 02:27 PM

MySQL and MariaDB can coexist, but need to be configured with caution. The key is to allocate different port numbers and data directories to each database, and adjust parameters such as memory allocation and cache size. Connection pooling, application configuration, and version differences also need to be considered and need to be carefully tested and planned to avoid pitfalls. Running two databases simultaneously can cause performance problems in situations where resources are limited.

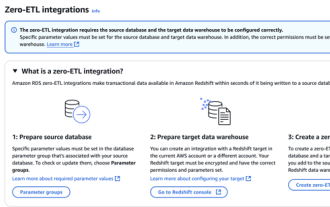

RDS MySQL integration with Redshift zero ETL

Apr 08, 2025 pm 07:06 PM

RDS MySQL integration with Redshift zero ETL

Apr 08, 2025 pm 07:06 PM

Data Integration Simplification: AmazonRDSMySQL and Redshift's zero ETL integration Efficient data integration is at the heart of a data-driven organization. Traditional ETL (extract, convert, load) processes are complex and time-consuming, especially when integrating databases (such as AmazonRDSMySQL) with data warehouses (such as Redshift). However, AWS provides zero ETL integration solutions that have completely changed this situation, providing a simplified, near-real-time solution for data migration from RDSMySQL to Redshift. This article will dive into RDSMySQL zero ETL integration with Redshift, explaining how it works and the advantages it brings to data engineers and developers.