Automating CSV to PostgreSQL Ingestion with Airflow and Docker

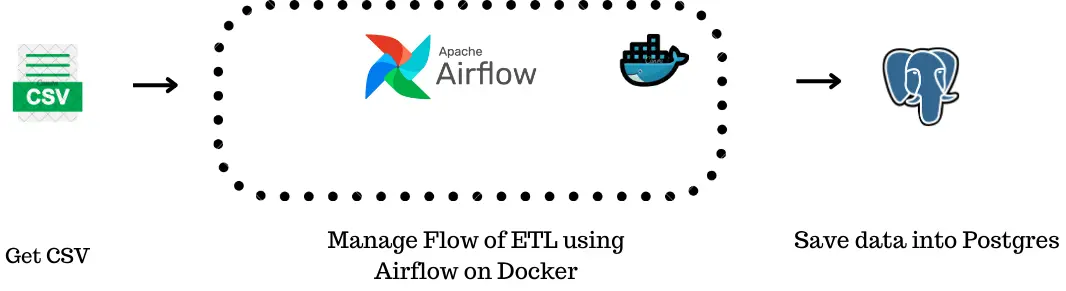

This tutorial builds a data pipeline with Apache Airflow, Docker, and PostgreSQL that automates transferring data from CSV files into a database. Along the way, it covers the core Airflow concepts of DAGs, tasks, and operators.

The pipeline reads CSV data, converts it into SQL statements, and writes it to a PostgreSQL database, combining several Airflow components to handle the data reliably and keep it consistent.

Learning Objectives:

- Grasp core Apache Airflow concepts: DAGs, tasks, and operators.

- Set up and configure Apache Airflow with Docker for workflow automation.

- Integrate PostgreSQL for data management within Airflow pipelines.

- Master reading CSV files and automating data insertion into a PostgreSQL database.

- Build and deploy scalable, efficient data pipelines using Airflow and Docker.

Prerequisites:

- Docker Desktop, VS Code, Docker Compose

- Basic understanding of Docker containers and commands

- Basic Linux commands

- Basic Python knowledge

- Experience building Docker images from Dockerfiles and using Docker Compose

What is Apache Airflow?

Apache Airflow (Airflow) is a platform for programmatically authoring, scheduling, and monitoring workflows. Defining workflows as code improves maintainability, version control, testing, and collaboration. Its user interface simplifies visualizing pipelines, monitoring progress, and troubleshooting.

Airflow Terminology:

- Workflow: A step-by-step process to achieve a goal (e.g., baking a cake).

- DAG (Directed Acyclic Graph): A blueprint of the workflow that defines its tasks, their dependencies, and their execution order. "Acyclic" means no task can depend, directly or indirectly, on itself. The Airflow UI renders each DAG as a graph.

- Task: A single action within a workflow (e.g., mixing ingredients).

- Operators: The building blocks of tasks. Each operator defines one kind of action, such as running a Python function or executing SQL. Key operators include `PythonOperator`, `DummyOperator` (superseded by `EmptyOperator` in Airflow 2.3 and later), and `PostgresOperator`.

- XComs (Cross-Communications): Enable tasks to communicate and share small pieces of data.

- Connections: Manage credentials for connecting to external systems (e.g., databases).
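A DAG's execution order follows entirely from the dependencies between its tasks. The sketch below is not Airflow code: it uses Python's standard-library `graphlib` to model the three tasks described for `sample.py` (the task names are hypothetical) and shows that a valid run order exists only while the graph has no cycles.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical task names mirroring the pipeline described for sample.py.
# Each task maps to the set of upstream tasks it depends on.
PIPELINE = {
    "create_table": set(),
    "generate_insert_queries": {"create_table"},
    "run_insert_queries": {"generate_insert_queries"},
}


def execution_order(dag):
    """Return one valid run order; raise CycleError if the graph has a cycle."""
    return list(TopologicalSorter(dag).static_order())


def is_acyclic(dag):
    """True if a topological order exists, i.e. the graph is a valid DAG."""
    try:
        execution_order(dag)
    except CycleError:
        return False
    return True


print(execution_order(PIPELINE))
# ['create_table', 'generate_insert_queries', 'run_insert_queries']

# Making create_table depend on the last task closes a loop, so this is no longer a DAG.
CYCLIC = dict(PIPELINE, create_table={"run_insert_queries"})
print(is_acyclic(PIPELINE), is_acyclic(CYCLIC))  # True False
```

In an actual Airflow DAG file, the same chain would typically be declared with the bitshift operator, e.g. `create_table >> generate_insert_queries >> run_insert_queries`, where those names are hypothetical task variables.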

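Setting up Apache Airflow with Docker and a Dockerfile:

Using Docker ensures a consistent, reproducible environment, and a Dockerfile automates building the image. Save the following instructions in a file named `Dockerfile` (no extension):

```dockerfile
FROM apache/airflow:2.9.1-python3.9

# System packages are installed as root
USER root
RUN apt-get update \
    && apt-get install -y --no-install-recommends gcc python3-dev openjdk-17-jdk \
    && apt-get clean \
    && rm -rf /var/lib/apt/lists/*

# The official Airflow image expects pip to run as the airflow user, not root
USER airflow
COPY requirements.txt /requirements.txt
RUN pip install --upgrade pip \
    && pip install --no-cache-dir -r /requirements.txt
RUN pip install --no-cache-dir apache-airflow-providers-apache-spark apache-airflow-providers-amazon
```

This Dockerfile starts from the official Airflow image, installs system packages as root (a C compiler, Python headers, and a JDK, which Spark needs), then switches to the `airflow` user to install the Python dependencies from `requirements.txt` and additional Airflow providers. The Spark and AWS providers are only examples; install whichever providers your DAGs need.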

Docker Compose Configuration:

`docker-compose.yml` orchestrates the Docker containers. It defines services for the Airflow webserver, scheduler, triggerer, CLI, and init, plus the PostgreSQL database. Settings shared by all Airflow services, including the connection to PostgreSQL, live in an `x-airflow-common` section. The full `docker-compose.yml` is too long to reproduce here.
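Besides the UI, Airflow can read a connection from an environment variable named `AIRFLOW_CONN_<CONN_ID>` whose value is a connection URI, so the `write_to_psql` connection could also be declared in the shared environment settings of `docker-compose.yml`. The sketch below only builds and checks such a URI with Python's standard library; the host `postgres`, the port, the database and user `airflow`, and the password are all assumptions, and the password is hypothetical.

```python
from urllib.parse import quote, unquote, urlsplit


def connection_env_var(conn_id, user, password, host, port, database):
    """Return (variable_name, uri) for an Airflow connection defined via the environment.

    Credentials and database name are percent-encoded so characters such as
    '@' or ':' cannot break the URI.
    """
    name = "AIRFLOW_CONN_" + conn_id.upper()
    uri = (
        f"postgres://{quote(user, safe='')}:{quote(password, safe='')}"
        f"@{host}:{port}/{quote(database, safe='')}"
    )
    return name, uri


# Assumed values: compose service "postgres", database/user "airflow",
# and a hypothetical password containing reserved characters.
NAME, URI = connection_env_var("write_to_psql", "airflow", "p@ss:word", "postgres", 5432, "airflow")
print(NAME)  # AIRFLOW_CONN_WRITE_TO_PSQL
print(URI)   # postgres://airflow:p%40ss%3Aword@postgres:5432/airflow

# Round trip: the encoded parts decode back to the original values.
parts = urlsplit(URI)
print(parts.hostname, parts.port, unquote(parts.password))  # postgres 5432 p@ss:word
```

Percent-encoding matters because an unescaped `@` or `:` in a password would shift where the URI parser splits the credentials from the host. Treat this as an alternative to creating the connection in the UI, not a requirement of the tutorial.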

Project Setup and Execution:

- Create a project directory.

- Add the `Dockerfile` and `docker-compose.yml` files.

- Create `requirements.txt` listing the necessary Python packages (e.g., pandas).

- Run `docker-compose up -d` to start the containers.

- Access the Airflow UI at http://localhost:8080.

- Create a PostgreSQL connection in the Airflow UI, using `write_to_psql` as the connection ID.

- Create a sample `input.csv` file.
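The original sample `input.csv` is not shown, so the sketch below writes a small hypothetical one with Python's `csv` module. The columns (`id`, `name`, `city`) and rows are made up; one name deliberately contains an apostrophe, a common source of broken `INSERT` statements.

```python
import csv
from pathlib import Path

# Hypothetical schema and rows: the original input.csv is not shown.
FIELDNAMES = ["id", "name", "city"]
SAMPLE_ROWS = [
    {"id": "1", "name": "Alice", "city": "London"},
    {"id": "2", "name": "Bob", "city": "Paris"},
    {"id": "3", "name": "Dana O'Neil", "city": "Berlin"},  # apostrophe on purpose
]


def write_sample_csv(path):
    """Write a header plus SAMPLE_ROWS to `path`, creating parent folders; return the row count."""
    path = Path(path)
    path.parent.mkdir(parents=True, exist_ok=True)
    # newline="" lets the csv module control line endings itself.
    with path.open("w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDNAMES)
        writer.writeheader()
        writer.writerows(SAMPLE_ROWS)
    return len(SAMPLE_ROWS)
```

Calling `write_sample_csv("dags/input.csv")` from the project directory would create the file; the `dags/` location is an assumption about where your compose file mounts DAG files into the containers.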

DAG and Python Function:

The Airflow DAG (`sample.py`) defines the workflow:

- A `PostgresOperator` creates the database table.

- A `PythonOperator` (`generate_insert_queries`) reads the CSV and generates SQL `INSERT` statements, saving them to `dags/sql/insert_queries.sql`.

- Another `PostgresOperator` executes the generated SQL.
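The `generate_insert_queries` step can be sketched with the standard library alone. This is not the original `sample.py` code, which is not shown: the table name `sample_table` is hypothetical, every CSV field is emitted as a quoted string literal (empty fields become `NULL`, a design choice of this sketch), and single quotes are doubled so that values such as `O'Neil` do not break the SQL.

```python
import csv
from pathlib import Path


def sql_literal(value):
    """Render a CSV field as a PostgreSQL string literal (quotes doubled); empty -> NULL."""
    if value == "":
        return "NULL"
    return "'" + value.replace("'", "''") + "'"


def sql_identifier(name):
    """Quote a table or column name as a PostgreSQL identifier."""
    return '"' + name.replace('"', '""') + '"'


def generate_insert_queries(csv_path, sql_path, table="sample_table"):
    """Read csv_path and write one INSERT statement per data row to sql_path.

    The first CSV row is treated as the header. Returns the number of statements.
    """
    with open(csv_path, newline="", encoding="utf-8") as f:
        reader = csv.reader(f)
        header = next(reader)
        columns = ", ".join(sql_identifier(c) for c in header)
        statements = [
            f"INSERT INTO {sql_identifier(table)} ({columns}) "
            f"VALUES ({', '.join(sql_literal(v) for v in row)});"
            for row in reader
            if row  # csv.reader yields [] for blank lines; skip them
        ]
    out = Path(sql_path)
    out.parent.mkdir(parents=True, exist_ok=True)
    out.write_text("\n".join(statements) + "\n", encoding="utf-8")
    return len(statements)
```

In a DAG, a function like this would be passed to `PythonOperator` through its `python_callable` argument (with paths supplied via `op_kwargs`). Quoted literals are untyped in PostgreSQL, so a value like `'1'` can still be inserted into an `INTEGER` column. Generating SQL text is reasonable for a trusted tutorial file; for untrusted input or large files, parameterized queries or PostgreSQL's `COPY` are the usual alternatives.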

(The full `sample.py` is too long to reproduce here.)

Conclusion:

This project demonstrates a complete data pipeline using Airflow, Docker, and PostgreSQL. It highlights the benefits of automation and of Docker for reproducible environments, and shows how operators and the DAG structure keep workflow management efficient.
