A brief discussion on how to use PHP to crawl and analyze Zhihu user data


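This article presents a crawler, written with PHP's curl extension, that scrapes Zhihu user data and then analyzes users' attributes. I hope it serves as a useful reference for anyone who needs one.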

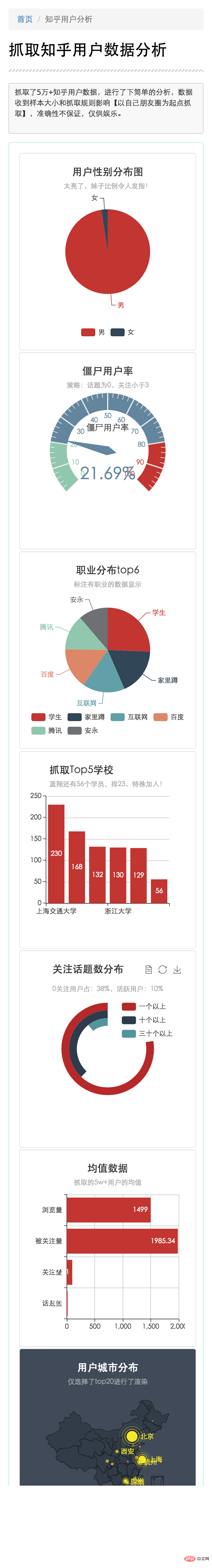

Screenshot of the analysis results on mobile

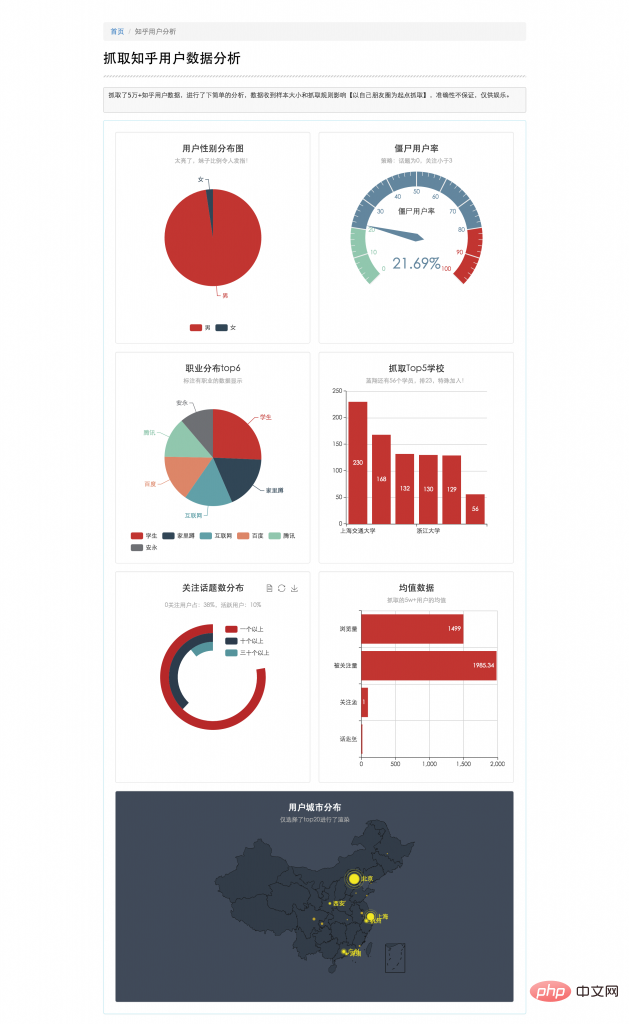

Screenshot of the analysis results on desktop

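The whole process of crawling, analyzing, and presenting breaks down roughly into the following steps, which I will cover in turn:

1. Crawling Zhihu pages with curl
2. Parsing Zhihu page data with regular expressions
3. Storing the data and deploying the program
4. Analyzing and presenting the data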

Crawling web pages with curl

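PHP's curl extension is a library, supported by PHP, that lets you connect to and communicate with many kinds of servers over many kinds of protocols. It is a very convenient tool for fetching web pages, and it can also run multiple transfers in parallel through its curl_multi functions.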

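This program crawls Zhihu's publicly accessible user profile pages, https://www.zhihu.com/people/xxx. Each request must carry a logged-in user's cookie, or the page cannot be retrieved. On to the code.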

Getting the page cookie

Log in to Zhihu, open your profile page, open the browser's developer console, and read the cookie with document.cookie:

```
// In the browser console, while logged in to Zhihu:
document.cookie
"_za=67254197-3wwb8d-43f6-94f0-fb0e2d521c31; _ga=GA1.2.2142818188.1433767929; q_c1=78ee1604225d47d08cddd8142a08288b23|1452172601000|1452172601000; _xsrf=15f0639cbe6fb607560c075269064393; cap_id="N2QwMTExNGQ0YTY2NGVddlMGIyNmQ4NjdjOTU0YTM5MmQ=|1453444256|49fdc6b43dc51f702b7d6575451e228f56cdaf5d"; __utmt=1; unlock_ticket="QUJDTWpmM0lsZdd2dYQUFBQVlRSlZUVTNVb1ZaNDVoQXJlblVmWGJ0WGwyaHlDdVdscXdZU1VRPT0=|1453444421|c47a2afde1ff334d416bafb1cc267b41014c9d5f"; __utma=51854390.21428dd18188.1433767929.1453187421.1453444257.3; __utmb=51854390.14.8.1453444425011; __utmc=51854390; __utmz=51854390.1452846679.1.dd1.utmcsr=google|utmccn=(organic)|utmcmd=organic|utmctr=(not%20provided); __utmv=51854390.100-1|2=registration_date=20150823=1^dd3=entry_date=20150823=1"
```

Using curl and sending that cookie, first fetch your own profile page:

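```php
/**
 * Fetch a user's profile page by user ID and save it to disk.
 *
 * @param string $username  user ID as it appears in the profile URL
 * @return bool             true if the page was fetched and saved
 */
public function spiderUser($username)
{
    $cookie = "xxxx"; // the cookie string copied from document.cookie

    // $username is the ID in the profile URL, for example cui-xiao-zhuai.
    // You can read your own ID straight from the URL of your profile page.
    $url_info = 'https://www.zhihu.com/people/' . $username;

    $ch = curl_init($url_info);                  // initialize the session
    curl_setopt($ch, CURLOPT_HEADER, 0);         // leave response headers out of the result
    curl_setopt($ch, CURLOPT_COOKIE, $cookie);   // send the request cookie
    // $_SERVER['HTTP_USER_AGENT'] is only set for web requests, so fall back to a fixed string under the CLI
    $ua = isset($_SERVER['HTTP_USER_AGENT']) ? $_SERVER['HTTP_USER_AGENT'] : 'Mozilla/5.0';
    curl_setopt($ch, CURLOPT_USERAGENT, $ua);
    curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1); // make curl_exec() return the page as a string instead of printing it
    curl_setopt($ch, CURLOPT_FOLLOWLOCATION, 1); // follow redirects

    $result = curl_exec($ch);
    curl_close($ch);
    if ($result === false) {
        return false; // the request failed
    }

    $path = '/home/work/zxdata_ch/php/zhihu_spider/file/' . $username . '.html';
    return file_put_contents($path, $result) !== false;
}
```

Parsing pages with regular expressions: extracting new links to crawl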

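The fetched pages are stored, but to keep crawling, each page must contain links to further users. Analyzing Zhihu's pages shows that a profile page lists the people the user follows, plus some of the people who upvoted them and some of their followers.

For example, a fetched HTML page contains links like this one, pointing to a new user the crawler can visit:

```html
<a class="zm-item-link-avatar avatar-link" href="/people/new-user" data-tip="p$t$new-user">
```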

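OK, so the crawl can keep expanding: yourself → the people you follow → the people they follow → … . The next step is to extract these links with a regular expression:

```php
// Match every user linked from the fetched page
preg_match_all('/\/people\/([\w-]+)\"/i', $str, $match_arr);
// De-duplicate and merge into the array of new users for further crawling
self::$newUserArr = array_unique(array_merge($match_arr[1], self::$newUserArr));
```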

With that, the whole crawl can run on its own.
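The self → followees → followees-of-followees expansion is a breadth-first traversal. One way to organize it, sketched under assumptions rather than taken from the article: a hypothetical crawlUsers() function takes a starting user ID and a $fetch callable (for instance, a wrapper that calls spiderUser() and reads back the saved file, not shown), keeps a queue plus a set of already-seen IDs in place of array_unique(), and reuses the /people/ regex from above.

```php
<?php
// Hypothetical breadth-first crawl loop (not the article's own code).
// $fetch is any callable that maps a user ID to that user's page HTML,
// or returns a non-string (e.g. false) when the fetch fails.
function crawlUsers($seed, callable $fetch, $maxUsers = 100)
{
    $queue = array($seed);          // user IDs waiting to be fetched
    $seen = array($seed => true);   // every ID ever queued, so no one is crawled twice
    $crawled = array();             // IDs in the order they were fetched

    while ($queue && count($crawled) < $maxUsers) {
        $user = array_shift($queue);
        $html = call_user_func($fetch, $user);
        $crawled[] = $user;
        if (!is_string($html)) {
            continue; // fetch failed: record the user but follow no links
        }

        // Same pattern as above: capture the ID in every /people/<id>" link
        preg_match_all('/\/people\/([\w-]+)\"/i', $html, $match_arr);
        foreach ($match_arr[1] as $next) {
            if (!isset($seen[$next])) {
                $seen[$next] = true;
                $queue[] = $next;
            }
        }
    }
    return $crawled;
}
```

Because IDs are marked as seen when they are queued, each profile is fetched at most once, and $maxUsers puts an upper bound on a single run.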

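If you need to crawl large volumes of data, look into curl_multi and pcntl for fast parallel crawling; the details are beyond the scope of this article.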
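For orientation only, here is a minimal sketch of the curl_multi side (pcntl process forking is not shown). The fetchAll() helper is hypothetical: it starts one easy handle per URL, drives them together with curl_multi_exec() and curl_multi_select(), and returns the bodies keyed like the input array. It does not distinguish a failed transfer from an empty page.

```php
<?php
// Hypothetical helper (not from the original article): fetch several URLs
// concurrently with PHP's curl_multi_* functions.
function fetchAll(array $urls, $cookie = '')
{
    $mh = curl_multi_init();
    $handles = array();

    foreach ($urls as $key => $url) {
        $ch = curl_init($url);
        curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1);   // collect the body instead of printing it
        curl_setopt($ch, CURLOPT_FOLLOWLOCATION, 1);   // follow redirects, as spiderUser() does
        if ($cookie !== '') {
            curl_setopt($ch, CURLOPT_COOKIE, $cookie); // send the login cookie
        }
        curl_multi_add_handle($mh, $ch);
        $handles[$key] = $ch;
    }

    // Drive all transfers until none is still running
    $running = 0;
    do {
        $status = curl_multi_exec($mh, $running);
        if ($running > 0) {
            curl_multi_select($mh, 1.0); // wait up to 1 s for activity instead of busy-looping
        }
    } while ($running > 0 && $status === CURLM_OK);

    // Collect the bodies and release the handles
    $results = array();
    foreach ($handles as $key => $ch) {
        $results[$key] = curl_multi_getcontent($ch);
        curl_multi_remove_handle($mh, $ch);
        curl_close($ch);
    }
    curl_multi_close($mh);

    return $results;
}
```

In practice you would pass a batch of profile URLs built from the queued user IDs and keep each batch small enough not to hammer the server.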

Extracting user data for analysis

Further regular expressions pull more of the user's data out of the page.
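Every field in the listing below is extracted the same way: run preg_match(), then read capture group 1. Reading $match[1] directly raises an undefined-index notice whenever a user has left a field blank. A minimal sketch of a hypothetical matchFirst() helper that returns null in that case, tried on the name and location patterns from the listing:

```php
<?php
// Hypothetical helper: return capture group 1 of $pattern in $str,
// or null when the pattern does not match (e.g. a field the user left blank).
function matchFirst($pattern, $str)
{
    if (preg_match($pattern, $str, $m) === 1 && isset($m[1])) {
        return $m[1];
    }
    return null;
}

// Sample HTML taken from the user-name comment in the listing
$str = '<span class="name">崔小拽</span>';
$user_name = matchFirst('/<span.+class=\"?name\"?>([\x{4e00}-\x{9fa5}]+).+span>/u', $str);
// The location span is absent from this sample, so the result is null rather than a notice
$user_city = matchFirst('/<span.+class=\"?location.+\"?.+\"([\x{4e00}-\x{9fa5}]+)\">/u', $str);
```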

```php
// $str holds the HTML of a saved profile page.

// Get the user's avatar
preg_match('/<img .+src=\"?([^\s]+\.(jpg|gif|bmp|bnp|png))\"?.+>/i', $str, $match_img);
$img_url = $match_img[1];

// Match the user name:
// <span class="name">崔小拽</span>
preg_match('/<span.+class=\"?name\"?>([\x{4e00}-\x{9fa5}]+).+span>/u', $str, $match_name);
$user_name = $match_name[1];

// Match the user bio
// a span with class "bio" containing Chinese text
preg_match('/<span.+class=\"?bio\"?.+\>([\x{4e00}-\x{9fa5}]+).+span>/u', $str, $match_title);
$user_title = $match_title[1];

// Match gender
// <input type="radio" name="gender" value="1" checked="checked" class="male"/> 男
// the gender input with value 1, then Chinese text, up to a ";"
preg_match('/<input.+name=\"?gender\"?.+value=\"?1\"?.+([\x{4e00}-\x{9fa5}]+).+\;/u', $str, $match_sex);
$user_sex = $match_sex[1];

// Match location
// <span class="location item" title="北京">
preg_match('/<span.+class=\"?location.+\"?.+\"([\x{4e00}-\x{9fa5}]+)\">/u', $str, $match_city);
$user_city = $match_city[1];

// Match employer
// <span class="employment item" title="人见人骂的公司">人见人骂的公司</span>
preg_match('/<span.+class=\"?employment.+\"?.+\"([\x{4e00}-\x{9fa5}]+)\">/u', $str, $match_employment);
$user_employ = $match_employment[1];

// Match job title
// <span class="position item" title="程序猿"><a href="/topic/19590046" title="程序猿" class="topic-link" data-token="19590046" data-topicid="13253">程序猿</a></span>
preg_match('/<span.+class=\"?position.+\"?.+\"([\x{4e00}-\x{9fa5}]+).+\">/u', $str, $match_position);
$user_position = $match_position[1];

// Match education
// <span class="education item" title="研究僧">研究僧</span>
preg_match('/<span.+class=\"?education.+\"?.+\"([\x{4e00}-\x{9fa5}]+)\">/u', $str, $match_education);
$user_education = $match_education[1];

// Work details (the education-extra field)
// <span class="education-extra item" title='挨踢'>挨踢</span>
preg_match('/<span.+class=\"?education-extra.+\"?.+>([\x{4e00}-\x{9fa5}]+)</u', $str, $match_education_extra);
$user_education_extra = $match_education_extra[1];

// Match the number of followed topics
// class="zg-link-litblue"><strong>41 个话题</strong></a>
preg_match('/class=\"?zg-link-litblue\"?><strong>(\d+)\s.+strong>/i', $str, $match_topic);
$user_topic = $match_topic[1];

// Following and follower counts: the first match is people followed, the second is followers
// <span class="zg-gray-normal">关注了
preg_match_all('/<strong>(\d+)<.+<label>/i', $str, $match_care);
$user_care = $match_care[1][0];
$user_be_careed = $match_care[1][1];

// Profile view count
// <span class="zg-gray-normal">个人主页被 <strong>17</strong> 人浏览</span>
preg_match('/class=\"?zg-gray-normal\"?.+>(\d+)<.+span>/i', $str, $match_browse);
$user_browse = $match_browse[1];
```

Storing the data and deploying the program

During crawling, if you have the resources, write the data through Redis first; it really does improve crawling and storage throughput. Otherwise, you will have to rely on SQL tuning. Here are a few lessons learned.

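Design table indexes with care. While the spider is crawling, I recommend leaving every field except the username unindexed, not even a primary key, to maximize insert throughput. Imagine 50 million rows inserted one at a time, and how much index maintenance each insert would cost. Once crawling is finished and you need to analyze the data, build the indexes in one batch.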

Always insert and update data in batches. MySQL's official advice on the speed of inserts, updates, and deletes: http://dev.mysql.com/doc/refman/5.7/en/insert-speed.html

```sql
# The officially recommended form for batch inserts
INSERT INTO yourtable VALUES (1,2), (5,5), ...;
```
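From PHP, the batching advice amounts to sending one multi-row INSERT per batch rather than one statement per user. A minimal sketch of a hypothetical buildBatchInsert() helper that builds such a statement with PDO-style ? placeholders; the table and column names in the usage comment are made up for illustration.

```php
<?php
// Hypothetical helper: build a single multi-row INSERT with ? placeholders.
// Each row must list its values in the same order as $columns; pass at least one row.
function buildBatchInsert($table, array $columns, array $rows)
{
    // Quote identifiers with backticks, doubling any embedded backtick
    $quote = function ($name) {
        return '`' . str_replace('`', '``', $name) . '`';
    };

    // One "(?, ?, ...)" group per row
    $group = '(' . implode(', ', array_fill(0, count($columns), '?')) . ')';
    $sql = 'INSERT INTO ' . $quote($table)
        . ' (' . implode(', ', array_map($quote, $columns)) . ')'
        . ' VALUES ' . implode(', ', array_fill(0, count($rows), $group));

    // Flatten the rows into one parameter list in placeholder order
    $params = array();
    foreach ($rows as $row) {
        if (count($row) !== count($columns)) {
            throw new InvalidArgumentException('Row width does not match the column list');
        }
        foreach ($row as $value) {
            $params[] = $value;
        }
    }
    return array($sql, $params);
}

// Usage with PDO (the $pdo connection and the zhihu_user table are hypothetical):
// list($sql, $params) = buildBatchInsert('zhihu_user', array('username', 'city'), $rows);
// $pdo->prepare($sql)->execute($params);
```

Keep each batch bounded: a single statement must fit within MySQL's max_allowed_packet setting.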

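Deployment. The program may crash unexpectedly while crawling. To keep it efficient and stable, write a scheduled script that kills the spider at regular intervals and starts it again; that way, even if it crashes, you do not lose much valuable time. After all, time is money.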

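```bash
#!/bin/bash

# Kill the running spider
# (pkill -f matches the full command line; unlike "ps aux | grep spider",
# it does not also match the grep process itself)
pkill -9 -f spider_new.php

sleep 5s

# Start it again
nohup /home/cuixiaohuan/lamp/php5/bin/php /home/cuixiaohuan/php/zhihu_spider/spider_new.php &
```

Data analysis and presentation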

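The data is presented mainly with ECharts 3.0, which in my experience handles mobile devices reasonably well. The responsive, mobile-friendly page layout is controlled mainly by a few simple CSS rules.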

