The log file is 5 GB. How do I open it?

I want to analyze a log file, but it is 5 GB and I don't know what tool can open it. I tried two text splitters, but neither could even open the file, let alone split it. I'm looking for a workable method and a good text splitter.

Thank you everyone for providing so many methods!

Reply content:

What do you want to open it for? Do you just want to look at part of the content? A log is line-oriented. You can use the head command to check the first few lines and tail to check the last few lines. If you want to extract something from the log, use awk to process it line by line. If the processing logic is complex, use Python: file('log.log', 'r') (open('log.log', 'r') in Python 3, where file() no longer exists) returns a line iterator and uses very little memory.
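The Python suggestion above can be sketched as follows. This is a minimal sketch, assuming Python 3 (where the built-in open() replaces Python 2's file()) and a log whose fields are separated by whitespace; count_by_field and its parameters are hypothetical names for illustration, not something from the thread.

```python
from collections import Counter


def count_by_field(path, field_index=0, sep=None):
    """Count the values of one field, reading the log one line at a time.

    `field_index` and `sep` are illustrative assumptions about the log
    layout; sep=None splits on any run of whitespace.
    """
    counts = Counter()
    # errors="replace" keeps the loop going if the log contains stray bytes.
    with open(path, "r", encoding="utf-8", errors="replace") as log:
        for line in log:  # the file object yields one line per iteration
            fields = line.split(sep)
            if len(fields) > field_index:  # skip blank or short lines
                counts[fields[field_index]] += 1
    return counts
```

For example, count_by_field('log.log', 0).most_common(10) would list the ten most frequent values in the first column. Memory use depends on the number of distinct values, not on the size of the file.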

If you want to open it directly in a visual tool, the 64-bit version of UltraEdit mentioned in another reply can do it.

I recommend EmEditor. The picture below is taken from the introduction on its official website.

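You can use fopen, while (!feof($fp)), and fgets to walk through the file line by line:

<code><?php
header('Content-Type: text/plain; charset=utf-8');

$file = __FILE__; // replace with the path to the log file
$fp = fopen($file, 'r');
if ($fp === false) {
    exit("Cannot open $file");
}

$count = 0;
while (!feof($fp)) {
    $line = fgets($fp);
    if ($line === false) {
        break; // fgets returns false at end of file; do not count it as a line
    }
    //echo $count.': '.$line;
    $count++;
}
fclose($fp);</code>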

Just use the Linux split command:

<code>split -b 100m your_file</code>
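Note that split -b cuts at byte offsets, so a log line can end up divided between two chunks. If each chunk should contain only whole lines, GNU split's -C option (for example, split -C 100m your_file) is one way to get that. The same idea can be sketched in Python, which also helps where split is not available. split_by_lines, its parameters, and the part_ prefix are hypothetical names chosen for illustration.

```python
def split_by_lines(path, max_bytes=100 * 1024 * 1024, prefix="part_"):
    """Copy `path` into numbered chunk files without ever cutting a line.

    Each chunk holds at most `max_bytes` bytes, except when a single line
    is longer than that, in which case the line gets a chunk of its own.
    Returns the list of chunk file paths.
    """
    part_paths = []
    out = None
    written = 0
    try:
        with open(path, "rb") as src:  # binary mode: byte counts are exact
            for line in src:
                # Start a new chunk when this line would overflow the current one.
                if out is None or (written > 0 and written + len(line) > max_bytes):
                    if out is not None:
                        out.close()
                    part_path = f"{prefix}{len(part_paths):04d}"
                    out = open(part_path, "wb")
                    part_paths.append(part_path)
                    written = 0
                out.write(line)
                written += len(line)
    finally:
        if out is not None:
            out.close()
    return part_paths
```

Only one line is held in memory at a time, so the size of the input file does not matter.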

Try the 64-bit version of UltraEdit.

For log analysis, awk is recommended.

The logging setup isn't standardized enough.

Shouldn't logs have a size cap and be archived by category and level? A single 5 GB file is very painful to work with.

Try a Python generator.
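A minimal sketch of the generator approach, assuming Python 3 and a UTF-8 text log. The function names read_lines, grep, head, and tail are hypothetical and simply mirror the shell tools mentioned in the other replies.

```python
from collections import deque
from itertools import islice


def read_lines(path):
    """Yield lines lazily so only one line is held in memory at a time."""
    with open(path, "r", encoding="utf-8", errors="replace") as log:
        for line in log:
            yield line.rstrip("\n")


def grep(lines, keyword):
    """Yield only the lines that contain `keyword`."""
    return (line for line in lines if keyword in line)


def head(lines, n=10):
    """Return the first `n` lines and stop reading after that."""
    return list(islice(lines, n))


def tail(lines, n=10):
    """Return the last `n` lines; the deque never holds more than `n` items."""
    return list(deque(lines, maxlen=n))
```

The generators chain like a shell pipeline: tail(grep(read_lines('log.log'), 'ERROR'), 20) returns the last 20 lines containing ERROR. Note that tail still has to read the whole file once; it only avoids keeping the file in memory.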

I have opened log files larger than 2 GB in UltraEdit, though not one as large as 7 GB.

You can view the file with less!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1371

1371

52

52

mysql whether to change table lock table

Apr 08, 2025 pm 05:06 PM

mysql whether to change table lock table

Apr 08, 2025 pm 05:06 PM

When MySQL modifys table structure, metadata locks are usually used, which may cause the table to be locked. To reduce the impact of locks, the following measures can be taken: 1. Keep tables available with online DDL; 2. Perform complex modifications in batches; 3. Operate during small or off-peak periods; 4. Use PT-OSC tools to achieve finer control.

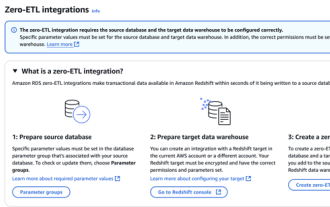

RDS MySQL integration with Redshift zero ETL

Apr 08, 2025 pm 07:06 PM

RDS MySQL integration with Redshift zero ETL

Apr 08, 2025 pm 07:06 PM

Data Integration Simplification: AmazonRDSMySQL and Redshift's zero ETL integration Efficient data integration is at the heart of a data-driven organization. Traditional ETL (extract, convert, load) processes are complex and time-consuming, especially when integrating databases (such as AmazonRDSMySQL) with data warehouses (such as Redshift). However, AWS provides zero ETL integration solutions that have completely changed this situation, providing a simplified, near-real-time solution for data migration from RDSMySQL to Redshift. This article will dive into RDSMySQL zero ETL integration with Redshift, explaining how it works and the advantages it brings to data engineers and developers.

Query optimization in MySQL is essential for improving database performance, especially when dealing with large data sets

Apr 08, 2025 pm 07:12 PM

Query optimization in MySQL is essential for improving database performance, especially when dealing with large data sets

Apr 08, 2025 pm 07:12 PM

1. Use the correct index to speed up data retrieval by reducing the amount of data scanned select*frommployeeswherelast_name='smith'; if you look up a column of a table multiple times, create an index for that column. If you or your app needs data from multiple columns according to the criteria, create a composite index 2. Avoid select * only those required columns, if you select all unwanted columns, this will only consume more server memory and cause the server to slow down at high load or frequency times For example, your table contains columns such as created_at and updated_at and timestamps, and then avoid selecting * because they do not require inefficient query se

The relationship between mysql user and database

Apr 08, 2025 pm 07:15 PM

The relationship between mysql user and database

Apr 08, 2025 pm 07:15 PM

In MySQL database, the relationship between the user and the database is defined by permissions and tables. The user has a username and password to access the database. Permissions are granted through the GRANT command, while the table is created by the CREATE TABLE command. To establish a relationship between a user and a database, you need to create a database, create a user, and then grant permissions.

Do mysql need to pay

Apr 08, 2025 pm 05:36 PM

Do mysql need to pay

Apr 08, 2025 pm 05:36 PM

MySQL has a free community version and a paid enterprise version. The community version can be used and modified for free, but the support is limited and is suitable for applications with low stability requirements and strong technical capabilities. The Enterprise Edition provides comprehensive commercial support for applications that require a stable, reliable, high-performance database and willing to pay for support. Factors considered when choosing a version include application criticality, budgeting, and technical skills. There is no perfect option, only the most suitable option, and you need to choose carefully according to the specific situation.

Can mysql run on android

Apr 08, 2025 pm 05:03 PM

Can mysql run on android

Apr 08, 2025 pm 05:03 PM

MySQL cannot run directly on Android, but it can be implemented indirectly by using the following methods: using the lightweight database SQLite, which is built on the Android system, does not require a separate server, and has a small resource usage, which is very suitable for mobile device applications. Remotely connect to the MySQL server and connect to the MySQL database on the remote server through the network for data reading and writing, but there are disadvantages such as strong network dependencies, security issues and server costs.

How to optimize MySQL performance for high-load applications?

Apr 08, 2025 pm 06:03 PM

How to optimize MySQL performance for high-load applications?

Apr 08, 2025 pm 06:03 PM

MySQL database performance optimization guide In resource-intensive applications, MySQL database plays a crucial role and is responsible for managing massive transactions. However, as the scale of application expands, database performance bottlenecks often become a constraint. This article will explore a series of effective MySQL performance optimization strategies to ensure that your application remains efficient and responsive under high loads. We will combine actual cases to explain in-depth key technologies such as indexing, query optimization, database design and caching. 1. Database architecture design and optimized database architecture is the cornerstone of MySQL performance optimization. Here are some core principles: Selecting the right data type and selecting the smallest data type that meets the needs can not only save storage space, but also improve data processing speed.

How to fill in mysql username and password

Apr 08, 2025 pm 07:09 PM

How to fill in mysql username and password

Apr 08, 2025 pm 07:09 PM

To fill in the MySQL username and password: 1. Determine the username and password; 2. Connect to the database; 3. Use the username and password to execute queries and commands.