MySQL - PHP bulk insert and update issues

Insert problem

When processing large batches of data in PHP, I came up with the following approaches:

Method 1: Build an INSERT statement inside the foreach loop and execute it on every iteration

insert into xxx values (xxx, xxx, xxx)

Method 2: Build a single multi-row INSERT statement inside the foreach loop and execute it once at the end

insert into xxx (field1, field2, field3) values (xxx1, xxx2, xxx3), (xxx1, xxx2, xxx3)

The above covers only plain insertion. If you need to check whether each row already exists in the database before inserting it, a SELECT has to run before every INSERT. Isn't that inefficient? How can it be optimized?

Update problem

This is essentially the same as the insert case above: SELECT before updating; if the row exists, update it, and if it does not, insert it. That still means a lot of SQL statements. How can it be optimized?

Reply content:

1- MySQL also has the REPLACE INTO syntax. If a row with the same primary or unique key already exists, it is deleted and the new row is inserted; otherwise the row is simply inserted.

2- MySQL has another syntax, INSERT INTO ... ON DUPLICATE KEY UPDATE. If the insert would cause a primary key or unique key conflict, the existing row is updated instead.

3- Truly large batch inserts are rare in real-world development. Anything under 1,000 rows certainly does not count as a large batch, so if you optimize just to save effort, you will usually end up confirming the old prophecy: the trouble caused by being lazy to save 4 hours will cost you 8 hours.

Large batch inserts usually happen when importing data from an old database, but that kind of import is normally a one-off, so it does not deserve too much attention. Other cases, such as importing data from an uploaded CSV file, depend on the specific business logic. A more common approach there is to insert inside try/catch, display the rows that failed, let the user confirm the overwrite, and then update.
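The try/catch flow described above could be sketched roughly as follows. This is only an illustration under assumptions: it uses PDO rather than mysqli, borrows the `posts(id, post_title, post_content)` table from the code later in this thread, and the function names `insertCollectingDuplicates` and `overwriteRows` are made up for the example.

```php
<?php
/**
 * Try to insert every row; return the rows rejected by an integrity
 * constraint so the caller can show them and ask whether to overwrite.
 * Each row is a list: [id, post_title, post_content].
 */
function insertCollectingDuplicates(PDO $pdo, array $rows): array
{
    $pdo->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);
    $insert = $pdo->prepare(
        'INSERT INTO posts (id, post_title, post_content) VALUES (?, ?, ?)'
    );
    $rejected = [];
    foreach ($rows as $row) {
        try {
            $insert->execute($row);
        } catch (PDOException $e) {
            // SQLSTATE class 23 = integrity constraint violation
            // (duplicate keys fall into this class).
            if (strncmp((string) $e->getCode(), '23', 2) !== 0) {
                throw $e; // anything else is a real error
            }
            $rejected[] = $row;
        }
    }
    return $rejected;
}

/** Overwrite the rows the user has confirmed. */
function overwriteRows(PDO $pdo, array $rows): void
{
    $update = $pdo->prepare(
        'UPDATE posts SET post_title = ?, post_content = ? WHERE id = ?'
    );
    foreach ($rows as [$id, $title, $content]) {
        $update->execute([$title, $content, $id]);
    }
}
```

In a CSV import, the array returned by `insertCollectingDuplicates()` would be what gets displayed to the user, and `overwriteRows()` would run only on the rows they approve.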

1) If you can guarantee that the data contains no duplicates, a plain INSERT is definitely the better fit.

2) Indexes: a proper index really does help performance a lot.

3) MySQL's batch import can help improve performance, but the downside is that data may be lost.

4) Turn off autocommit so that each statement is no longer committed as its own transaction, and commit once per batch of records instead (for example, every 10,000 records). This improves speed considerably; reaching 10,000 QPS on a single machine is then easy.

5) Redesign the MySQL setup: separate reads from writes, build a cluster, install SSDs...
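Points 1) and 4) can be combined: send multi-row INSERT statements and commit once per chunk rather than once per row. The sketch below is a minimal illustration, not a tuned implementation. It assumes a PDO connection, and the helper names `buildMultiRowInsert` and `insertInChunks` as well as the default chunk size are invented for the example.

```php
<?php
/**
 * Build one multi-row INSERT with positional placeholders.
 * Table and column names are interpolated directly, so they must come
 * from trusted code, never from user input.
 */
function buildMultiRowInsert(string $table, array $columns, int $rowCount): string
{
    $row = '(' . implode(', ', array_fill(0, count($columns), '?')) . ')';
    return sprintf(
        'INSERT INTO %s (%s) VALUES %s',
        $table,
        implode(', ', $columns),
        implode(', ', array_fill(0, $rowCount, $row))
    );
}

/**
 * Insert $rows in chunks, committing once per chunk.
 * Returns the number of chunks committed.
 */
function insertInChunks(PDO $pdo, string $table, array $columns, array $rows, int $chunkSize = 1000): int
{
    $committed = 0;
    foreach (array_chunk($rows, $chunkSize) as $chunk) {
        $sql = buildMultiRowInsert($table, $columns, count($chunk));
        // Flatten [[1, 'a'], [2, 'b']] into [1, 'a', 2, 'b'] to match the placeholders.
        $params = array_merge(...array_map('array_values', $chunk));
        $pdo->beginTransaction();
        try {
            $pdo->prepare($sql)->execute($params);
            $pdo->commit();
            $committed++;
        } catch (Throwable $e) {
            $pdo->rollBack(); // discard the failed chunk, keep earlier ones
            throw $e;
        }
    }
    return $committed;
}
```

A call such as `insertInChunks($pdo, 'posts', ['id', 'post_title'], $rows, 500)` would issue one statement and one commit per 500 rows. The chunk size times the column count has to stay under the server's limit on placeholders in a prepared statement, so very wide tables need smaller chunks.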

REPLACE deletes the existing row and then inserts the new one; ON DUPLICATE KEY UPDATE updates the existing row in place.

You can use REPLACE to avoid having to choose between UPDATE and INSERT yourself.

ON DUPLICATE KEY UPDATE: to use it, create a unique index on each field that must not be duplicated. You then no longer need to check whether to insert or update; when a duplicate occurs, the assignments following UPDATE are executed automatically.
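As a rough illustration of the statement this answer describes, the helper below builds a multi-row INSERT ... ON DUPLICATE KEY UPDATE with placeholders. The function name `buildUpsert` is hypothetical, and the `posts` table is borrowed from the code further down. It assumes `id` (or another listed column) carries a primary or unique key.

```php
<?php
/**
 * Build a multi-row INSERT ... ON DUPLICATE KEY UPDATE statement (MySQL syntax).
 * $updateColumns are overwritten with the incoming values when a key collides.
 * Identifiers are interpolated directly and must come from trusted code.
 */
function buildUpsert(string $table, array $columns, array $updateColumns, int $rowCount): string
{
    $row = '(' . implode(', ', array_fill(0, count($columns), '?')) . ')';
    $assignments = array_map(
        fn (string $col): string => "$col = VALUES($col)",
        $updateColumns
    );
    return sprintf(
        'INSERT INTO %s (%s) VALUES %s ON DUPLICATE KEY UPDATE %s',
        $table,
        implode(', ', $columns),
        implode(', ', array_fill(0, $rowCount, $row)),
        implode(', ', $assignments)
    );
}

$sql = buildUpsert(
    'posts',
    ['id', 'post_title', 'post_content'],
    ['post_title', 'post_content'],
    2
);
echo $sql, PHP_EOL;
```

The resulting string can be passed to a prepared statement together with the flattened row values, so a whole batch is inserted or updated in one round trip with no SELECT beforehand. Note that `VALUES(col)` in the UPDATE clause is deprecated as of MySQL 8.0.20 in favor of a row alias, so newer servers may prefer that form.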

You don’t have to use REPLACE or ON DUPLICATE KEY UPDATE. You can try:

Start a transaction and insert in a loop. If the insertion fails, update instead.

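<code><?php
// Report errors through return values so the INSERT/UPDATE fallback below works
// (since PHP 8.1, mysqli throws mysqli_sql_exception by default).
mysqli_report(MYSQLI_REPORT_OFF);

$db = new mysqli('127.0.0.1', 'user', 'pass', 'dbname', 3306);

$db->query('SET AUTOCOMMIT=0');
$db->query('START TRANSACTION');

// Start of loop: repeat the block below for each row
if (!$db->query('INSERT INTO posts(id, post_title, post_content) VALUES(1,"title_1","content_1")')) {
    // The insert failed (for example, duplicate key), so update the existing row instead
    $db->query('UPDATE posts SET post_title = "title_1", post_content = "content_1" WHERE id = 1');
}

// insert_id is 0 if the insert failed, the table has no AUTO_INCREMENT column,
// or the last statement was not an INSERT.
echo $db->insert_id;

$db->query('COMMIT');
$db->query('SET AUTOCOMMIT=1');</code>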

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1375

1375

52

52

The relationship between mysql user and database

Apr 08, 2025 pm 07:15 PM

The relationship between mysql user and database

Apr 08, 2025 pm 07:15 PM

In MySQL database, the relationship between the user and the database is defined by permissions and tables. The user has a username and password to access the database. Permissions are granted through the GRANT command, while the table is created by the CREATE TABLE command. To establish a relationship between a user and a database, you need to create a database, create a user, and then grant permissions.

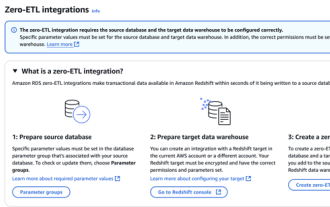

RDS MySQL integration with Redshift zero ETL

Apr 08, 2025 pm 07:06 PM

RDS MySQL integration with Redshift zero ETL

Apr 08, 2025 pm 07:06 PM

Data Integration Simplification: AmazonRDSMySQL and Redshift's zero ETL integration Efficient data integration is at the heart of a data-driven organization. Traditional ETL (extract, convert, load) processes are complex and time-consuming, especially when integrating databases (such as AmazonRDSMySQL) with data warehouses (such as Redshift). However, AWS provides zero ETL integration solutions that have completely changed this situation, providing a simplified, near-real-time solution for data migration from RDSMySQL to Redshift. This article will dive into RDSMySQL zero ETL integration with Redshift, explaining how it works and the advantages it brings to data engineers and developers.

Do mysql need to pay

Apr 08, 2025 pm 05:36 PM

Do mysql need to pay

Apr 08, 2025 pm 05:36 PM

MySQL has a free community version and a paid enterprise version. The community version can be used and modified for free, but the support is limited and is suitable for applications with low stability requirements and strong technical capabilities. The Enterprise Edition provides comprehensive commercial support for applications that require a stable, reliable, high-performance database and willing to pay for support. Factors considered when choosing a version include application criticality, budgeting, and technical skills. There is no perfect option, only the most suitable option, and you need to choose carefully according to the specific situation.

How to optimize MySQL performance for high-load applications?

Apr 08, 2025 pm 06:03 PM

How to optimize MySQL performance for high-load applications?

Apr 08, 2025 pm 06:03 PM

MySQL database performance optimization guide In resource-intensive applications, MySQL database plays a crucial role and is responsible for managing massive transactions. However, as the scale of application expands, database performance bottlenecks often become a constraint. This article will explore a series of effective MySQL performance optimization strategies to ensure that your application remains efficient and responsive under high loads. We will combine actual cases to explain in-depth key technologies such as indexing, query optimization, database design and caching. 1. Database architecture design and optimized database architecture is the cornerstone of MySQL performance optimization. Here are some core principles: Selecting the right data type and selecting the smallest data type that meets the needs can not only save storage space, but also improve data processing speed.

How to fill in mysql username and password

Apr 08, 2025 pm 07:09 PM

How to fill in mysql username and password

Apr 08, 2025 pm 07:09 PM

To fill in the MySQL username and password: 1. Determine the username and password; 2. Connect to the database; 3. Use the username and password to execute queries and commands.

Query optimization in MySQL is essential for improving database performance, especially when dealing with large data sets

Apr 08, 2025 pm 07:12 PM

Query optimization in MySQL is essential for improving database performance, especially when dealing with large data sets

Apr 08, 2025 pm 07:12 PM

1. Use the correct index to speed up data retrieval by reducing the amount of data scanned select*frommployeeswherelast_name='smith'; if you look up a column of a table multiple times, create an index for that column. If you or your app needs data from multiple columns according to the criteria, create a composite index 2. Avoid select * only those required columns, if you select all unwanted columns, this will only consume more server memory and cause the server to slow down at high load or frequency times For example, your table contains columns such as created_at and updated_at and timestamps, and then avoid selecting * because they do not require inefficient query se

How to copy and paste mysql

Apr 08, 2025 pm 07:18 PM

How to copy and paste mysql

Apr 08, 2025 pm 07:18 PM

Copy and paste in MySQL includes the following steps: select the data, copy with Ctrl C (Windows) or Cmd C (Mac); right-click at the target location, select Paste or use Ctrl V (Windows) or Cmd V (Mac); the copied data is inserted into the target location, or replace existing data (depending on whether the data already exists at the target location).

How to view mysql

Apr 08, 2025 pm 07:21 PM

How to view mysql

Apr 08, 2025 pm 07:21 PM

View the MySQL database with the following command: Connect to the server: mysql -u Username -p Password Run SHOW DATABASES; Command to get all existing databases Select database: USE database name; View table: SHOW TABLES; View table structure: DESCRIBE table name; View data: SELECT * FROM table name;