Creating Tables in Greenplum: The Distribution Key

Greenplum is a distributed system. When creating a table, you need to specify a distribution key (creating a table requires the CREATE privilege on the target schema). The purpose is to distribute the data evenly across the segments. Choosing the distribution key is very important: a poor choice can leave the data without enforceable uniqueness and, more seriously, can cause a sharp decline in SQL performance.

Greenplum has two distribution strategies:

1. Hash distribution.

Greenplum uses the hash distribution strategy by default. With this strategy, one or more columns are selected as the distribution key (DK for short). A hash algorithm applied to the distribution key determines which segment stores each row, so rows with the same distribution key value always hash to the same segment. It is best to have a unique key or primary key on the table so that the data is distributed evenly across the segments. Syntax: DISTRIBUTED BY.

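If the table has no primary key or unique key and no distribution clause is given, the first column is selected as the distribution key by default. Adding a primary key later changes the distribution key to the primary key column.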
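The routing rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: MD5 stands in for Greenplum's internal hash function (which is different), and the three-segment cluster and the names `segment_for` and `rows_per_segment` are hypothetical.

```python
import hashlib
from collections import Counter

NUM_SEGMENTS = 3  # assumption: a small cluster with three segments


def segment_for(key, num_segments=NUM_SEGMENTS):
    """Map a distribution key value to a segment id.

    Illustrative only: MD5 stands in for Greenplum's internal hash function.
    """
    digest = hashlib.md5(str(key).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_segments


def rows_per_segment(keys, num_segments=NUM_SEGMENTS):
    """Count how many rows land on each segment for the given key values."""
    counts = Counter(segment_for(k, num_segments) for k in keys)
    return [counts.get(seg, 0) for seg in range(num_segments)]


# A unique key spreads rows across all segments.
unique_counts = rows_per_segment(range(3000))

# A single repeated key value sends every row to one segment (data skew).
skewed_counts = rows_per_segment(["szlsd"] * 3000)

print("unique key  :", unique_counts)
print("repeated key:", skewed_counts)
```

The second case is why a column with few distinct values tends to make a poor distribution key: every row sharing a value is stored on the same segment.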

2. Random distribution.

Rows are assigned to segments at random, so identical records may be stored on different segments. Random distribution keeps the data evenly spread, but Greenplum has no unique constraint that spans segments, so it cannot guarantee that the data is unique. For both uniqueness and performance reasons, hash distribution is recommended. Performance will be covered in detail in a separate document. Syntax: DISTRIBUTED RANDOMLY.
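A small Python sketch can illustrate why random placement and uniqueness do not mix. It rests on assumptions: a seeded pseudo-random choice stands in for whatever placement Greenplum actually uses, the cluster has three segments, and the helper names (`insert_randomly`, `locally_unique`, `globally_unique`) are hypothetical.

```python
import random

NUM_SEGMENTS = 3  # assumption: three segments
ROW = (1, "szlsd3")


def insert_randomly(rows, num_segments=NUM_SEGMENTS, seed=0):
    """Place each row on an arbitrarily chosen segment (illustrative only)."""
    rng = random.Random(seed)
    segments = [[] for _ in range(num_segments)]
    for row in rows:
        segments[rng.randrange(num_segments)].append(row)
    return segments


def locally_unique(segments):
    """True if no single segment holds the same row twice."""
    return all(len(seg) == len(set(seg)) for seg in segments)


def globally_unique(segments):
    """True if no row appears twice anywhere in the table."""
    rows = [row for seg in segments for row in seg]
    return len(rows) == len(set(rows))


# Identical rows end up scattered over several segments.
placed = insert_randomly([ROW] * 100)
print("rows per segment:", [len(seg) for seg in placed])

# Two copies of one row on different segments: a check that only looks
# inside each segment finds nothing wrong, yet the table has a duplicate.
split_copies = [[ROW], [ROW], []]
print("each segment sees no duplicate:", locally_unique(split_copies))
print("table as a whole is unique:    ", globally_unique(split_copies))
```

With hash distribution on the unique column, both copies would be routed to the same segment, where a local check can reject the second one.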

1. Hash distribution key

Create a table with id as the hash distribution key. If the distribution column and distribution type are not specified, a hash-distributed table is created by default and the first column (here the id field) serves as the distribution key.

testDB=# create table t_hash(id int,name varchar(50)) distributed by (id);

CREATE TABLE

testDB=#

testDB=# \d t_hash

Table "public.t_hash"

Column | Type | Modifiers

--------+-----------------------+-----------

id | integer |

name | character varying(50) |

Distributed by: (id)

After a primary key is added, the primary key column replaces the id column as the distribution key.

testDB=# alter table t_hash add primary key (name);

NOTICE: updating distribution policy to match new primary key

NOTICE: ALTER TABLE / ADD PRIMARY KEY will create implicit index "t_hash_pkey" for table "t_hash"

ALTER TABLE

testDB=# \d t_hash

Table "public.t_hash"

Column | Type | Modifiers

--------+-----------------------+-----------

id | integer |

name | character varying(50) | not null

Indexes:

"t_hash_pkey" PRIMARY KEY, btree (name)

Distributed by: (name)

Verify that a hash-distributed table enforces the uniqueness of primary key or unique key values:

testDB=# insert into t_hash values(1,'szlsd1');

INSERT 0 1

testDB=#

testDB=# insert into t_hash values(2,'szlsd1');

ERROR: duplicate key violates unique constraint "t_hash_pkey" (seg2 gp-s3:40000 pid=3855)

In addition, a unique index can still be created on the primary key column:

testDB=# create unique index u_id on t_hash(name);

CREATE INDEX

testDB=#

testDB=#

testDB=# \d t_hash

Table "public.t_hash"

Column | Type | Modifiers

--------+-----------------------+-----------

id | integer |

name | character varying(50) | not null

Indexes:

"t_hash_pkey" PRIMARY KEY, btree (name)

"u_id" UNIQUE, btree (name)

Distributed by: (name)

However, a unique index cannot be created on a non-distribution-key column alone. To create one, the index must include all of the distribution key columns:

testDB=# create unique index uk_id on t_hash(id);

ERROR: UNIQUE index must contain all columns in the distribution key of relation "t_hash"

testDB=# create unique index uk_id on t_hash(id,name);

CREATE INDEX

testDB=# \d t_hash

Table "public.t_hash"

Column | Type | Modifiers

--------+-----------------------+-----------

id | integer |

name | character varying(50) | not null

Indexes:

"t_hash_pkey" PRIMARY KEY, btree (name)

"uk_id" UNIQUE, btree (id, name)

Distributed by: (name)

After the primary key is dropped, the hash distribution key remains unchanged.

testDB=# alter table t_hash drop constraint t_hash_pkey;

ALTER TABLE

testDB=# \d t_hash

Table "public.t_hash"

Column | Type | Modifiers

--------+-----------------------+-----------

id | integer |

name | character varying(50) | not null

Distributed by: (name)

When the distribution key is neither a primary key nor a unique key, we can verify that identical distribution key values land on the same segment.

In the following experiment, the name column is the distribution key. We insert six rows with the same name value. The query then reports 7 records on segment 2: the six rows inserted here plus the row inserted in the earlier uniqueness test.

testDB=# insert into t_hash values(1,'szlsd');

INSERT 0 1

testDB=# insert into t_hash values(2,'szlsd');

INSERT 0 1

testDB=# insert into t_hash values(3,'szlsd');

INSERT 0 1

testDB=# insert into t_hash values(4,'szlsd');

INSERT 0 1

testDB=# insert into t_hash values(5,'szlsd');

INSERT 0 1

testDB=# insert into t_hash values(6,'szlsd');

INSERT 0 1

testDB=#

testDB=#

testDB=# select gp_segment_id,count(*) from t_hash group by gp_segment_id;

gp_segment_id | count

---------------+-------

2 | 7

(1 row)

2. Random distribution key

To create a randomly distributed table, add the DISTRIBUTED RANDOMLY clause. No column is designated as the distribution key.

testDB=# create table t_random(id int ,name varchar(100)) distributed randomly;

CREATE TABLE

testDB=#

testDB=#

testDB=# \d t_random

Table "public.t_random"

Column | Type | Modifiers

--------+------------------------+-----------

id | integer |

name | character varying(100) |

Distributed randomly

Verify primary key and unique key support. A randomly distributed table can have neither a primary key nor a unique index:

testDB=# alter table t_random add primary key (id,name);

ERROR: PRIMARY KEY and DISTRIBUTED RANDOMLY are incompatible

testDB=#

testDB=# create unique index uk_r_id on t_random(id);

ERROR: UNIQUE and DISTRIBUTED RANDOMLY are incompatible

testDB=#

The experiment shows that uniqueness of the data cannot be enforced. Moreover, rows inserted into a randomly distributed table are not placed round-robin. There are three segments in the experiment below, yet 3 records land on segment 1 and 2 records on segment 2 before any data reaches segment 0. How a randomly distributed table achieves even data distribution is not clear from this experiment. The experiment also confirms that identical rows in a randomly distributed table can be stored on different segments.

testDB=# insert into t_random values(1,'szlsd3');

INSERT 0 1

testDB=# select gp_segment_id,count(*) from t_random group by gp_segment_id;

gp_segment_id | count

---------------+-------

1 | 1

(1 row)

testDB=#

testDB=# insert into t_random values(1,'szlsd3');

INSERT 0 1

testDB=# select gp_segment_id,count(*) from t_random group by gp_segment_id;

gp_segment_id | count

---------------+-------

2 | 1

1 | 1

(2 rows)

testDB=# insert into t_random values(1,'szlsd3');

INSERT 0 1

testDB=# select gp_segment_id,count(*) from t_random group by gp_segment_id;

gp_segment_id | count

---------------+-------

2 | 1

1 | 2

(2 rows)

testDB=# insert into t_random values(1,'szlsd3');

INSERT 0 1

testDB=# select gp_segment_id,count(*) from t_random group by gp_segment_id;

gp_segment_id | count

---------------+-------

2 | 2

1 | 2

(2 rows)

testDB=# insert into t_random values(1,'szlsd3');

INSERT 0 1

testDB=# select gp_segment_id,count(*) from t_random group by gp_segment_id;

gp_segment_id | count

---------------+-------

2 | 2

1 | 3

(2 rows)

testDB=# insert into t_random values(1,'szlsd3');

INSERT 0 1

testDB=# select gp_segment_id,count(*) from t_random group by gp_segment_id;

gp_segment_id | count

---------------+-------

2 | 2

1 | 3

0 | 1

(3 rows)

3. CTAS inherits the original table distribution key

Greenplum has two CTAS syntaxes: CREATE TABLE ... AS SELECT and CREATE TABLE ... (LIKE ...). With either syntax, the new table inherits the distribution key of the original table by default. However, some special attributes of the table are not inherited, such as the primary key, unique keys, APPENDONLY, and COMPRESSTYPE (compression).

testDB=# \d t_hash;

Table "public.t_hash"

Column | Type | Modifiers

--------+-----------------------+-----------

id | integer |

name | character varying(50) | not null

Indexes:

"t_hash_pkey" PRIMARY KEY, btree (name)

"uk_id" UNIQUE, btree (id, name)

Distributed by: (name)

testDB=#

testDB=#

testDB=# create table t_hash_1 as select * from t_hash;

NOTICE: Table doesn't have 'DISTRIBUTED BY' clause -- Using column(s) named 'name' as the Greenplum Database data distribution key for this table.

HINT: The 'DISTRIBUTED BY' clause determines the distribution of data. Make sure column(s) chosen are the optimal data distribution key to minimize skew.

SELECT 0

testDB=# \d t_hash_1

Table "public.t_hash_1"

Column | Type | Modifiers

--------+-----------------------+-----------

id | integer |

name | character varying(50) |

Distributed by: (name)

testDB=#

testDB=# create table t_hash_2 (like t_hash);

NOTICE: Table doesn't have 'distributed by' clause, defaulting to distribution columns from LIKE table

CREATE TABLE

testDB=# \d t_hash_2

Table "public.t_hash_2"

Column | Type | Modifiers

--------+-----------------------+-----------

id | integer |

name | character varying(50) | not null

Distributed by: (name)

To change the distribution key when creating a table with CTAS, add a DISTRIBUTED BY clause.

testDB=# create table t_hash_3 as select * from t_hash distributed by (id);

SELECT 0

testDB=#

testDB=# \d t_hash_3

Table "public.t_hash_3"

Column | Type | Modifiers

--------+-----------------------+-----------

id | integer |

name | character varying(50) |

Distributed by: (id)

testDB=#

testDB=#

testDB=# create table t_hash_4 (like t_hash) distributed by (id);

CREATE TABLE

testDB=#

testDB=# \d t_hash4

Did not find any relation named "t_hash4".

testDB=# \d t_hash_4

Table "public.t_hash_4"

Column | Type | Modifiers

--------+-----------------------+-----------

id | integer |

name | character varying(50) | not null

Distributed by: (id)

When using CTAS on a randomly distributed table, take special care to add DISTRIBUTED RANDOMLY. Otherwise, the original table is randomly distributed but the new CTAS table gets a hash distribution key, as t_random_1 below shows.

testDB=# \d t_random

Table "public.t_random"

Column | Type | Modifiers

--------+------------------------+-----------

id | integer |

name | character varying(100) |

Distributed randomly

testDB=#

testDB=# \d t_random_1

Table "public.t_random_1"

Column | Type | Modifiers

--------+------------------------+-----------

id | integer |

name | character varying(100) |

Distributed by: (id)

testDB=# create table t_random_2 as select * from t_random distributed randomly;

SELECT 7

testDB=#

testDB=# \d t_random_2

Table "public.t_random_2"

Column | Type | Modifiers

--------+------------------------+-----------

id | integer |

name | character varying(100) |

Distributed randomly

References:

"Greenplum Enterprise Application Practice"

"Greenplum 4.2.2 Administrator Guide"

Above This is the content of the Greenplum table creation--distributed key_PHP tutorial. For more related content, please pay attention to the PHP Chinese website (www.php.cn)!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

CakePHP Project Configuration

Sep 10, 2024 pm 05:25 PM

CakePHP Project Configuration

Sep 10, 2024 pm 05:25 PM

In this chapter, we will understand the Environment Variables, General Configuration, Database Configuration and Email Configuration in CakePHP.

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 brings several new features, security improvements, and performance improvements with healthy amounts of feature deprecations and removals. This guide explains how to install PHP 8.4 or upgrade to PHP 8.4 on Ubuntu, Debian, or their derivati

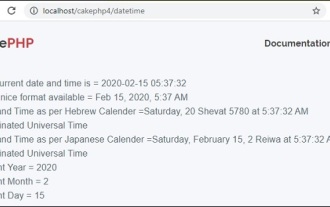

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

To work with date and time in cakephp4, we are going to make use of the available FrozenTime class.

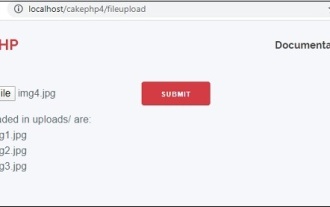

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

To work on file upload we are going to use the form helper. Here, is an example for file upload.

CakePHP Routing

Sep 10, 2024 pm 05:25 PM

CakePHP Routing

Sep 10, 2024 pm 05:25 PM

In this chapter, we are going to learn the following topics related to routing ?

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

CakePHP is an open-source framework for PHP. It is intended to make developing, deploying and maintaining applications much easier. CakePHP is based on a MVC-like architecture that is both powerful and easy to grasp. Models, Views, and Controllers gu

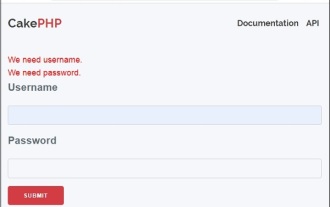

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

Validator can be created by adding the following two lines in the controller.

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

Visual Studio Code, also known as VS Code, is a free source code editor — or integrated development environment (IDE) — available for all major operating systems. With a large collection of extensions for many programming languages, VS Code can be c