
How to split 100 million rows of data across 100 MySQL tables (PHP)

The following demonstrates how to split 100 million rows of data across 100 tables; see the code below for details.

When data volume grows sharply, a common choice is to hash the data across databases and tables (sharding) to speed up reads and writes. The author made a simple attempt, splitting 100 million rows across 100 tables. The implementation is as follows:

First, create the 100 tables:

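```php
<?php
// PDO is used because the old mysql_* extension was removed in PHP 7.
// The DSN, user name and password below are placeholders.
$pdo = new PDO('mysql:host=localhost;dbname=test;charset=utf8', 'user', 'password');
$pdo->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);

// Create code_0 ... code_99: 100 tables with identical structure.
// MyISAM is required because the MERGE table built later only works over MyISAM tables.
$i = 0;
while ($i <= 99) {
    echo "code_$i\r\n"; // progress output
    $sql = "CREATE TABLE `code_" . $i . "` (
        `full_code` char(10) NOT NULL,
        `create_time` int(10) unsigned NOT NULL,
        PRIMARY KEY (`full_code`)
    ) ENGINE=MyISAM DEFAULT CHARSET=utf8";
    $pdo->exec($sql);
    $i++;
}
```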

Next, the table-splitting rule: full_code is the primary key, so we hash full_code to decide which table each row belongs in.

The hashing function is as follows:

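```php
<?php
// Map a key to one of $s tables: crc32 of the key, modulo $s, appended to $table
function get_hash_table($table, $code, $s = 100) {
    // "%u" prints the checksum as unsigned, so it is never negative
    $hash = sprintf("%u", crc32($code));
    // fmod() also copes with values above PHP_INT_MAX on 32-bit builds
    $hash1 = intval(fmod($hash, $s));
    return $table . "_" . $hash1;
}

// $full_code is the key of the row being stored
$table_name = get_hash_table('code', $full_code);
```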
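To sanity-check the rule before loading real data, the short script below hashes 10,000 sample keys with the same get_hash_table function and counts how many land in each table. It is only a sketch: the zero-padded ten-digit keys are made up for illustration. With crc32 the counts should come out roughly even, and a given key always maps to the same table.

```php
<?php
// Same hashing rule as above: crc32 of the key, modulo the table count
function get_hash_table($table, $code, $s = 100) {
    $hash = sprintf("%u", crc32($code));
    $hash1 = intval(fmod($hash, $s));
    return $table . "_" . $hash1;
}

// Hypothetical sample keys: zero-padded 10-character numbers, like char(10) full_code values
$counts = [];
for ($n = 0; $n < 10000; $n++) {
    $full_code = sprintf("%010d", $n);
    $name = get_hash_table('code', $full_code);
    $counts[$name] = ($counts[$name] ?? 0) + 1;
}

// Show how many tables were used and the smallest and largest row counts
ksort($counts, SORT_NATURAL);
printf("tables used: %d, smallest: %d, largest: %d\n",
    count($counts), min($counts), max($counts));
```

Because the table is derived from the key alone, no lookup table is needed to find a row later; the trade-off is that changing the table count from 100 reassigns most keys.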

Before inserting a row, call get_hash_table to get the name of the table it should be stored in.
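A minimal sketch of that insert step, assuming a PDO connection to the database that holds the code_N tables. The helper names build_insert_sql and insert_code are hypothetical. A table name cannot be bound as a statement parameter, so it is interpolated from get_hash_table (which only ever returns code_0 to code_99), while the row values are bound.

```php
<?php
// Same hashing rule as above
function get_hash_table($table, $code, $s = 100) {
    $hash = sprintf("%u", crc32($code));
    $hash1 = intval(fmod($hash, $s));
    return $table . "_" . $hash1;
}

// Hypothetical helper: INSERT statement aimed at the table that owns $full_code.
// Values are left as ? placeholders to be bound by the caller.
function build_insert_sql($full_code) {
    $table = get_hash_table('code', $full_code);
    return "INSERT INTO `$table` (`full_code`, `create_time`) VALUES (?, ?)";
}

// Hypothetical helper: insert one row through an existing PDO connection
function insert_code(PDO $pdo, $full_code, $create_time) {
    $stmt = $pdo->prepare(build_insert_sql($full_code));
    return $stmt->execute([$full_code, $create_time]);
}

// Usage (DSN and credentials are placeholders):
// $pdo = new PDO('mysql:host=localhost;dbname=test;charset=utf8', 'user', 'password');
// insert_code($pdo, '123456789', time());
```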

Finally, we use the MERGE storage engine to present all 100 tables as a single code table:

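```sql
-- ENGINE=MERGE replaces the older TYPE=MERGE syntax, which current MySQL versions reject.
-- The column definitions and character set must match the underlying code_N tables.
CREATE TABLE IF NOT EXISTS `code` (
  `full_code` char(10) NOT NULL,
  `create_time` int(10) unsigned NOT NULL,
  INDEX(`full_code`)
) ENGINE=MERGE UNION=(code_0,code_1,code_2,...) INSERT_METHOD=LAST DEFAULT CHARSET=utf8;
```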
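The UNION list has to name every underlying table. Rather than typing 100 names by hand, a small PHP sketch can build the statement from the same naming scheme the creation loop uses (code_0 through code_99). The function name build_merge_sql is hypothetical; the resulting SQL can then be run with PDO::exec.

```php
<?php
// Hypothetical helper: MERGE table definition over {$prefix}_0 ... {$prefix}_{n-1}
function build_merge_sql($prefix = 'code', $n = 100) {
    $tables = [];
    for ($i = 0; $i < $n; $i++) {
        $tables[] = $prefix . "_" . $i;
    }
    return "CREATE TABLE IF NOT EXISTS `$prefix` ("
        . " `full_code` char(10) NOT NULL,"
        . " `create_time` int(10) unsigned NOT NULL,"
        . " INDEX(`full_code`)"
        . ") ENGINE=MERGE UNION=(" . implode(",", $tables) . ")"
        . " INSERT_METHOD=LAST DEFAULT CHARSET=utf8";
}

$sql = build_merge_sql();
echo $sql, "\n";
// e.g. $pdo->exec($sql);
```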

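With this in place, SELECT * FROM code returns the full_code rows from all 100 tables. Because of INSERT_METHOD=LAST, any row inserted through the code table itself goes into the last table in the UNION list, so new rows should still be written to the table chosen by get_hash_table.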
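For a lookup by a single key, reading through the MERGE table makes MySQL check the index of every underlying table. When full_code is known, it is cheaper to hash it and query only the owning table. A minimal sketch, assuming a PDO connection; build_lookup_sql and find_code are hypothetical helpers.

```php
<?php
// Same hashing rule as above
function get_hash_table($table, $code, $s = 100) {
    $hash = sprintf("%u", crc32($code));
    $hash1 = intval(fmod($hash, $s));
    return $table . "_" . $hash1;
}

// Hypothetical helper: SELECT for one key, sent straight to the table that owns it
function build_lookup_sql($full_code) {
    $table = get_hash_table('code', $full_code);
    return "SELECT `full_code`, `create_time` FROM `$table` WHERE `full_code` = ?";
}

// Hypothetical helper: fetch one row as an associative array, or false if absent
function find_code(PDO $pdo, $full_code) {
    $stmt = $pdo->prepare(build_lookup_sql($full_code));
    $stmt->execute([$full_code]);
    return $stmt->fetch(PDO::FETCH_ASSOC);
}
```

The MERGE table remains the convenient choice for scans over all rows; single-key reads and all writes go to the hashed table.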

That is all for this article; I hope it is helpful.

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 brings several new features, security improvements, and performance improvements with healthy amounts of feature deprecations and removals. This guide explains how to install PHP 8.4 or upgrade to PHP 8.4 on Ubuntu, Debian, or their derivati

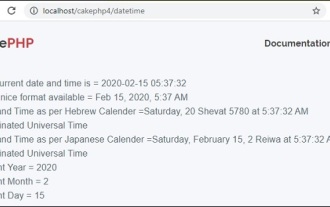

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

To work with date and time in cakephp4, we are going to make use of the available FrozenTime class.

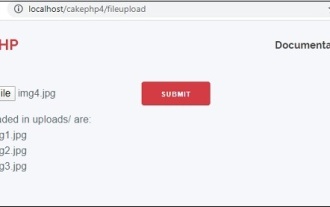

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

To work on file upload we are going to use the form helper. Here, is an example for file upload.

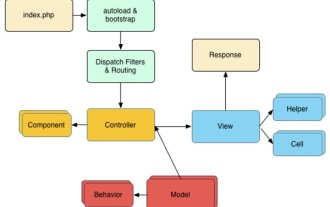

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

CakePHP is an open-source framework for PHP. It is intended to make developing, deploying and maintaining applications much easier. CakePHP is based on a MVC-like architecture that is both powerful and easy to grasp. Models, Views, and Controllers gu

How to fix mysql_native_password not loaded errors on MySQL 8.4

Dec 09, 2024 am 11:42 AM

How to fix mysql_native_password not loaded errors on MySQL 8.4

Dec 09, 2024 am 11:42 AM

One of the major changes introduced in MySQL 8.4 (the latest LTS release as of 2024) is that the "MySQL Native Password" plugin is no longer enabled by default. Further, MySQL 9.0 removes this plugin completely. This change affects PHP and other app

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

Visual Studio Code, also known as VS Code, is a free source code editor — or integrated development environment (IDE) — available for all major operating systems. With a large collection of extensions for many programming languages, VS Code can be c

CakePHP Quick Guide

Sep 10, 2024 pm 05:27 PM

CakePHP Quick Guide

Sep 10, 2024 pm 05:27 PM

CakePHP is an open source MVC framework. It makes developing, deploying and maintaining applications much easier. CakePHP has a number of libraries to reduce the overload of most common tasks.

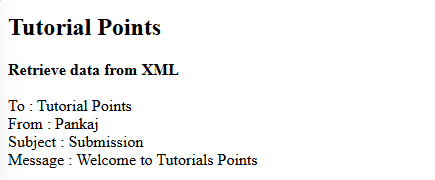

How do you parse and process HTML/XML in PHP?

Feb 07, 2025 am 11:57 AM

How do you parse and process HTML/XML in PHP?

Feb 07, 2025 am 11:57 AM

This tutorial demonstrates how to efficiently process XML documents using PHP. XML (eXtensible Markup Language) is a versatile text-based markup language designed for both human readability and machine parsing. It's commonly used for data storage an