Solutions to PHP multi-user file read/write conflicts

The usual solution is to lock the file with flock():

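```php
// "c" creates the file if needed but, unlike "w+", does not truncate it before the lock is held
$fp = fopen("/tmp/lock.txt", "c");

if (flock($fp, LOCK_EX)) {           // acquire an exclusive lock (blocks until available)
    ftruncate($fp, 0);               // safe to truncate now that we hold the lock
    fwrite($fp, "Write something here\n");
    fflush($fp);                     // flush output before releasing the lock
    flock($fp, LOCK_UN);             // release the lock
} else {
    echo "Couldn't lock the file!";
}

fclose($fp);
```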

However, flock() in PHP does not always work well. Under heavy concurrency, a lock sometimes seems to be held for a long time and not released promptly, or never released at all. The result is deadlock and very high CPU usage on the server, and occasionally the server stops responding altogether. This appears to happen on many Linux/Unix systems.

So think carefully before relying on flock().

Does that mean there is no solution? Not at all. Used properly, flock() can avoid the deadlock problem. And if you would rather not use flock() at all, there are still good ways to solve the problem.

The solutions I have collected and summarized are roughly as follows.

Option 1: When locking a file, set a timeout period.

The approximate implementation is as follows:

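```php
if ($fp = fopen($fileName, 'a')) {
    $startTime = microtime(true);        // current time in seconds, as a float

    do {
        // LOCK_NB: fail immediately instead of blocking if another process holds the lock
        $canWrite = flock($fp, LOCK_EX | LOCK_NB);

        if (!$canWrite) {
            usleep(rand(0, 100) * 1000); // wait a random 0-100 ms before retrying
        }
    } while (!$canWrite && (microtime(true) - $startTime) * 1000 < 1000); // 1000 ms timeout

    if ($canWrite) {
        fwrite($fp, $dataToSave);
        fflush($fp);
        flock($fp, LOCK_UN);             // release the lock as soon as the write is done
    }

    fclose($fp);
}
```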

The timeout here is 1000 ms (one second). While the lock is unavailable, the loop keeps retrying after a short random pause until it obtains the right to operate on the file. Once the timeout is reached, it must stop immediately and give up, leaving the file to other processes.
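The retry-with-timeout loop can also be wrapped in a reusable helper. This is only an illustrative sketch: `lockWithTimeout()` and `appendWithTimeout()` are hypothetical names, the 0-100 ms random back-off mirrors the loop above, and it assumes every process that touches the file goes through `flock()` (the lock is advisory, so code that ignores it is not blocked).

```php
<?php
// Hypothetical helper: try to take an exclusive lock on $fp, retrying after
// short random pauses, and give up once $timeoutMs milliseconds have passed.
function lockWithTimeout($fp, int $timeoutMs = 1000): bool
{
    $deadline = microtime(true) + $timeoutMs / 1000;
    while (true) {
        // LOCK_NB makes each attempt return immediately instead of blocking.
        if (flock($fp, LOCK_EX | LOCK_NB)) {
            return true;
        }
        if (microtime(true) >= $deadline) {
            return false;                    // timed out: leave the file to others
        }
        usleep(random_int(0, 100) * 1000);   // random 0-100 ms back-off
    }
}

// Hypothetical helper: append $data to $fileName while holding the lock.
// Returns false if the lock was not obtained in time or the write failed.
function appendWithTimeout(string $fileName, string $data, int $timeoutMs = 1000): bool
{
    $fp = fopen($fileName, 'a');
    if ($fp === false) {
        return false;
    }
    $ok = lockWithTimeout($fp, $timeoutMs);
    if ($ok) {
        $ok = fwrite($fp, $data) === strlen($data);
        fflush($fp);              // flush buffered data before releasing the lock
        flock($fp, LOCK_UN);
    }
    fclose($fp);
    return $ok;
}
```

A caller that gets `false` back can report that the file is busy or try again later, instead of blocking indefinitely.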

Option 2: Instead of using flock(), use temporary files to resolve read/write conflicts.

The general principle is as follows:

1. Copy the file that needs to be updated into a temporary directory, record the original file's last modification time in a variable, and give the temporary copy a random name that is unlikely to collide with others.

2. After updating the temporary file, check whether the original file's last modification time still matches the saved value.

3. If the modification time is unchanged, rename the modified temporary file to the original file name. Before comparing, clear PHP's file status cache so that the modification time is read from disk rather than from a stale cached value.

4. If the modification time differs from the saved one, the original file was modified in the meantime. In that case, delete the temporary file and return false to indicate that another process is currently working on the file.

The approximate implementation code is as follows:

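```php
$dir_fileopen = "tmp";   // directory for temporary copies (must already exist)

// Build an ID that is unlikely to repeat: current time plus a random-length hash fragment.
function randomid() {
    return time() . substr(md5(microtime()), 0, rand(5, 12));
}

// Open a temporary copy of $filename and remember the original's modification time.
function cfopen($filename, $mode) {
    global $dir_fileopen;

    clearstatcache();
    do {
        $id = md5(randomid());
        $tempfilename = $dir_fileopen . "/" . $id . md5($filename);
    } while (file_exists($tempfilename));

    if (file_exists($filename)) {
        $newfile = false;
        copy($filename, $tempfilename);   // work on a copy of the current contents
    } else {
        $newfile = true;                  // the target file does not exist yet
    }

    $fp = fopen($tempfilename, $mode);

    // [0] handle, [1] target name, [2] temp ID, [3] original mtime, [4] new-file flag
    return $fp ? array($fp, $filename, $id, @filemtime($filename), $newfile) : false;
}

function cfwrite($fp, $string) { return fwrite($fp[0], $string); }

// Close the temporary copy and move it over the target, unless another
// process changed the target in the meantime.
function cfclose($fp) {
    global $dir_fileopen;

    $success = fclose($fp[0]);
    clearstatcache();   // make filemtime() below read the current value, not a cached one
    $tempfilename = $dir_fileopen . "/" . $fp[2] . md5($fp[1]);

    // Note: filemtime() has one-second resolution, and the check and rename()
    // are separate steps, so this narrows the conflict window rather than closing it.
    if ((@filemtime($fp[1]) == $fp[3]) || ($fp[4] == true && !file_exists($fp[1]))) {
        rename($tempfilename, $fp[1]);
    } else {
        unlink($tempfilename);
        // Another process has modified the target file, so this write is rejected
        $success = false;
    }

    return $success;
}

$fp = cfopen('lock.txt', 'a+');
cfwrite($fp, "welcome to beijing.\n");
cfclose($fp);
```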

A few of the functions used in the code above deserve explanation:

1. rename() renames a file or directory. It behaves much like mv on Linux, so it can change the path of a file or directory as well as its name.

However, when I tested the code above on Windows, rename() raised a notice saying the file already exists whenever the new name was already taken, whereas on Linux it worked fine.

2. clearstatcache() clears PHP's file status cache. For performance, PHP caches file status information such as modification times. When several processes delete or update the same file, that cached information can go stale, so a call like filemtime() may return an outdated modification time instead of the real one. That is why the code calls clearstatcache() before comparing modification times.
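The final step of option 2 depends on rename() replacing an existing target. The sketch below isolates the common "write a temporary file, then rename it over the target" pattern. It assumes Linux (or another POSIX system) and that the temporary file is created in the same directory as the target, so rename() becomes a single atomic rename(2): a reader opening the target sees either the old contents or the new ones, never a half-written file. `atomicWrite()` is a hypothetical name.

```php
<?php
// Hypothetical helper: replace $target with $data in one step.
// Assumes a POSIX system and a temp file on the same filesystem as $target.
function atomicWrite(string $target, string $data): bool
{
    $tmp = tempnam(dirname($target), 'tmp');   // unique temp name next to the target
    if ($tmp === false) {
        return false;
    }
    if (file_put_contents($tmp, $data) !== strlen($data)) {
        unlink($tmp);
        return false;
    }
    // If $target already exists, rename() overwrites it instead of failing.
    if (!rename($tmp, $target)) {
        unlink($tmp);
        return false;
    }
    return true;
}
```

Note that tempnam() creates the file with restrictive permissions (0600), so the replaced file ends up with those permissions; call chmod() afterwards if other users need to read it.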

Option 3: Spread reads and writes randomly across many files to reduce the chance of concurrent access.

This approach seems to be used most often for recording user access logs.

First, define a random space: the larger it is, the lower the chance of concurrent access. Suppose the space is [1, 500]; the log files are then log1, log2, and so on up to log500. On every user visit, the entry is written to one of the files log1 to log500, chosen at random.

Suppose two processes are recording logs at the same moment. Process A might update log32, while process B might update log399. The probability that process B also picks log32 is only 1/500 (0.2%), so collisions are rare.
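A minimal sketch of the random-file scheme, assuming the log files are named `log1` to `log500` in a single directory as described above; `writeRandomLog()` is a hypothetical helper. As a design choice it still passes `LOCK_EX` to `file_put_contents()`: with 500 files the lock is almost never contended, but it prevents interleaved lines in the rare case that two processes pick the same file.

```php
<?php
// Hypothetical helper for option 3: append one entry to a randomly chosen
// file among $logDir/log1 ... $logDir/log{$space}. Returns the file used.
function writeRandomLog(string $logDir, string $entry, int $space = 500): string
{
    $n = random_int(1, $space);                 // every file is equally likely
    $file = $logDir . '/log' . $n;
    // FILE_APPEND adds to the end; LOCK_EX guards the rare same-file collision.
    file_put_contents($file, $entry . "\n", FILE_APPEND | LOCK_EX);
    return $file;
}
```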

When the access logs need to be analyzed, simply merge these files first and then analyze the result.
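Merging before analysis can be as simple as concatenating the files. In this sketch, `mergeLogs()` is a hypothetical helper that copies `log1` to `log500` (skipping any file that was never written) into one output file and returns the number of entries copied.

```php
<?php
// Hypothetical helper: concatenate $logDir/log1 ... log{$space} into $merged
// so the logs can be analyzed as one file. Returns the number of lines copied.
function mergeLogs(string $logDir, string $merged, int $space = 500): int
{
    $out = fopen($merged, 'w');
    $lines = 0;
    for ($n = 1; $n <= $space; $n++) {
        $file = $logDir . '/log' . $n;
        if (!is_file($file)) {
            continue;                           // this file was never chosen
        }
        $in = fopen($file, 'r');
        while (($line = fgets($in)) !== false) {
            fwrite($out, $line);
            $lines++;
        }
        fclose($in);
    }
    fclose($out);
    return $lines;
}
```

The merged entries are grouped by file, not by time. If the analysis needs chronological order, each entry would need a timestamp so the merged file can be sorted.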

One benefit of logging this way is that processes rarely have to wait for one another, so each write completes quickly.

Option 4: Put all pending file operations into a queue, and have a dedicated service carry them out.

Each entry in the queue represents one concrete file operation, so the service only has to take entries from the front of the queue and carry them out one at a time. If many processes still need to operate on the file, that is fine: their operations simply join the back of the queue. As long as callers are willing to wait, the length of the queue does not matter.
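One way to sketch option 4 in PHP, under clearly stated assumptions: the queue is a spool directory of small JSON job files, `enqueueWrite()` and `processQueue()` are hypothetical names, and exactly one worker process runs `processQueue()`. Because only that worker ever writes the target files, no file locking is needed; other processes only add jobs to the back of the queue.

```php
<?php
// Producer side: any process that wants to append to a file adds a job.
function enqueueWrite(string $queueDir, string $target, string $data): void
{
    // Same-width timestamp prefix, so sorting file names approximates arrival order.
    $name = sprintf('%.6f', microtime(true)) . '-' . bin2hex(random_bytes(4));
    $tmp = "$queueDir/$name.tmp";
    file_put_contents($tmp, json_encode(['target' => $target, 'data' => $data]));
    // Publish with rename() so the worker never reads a half-written job.
    rename($tmp, "$queueDir/$name.job");
}

// Worker side: run only by the dedicated service, e.g. in a loop or from cron.
function processQueue(string $queueDir): int
{
    $jobs = glob("$queueDir/*.job") ?: [];
    sort($jobs, SORT_STRING);                   // oldest job first
    $done = 0;
    foreach ($jobs as $jobFile) {
        $job = json_decode(file_get_contents($jobFile), true);
        file_put_contents($job['target'], $job['data'], FILE_APPEND);
        unlink($jobFile);                       // drop the job once it is applied
        $done++;
    }
    return $done;
}
```

A real deployment might use a message broker or a database table as the queue instead, but the structure stays the same: many producers, one consumer that applies the operations in order.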

Each of these options has its own advantages. They fall roughly into two categories:

1. Queuing required (slower): options 1, 2, and 4.

2. No queuing required (faster): option 3.

When designing a cache system, we generally would not use option 3, because its writing side and its analysis side are not synchronized: the writer ignores how hard the data will be to read back, as long as the write succeeds. Imagine updating a cache with random file reads and writes: every cache read would then have to do considerably more work. Options 1 and 2 are completely different. Writers may have to wait (retrying repeatedly when the lock cannot be acquired), but reading the file stays simple. Since the purpose of a cache is to remove the data-reading bottleneck and so improve system performance, convenient reads are what matter most.

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

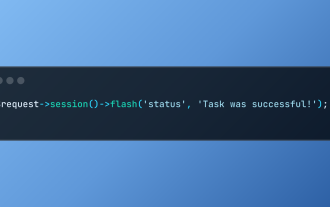

Working with Flash Session Data in Laravel

Mar 12, 2025 pm 05:08 PM

Working with Flash Session Data in Laravel

Mar 12, 2025 pm 05:08 PM

Laravel simplifies handling temporary session data using its intuitive flash methods. This is perfect for displaying brief messages, alerts, or notifications within your application. Data persists only for the subsequent request by default: $request-

cURL in PHP: How to Use the PHP cURL Extension in REST APIs

Mar 14, 2025 am 11:42 AM

cURL in PHP: How to Use the PHP cURL Extension in REST APIs

Mar 14, 2025 am 11:42 AM

The PHP Client URL (cURL) extension is a powerful tool for developers, enabling seamless interaction with remote servers and REST APIs. By leveraging libcurl, a well-respected multi-protocol file transfer library, PHP cURL facilitates efficient execution of various network protocols, including HTTP, HTTPS, and FTP. This extension offers granular control over HTTP requests, supports multiple concurrent operations, and provides built-in security features.

Simplified HTTP Response Mocking in Laravel Tests

Mar 12, 2025 pm 05:09 PM

Simplified HTTP Response Mocking in Laravel Tests

Mar 12, 2025 pm 05:09 PM

Laravel provides concise HTTP response simulation syntax, simplifying HTTP interaction testing. This approach significantly reduces code redundancy while making your test simulation more intuitive. The basic implementation provides a variety of response type shortcuts: use Illuminate\Support\Facades\Http; Http::fake([ 'google.com' => 'Hello World', 'github.com' => ['foo' => 'bar'], 'forge.laravel.com' =>

12 Best PHP Chat Scripts on CodeCanyon

Mar 13, 2025 pm 12:08 PM

12 Best PHP Chat Scripts on CodeCanyon

Mar 13, 2025 pm 12:08 PM

Do you want to provide real-time, instant solutions to your customers' most pressing problems? Live chat lets you have real-time conversations with customers and resolve their problems instantly. It allows you to provide faster service to your custom

Explain the concept of late static binding in PHP.

Mar 21, 2025 pm 01:33 PM

Explain the concept of late static binding in PHP.

Mar 21, 2025 pm 01:33 PM

Article discusses late static binding (LSB) in PHP, introduced in PHP 5.3, allowing runtime resolution of static method calls for more flexible inheritance.Main issue: LSB vs. traditional polymorphism; LSB's practical applications and potential perfo

PHP Logging: Best Practices for PHP Log Analysis

Mar 10, 2025 pm 02:32 PM

PHP Logging: Best Practices for PHP Log Analysis

Mar 10, 2025 pm 02:32 PM

PHP logging is essential for monitoring and debugging web applications, as well as capturing critical events, errors, and runtime behavior. It provides valuable insights into system performance, helps identify issues, and supports faster troubleshoot

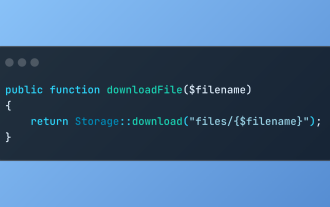

Discover File Downloads in Laravel with Storage::download

Mar 06, 2025 am 02:22 AM

Discover File Downloads in Laravel with Storage::download

Mar 06, 2025 am 02:22 AM

The Storage::download method of the Laravel framework provides a concise API for safely handling file downloads while managing abstractions of file storage. Here is an example of using Storage::download() in the example controller:

HTTP Method Verification in Laravel

Mar 05, 2025 pm 04:14 PM

HTTP Method Verification in Laravel

Mar 05, 2025 pm 04:14 PM

Laravel simplifies HTTP verb handling in incoming requests, streamlining diverse operation management within your applications. The method() and isMethod() methods efficiently identify and validate request types. This feature is crucial for building