Writing Hadoop MapReduce Programs Using PHP and Shell


Hadoop Streaming lets any executable that reads standard input (stdin) and writes standard output (stdout) act as a Hadoop mapper or reducer. For example:

hadoop jar hadoop-streaming.jar -input SOME_INPUT_DIR_OR_FILE -output SOME_OUTPUT_DIR -mapper /bin/cat -reducer /usr/bin/wc

In this example, the cat and wc tools that ship with Unix/Linux serve as the mapper and the reducer. Neat, isn't it?
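To get a feel for what that job computes without a cluster, the same two tools can be chained locally. This is only a rough sketch: the function name simulate_streaming and the sample text are made up for illustration, and sort merely stands in for Hadoop's shuffle phase between map and reduce.

```shell
#!/bin/sh
# Hypothetical helper: approximates a streaming job on a single machine.
# mapper (cat) -> sort (stand-in for the shuffle) -> reducer (wc)
simulate_streaming() {
    cat | sort | wc
}

# Two sample lines in, one "lines words bytes" summary out.
printf 'hello world\nhello hadoop\n' | simulate_streaming
```

On a real cluster each reducer sees only its share of the keys, so the numbers come out per reducer rather than as one global summary.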

If you are used to a dynamic language, you can write MapReduce in that language. It is no different from the programming you already do; Hadoop is simply the framework that runs it. Below I demonstrate how to implement a Word Counter MapReduce job in PHP.

1. Find the Streaming jar

The Hadoop root directory does not contain a hadoop-streaming.jar. Streaming is a contrib module, so look for the jar under the contrib directory of your installation (hadoop-0.20.2 is the version used in this example).

2. Write Mapper

Create a new wc_mapper.php and write the following code:

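    #!/usr/bin/php
    <?php
    // Read raw text from standard input
    $in = fopen("php://stdin", "r");
    $results = array();

    while (($line = fgets($in, 4096)) !== false) {
        // Split the line on non-word characters, dropping empty pieces
        $words = preg_split('/\W/', $line, 0, PREG_SPLIT_NO_EMPTY);
        foreach ($words as $word) {
            $results[] = $word;
        }
    }
    fclose($in);

    // Emit one "word<TAB>1" record per word
    foreach ($results as $key => $value) {
        print "$value\t1\n";
    }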

The gist of this code: find the words in each line of input text and emit one line per word, the word followed by a tab and the count 1. For example:

    hello	1
    world	1
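For comparison, the same mapping step can be sketched in shell. This is not the author's code, only an illustration under assumptions: the function name wc_map is made up, and "word" is taken to mean a run of letters, digits, or underscores, which is what the \W split in the PHP version amounts to for ASCII text.

```shell
#!/bin/sh
# Hypothetical shell counterpart of the PHP mapper.
wc_map() {
    # tr turns every run of non-word characters into a single newline,
    # awk skips empty lines and appends "<TAB>1" to each word.
    tr -cs 'A-Za-z0-9_' '\n' | awk 'length($0) > 0 { printf "%s\t1\n", $0 }'
}

printf 'hello, world!\nhello\n' | wc_map
```

Because it only reads stdin and writes stdout, a function like this could be saved as an executable script and passed to -mapper in the same way as the PHP file.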

This is basically no different from the PHP you have written before, right? Two things may look a little unfamiliar:

PHP as an executable program

The "#!/usr/bin/php" on the first line tells Linux to use /usr/bin/php as the interpreter for the code that follows. Anyone who has written Linux shell scripts will recognise this: scripts conventionally begin with a line such as #!/bin/bash or #!/usr/bin/python.

With this line in place and the file marked executable (chmod +x wc_mapper.php), you can run wc_mapper.php directly, just like cat or grep: ./wc_mapper.php

Use stdin to receive input

PHP can receive input in several ways. The most familiar is the $_GET and $_POST superglobals, which hold parameters passed over the Web; another is $_SERVER['argv'], which holds parameters passed on the command line. Here the script reads standard input (stdin) instead. In practice it behaves like this:

Enter ./wc_mapper.php in the Linux console

wc_mapper.php starts and the console waits for keyboard input

The user types some text

The user presses Ctrl + D to end the input; wc_mapper.php finishes reading and prints its results
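Hadoop does not type at a keyboard: it feeds the program through a pipe, and end-of-input arrives when the pipe is closed. The sketch below illustrates that with a small stand-in reader; count_stdin_lines is a made-up name and is not part of the word counter.

```shell
#!/bin/sh
# Hypothetical stand-in for any stdin-driven program.
count_stdin_lines() {
    n=0
    # read returns non-zero at end of input, which ends the loop:
    # Ctrl + D at a keyboard, or the upstream command exiting in a pipe.
    while IFS= read -r line; do
        n=$((n + 1))
    done
    echo "$n"
}

# The pipe supplies the input; no Ctrl + D is needed.
printf 'first line\nsecond line\n' | count_stdin_lines
```

The same idea gives a quick local check of the mapper: pipe a text file into ./wc_mapper.php instead of typing the text by hand.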

So where is stdout? print already writes to stdout, exactly as it does in the CLI scripts we wrote before.

3. Write Reducer

Create a new wc_reducer.php and write the following code:

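    #!/usr/bin/php
    <?php
    // Read "word<TAB>count" records from standard input
    $in = fopen("php://stdin", "r");
    $results = array();

    while (($line = fgets($in, 4096)) !== false) {
        // Split each record into the word and its count
        list($key, $value) = preg_split("/\t/", trim($line), 2);
        // Initialise the counter on first sight to avoid an undefined-index notice
        if (!isset($results[$key])) {
            $results[$key] = 0;
        }
        $results[$key] += (int) $value;
    }
    fclose($in);

    // Sort by word, then emit "word<TAB>total"
    ksort($results);
    foreach ($results as $key => $value) {
        print "$key\t$value\n";
    }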

The main idea of this code is to count how many times each word appears and emit one line per word, the word followed by a tab and its total. For example:

    hello	2
    world	1
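The reduce step can likewise be sketched in shell with awk. Again this is an illustration rather than the author's code: wc_reduce is a made-up name, and the input is assumed to be well-formed "word<TAB>count" lines like those the mapper emits.

```shell
#!/bin/sh
# Hypothetical shell counterpart of the PHP reducer.
wc_reduce() {
    # Sum the counts per word, then sort by word (the PHP version uses ksort).
    awk -F '\t' '{ count[$1] += $2 }
                 END { for (w in count) printf "%s\t%d\n", w, count[w] }' | sort
}

printf 'hello\t1\nworld\t1\nhello\t1\n' | wc_reduce
```

Like the PHP reducer, this keeps every distinct word in memory until the input ends, which is acceptable for a demo but worth keeping in mind for very large vocabularies.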

4. Use Hadoop to run

Upload the sample text to be counted

hadoop fs -put *.TXT /tmp/input

Execute PHP mapreduce program in Streaming mode

Note:

The input and output directories are paths on HDFS

The mapper and reducer are paths on the local machine. Always use absolute paths rather than relative ones; otherwise Hadoop reports an error saying that the mapreduce program cannot be found.
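One way to avoid relative paths is to resolve them before building the command. The following is a sketch under assumptions: abs_path is a hypothetical helper, the jar name and SOME_OUTPUT_DIR are the placeholders from the first example, and the final command is only printed, not run.

```shell
#!/bin/sh
# Hypothetical helper: turn a possibly relative path into an absolute one.
abs_path() {
    # Resolve the directory part with cd/pwd, then re-attach the file name.
    dir=$(CDPATH= cd -- "$(dirname -- "$1")" && pwd) || return 1
    printf '%s/%s\n' "${dir%/}" "$(basename -- "$1")"
}

mapper=$(abs_path ./wc_mapper.php)
reducer=$(abs_path ./wc_reducer.php)

# Print the command for inspection instead of executing it.
echo "hadoop jar hadoop-streaming.jar -input /tmp/input -output SOME_OUTPUT_DIR -mapper $mapper -reducer $reducer"
```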

View results

5. Shell version of Hadoop MapReduce program

    #!/bin/bash -

    # Load configuration file
    source './config.sh'

    # Process command line parameters
    while getopts "d:" arg
    do
        case $arg in
            d)
                date=$OPTARG
                ;;
            ?)
                echo "unknown argument"
                ;;
        esac
    done

    # The default processing date is yesterday (BSD/macOS date syntax)
    default_date=`date -v-1d +%Y-%m-%d`
    date=${date:-${default_date}}

    # Anchored pattern; months 01-12, days 01-31
    if ! [[ "$date" =~ ^[12][0-9]{3}-(0[1-9]|1[0-2])-(0[1-9]|[12][0-9]|3[01])$ ]]
    then
        echo "invalid date(yyyy-mm-dd): $date"
        exit 1
    fi

    # Files to be processed
    log_files=$(${hadoop_home}bin/hadoop fs -ls ${log_file_dir_in_hdfs} | awk '{print $8}' | grep "$date")
    # Count non-empty lines; quoting keeps one file per line
    log_files_amount=$(echo "$log_files" | grep -c .)
    if [ $log_files_amount -lt 1 ]
    then
        echo "no log files found"
        exit 0
    fi

    # Input file list
    for f in $log_files
    do
        :   # loop body not shown in the original
    done

    function map_reduce () {
        # Abort the whole script if the streaming job fails
        if ! ${hadoop_home}bin/hadoop jar ${streaming_jar_path} -input${input_files_list} -output ${mapreduce_output_dir}${date}/${1}/ -mapper "${mapper} ${1}" -reducer "${reducer}" -file "${mapper}"
        then
            exit 1
        fi
    }

    # Loop through each bucket
    for bucket in ${bucket_list[@]}
    do
        map_reduce "$bucket"   # assumed call; loop body not shown in the original
    done
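The date -v-1d form used for the default date is BSD/macOS syntax; GNU date on most Linux systems expects -d yesterday instead. A minimal sketch of a more portable variant follows, with the date check written for plain sh. The function names yesterday and is_valid_date are made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: try the GNU form first, then fall back to BSD/macOS.
yesterday() {
    date -d yesterday +%Y-%m-%d 2>/dev/null || date -v-1d +%Y-%m-%d
}

# Hypothetical helper: succeed only for yyyy-mm-dd with month 01-12, day 01-31.
# This checks the shape only; it would still accept 2024-02-31.
is_valid_date() {
    printf '%s\n' "$1" |
        grep -Eq '^[12][0-9]{3}-(0[1-9]|1[0-2])-(0[1-9]|[12][0-9]|3[01])$'
}

d=$(yesterday)
if is_valid_date "$d"; then
    echo "processing date: $d"
else
    echo "invalid date(yyyy-mm-dd): $d"
fi
```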

www.bkjia.com

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1359

1359

52

52

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 brings several new features, security improvements, and performance improvements with healthy amounts of feature deprecations and removals. This guide explains how to install PHP 8.4 or upgrade to PHP 8.4 on Ubuntu, Debian, or their derivati

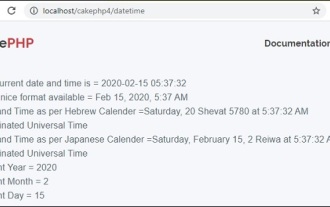

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

To work with date and time in cakephp4, we are going to make use of the available FrozenTime class.

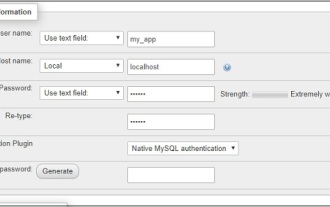

CakePHP Working with Database

Sep 10, 2024 pm 05:25 PM

CakePHP Working with Database

Sep 10, 2024 pm 05:25 PM

Working with database in CakePHP is very easy. We will understand the CRUD (Create, Read, Update, Delete) operations in this chapter.

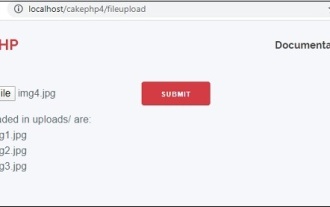

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

To work on file upload we are going to use the form helper. Here, is an example for file upload.

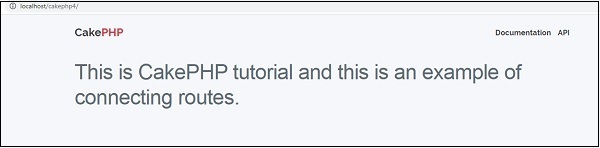

CakePHP Routing

Sep 10, 2024 pm 05:25 PM

CakePHP Routing

Sep 10, 2024 pm 05:25 PM

In this chapter, we are going to learn the following topics related to routing ?

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

CakePHP is an open-source framework for PHP. It is intended to make developing, deploying and maintaining applications much easier. CakePHP is based on a MVC-like architecture that is both powerful and easy to grasp. Models, Views, and Controllers gu

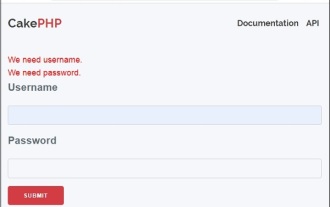

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

Validator can be created by adding the following two lines in the controller.

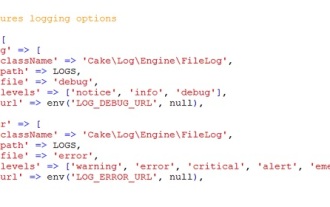

CakePHP Logging

Sep 10, 2024 pm 05:26 PM

CakePHP Logging

Sep 10, 2024 pm 05:26 PM

Logging in CakePHP is a very easy task. You just have to use one function. You can log errors, exceptions, user activities, action taken by users, for any background process like cronjob. Logging data in CakePHP is easy. The log() function is provide