How to implement article collection in PHP

Most data collection relies on regular expressions. Here I briefly outline how to implement a collector in PHP. Such a script is usually run on a local machine. Running it on hosted web space is unwise: it consumes a lot of resources, and the host must support remote fetching functions such as file_get_contents($url) and file($url).

1. Automatic switching of the article list page and obtaining the article path.

2. Obtain: title, content

3. Storage

4. Question

1. Automatic switching of the article list page and obtaining the article path.

a. Automatic switching between list pages generally relies on dynamic pages whose URL carries a page number. For example,

http://www.phpfirst.com/foru... d=1&page=$i

Switching pages then amounts to incrementing $i or iterating it over a range, e.g. $i++;

It can also work like the version demonstrated by penzi: from a start page to an end page, with the range of $i controlled in code.
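The page switching described above could be sketched roughly as follows. The function name listPageUrls and the example.com URL are assumptions made for illustration; substitute the real list-page pattern of the site you are collecting from.

```php
<?php
// Build the list-page URLs for pages $first..$last.
// $pattern is a hypothetical URL in which %d marks the page number.
function listPageUrls(string $pattern, int $first, int $last): array
{
    $urls = [];
    for ($i = $first; $i <= $last; $i++) {
        $urls[] = sprintf($pattern, $i);
    }
    return $urls;
}

print_r(listPageUrls('http://example.com/forumdisplay.php?fid=1&page=%d', 1, 3));
```

Each returned URL can then be fetched in turn to collect the article links on that page.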

b. There are two ways to obtain the article paths: one requires supplying matching rules (regular expressions), the other does not:

1) Without rules: collect every link on the article list page above

It is best to filter and process the links, though: detect duplicate links and keep only one copy, and convert relative paths (such as ../ and ./) into absolute paths.

The following is the messy implementation function I wrote:

PHP:

------------------------------------------------ ----------------------------------

<?php
//$e = clinchgeturl("http://phpfirst.com/forumdisplay.php?fid=1");
//var_dump($e);

// Collect the unique, absolute page links found at $url.
// eregi(), split() and each() no longer exist in current PHP,
// so this version uses preg_*(), explode() and foreach.
function clinchgeturl($url)
{
    // $siteroot is "http://host/"; $rootpath is the directory part of $url.
    $parts = explode("/", preg_replace('/[?#].*$/', '', $url));
    $siteroot = implode("/", array_slice($parts, 0, 3)) . "/";
    if (count($parts) > 3) {
        array_pop($parts); // drop the file name (or the empty tail after "/")
        $rootpath = implode("/", $parts) . "/";
    } else {
        $rootpath = $siteroot;
    }

    echo "$url has the following links:\n";
    $html = file_get_contents($url);
    if ($html === false) {
        return array(array());
    }

    $out = array(array());
    // Capture the value of every href attribute, quoted or not.
    preg_match_all('/href\s*=\s*["\']?([^"\'\s>]+)/i', $html, $regs);
    foreach ($regs[1] as $link) {
        if (!preg_match('#^https?://#i', $link)) {
            // "/x" is relative to the site root; "x", "./x" and "../x"
            // are relative to the directory of the list page.
            $base = ($link[0] === "/") ? $siteroot : $rootpath;
            $segments = explode("/", substr($base, strlen($siteroot)) . ltrim($link, "/"));
            $path = array();
            foreach ($segments as $seg) {
                if ($seg === "..") {
                    array_pop($path);      // go up one directory
                } elseif ($seg !== ".") {
                    $path[] = $seg;
                }
            }
            $link = $siteroot . implode("/", $path);
        }
        // Keep only links that look like pages.
        if (preg_match('/\.(htm|shtm|html|asp|aspx|php|jsp|cgi)/i', $link)) {
            $out[0][] = $link;
        }
    }

    // Remove duplicate links and renumber the list from 0.
    $out[0] = array_values(array_unique($out[0]));
    return $out;
}
?>

The code above really ought to be Zend-encoded; otherwise it is an eyesore :(

Once all the unique links have been collected, they are stored in an array.

2) With rules: processing that requires supplying delimiters or regular expressions

If you want to get exactly the article links you need, use this method.

Following Ketle's idea, use:

PHP:

------------------------------------------------ ----------------------------------

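// Return the part of $file between the first $from and the next $end.
function cut($file, $from, $end) {
    $message = explode($from, $file, 2);
    if (count($message) < 2) {
        return ""; // $from does not occur in $file
    }
    $message = explode($end, $message[1], 2);
    return $message[0];
}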

$from is the HTML code immediately before the list.

$end is the HTML code immediately after the list.

Both parameters can be submitted through a form.

This removes the parts of the list page that are not the list; what remains are the links you need.

They can then be processed with a regular expression such as the following:

PHP:

------------------------------------------------ ----------------------------------

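// "#" is used as the delimiter because the pattern itself contains "/".
preg_match("#^(http://)?(.*)#i", $url, $matches);
return $matches[2]; // $url without its leading "http://"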
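As a rough sketch of how cutting and matching fit together: the fragment between the two markers is cut out first, and the href values are then pulled from it with preg_match_all(). The helper name listLinks, the sample HTML and the markers are all assumptions made for illustration.

```php
<?php
// Same idea as the cut() function above, repeated so the sketch runs on its own.
function cut(string $file, string $from, string $end): string
{
    $parts = explode($from, $file, 2);
    if (count($parts) < 2) {
        return ''; // start marker not found
    }
    return explode($end, $parts[1], 2)[0];
}

// Hypothetical helper: return every unique href value inside the list fragment.
function listLinks(string $html, string $from, string $end): array
{
    $fragment = cut($html, $from, $end);
    preg_match_all('/href\s*=\s*["\']?([^"\'\s>]+)/i', $fragment, $m);
    return array_values(array_unique($m[1]));
}

$page = '<a href="/home.php">Home</a><ul id="list">'
      . '<li><a href="a1.html">One</a></li>'
      . '<li><a href="a2.html">Two</a></li></ul><a href="/about.php">About</a>';
print_r(listLinks($page, '<ul id="list">', '</ul>'));
```

Only a1.html and a2.html are returned here; the navigation links outside the markers are ignored, which is the point of cutting before matching.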

2. Obtain: title, content

a. First, use the article path obtained above to read the target page.

You can use the following function:

PHP:

------------------------------------------------ ----------------------------------

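// Read the document at $url in 2048-byte chunks and return it as one string.
function getcontent($url) {
    if ($handle = fopen($url, "rb")) {
        $contents = "";
        do {
            $data = fread($handle, 2048);
            if ($data === false || strlen($data) == 0) {
                break; // read error or nothing more to read
            }
            $contents .= $data;
        } while (true);
        fclose($handle);
    } else {
        exit(".....");
    }
    return $contents;
}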

Or directly

PHP:

------------------------------------------------ ----------------------------------

file_get_contents($urls);

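The latter is more convenient, but comparing it with the function above shows its shortcomings: for example, it reads everything in one call, so you cannot process the data chunk by chunk.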
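If you do use file_get_contents(), a minimal sketch with a timeout and a failure check might look like this. The wrapper name fetchPage and the 10-second default are assumptions, not part of the original approach.

```php
<?php
// Hypothetical wrapper: return the contents of $url, or null on failure.
function fetchPage(string $url, int $timeoutSeconds = 10): ?string
{
    // The "http" context options are also used for https:// URLs.
    $context = stream_context_create([
        'http' => ['timeout' => $timeoutSeconds],
    ]);
    // @ hides the warning file_get_contents() raises on failure;
    // the false return value is checked instead.
    $contents = @file_get_contents($url, false, $context);
    return $contents === false ? null : $contents;
}

// Usage: skip an article when its page cannot be fetched.
// $page = fetchPage($articleUrl);
// if ($page === null) { continue; }
```

Returning null instead of calling exit() lets a long collection run carry on when a single page fails.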

b. Then get the title.

This is generally done as follows:

PHP:

------------------------------------------------ ----------------------------------

preg_match("||",$allcontent,$title);

The part of the pattern between the | delimiters is supplied through the form.

You can also use a series of cutting functions.

One example is the cut($file,$from,$end) function shown above. Cutting out a specific string can be done with the string-handling functions; this is discussed in more detail under "get content" below.
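For the common case where the title sits in a standard <title> element, the match might be sketched as below. The <title> markers and the function name extractTitle are assumptions; on a real site the markers would come from the form, as described above.

```php
<?php
// Return the text of the first <title> element, or null if there is none.
function extractTitle(string $allcontent): ?string
{
    // "s" lets "." span newlines, "i" ignores case,
    // and ".*?" stops at the first closing tag.
    if (preg_match('|<title[^>]*>(.*?)</title>|is', $allcontent, $title)) {
        return trim($title[1]);
    }
    return null;
}

echo extractTitle("<html><head><title>\n PHP collection </title></head></html>"), "\n";
```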

c. Get the content

The idea of getting the content is the same as getting the title, but the situation is more complicated because the content is not that simple.

1) Special characters in and around the content, such as double quotes, spaces and newlines, are a big obstacle.

Double quotes need to be escaped as \", which addslashes() can do.

Newline characters can be removed like this (ereg_replace() no longer exists in current PHP, so str_replace() is used):

PHP:

------------------------------------------------ ----------------------------------

$a = str_replace("\r", "", $a);

$a = str_replace("\n", "", $a);

2) The second idea is to extract the content with a series of cutting functions. This takes a lot of practice and debugging. I am still working on it and have not made a breakthrough yet.
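Putting the two ideas together, a first attempt might cut the content out between two markers, drop the newlines and escape the quotes. This is only a sketch: the helper name extractContent and the sample markers are assumptions.

```php
<?php
// Hypothetical helper: cut the content out of $html and clean it up.
function extractContent(string $html, string $from, string $end): string
{
    $parts = explode($from, $html, 2);
    if (count($parts) < 2) {
        return ''; // start marker not found
    }
    $body = explode($end, $parts[1], 2)[0];
    $body = str_replace(["\r", "\n"], '', $body); // drop newline characters
    return addslashes(trim($body));               // escape quotes and backslashes
}

$html = "<div id=\"post\">\nHe said \"hi\".\r\n</div>";
echo extractContent($html, '<div id="post">', '</div>'), "\n";
```

Real pages usually need several such markers, tried in turn, before the content comes out cleanly.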

3. Storage

a. Make sure your database accepts the insert

For example, I can insert directly like this:

PHP:

------------------------------------------------ ----------------------------------

$sql = "INSERT INTO $articles VALUES ('', '$title', '', '$article', '', '', 'clinch', 'from', 'keywords', 1, '$columnid', '$time', 1);";

The first value, '', is left empty because that column is filled in automatically in ascending order (auto-increment).
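Because scraped titles and bodies often contain quotes, a prepared statement is a safer way to run the insert than building the SQL string by hand. The sketch below uses PDO. The table and column names are assumptions, and the demo runs against an in-memory SQLite database only so that it is self-contained.

```php
<?php
// Insert one article and return its auto-increment id.
// The table and column names are hypothetical; adjust them to your schema.
function saveArticle(PDO $db, string $title, string $article, int $columnId): int
{
    $stmt = $db->prepare(
        'INSERT INTO articles (title, article, column_id, created_at)
         VALUES (:title, :article, :column_id, :created_at)'
    );
    $stmt->execute([
        ':title'      => $title,
        ':article'    => $article,
        ':column_id'  => $columnId,
        ':created_at' => time(),
    ]);
    return (int) $db->lastInsertId();
}

// Demo against an in-memory SQLite database (needs the pdo_sqlite extension).
$db = new PDO('sqlite::memory:');
$db->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);
$db->exec('CREATE TABLE articles (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    title TEXT, article TEXT, column_id INTEGER, created_at INTEGER)');
echo saveArticle($db, 'It\'s a "title"', 'Body text', 1), "\n";
```

The id column is omitted from the statement, so the database assigns it, and the bound parameters need no addslashes().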

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

CakePHP Project Configuration

Sep 10, 2024 pm 05:25 PM

CakePHP Project Configuration

Sep 10, 2024 pm 05:25 PM

In this chapter, we will understand the Environment Variables, General Configuration, Database Configuration and Email Configuration in CakePHP.

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 brings several new features, security improvements, and performance improvements with healthy amounts of feature deprecations and removals. This guide explains how to install PHP 8.4 or upgrade to PHP 8.4 on Ubuntu, Debian, or their derivati

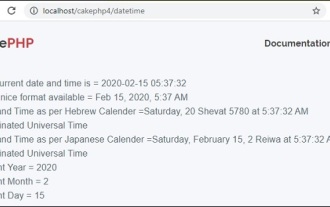

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

To work with date and time in cakephp4, we are going to make use of the available FrozenTime class.

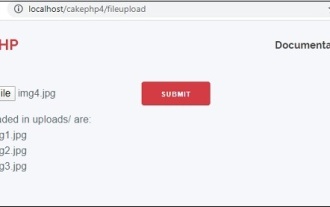

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

To work on file upload we are going to use the form helper. Here, is an example for file upload.

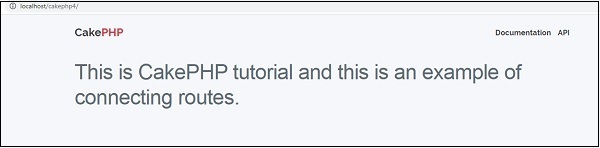

CakePHP Routing

Sep 10, 2024 pm 05:25 PM

CakePHP Routing

Sep 10, 2024 pm 05:25 PM

In this chapter, we are going to learn the following topics related to routing ?

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

CakePHP is an open-source framework for PHP. It is intended to make developing, deploying and maintaining applications much easier. CakePHP is based on a MVC-like architecture that is both powerful and easy to grasp. Models, Views, and Controllers gu

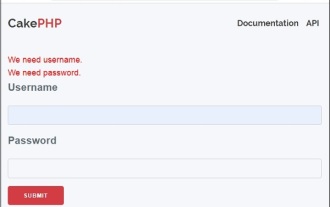

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

Validator can be created by adding the following two lines in the controller.

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

Visual Studio Code, also known as VS Code, is a free source code editor — or integrated development environment (IDE) — available for all major operating systems. With a large collection of extensions for many programming languages, VS Code can be c