A brief introduction to server-side development

I have been doing server-side development for a while now. Now that I have a quiet moment, I want to think through and write down some ideas about it.

Server-side development, and web development in particular, is essentially about handling HTTP requests. By purpose it falls into two kinds: web page development and API development. Web page development can be seen as a form of API development with two main parts, pages and Ajax requests: a page request returns HTML, while an Ajax request may return HTML or another format. API development serves client products, which may be mobile apps, PC applications, and so on.

Application architecture

The application stack is usually LNMP or LAMP. The basic layout is N front-end web server machines, which pass requests to PHP over CGI (typically FastCGI via php-fpm), and PHP in turn accesses MySQL.

PHP can be thought of as a large web framework written in C. Its advantage is that it is interpreted, so code can be modified and take effect immediately, which makes updating and maintaining code in production very cheap. It also ships with functions that are practically tailor-made for the web, so it suits web development well. By contrast, building web services in Java means recompiling after every change, which is painful enough on its own.

More and more web servers now run nginx. Its advantages over Apache are that it is lightweight and handles highly concurrent static-page requests well. When you get a machine, the first thing to work out is roughly how many QPS a single machine can sustain. The usual approach is to look at memory first and calculate how many php-fpm (php-cgi) worker processes can run at the same time. For example, on a machine with 4 GB of memory where each php-fpm process takes about 20 MB, roughly 200 processes can be started (usually with some memory left free). Each process runs only one PHP script at a time, so if each script takes 0.1 s, one process handles 10 requests per second and the machine handles about 200 × 10 = 2000 QPS. In practice you would not push the process count to that limit, for several reasons:

1 Other processes on the machine need memory too.

2 You need to decide what happens when memory runs out: is swap enabled, and if it is not, will the machine go down immediately?

3 CPU and bandwidth usage also matter. CPU-heavy operations such as encryption, decryption, and video transcoding take a long time; if they are not moved to a queue, they lengthen every request.
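The memory-based estimate above can be written down as a small calculation. This is only a sketch: the function name `estimate_qps` and the `reserved_mb` parameter (memory set aside for other processes) are illustrative assumptions, and the figures are the ones used in the text.

```python
def estimate_qps(total_mem_mb, per_process_mb, avg_request_s, reserved_mb=0):
    """Rough upper bound on single-machine QPS, limited by memory only."""
    usable_mb = total_mem_mb - reserved_mb
    # Each worker handles one request at a time, so workers = max concurrency.
    workers = usable_mb // per_process_mb
    # One worker completes 1 / avg_request_s requests per second.
    qps = workers / avg_request_s
    return workers, qps


# Figures from the text: 4 GB of memory, ~20 MB per worker, 0.1 s per request.
workers, qps = estimate_qps(4096, 20, 0.1)
print(workers, qps)

# Setting 1 GB aside for the OS and other processes lowers the ceiling.
reserved_workers, reserved_qps = estimate_qps(4096, 20, 0.1, reserved_mb=1024)
print(reserved_workers, reserved_qps)
```

The result is a ceiling, not a target: it ignores CPU and bandwidth, which is exactly why the list above argues for leaving headroom.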

File Server

The file server and the web server should generally be separated, meaning they are split under different domain names. A web server can double as a file server, but a file server has to accept uploads, and an upload is slow: it keeps a PHP process occupied for a long time. That is why the two should be separated. Separation makes it easier to scale the file server later, and it keeps file traffic from affecting normal web service.

The reasoning behind splitting off the file server generalizes: interfaces that consume a lot of resources, or that are called especially often, can or should be moved onto separate machines.

Web front-end machines always need persistent data, and MySQL is the most common store. Almost every web service boils down to creating, reading, updating, and deleting rows in a database, so the performance of those operations largely determines the performance of the whole site. That makes table design and index usage especially important. The MySQL techniques I find most useful are:

1 Covering indexes. Design an index so that a query can be answered from the index alone, without reading the table rows. Building suitable indexes, and writing queries that need only the indexed columns, improves efficiency.

2 In InnoDB tables, prefer an auto-increment primary key; it makes inserts more efficient.

3 Think about whether string columns should be varchar or char. On one project, changing columns from char to varchar took the database from about 70 GB to about 20 GB.

4 When creating tables, plan for future sharding of databases and tables. If you shard, what is the sharding key? Do you need a reverse lookup table?

5 You may even have to consider how databases and web machines are distributed across data centers, which makes the design more complicated still.
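To make the covering-index idea from item 1 concrete, here is a small runnable sketch. It uses Python's built-in SQLite as a stand-in so that it runs without a server; this is an assumption for illustration, not MySQL itself, and the table and index names are hypothetical. In MySQL the equivalent check is `EXPLAIN`, where a covering index shows up as "Using index" in the Extra column.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT, name TEXT, bio TEXT)"
)
# The index holds every column the query below reads (email, name),
# so the query can be answered from the index without touching the table.
conn.execute("CREATE INDEX idx_users_email_name ON users (email, name)")
conn.execute(
    "INSERT INTO users (email, name, bio) VALUES (?, ?, ?)",
    ("a@example.com", "Alice", "long text the query never needs"),
)

query = "SELECT name FROM users WHERE email = ?"
plan = conn.execute("EXPLAIN QUERY PLAN " + query, ("a@example.com",)).fetchall()
# The last column of each plan row is the human-readable description.
plan_text = " ".join(str(row[-1]) for row in plan)
print(plan_text)

row = conn.execute(query, ("a@example.com",)).fetchone()
print(row[0])
```

If the query also selected `bio`, the index would no longer cover it and the engine would have to go back to the table rows.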

Table design in MySQL depends heavily on requirements. Without clear requirements, the table design is bound to be wrong.
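The sharding questions in the list above (what the sharding key is, and whether a reverse lookup table is needed) can be sketched with in-memory dictionaries standing in for database shards. Everything here is a hypothetical illustration: the shard count, the modulo routing, and the choice of `user_id` as the key are assumptions, not a recommendation from the original text.

```python
SHARD_COUNT = 4  # hypothetical number of shards

# One dict per shard, standing in for separate databases or tables.
shards = [dict() for _ in range(SHARD_COUNT)]

# Reverse lookup table: maps a non-key attribute back to the sharding key,
# so a query by email does not have to scan every shard.
email_to_user_id = {}


def shard_for(user_id):
    """Route a row to a shard by its sharding key (here: user_id)."""
    return user_id % SHARD_COUNT


def insert_user(user_id, email, name):
    shards[shard_for(user_id)][user_id] = {"email": email, "name": name}
    email_to_user_id[email] = user_id


def find_by_email(email):
    user_id = email_to_user_id.get(email)
    if user_id is None:
        return None
    return shards[shard_for(user_id)][user_id]


insert_user(10, "a@example.com", "Alice")
print(shard_for(10), find_by_email("a@example.com"))
```

Queries by `user_id` go straight to one shard; queries by any other column need either a lookup table like this one or a scan of all shards, which is why the key has to be chosen with the real query patterns in mind.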

The database alone may not keep up, and the first remedy that comes to mind is usually caching. That raises its own questions. Is the cache global, or does it live on each web front-end machine? Which hash algorithm distributes the keys? Which cache do you use: memcache or redis? MySQL also has its own query cache (removed in MySQL 8.0); how should queries be written to hit it more often? When data is updated, the cached copy becomes dirty; how is it refreshed?
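One common answer to the dirty-data question is to read through the cache and delete the cached entry on every write. The sketch below shows that pattern with plain dictionaries standing in for MySQL and for memcache or redis; the class and attribute names are hypothetical, and this is one possible policy rather than the only one.

```python
class UserStore:
    """Cache-aside reads with invalidate-on-write, using dicts as stand-ins."""

    def __init__(self):
        self.db = {}       # stand-in for MySQL
        self.cache = {}    # stand-in for memcache or redis
        self.db_reads = 0  # counts how often a read reached the database

    def get(self, user_id):
        if user_id in self.cache:
            return self.cache[user_id]  # cache hit
        self.db_reads += 1
        value = self.db.get(user_id)    # cache miss: go to the database
        if value is not None:
            self.cache[user_id] = value
        return value

    def update(self, user_id, value):
        self.db[user_id] = value
        # Delete rather than overwrite, so the next read reloads fresh data
        # and a stale entry never outlives the write.
        self.cache.pop(user_id, None)


store = UserStore()
store.update(1, "alice")
first = store.get(1)    # miss, loads from the database
second = store.get(1)   # hit, served from the cache
store.update(1, "alice-renamed")
third = store.get(1)    # miss again, because the write invalidated the entry
print(first, second, third, store.db_reads)
```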

Web page development

When you set out to build a website, the first question is how many users it will have. Building a social networking (SNS) site and building an operations back office are two completely different problems.

First, the load is different. The QPS of an SNS site may be in the thousands or tens of thousands, while the load on an operations back office is negligible. That means the database support behind them differs as well. An SNS site most often calls the friend-relationship and personal-profile interfaces. Should those interfaces be deployed separately? Many such requests are repeated, so should middleware or a cache absorb them before they reach the database? Operations data, by contrast, can usually live in a single table. Personally, I find statistics the hardest operations requirement to deliver. For one thing, no statistics system can answer every possible question, so the scope has to be agreed with the product side. For another, statistics usually rely on access logs and the like, which can mean a lot of shell scripts.

API Development

Compared with web development, API development is more constrained. The client may be a mobile app or a PC application, with releases and versions, so an API cannot simply change its behavior whenever the code is updated, the way a website can. You have to think much more about compatibility across versions, and compatibility mostly means adding things and never removing them. If you accept every requirement without restraint, the if/else branches in your code multiply with each version until the code becomes unmaintainable. Then someone else takes over, falls into the traps, and refactors while cursing... API development leans on testing more than anything else. Often only the testers can recount every small change and small feature in each version.
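One way to keep version-specific behavior from spreading into if/else chains is to register a handler per API version and dispatch on the client's version. This is a sketch of that idea only; the handler names, the version numbers, and the "newest handler not newer than the client" rule are all assumptions for illustration.

```python
import bisect


# Hypothetical handlers: each API version keeps its own response shape.
def profile_v1(user):
    return {"name": user["name"]}


def profile_v2(user):
    # v2 only adds a field; nothing from v1 is removed.
    return {"name": user["name"], "avatar": user["avatar"]}


HANDLERS = {1: profile_v1, 2: profile_v2}
VERSIONS = sorted(HANDLERS)


def handle_profile(client_version, user):
    """Pick the newest handler that is not newer than the client."""
    index = bisect.bisect_right(VERSIONS, client_version) - 1
    if index < 0:
        raise ValueError("unsupported client version")
    return HANDLERS[VERSIONS[index]](user)


user = {"name": "Alice", "avatar": "a.png"}
print(handle_profile(1, user))
print(handle_profile(3, user))  # no v3 handler yet, so v2 answers
```

Adding a version then means adding one function and one dictionary entry, while old clients keep hitting code that nobody has to touch.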

Non-functional concerns to consider:

You may need to keep statistics on API call times, so that you can understand how your interface performs.
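A lightweight way to collect call-time statistics is to wrap each interface in a timing decorator. The sketch below keeps counts and total time in an in-process dictionary; the names `timed` and `call_stats` are hypothetical, and a real service would more likely ship these numbers to a log or a metrics system.

```python
import functools
import time
from collections import defaultdict

# Per-interface counters: number of calls and total time spent.
call_stats = defaultdict(lambda: {"count": 0, "total_s": 0.0})


def timed(name):
    """Record how long each call to the decorated function takes."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return func(*args, **kwargs)
            finally:
                # Runs on success and on exceptions, so failures are timed too.
                stats = call_stats[name]
                stats["count"] += 1
                stats["total_s"] += time.perf_counter() - start
        return wrapper
    return decorator


@timed("get_profile")
def get_profile(user_id):
    return {"id": user_id}


get_profile(1)
get_profile(2)
stats = call_stats["get_profile"]
print(stats["count"], stats["total_s"] / stats["count"])
```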

Your code may call services on other machines, for example by making a curl request to another service. In that case, plan for error handling and logging.
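As a sketch of what error handling and logging around a remote call might look like, the function below wraps an HTTP request with a timeout, logs any failure with its context, and returns `None` instead of letting the error escape. The function name `fetch`, the logger name, and the injectable `opener` parameter (there so the call can be replaced in tests) are assumptions for illustration.

```python
import logging
import urllib.request

logger = logging.getLogger("remote")


def fetch(url, timeout_s=2.0, opener=urllib.request.urlopen):
    """Return the response body, or None if the remote call fails."""
    try:
        with opener(url, timeout=timeout_s) as response:
            return response.read()
    except OSError as exc:
        # URLError, HTTPError, and socket timeouts are all OSError subclasses.
        # Log enough context to trace the failure later, then degrade gracefully.
        logger.error("remote call failed: url=%s error=%s", url, exc)
        return None
```

The timeout matters as much as the logging: without it, a slow dependency holds a worker process for as long as the remote side takes to answer.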

For interfaces that involve monetary transactions, logging is even more essential.

Internal errors should not be passed straight through to the user, so it is best to whitelist the errors that may be shown.
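A whitelist error mechanism can be sketched as follows: only exceptions of a designated type are turned into client-visible messages, and everything else is logged in full but replaced by a generic response. The class name `ApiError`, the error codes, and the response shape are hypothetical.

```python
import logging

logger = logging.getLogger("api")


class ApiError(Exception):
    """Errors that are safe to show to the client (the whitelist)."""

    def __init__(self, code, message):
        super().__init__(message)
        self.code = code
        self.message = message


def to_response(exc):
    """Convert any exception into a response body for the client."""
    if isinstance(exc, ApiError):
        return {"code": exc.code, "message": exc.message}
    # Anything not on the whitelist is logged in full but hidden from the user.
    logger.error("internal error: %r", exc)
    return {"code": 500, "message": "Internal error"}


print(to_response(ApiError(1001, "Invalid token")))
print(to_response(KeyError("db_password")))
```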

An interface generally needs at least a signature mechanism for security. Beyond that, encryption falls into two broad types, symmetric and asymmetric. When choosing, take practical constraints into account, such as power consumption on a mobile client.
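A minimal signature mechanism can be built from a shared secret and HMAC. The sketch below signs the request parameters in sorted order with HMAC-SHA256 and verifies them on the server side. It is one possible symmetric scheme, not the one the author used; the parameter names and the secret are hypothetical, and a real protocol would also need replay protection such as checking the timestamp.

```python
import hashlib
import hmac
from urllib.parse import urlencode


def sign(params, secret):
    """HMAC-SHA256 over the parameters in sorted key order."""
    canonical = urlencode(sorted(params.items()))
    return hmac.new(secret, canonical.encode("utf-8"), hashlib.sha256).hexdigest()


def verify(params, signature, secret):
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(sign(params, secret), signature)


secret = b"shared-secret"  # hypothetical key shared by client and server
params = {"user_id": "42", "ts": "1700000000"}
signature = sign(params, secret)

print(verify(params, signature, secret))
print(verify({**params, "user_id": "43"}, signature, secret))  # tampered request
```

Sorting the parameters makes the signature independent of the order in which the client happens to send them.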

If the interface serves mobile phones, you also need to consider data usage and image sizes.

The architecture will always change. Leaving aside changing requirements, user numbers alone force it: the architecture for 200,000 users and the one for 1,000,000 users are bound to differ, and you cannot reasonably build for 1,000,000 users at the start when only 200,000 will use the system. A good server-side architecture therefore goes through several major changes as the user base grows.

…

Postscript

I wrote this article as the thoughts came to me, and I find I cannot keep going... In short, web service development is full of tricks and small details. Some pitfalls you only understand after falling into them. The funny part is that I am still falling into them...

In addition, interface refactoring is something almost every server-side developer has to go through. Compared with building a new system, refactoring an interface is extremely hard, where "hard" can also be read as "unpleasant"... but it is also very good training. Testing is especially important for refactoring, and building a test suite good enough to guarantee that the refactoring is correct is difficult in itself.

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1378

1378

52

52

Where can I view the records of things I have purchased on Pinduoduo? How to view the records of purchased products?

Mar 12, 2024 pm 07:20 PM

Where can I view the records of things I have purchased on Pinduoduo? How to view the records of purchased products?

Mar 12, 2024 pm 07:20 PM

Pinduoduo software provides a lot of good products, you can buy them anytime and anywhere, and the quality of each product is strictly controlled, every product is genuine, and there are many preferential shopping discounts, allowing everyone to shop online Simply can not stop. Enter your mobile phone number to log in online, add multiple delivery addresses and contact information online, and check the latest logistics trends at any time. Product sections of different categories are open, search and swipe up and down to purchase and place orders, and experience convenience without leaving home. With the online shopping service, you can also view all purchase records, including the goods you have purchased, and receive dozens of shopping red envelopes and coupons for free. Now the editor has provided Pinduoduo users with a detailed online way to view purchased product records. method. 1. Open your phone and click on the Pinduoduo icon.

Four recommended AI-assisted programming tools

Apr 22, 2024 pm 05:34 PM

Four recommended AI-assisted programming tools

Apr 22, 2024 pm 05:34 PM

This AI-assisted programming tool has unearthed a large number of useful AI-assisted programming tools in this stage of rapid AI development. AI-assisted programming tools can improve development efficiency, improve code quality, and reduce bug rates. They are important assistants in the modern software development process. Today Dayao will share with you 4 AI-assisted programming tools (and all support C# language). I hope it will be helpful to everyone. https://github.com/YSGStudyHards/DotNetGuide1.GitHubCopilotGitHubCopilot is an AI coding assistant that helps you write code faster and with less effort, so you can focus more on problem solving and collaboration. Git

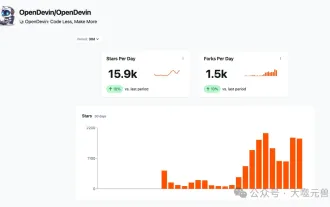

Which AI programmer is the best? Explore the potential of Devin, Tongyi Lingma and SWE-agent

Apr 07, 2024 am 09:10 AM

Which AI programmer is the best? Explore the potential of Devin, Tongyi Lingma and SWE-agent

Apr 07, 2024 am 09:10 AM

On March 3, 2022, less than a month after the birth of the world's first AI programmer Devin, the NLP team of Princeton University developed an open source AI programmer SWE-agent. It leverages the GPT-4 model to automatically resolve issues in GitHub repositories. SWE-agent's performance on the SWE-bench test set is similar to Devin, taking an average of 93 seconds and solving 12.29% of the problems. By interacting with a dedicated terminal, SWE-agent can open and search file contents, use automatic syntax checking, edit specific lines, and write and execute tests. (Note: The above content is a slight adjustment of the original content, but the key information in the original text is retained and does not exceed the specified word limit.) SWE-A

Learn how to develop mobile applications using Go language

Mar 28, 2024 pm 10:00 PM

Learn how to develop mobile applications using Go language

Mar 28, 2024 pm 10:00 PM

Go language development mobile application tutorial As the mobile application market continues to boom, more and more developers are beginning to explore how to use Go language to develop mobile applications. As a simple and efficient programming language, Go language has also shown strong potential in mobile application development. This article will introduce in detail how to use Go language to develop mobile applications, and attach specific code examples to help readers get started quickly and start developing their own mobile applications. 1. Preparation Before starting, we need to prepare the development environment and tools. head

What is the correct way to restart a service in Linux?

Mar 15, 2024 am 09:09 AM

What is the correct way to restart a service in Linux?

Mar 15, 2024 am 09:09 AM

What is the correct way to restart a service in Linux? When using a Linux system, we often encounter situations where we need to restart a certain service, but sometimes we may encounter some problems when restarting the service, such as the service not actually stopping or starting. Therefore, it is very important to master the correct way to restart services. In Linux, you can usually use the systemctl command to manage system services. The systemctl command is part of the systemd system manager

Which Linux distribution is best for Android development?

Mar 14, 2024 pm 12:30 PM

Which Linux distribution is best for Android development?

Mar 14, 2024 pm 12:30 PM

Android development is a busy and exciting job, and choosing a suitable Linux distribution for development is particularly important. Among the many Linux distributions, which one is most suitable for Android development? This article will explore this issue from several aspects and give specific code examples. First, let’s take a look at several currently popular Linux distributions: Ubuntu, Fedora, Debian, CentOS, etc. They all have their own advantages and characteristics.

How to solve the problem of jQuery AJAX error 403?

Feb 23, 2024 pm 04:27 PM

How to solve the problem of jQuery AJAX error 403?

Feb 23, 2024 pm 04:27 PM

How to solve the problem of jQueryAJAX error 403? When developing web applications, jQuery is often used to send asynchronous requests. However, sometimes you may encounter error code 403 when using jQueryAJAX, indicating that access is forbidden by the server. This is usually caused by server-side security settings, but there are ways to work around it. This article will introduce how to solve the problem of jQueryAJAX error 403 and provide specific code examples. 1. to make

Understanding VSCode: What is this tool used for?

Mar 25, 2024 pm 03:06 PM

Understanding VSCode: What is this tool used for?

Mar 25, 2024 pm 03:06 PM

"Understanding VSCode: What is this tool used for?" 》As a programmer, whether you are a beginner or an experienced developer, you cannot do without the use of code editing tools. Among many editing tools, Visual Studio Code (VSCode for short) is very popular among developers as an open source, lightweight, and powerful code editor. So, what exactly is VSCode used for? This article will delve into the functions and uses of VSCode and provide specific code examples to help readers