Use PHP to determine whether a web page is gzip-compressed


Last night a friend in the group was scraping web pages and found that pages fetched with file_get_contents were garbled when saved locally, even though they looked normal in the browser. The response header contained Content-Encoding: gzip.
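Whether a response is compressed can also be read from its headers. The sketch below is an illustration, not part of the original code: `hasGzipContentEncoding` is a hypothetical helper that scans an array of raw header lines, such as the array returned by PHP's `get_headers()`, for `Content-Encoding: gzip`.

```php
<?php
/**
 * Hypothetical helper: returns true if any raw header line declares
 * a gzip Content-Encoding. Header names are matched case-insensitively.
 */
function hasGzipContentEncoding(array $headerLines)
{
    foreach ($headerLines as $line) {
        // Match "Content-Encoding: gzip" (also "x-gzip"), ignoring case and spacing.
        if (preg_match('/^content-encoding:\s*(x-)?gzip\s*$/i', $line)) {
            return true;
        }
    }
    return false;
}

// Example with header lines written out by hand (no network access needed).
$headers = array(
    'HTTP/1.1 200 OK',
    'Content-Type: text/html',
    'Content-Encoding: gzip',
);
var_dump(hasGzipContentEncoding($headers));
```

Against a live site, the header lines could come from `get_headers($url)` instead of the hand-written array.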

Having run into this before, I immediately realized that the website had gzip enabled and that file_get_contents had fetched the compressed page rather than the decompressed one. (I don't know whether file_get_contents can be given a parameter when requesting the page so that it directly receives a page that has not been gzip-compressed.)
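One option worth trying for the open question above is to send an `Accept-Encoding: identity` request header through a stream context, which asks the server for an uncompressed body. This is only a sketch: `buildIdentityContext` is a hypothetical helper, and a server that compresses unconditionally may ignore the header, so a check on the returned bytes is still advisable.

```php
<?php
/**
 * Hypothetical helper: builds a stream context whose HTTP requests
 * carry "Accept-Encoding: identity" (a request not to compress the body).
 */
function buildIdentityContext($timeoutSeconds = 10)
{
    return stream_context_create(array(
        'http' => array(
            'method'  => 'GET',
            'header'  => "Accept-Encoding: identity\r\n",
            'timeout' => $timeoutSeconds,
        ),
    ));
}

$context = buildIdentityContext();

// Usage (performs a network request, so it is left commented out here):
// $html = file_get_contents('http://www.miercn.com', false, $context);
```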

Not long ago I read that a file's type can often be determined by reading its first 2 bytes. Friends in the group also pointed out that the first 2 bytes of a gzip-compressed web page (this one is GBK-encoded) are 1F 8B, so checking those bytes tells you whether the page has been gzip-compressed.
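The two-byte check can also be done on data already in memory. In this sketch, `isGzipData` is a hypothetical helper name; it compares the first two bytes with the gzip magic number `1F 8B`, which identifies the gzip container itself and does not depend on the character encoding of the page inside it.

```php
<?php
/**
 * Hypothetical helper: true if $data starts with the gzip magic number 1F 8B.
 */
function isGzipData($data)
{
    return strlen($data) >= 2 && substr($data, 0, 2) === "\x1f\x8b";
}

// A gzip stream starts with 1F 8B, followed by 08 for the deflate method.
$sample = "\x1f\x8b\x08\x00";
echo bin2hex(substr($sample, 0, 2)), "\n";   // prints "1f8b"
var_dump(isGzipData($sample));               // bool(true)
var_dump(isGzipData('<!DOCTYPE html>'));     // bool(false)
```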

The code is as follows:

    <?php
    // Mier Military Network (miercn.com) serves gzip-compressed pages,
    // so the page fetched directly by file_get_contents is garbled.
    header('Content-Type: text/html; charset=utf-8');

    $url = 'http://www.miercn.com';

    $file = fopen($url, 'rb');
    if ($file === false) {
        exit('Unable to open ' . $url);
    }
    // Read only 2 bytes. If they are 1f 8b (hexadecimal), i.e. 31 139 (decimal), gzip is enabled.
    $bin = fread($file, 2);
    fclose($file);

    $strInfo = @unpack('C2chars', $bin);
    $typeCode = intval($strInfo['chars1'] . $strInfo['chars2']);

    $isGzip = 0;
    switch ($typeCode) {
        case 31139: // 31 (0x1f) followed by 139 (0x8b)
            $isGzip = 1;
            break;
        default:
            $isGzip = 0;
    }

    // When the page is gzip-compressed, read it through the compress.zlib:// wrapper.
    $url = $isGzip ? 'compress.zlib://' . $url : $url;
    $mierHtml = file_get_contents($url); // fetch the Mier Military Network page
    $mierHtml = iconv('gbk', 'utf-8', $mierHtml); // convert from GBK to UTF-8
    echo $mierHtml;
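The code above requests the page twice, once to read two bytes and once to fetch the content. A possible alternative, sketched here under the assumption that the zlib extension is available (`gzdecode` requires PHP 5.4 or later), is to fetch the body once and decompress it in memory when the magic bytes match. `decodeIfGzip` is a hypothetical helper, not part of the original code.

```php
<?php
/**
 * Hypothetical helper: returns the decompressed body if $body is gzip data,
 * otherwise returns $body unchanged. Returns false if decompression fails.
 */
function decodeIfGzip($body)
{
    if (strlen($body) >= 2 && substr($body, 0, 2) === "\x1f\x8b") {
        return gzdecode($body);
    }
    return $body;
}

// Self-contained demonstration: compress a string, then run it through the helper.
$original   = '<html><body>hello</body></html>';
$compressed = gzencode($original);
var_dump(decodeIfGzip($compressed) === $original);
var_dump(decodeIfGzip($original) === $original);

// Usage against the real site (network request, left commented out):
// $body = file_get_contents('http://www.miercn.com');
// $html = iconv('gbk', 'utf-8', decodeIfGzip($body));
```

The trade-off is that the whole response is held in memory before decompression, which is usually acceptable for a single HTML page.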

http://www.bkjia.com/PHPjc/327839.html

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

CakePHP Project Configuration

Sep 10, 2024 pm 05:25 PM

CakePHP Project Configuration

Sep 10, 2024 pm 05:25 PM

In this chapter, we will understand the Environment Variables, General Configuration, Database Configuration and Email Configuration in CakePHP.

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 brings several new features, security improvements, and performance improvements with healthy amounts of feature deprecations and removals. This guide explains how to install PHP 8.4 or upgrade to PHP 8.4 on Ubuntu, Debian, or their derivati

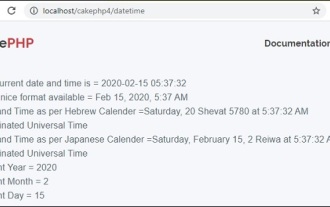

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

To work with date and time in cakephp4, we are going to make use of the available FrozenTime class.

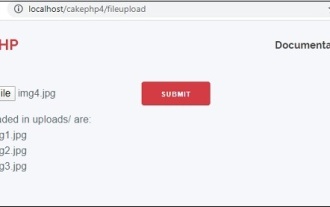

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

To work on file upload we are going to use the form helper. Here, is an example for file upload.

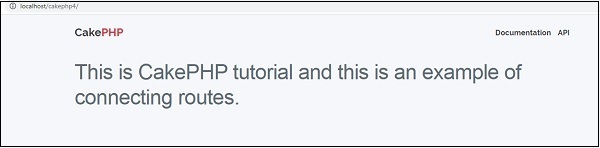

CakePHP Routing

Sep 10, 2024 pm 05:25 PM

CakePHP Routing

Sep 10, 2024 pm 05:25 PM

In this chapter, we are going to learn the following topics related to routing ?

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

CakePHP is an open-source framework for PHP. It is intended to make developing, deploying and maintaining applications much easier. CakePHP is based on a MVC-like architecture that is both powerful and easy to grasp. Models, Views, and Controllers gu

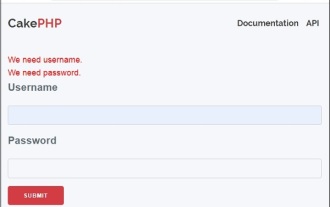

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

Validator can be created by adding the following two lines in the controller.

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

Visual Studio Code, also known as VS Code, is a free source code editor — or integrated development environment (IDE) — available for all major operating systems. With a large collection of extensions for many programming languages, VS Code can be c