Using get_htmls, a single-page parallel collection function for curl-based data collection


In the first article we used get_html() to implement simple data collection. Because the pages are fetched one after another, the total transfer time is the sum of every page's download time: if one page takes 1 second, 10 pages take 10 seconds. Fortunately, curl also supports parallel transfers (in PHP, through the curl_multi_* functions).
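As a rough illustration of why parallel collection helps, the sketch below models the two cases under an idealized assumption: pages download independently, and bandwidth and server limits are ignored, so a fully parallel batch finishes when its slowest page finishes. The helper names sequential_seconds() and parallel_seconds() are hypothetical and exist only for this comparison.

```php
<?php
// Idealized timing model (an assumption, not a measurement).
// Sequential: each page waits for the previous one, so the times add up.
function sequential_seconds(array $pageSeconds): float {
    return (float) array_sum($pageSeconds);
}

// Fully parallel: all pages start together, so the slowest page sets the total.
function parallel_seconds(array $pageSeconds): float {
    return $pageSeconds ? (float) max($pageSeconds) : 0.0;
}

$pages = array_fill(0, 10, 1.0); // 10 pages at 1 second each
printf("sequential: %.1f s, parallel (ideal): %.1f s\n",
    sequential_seconds($pages), parallel_seconds($pages));
```

In practice the parallel figure is a lower bound; network bandwidth and the target server's limits usually push it higher.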

To write a parallel collection function, you first have to understand what kind of pages you want to collect and what kind of requests those pages require. Only then can you write a function general enough for common use.

Functional requirements analysis:

What should it return?

An array holding the HTML of each collected page.

What parameters should it take?

When writing get_html(), we saw that an $options array can be used to pass additional curl parameters, so a function that collects several pages at once must keep this feature.

What types should the parameters be?

Whether you are fetching the HTML of a web page or calling an Internet API, GET and POST requests usually hit the same page or endpoint, only with different parameters. So the parameter types would be:

get_htmls($url,$options);

$url is a string.

$options is a two-dimensional array in which each page's parameters form one array.

At this point the problem seems solved. However, after searching the whole curl manual I could not find any option for passing GET parameters separately; they have to be part of the URL itself. So for GET requests $url has to be passed as an array of URLs, and a $method parameter is added to tell GET and POST apart.
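To make the two calling conventions concrete, here is a minimal sketch that only turns the arguments into a list of (URL, curl options) pairs, one per request, without sending anything. The helper name build_requests() and its result shape are hypothetical; it mirrors the design described above: GET takes many URLs that share one option array, POST takes one URL and one option array per request.

```php
<?php
// Hypothetical helper: normalize both calling conventions into one request list.
function build_requests($urls, array $options, string $method): array {
    $requests = array();
    if ($method === 'get') {
        // GET: the parameters already live in each URL; all requests share $options.
        foreach ($urls as $key => $url) {
            $requests[$key] = array('url' => $url, 'options' => $options);
        }
    } elseif ($method === 'post') {
        // POST: a single URL; each entry of $options describes one request body.
        foreach ($options as $key => $option) {
            $option[CURLOPT_POST] = true;
            $requests[$key] = array('url' => $urls, 'options' => $option);
        }
    } else {
        throw new InvalidArgumentException("Unsupported method: $method");
    }
    return $requests;
}

$get = build_requests(array('http://www.baidu.com/s?wd=shili&pn=0&ie=utf-8'), array(), 'get');
```

Seen this way, both cases end up as "one curl handle per list entry", which is exactly what the parallel function needs.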

The function prototype is therefore get_htmls($urls, $options = array(), $method = 'get'). The code is as follows:

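function get_htmls($urls, $options = array(), $method = 'get'){
    $mh = curl_multi_init();
    $curls = array(); // one curl handle per request, keyed like the input
    $htmls = array(); // collected HTML, keyed like the input
    if($method == 'get'){ // GET is the most common way to pass values: $urls is an array of URLs sharing $options
        foreach($urls as $key=>$url){
            $ch = curl_init($url);
            $options[CURLOPT_RETURNTRANSFER] = true;
            $options[CURLOPT_TIMEOUT] = 5;
            curl_setopt_array($ch,$options);
            $curls[$key] = $ch;
            curl_multi_add_handle($mh,$curls[$key]);
        }
    }elseif($method == 'post'){ // POST: $urls is a single URL, $options holds one option array per request
        foreach($options as $key=>$option){
            $ch = curl_init($urls);
            $option[CURLOPT_RETURNTRANSFER] = true;
            $option[CURLOPT_TIMEOUT] = 5;
            $option[CURLOPT_POST] = true;
            curl_setopt_array($ch,$option);
            $curls[$key] = $ch;
            curl_multi_add_handle($mh,$curls[$key]);
        }
    }else{
        exit("Parameter error!\n");
    }
    do{
        $mrc = curl_multi_exec($mh,$active);
        curl_multi_select($mh); // wait for activity to reduce CPU load; without it the loop busy-waits
    }while($active && $mrc == CURLM_OK); // stop when all transfers finish or the multi handle reports an error
    foreach($curls as $key=>$ch){
        $html = curl_multi_getcontent($ch);
        curl_multi_remove_handle($mh,$ch);
        curl_close($ch);
        $htmls[$key] = $html;
    }
    curl_multi_close($mh);
    return $htmls;
}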
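The function above does not report failed transfers, so a page that timed out is hard to tell apart from an empty one. The sketch below is a GET-only variant that reads each transfer's libcurl result code with curl_multi_info_read() and turns it into a message with curl_strerror(). The name get_htmls_checked() and the array('html' => ..., 'error' => ...) result shape are hypothetical choices for illustration, not part of the original tutorial.

```php
<?php
// Hypothetical GET-only variant of get_htmls() that also reports per-URL errors.
function get_htmls_checked(array $urls, array $options = array()): array {
    $mh = curl_multi_init();
    $handles = array();
    foreach ($urls as $key => $url) {
        $ch = curl_init($url);
        // Caller options take precedence; otherwise use the tutorial's defaults.
        curl_setopt_array($ch, $options + array(
            CURLOPT_RETURNTRANSFER => true,
            CURLOPT_TIMEOUT => 5,
        ));
        curl_multi_add_handle($mh, $ch);
        $handles[$key] = $ch;
    }

    // Drive all transfers; curl_multi_select() waits for activity instead of spinning.
    do {
        $status = curl_multi_exec($mh, $active);
        if ($active) {
            curl_multi_select($mh, 1.0);
        }
    } while ($active && $status === CURLM_OK);

    // Completion messages carry each transfer's libcurl result code.
    $codes = array();
    while (($info = curl_multi_info_read($mh)) !== false) {
        $key = array_search($info['handle'], $handles, true);
        if ($key !== false) {
            $codes[$key] = $info['result'];
        }
    }

    $results = array();
    foreach ($handles as $key => $ch) {
        $code = isset($codes[$key]) ? $codes[$key] : null;
        $ok = ($code === CURLE_OK);
        $results[$key] = array(
            'html'  => $ok ? curl_multi_getcontent($ch) : null,
            'error' => $ok ? '' : ($code === null ? 'transfer did not finish' : curl_strerror($code)),
        );
        curl_multi_remove_handle($mh, $ch);
    }
    curl_multi_close($mh); // the easy handles are freed when $handles goes out of scope
    return $results;
}
```

Keeping the input keys in the result makes it easy to retry only the entries whose 'error' is non-empty.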

Everyday GET requests are made by changing URL parameters, and since this function is meant for data collection, pages are typically collected by category, so the URLs look like this:

http://www.baidu.com/s?wd=shili&pn=0&ie=utf-8

http://www.baidu.com/s?wd=shili&pn=10&ie=utf-8

http://www.baidu.com/s?wd=shili&pn=20&ie=utf-8

http://www.baidu.com/s?wd=shili&pn=30&ie=utf-8

http://www.baidu.com/s?wd=shili&pn=40&ie=utf-8

These five pages follow a regular pattern: only the value of pn changes, so the URLs can be generated in a loop:

$urls = array();
for($i = 1; $i <= 5; $i++){
    $urls[] = 'http://www.baidu.com/s?wd=shili&pn='.(($i-1)*10).'&ie=utf-8';
}
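The loop above builds each URL by string concatenation, which works as long as no parameter value needs escaping. A sketch of the same URL list built with PHP's http_build_query(), which URL-encodes every value (for example a keyword containing spaces), is shown below; build_page_urls() and its parameters are hypothetical names chosen for this example.

```php
<?php
// Hypothetical helper: generate paginated URLs with http_build_query().
function build_page_urls(string $base, array $query, string $pageParam, int $pages, int $step): array {
    $urls = array();
    for ($i = 0; $i < $pages; $i++) {
        $query[$pageParam] = $i * $step;                           // offsets 0, 10, 20, ...
        $urls[] = $base . '?' . http_build_query($query, '', '&'); // explicit '&' separator
    }
    return $urls;
}

$urls = build_page_urls('http://www.baidu.com/s',
    array('wd' => 'shili', 'pn' => 0, 'ie' => 'utf-8'), 'pn', 5, 10);
```

Because 'pn' already exists in the array, its position is kept, so the generated URLs have the same parameter order as the hand-written ones.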

$option = array();
$option[CURLOPT_USERAGENT] = 'Mozilla/5.0 (Windows NT 6.1; rv:19.0) Gecko/20100101 Firefox/19.0';
$htmls = get_htmls($urls,$option);
foreach($htmls as $html){
    echo $html; // the html is available here for further data processing
}
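As one example of the "data processing" step, the sketch below pulls the &lt;title&gt; out of each collected page with PHP's DOMDocument. The helper name extract_titles() is hypothetical. libxml warnings are collected internally because real-world HTML is rarely valid; note that loadHTML() assumes ISO-8859-1 when a page declares no charset, so such pages may need encoding conversion first.

```php
<?php
// Hypothetical helper: extract the <title> text of each collected page (null if none).
function extract_titles(array $htmls): array {
    $titles = array();
    $previous = libxml_use_internal_errors(true); // keep parser warnings out of the output
    foreach ($htmls as $key => $html) {
        $title = null;
        // Skip failed or empty downloads; loadHTML() rejects empty input.
        if (is_string($html) && $html !== '') {
            $doc = new DOMDocument();
            if ($doc->loadHTML($html)) {
                $node = $doc->getElementsByTagName('title')->item(0);
                $title = $node ? trim($node->textContent) : null;
            }
        }
        $titles[$key] = $title;
    }
    libxml_clear_errors();
    libxml_use_internal_errors($previous);
    return $titles;
}
```

The result keeps the keys of the input array, so titles line up with the URLs that produced them.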

Simulating common POST requests:

Write a post.php file as follows:

<?php
if(isset($_POST['username']) && isset($_POST['password'])){
    echo 'The username is: '.$_POST['username'].' The password is: '.$_POST['password'];
}else{
    echo 'Request error!';
}

Then call it as follows:

$url = 'http://localhost/yourpath/post.php'; // replace with your own path
$options = array();
for($i = 1; $i <= 5; $i++){
    $option = array(); // one option array per POST request
    $option[CURLOPT_POSTFIELDS] = 'username=user'.$i.'&password=pass'.$i;
    $options[] = $option;
}
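Building CURLOPT_POSTFIELDS by concatenation breaks as soon as a value contains a character such as '&' or '='. A minimal sketch that encodes each form with http_build_query() is shown below; build_post_options() is a hypothetical helper. Passing a URL-encoded string sends the body as application/x-www-form-urlencoded, whereas passing a PHP array to CURLOPT_POSTFIELDS would send it as multipart/form-data instead.

```php
<?php
// Hypothetical helper: one curl option array per POST request, with encoded form fields.
function build_post_options(array $forms): array {
    $options = array();
    foreach ($forms as $fields) {
        $options[] = array(
            CURLOPT_POSTFIELDS => http_build_query($fields, '', '&'),
        );
    }
    return $options;
}

$forms = array();
for ($i = 1; $i <= 5; $i++) {
    $forms[] = array('username' => 'user' . $i, 'password' => 'pass' . $i);
}
$options = build_post_options($forms); // same shape as the $options passed to get_htmls() for POST
```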

$htmls = get_htmls($url,$options,'post');
foreach($htmls as $html){
    echo $html; // the html is available here for further data processing
}

With this, the get_htmls function can handle basic data collection tasks.

That's all for today. If anything is poorly written or unclear, please let me know.

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

Table Border in HTML

Sep 04, 2024 pm 04:49 PM

Table Border in HTML

Sep 04, 2024 pm 04:49 PM

Guide to Table Border in HTML. Here we discuss multiple ways for defining table-border with examples of the Table Border in HTML.

Nested Table in HTML

Sep 04, 2024 pm 04:49 PM

Nested Table in HTML

Sep 04, 2024 pm 04:49 PM

This is a guide to Nested Table in HTML. Here we discuss how to create a table within the table along with the respective examples.

HTML margin-left

Sep 04, 2024 pm 04:48 PM

HTML margin-left

Sep 04, 2024 pm 04:48 PM

Guide to HTML margin-left. Here we discuss a brief overview on HTML margin-left and its Examples along with its Code Implementation.

HTML Table Layout

Sep 04, 2024 pm 04:54 PM

HTML Table Layout

Sep 04, 2024 pm 04:54 PM

Guide to HTML Table Layout. Here we discuss the Values of HTML Table Layout along with the examples and outputs n detail.

HTML Ordered List

Sep 04, 2024 pm 04:43 PM

HTML Ordered List

Sep 04, 2024 pm 04:43 PM

Guide to the HTML Ordered List. Here we also discuss introduction of HTML Ordered list and types along with their example respectively

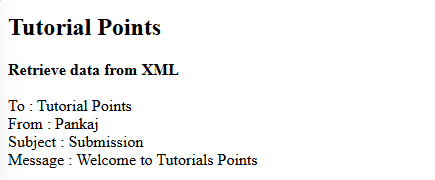

How do you parse and process HTML/XML in PHP?

Feb 07, 2025 am 11:57 AM

How do you parse and process HTML/XML in PHP?

Feb 07, 2025 am 11:57 AM

This tutorial demonstrates how to efficiently process XML documents using PHP. XML (eXtensible Markup Language) is a versatile text-based markup language designed for both human readability and machine parsing. It's commonly used for data storage an

Moving Text in HTML

Sep 04, 2024 pm 04:45 PM

Moving Text in HTML

Sep 04, 2024 pm 04:45 PM

Guide to Moving Text in HTML. Here we discuss an introduction, how marquee tag work with syntax and examples to implement.

HTML onclick Button

Sep 04, 2024 pm 04:49 PM

HTML onclick Button

Sep 04, 2024 pm 04:49 PM

Guide to HTML onclick Button. Here we discuss their introduction, working, examples and onclick Event in various events respectively.