PHP cURL concurrency best practices: code sharing

This article discusses two ways to make concurrent requests with PHP's cURL multi interface and gives a simple performance comparison of the two.

1. The classic cURL concurrency mechanism and its problems

The classic implementation is easy to find online. The following version is adapted from the example in the PHP manual:

```php
function classic_curl($urls, $delay) {
    $queue = curl_multi_init();
    $map = array();

    foreach ($urls as $url) {
        // create a cURL handle
        $ch = curl_init();

        // set the URL and other options
        curl_setopt($ch, CURLOPT_URL, $url);
        curl_setopt($ch, CURLOPT_TIMEOUT, 1);
        curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1);
        curl_setopt($ch, CURLOPT_HEADER, 0);
        curl_setopt($ch, CURLOPT_NOSIGNAL, true);

        // add the handle to the multi queue
        curl_multi_add_handle($queue, $ch);
        $map[$url] = $ch;
    }

    $active = null;

    // start the transfers
    do {
        $mrc = curl_multi_exec($queue, $active);
    } while ($mrc == CURLM_CALL_MULTI_PERFORM);

    // keep driving the transfers until every one of them has finished
    while ($active > 0 && $mrc == CURLM_OK) {
        // wait for activity; some libcurl builds return -1 here, so back off
        // briefly instead of never calling curl_multi_exec again
        if (curl_multi_select($queue, 0.5) == -1) {
            usleep(100);
        }
        do {
            $mrc = curl_multi_exec($queue, $active);
        } while ($mrc == CURLM_CALL_MULTI_PERFORM);
    }

    // only now, after the slowest URL has returned, are responses processed
    $responses = array();
    foreach ($map as $url => $ch) {
        $responses[$url] = callback(curl_multi_getcontent($ch), $delay);
        curl_multi_remove_handle($queue, $ch);
        curl_close($ch);
    }

    curl_multi_close($queue);
    return $responses;
}
```

The classic approach first pushes all URLs into the concurrent queue. It then runs the transfers and waits until every request has completed before parsing the data or doing any other follow-up work. In practice, network conditions mean that some URLs return before others. Even so, classic cURL concurrency cannot start processing until the slowest URL has returned, and the CPU sits idle for the whole wait. With a short URL queue this idle time is tolerable. With a long queue it becomes unacceptable.
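The cost of that waiting can be made concrete with a toy timing model. This is not a benchmark. It assumes hypothetical arrival times for each response and a fixed, single-threaded processing cost per response. It also ignores network effects, such as transfers only being driven forward while `curl_multi_exec` runs. The "classic" function starts processing after the last arrival. The "rolling" function processes each response as soon as it has arrived and the CPU is free.

```php
<?php
// Toy model only: arrival times (seconds) and the per-response processing
// cost are hypothetical inputs, not measurements.

// Classic: nothing is processed until the slowest response has arrived,
// then all responses are processed one after another.
function classic_finish_time(array $arrivals, float $cost): float
{
    return max($arrivals) + count($arrivals) * $cost;
}

// Rolling: each response is processed as soon as it has arrived and the
// single CPU is free, so processing overlaps with waiting for slower URLs.
function rolling_finish_time(array $arrivals, float $cost): float
{
    sort($arrivals);
    $cpuFreeAt = 0.0;
    foreach ($arrivals as $t) {
        $cpuFreeAt = max($cpuFreeAt, $t) + $cost;
    }
    return $cpuFreeAt;
}

$arrivals = [0.10, 0.15, 0.20, 0.40, 0.45, 0.90]; // hypothetical, 6 URLs
$cost = 0.05; // hypothetical 50 ms of processing per response

printf("classic: %.2f s\n", classic_finish_time($arrivals, $cost)); // classic: 1.20 s
printf("rolling: %.2f s\n", rolling_finish_time($arrivals, $cost)); // rolling: 0.95 s
```

In this model, the classic approach pays for all six processing steps after the slowest arrival. The rolling approach pays only for the last one. When the processing cost is zero, both finish at the last arrival time.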

2. The improved Rolling cURL concurrency method

A closer look shows that classic cURL concurrency still leaves room for optimization. As soon as any URL's request completes, it can be processed immediately while the remaining URLs are still in flight. There is no need to wait for the slowest one before starting any processing, which avoids leaving the CPU idle. The implementation is as follows:

```php
function rolling_curl($urls, $delay) {
    $queue = curl_multi_init();

    foreach ($urls as $url) {
        $ch = curl_init();
        curl_setopt($ch, CURLOPT_URL, $url);
        curl_setopt($ch, CURLOPT_TIMEOUT, 1);
        curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1);
        curl_setopt($ch, CURLOPT_HEADER, 0);
        curl_setopt($ch, CURLOPT_NOSIGNAL, true);
        // tag the handle with its URL so the response can be keyed by URL when it
        // completes (portable, unlike using (string) $ch, which fails on PHP 8)
        curl_setopt($ch, CURLOPT_PRIVATE, $url);
        curl_multi_add_handle($queue, $ch);
    }

    $responses = array();

    do {
        while (($code = curl_multi_exec($queue, $active)) == CURLM_CALL_MULTI_PERFORM) ;
        if ($code != CURLM_OK) { break; }

        // a request has just completed; find out which one
        while ($done = curl_multi_info_read($queue)) {
            $ch = $done['handle'];
            // get the info, error and content returned by the request
            $info = curl_getinfo($ch);
            $error = curl_error($ch);
            $results = callback(curl_multi_getcontent($ch), $delay);
            $responses[curl_getinfo($ch, CURLINFO_PRIVATE)] = compact('info', 'error', 'results');

            // remove the cURL handle that just completed
            curl_multi_remove_handle($queue, $ch);
            curl_close($ch);
        }

        // block until there is activity; errors are reported by curl_multi_exec.
        // Some libcurl builds return -1 from select, so back off briefly then.
        if ($active > 0 && curl_multi_select($queue, 0.5) == -1) {
            usleep(100);
        }
    } while ($active);

    curl_multi_close($queue);
    return $responses;
}
```

3. Performance comparison of the two concurrency implementations

The before-and-after performance test was run on a Linux host, using the following URL queue:

http://item.taobao.com/item.htm?id=14392877692

http://item.taobao.com/item.htm?id=16231676302

http://item.taobao.com/item.htm?id=17037160462

http://item.taobao.com/item.htm?id=5522416710

http://item.taobao.com/item.htm?id=16551116403

http://item.taobao.com/item.htm?id=14088310973

A brief note on the experimental design and the format of the results:

- To make the results reliable, each set of experiments was repeated 20 times.
- In a single run, given the same set of URLs, the time (in seconds) taken by each of the two mechanisms was measured: Classic (the classic concurrency mechanism) and Rolling (the improved one).
- The faster mechanism is the Winner. The time it saves (Excellence, in seconds) and the relative performance improvement (Excel. %) are then computed.
- To stay close to real requests while keeping the experiment simple, the returned results were processed only with a simple regular expression match, with no other complex operations.
- To measure how the result-processing callback affects the comparison, usleep can be used to simulate the heavier data-processing logic found in practice, such as extraction, word segmentation, or writing to files or databases.
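The bookkeeping for a single run can be sketched as below. The helper name `compare_run` is hypothetical. The article does not state the denominator for Excel. %, so this sketch assumes it is the time saved divided by the slower mechanism's time. The commented-out `microtime(true)` lines show one way to time `classic_curl` and `rolling_curl`, defined earlier in this article.

```php
<?php
// Hypothetical helper: summarizes one run of the Classic vs Rolling comparison.
// Assumption: "Excel. %" = time saved / slower mechanism's time * 100.
function compare_run(float $classic, float $rolling): array
{
    if ($rolling < $classic) {
        $winner = 'Rolling';
    } elseif ($classic < $rolling) {
        $winner = 'Classic';
    } else {
        $winner = 'Tie';
    }
    $slower = max($classic, $rolling);
    $excellence = abs($classic - $rolling); // seconds saved by the winner
    $percent = $slower > 0 ? $excellence / $slower * 100 : 0.0;
    return ['winner' => $winner, 'excellence' => $excellence, 'excel_pct' => $percent];
}

// Timing one run (needs network access and the functions defined earlier):
// $t = microtime(true); classic_curl($urls, $delay); $classic = microtime(true) - $t;
// $t = microtime(true); rolling_curl($urls, $delay); $rolling = microtime(true) - $t;

$r = compare_run(2.0, 1.5); // hypothetical timings, not results from the article
printf("%s saved %.2f s (%.0f%%)\n", $r['winner'], $r['excellence'], $r['excel_pct']);
// prints: Rolling saved 0.50 s (25%)
```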

The callback function used in the performance test is:

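```php
function callback($data, $delay) {
    // simple extraction: capture the text of each <h3> element
    // (the tag is chosen for illustration)
    preg_match_all('/<h3>(.+)<\/h3>/iU', $data, $matches);

    // simulate heavier processing; $delay is in microseconds (5000 = 5 ms)
    usleep($delay);

    return compact('data', 'matches');
}
```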

With no delay in the data-processing callback, Rolling cURL is slightly faster, but the improvement is not significant.

With a 5 ms delay in the data-processing callback, Rolling cURL wins, with a performance improvement of about 40%.

The comparison above suggests that Rolling cURL is the better choice when processing a queue of URLs concurrently. When the number of concurrent requests is very large (1000+), you can cap the length of the concurrent queue, for example at 20. Whenever a URL returns and has been processed, a URL that has not yet been requested is immediately added to the queue. Code written this way is more robust and will not hang or crash when the number of requests is large. For a detailed implementation, see: http://code.google.com/p/rolling-curl/
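The bounded-window idea can be sketched as follows. This is not the rolling-curl project's code. The function `rolling_curl_window`, its `$window` and `$onDone` parameters, and the 10-second timeout are assumptions for illustration. At most `$window` transfers are in flight at once, and the next pending URL starts each time one finishes. The loop tracks its own in-flight count rather than `$active`, so it does not exit before newly added handles have been started.

```php
<?php
// Sketch (hypothetical names): Rolling cURL with a bounded window of
// concurrent transfers; each finished transfer is passed to $onDone.
function rolling_curl_window(array $urls, int $window, callable $onDone): void
{
    $window = max(1, $window);
    $queue = curl_multi_init();
    $pending = array_values($urls);
    $inFlight = 0;

    // create a handle for one URL and add it to the multi handle
    $add = function (string $url) use ($queue, &$inFlight) {
        $ch = curl_init($url);
        curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);
        curl_setopt($ch, CURLOPT_TIMEOUT, 10); // assumed timeout
        curl_setopt($ch, CURLOPT_NOSIGNAL, true);
        curl_setopt($ch, CURLOPT_PRIVATE, $url); // remember which URL this is
        curl_multi_add_handle($queue, $ch);
        $inFlight++;
    };

    // fill the initial window
    while ($inFlight < $window && $pending) {
        $add(array_shift($pending));
    }

    do {
        do {
            $code = curl_multi_exec($queue, $active);
        } while ($code == CURLM_CALL_MULTI_PERFORM);
        if ($code != CURLM_OK) {
            break;
        }

        // handle every transfer that has finished since the last check
        while ($done = curl_multi_info_read($queue)) {
            $ch = $done['handle'];
            $onDone(
                curl_getinfo($ch, CURLINFO_PRIVATE),
                curl_multi_getcontent($ch),
                curl_error($ch)
            );
            curl_multi_remove_handle($queue, $ch);
            curl_close($ch);
            $inFlight--;

            // refill the window with the next URL not yet requested
            if ($pending) {
                $add(array_shift($pending));
            }
        }

        // wait for activity; back off briefly if select reports -1
        if ($inFlight > 0 && curl_multi_select($queue, 0.5) == -1) {
            usleep(1000);
        }
    } while ($inFlight > 0);

    curl_multi_close($queue);
}
```

For example, `rolling_curl_window($urls, 20, function ($url, $body, $error) { /* parse $body */ });` would fetch a long URL list with at most 20 requests in flight. Because the callback runs as each response arrives, memory use stays proportional to the window rather than to the whole list, as long as the callback does not keep every body.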

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

CakePHP Project Configuration

Sep 10, 2024 pm 05:25 PM

CakePHP Project Configuration

Sep 10, 2024 pm 05:25 PM

In this chapter, we will understand the Environment Variables, General Configuration, Database Configuration and Email Configuration in CakePHP.

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 brings several new features, security improvements, and performance improvements with healthy amounts of feature deprecations and removals. This guide explains how to install PHP 8.4 or upgrade to PHP 8.4 on Ubuntu, Debian, or their derivati

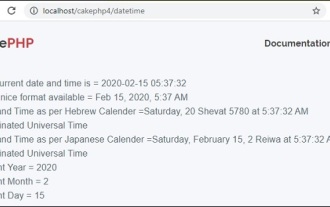

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

To work with date and time in cakephp4, we are going to make use of the available FrozenTime class.

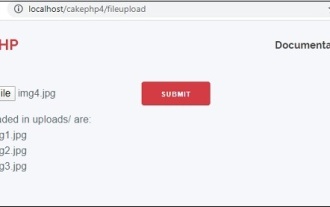

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

To work on file upload we are going to use the form helper. Here, is an example for file upload.

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

CakePHP is an open-source framework for PHP. It is intended to make developing, deploying and maintaining applications much easier. CakePHP is based on a MVC-like architecture that is both powerful and easy to grasp. Models, Views, and Controllers gu

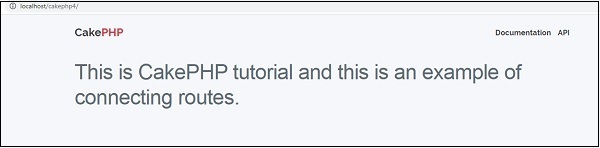

CakePHP Routing

Sep 10, 2024 pm 05:25 PM

CakePHP Routing

Sep 10, 2024 pm 05:25 PM

In this chapter, we are going to learn the following topics related to routing ?

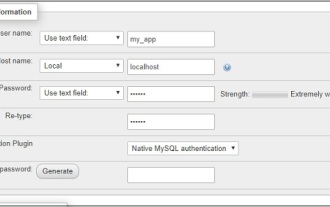

CakePHP Working with Database

Sep 10, 2024 pm 05:25 PM

CakePHP Working with Database

Sep 10, 2024 pm 05:25 PM

Working with database in CakePHP is very easy. We will understand the CRUD (Create, Read, Update, Delete) operations in this chapter.

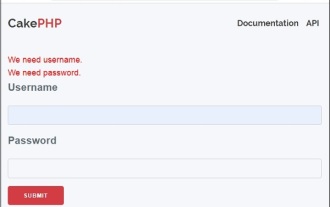

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

Validator can be created by adding the following two lines in the controller.