javascript - How can the front end prevent content scraping as much as possible?

How can the front end prevent content scraping as much as possible? What are some good ways to implement this?

Reply content:

Know yourself and know your enemy.

Go learn how to crawl websites and how to defeat anti-crawler measures, then come up with countermeasures one by one, haha.

I usually check the Referer header, but it doesn't help much...
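For reference, a server-side Referer check usually amounts to something like the minimal Python sketch below. The host names in `ALLOWED_HOSTS` and the function name are hypothetical. It also shows why the check is weak: the header is supplied by the client, so a scraper can simply send a value that passes.

```python
from typing import Mapping
from urllib.parse import urlparse

# Hypothetical list of hosts that count as "our own pages".
ALLOWED_HOSTS = {"example.com", "www.example.com"}


def referer_looks_valid(headers: Mapping[str, str]) -> bool:
    """Return True if the Referer header points at one of ALLOWED_HOSTS.

    Weak signal only: the client controls this header, so any scraper
    can send a value that passes.
    """
    # HTTP header names are case-insensitive, so match "Referer" loosely.
    referer = next((v for k, v in headers.items() if k.lower() == "referer"), "")
    if not referer:
        return False
    host = urlparse(referer).hostname or ""
    return host in ALLOWED_HOSTS
```

Note that the last case in practice (a scraper setting `Referer: https://www.example.com/` itself) passes just as a real browser would.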

Has no one done any research?

Stop crawlers from crawling? It seems there is no perfect solution.

There is no perfect method. There are some auxiliary measures, such as blocking an IP based on how many requests it makes, for example 100 visits within a short period. But crawlers can use proxies, so this is of little use and only stops beginners.
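Per-IP throttling like this is often implemented as a sliding-window counter. Below is a minimal in-memory Python sketch, assuming a single server process; the class name, the 60-second window and the injectable `clock` are illustrative choices, not something the reply specifies (it only says "a short period"). As the reply points out, rotating proxies spread requests across many IPs and sidestep it.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Refuse requests from an IP once it has made `limit` requests
    within the last `window` seconds (in-memory, single process)."""

    def __init__(self, limit=100, window=60.0, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock  # injectable so the logic can be tested
        self.hits = defaultdict(deque)  # ip -> timestamps of accepted requests

    def allow(self, ip):
        now = self.clock()
        q = self.hits[ip]
        # Forget requests that are older than the window.
        while q and now - q[0] >= self.window:
            q.popleft()
        if len(q) >= self.limit:
            return False  # over the limit: block (e.g. answer HTTP 429)
        q.append(now)
        return True
```

Refused requests are not recorded, so a blocked IP is let through again once its older requests age out of the window.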

You could also limit concurrency, for example allowing each client only 10 concurrent requests.
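A per-client concurrency cap can be sketched by counting in-flight requests per key and refusing new ones at the limit. This is a hypothetical, thread-safe, single-process Python sketch; keying by IP is an assumption, and the default of 10 comes from the reply.

```python
import threading
from collections import defaultdict
from contextlib import contextmanager


class ConcurrencyLimiter:
    """Allow at most `max_in_flight` simultaneous requests per client key."""

    def __init__(self, max_in_flight=10):
        self.max_in_flight = max_in_flight
        self._lock = threading.Lock()
        self._in_flight = defaultdict(int)  # client -> requests being served

    def try_acquire(self, client):
        with self._lock:
            if self._in_flight[client] >= self.max_in_flight:
                return False
            self._in_flight[client] += 1
            return True

    def release(self, client):
        with self._lock:
            self._in_flight[client] -= 1
            if self._in_flight[client] <= 0:
                del self._in_flight[client]  # keep the table small

    @contextmanager
    def slot(self, client):
        """Yield True if a slot was granted (released on exit), else False."""
        granted = self.try_acquire(client)
        try:
            yield granted
        finally:
            if granted:
                self.release(client)
```

A request handler would wrap its work in `with limiter.slot(ip) as ok:` and reject the request when `ok` is False.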

In fact it's the same story: IP proxies plus multithreading still get around the concurrency limit, so it only stops beginners.

Common methods to prevent front-end collection include:

Load the data via AJAX and render it in the browser; typical scrapers do not execute JavaScript.

Insert junk characters into the text, but hide them with div or other tags so they are not displayed (for example by making them invisible, giving them the smallest font size, or using the same color as the background). The official website of "Reader" used this method at one time.
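The hidden-junk-character trick can be sketched as a server-side function that inserts random characters wrapped in a hidden tag. In this Python sketch the class name `nx`, the character pool and the use of `span` are assumptions; any of the hiding methods listed above would work in the stylesheet. A scraper that naively strips tags gets garbled text while the browser shows the original. Scrapers that render the page or filter out the hidden class are not fooled.

```python
import html
import random
import re

# Hypothetical class name; the page's stylesheet would need a rule such as
#   .nx { display: none; }
# (or font-size: 0, or a color matching the background).
DECOY_CLASS = "nx"
DECOY_POOL = "abcdefghijklmnopqrstuvwxyz0123456789"


def add_decoys(text, rate=0.3, seed=None):
    """Escape `text` for HTML and insert hidden junk spans after some characters."""
    rng = random.Random(seed)
    out = []
    for ch in text:
        out.append(html.escape(ch))
        if rng.random() < rate:
            junk = "".join(rng.choice(DECOY_POOL) for _ in range(rng.randint(1, 3)))
            out.append(f'<span class="{DECOY_CLASS}">{junk}</span>')
    return "".join(out)


def naive_scrape(markup):
    """What a scraper that just strips tags would extract."""
    return html.unescape(re.sub(r"<[^>]+>", "", markup))


def visible_text(markup):
    """What a browser shows once the hidden spans are not rendered."""
    hidden = re.compile(r'<span class="%s">[^<]*</span>' % re.escape(DECOY_CLASS))
    return html.unescape(hidden.sub("", markup))
```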

Whoever manages to stop scraping purely on the front end, haha, deserves the Nobel Prize in Physics. -- signed, PhantomJS

Add hidden elements, such as a link whose URL a human visitor never sees. Whoever requests that URL is a bot.
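A minimal honeypot along those lines, sketched in Python: every page carries a link humans cannot see, and any IP that requests its URL is flagged and refused from then on. The trap path, the class and the in-memory set are hypothetical. It is common advice to also disallow the trap path in robots.txt, so that search-engine crawlers which honor robots.txt do not get blocked.

```python
# Hypothetical trap URL. Consider also listing it under "Disallow:" in
# robots.txt so crawlers that honor robots.txt never request it.
TRAP_PATH = "/_hp/7c1e"

# Link injected into every page; humans cannot see or tab to it.
TRAP_LINK_HTML = (
    f'<a href="{TRAP_PATH}" style="display:none" '
    'tabindex="-1" aria-hidden="true">more</a>'
)


class Honeypot:
    """Flag any client that requests the hidden trap URL."""

    def __init__(self, trap_path=TRAP_PATH):
        self.trap_path = trap_path
        self.flagged = set()  # in-memory only; a real site would persist this

    def allow(self, ip, path):
        """Record trap hits and return False for flagged clients."""
        if path == self.trap_path:
            self.flagged.add(ip)
        return ip not in self.flagged
```

Crawlers that skip `display:none` links will avoid the trap, so this only catches scrapers that follow every link they find.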

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

CakePHP Project Configuration

Sep 10, 2024 pm 05:25 PM

CakePHP Project Configuration

Sep 10, 2024 pm 05:25 PM

In this chapter, we will understand the Environment Variables, General Configuration, Database Configuration and Email Configuration in CakePHP.

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 brings several new features, security improvements, and performance improvements with healthy amounts of feature deprecations and removals. This guide explains how to install PHP 8.4 or upgrade to PHP 8.4 on Ubuntu, Debian, or their derivati

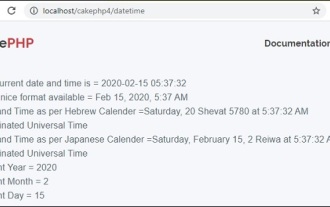

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

To work with date and time in cakephp4, we are going to make use of the available FrozenTime class.

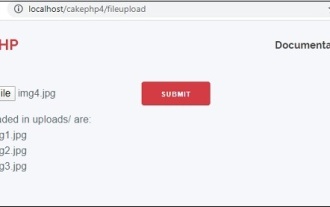

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

To work on file upload we are going to use the form helper. Here, is an example for file upload.

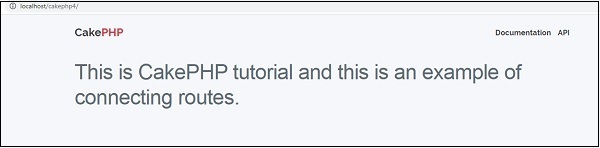

CakePHP Routing

Sep 10, 2024 pm 05:25 PM

CakePHP Routing

Sep 10, 2024 pm 05:25 PM

In this chapter, we are going to learn the following topics related to routing ?

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

CakePHP is an open-source framework for PHP. It is intended to make developing, deploying and maintaining applications much easier. CakePHP is based on a MVC-like architecture that is both powerful and easy to grasp. Models, Views, and Controllers gu

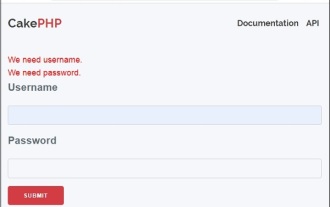

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

Validator can be created by adding the following two lines in the controller.

CakePHP Working with Database

Sep 10, 2024 pm 05:25 PM

CakePHP Working with Database

Sep 10, 2024 pm 05:25 PM

Working with database in CakePHP is very easy. We will understand the CRUD (Create, Read, Update, Delete) operations in this chapter.