SQL scheduled update optimization issues

A mysql data table contains a large amount of data, such as 10 million items, occupying 5G space. Insert data into it. How to regularly update the status of the newly inserted data to make it more efficient.

For example: I inserted 1000 items into it and updated the status of these 1000 items every 10 minutes. How to improve efficiency

Reply content:

A mysql data table contains a large amount of data, such as 10 million items, occupying 5G space. Insert data into it. How to regularly update the status of the newly inserted data to make it more efficient.

For example: I inserted 1000 items into it and updated the status of these 1000 items every 10 minutes. How to improve efficiency

Use something like cron job

I don’t know what status you are talking about

If it is a certain field in that table, just update it according to the primary key

The amount of table data is large, and the speed of update is related to the table index. If the updated field does not involve an index, and it is updated according to the primary key, it should be very fast, even if it is tens of millions of data

If you want to query the 1,000 new items among the 10 million, it may indeed be slow, depending on whether your query conditions are correctly indexed

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 brings several new features, security improvements, and performance improvements with healthy amounts of feature deprecations and removals. This guide explains how to install PHP 8.4 or upgrade to PHP 8.4 on Ubuntu, Debian, or their derivati

How to fix mysql_native_password not loaded errors on MySQL 8.4

Dec 09, 2024 am 11:42 AM

How to fix mysql_native_password not loaded errors on MySQL 8.4

Dec 09, 2024 am 11:42 AM

One of the major changes introduced in MySQL 8.4 (the latest LTS release as of 2024) is that the "MySQL Native Password" plugin is no longer enabled by default. Further, MySQL 9.0 removes this plugin completely. This change affects PHP and other app

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

Visual Studio Code, also known as VS Code, is a free source code editor — or integrated development environment (IDE) — available for all major operating systems. With a large collection of extensions for many programming languages, VS Code can be c

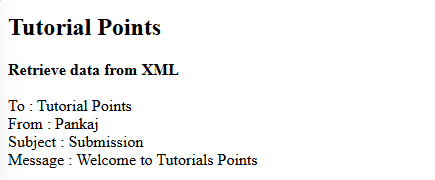

How do you parse and process HTML/XML in PHP?

Feb 07, 2025 am 11:57 AM

How do you parse and process HTML/XML in PHP?

Feb 07, 2025 am 11:57 AM

This tutorial demonstrates how to efficiently process XML documents using PHP. XML (eXtensible Markup Language) is a versatile text-based markup language designed for both human readability and machine parsing. It's commonly used for data storage an

PHP Program to Count Vowels in a String

Feb 07, 2025 pm 12:12 PM

PHP Program to Count Vowels in a String

Feb 07, 2025 pm 12:12 PM

A string is a sequence of characters, including letters, numbers, and symbols. This tutorial will learn how to calculate the number of vowels in a given string in PHP using different methods. The vowels in English are a, e, i, o, u, and they can be uppercase or lowercase. What is a vowel? Vowels are alphabetic characters that represent a specific pronunciation. There are five vowels in English, including uppercase and lowercase: a, e, i, o, u Example 1 Input: String = "Tutorialspoint" Output: 6 explain The vowels in the string "Tutorialspoint" are u, o, i, a, o, i. There are 6 yuan in total

7 PHP Functions I Regret I Didn't Know Before

Nov 13, 2024 am 09:42 AM

7 PHP Functions I Regret I Didn't Know Before

Nov 13, 2024 am 09:42 AM

If you are an experienced PHP developer, you might have the feeling that you’ve been there and done that already.You have developed a significant number of applications, debugged millions of lines of code, and tweaked a bunch of scripts to achieve op

Top 10 PHP CMS Platforms For Developers in 2024

Dec 05, 2024 am 10:29 AM

Top 10 PHP CMS Platforms For Developers in 2024

Dec 05, 2024 am 10:29 AM

CMS stands for Content Management System. It is a software application or platform that enables users to create, manage, and modify digital content without requiring advanced technical knowledge. CMS allows users to easily create and organize content

How to Add Elements to the End of an Array in PHP

Feb 07, 2025 am 11:17 AM

How to Add Elements to the End of an Array in PHP

Feb 07, 2025 am 11:17 AM

Arrays are linear data structures used to process data in programming. Sometimes when we are processing arrays we need to add new elements to the existing array. In this article, we will discuss several ways to add elements to the end of an array in PHP, with code examples, output, and time and space complexity analysis for each method. Here are the different ways to add elements to an array: Use square brackets [] In PHP, the way to add elements to the end of an array is to use square brackets []. This syntax only works in cases where we want to add only a single element. The following is the syntax: $array[] = value; Example