A web crawler is a program that collects data from the Internet, either broadly or from specific targets. More precisely, it fetches the HTML of pages on particular websites. A website has many pages, and we cannot know all of their URLs in advance, so we need a strategy for reaching every HTML page on the site. The usual approach is to start from an entry page. Each page typically links to other pages, so the crawler extracts those URLs from the current page and adds them to its crawl queue. It then repeats the same process on each new page. This is essentially a depth-first or breadth-first traversal of the site's link graph.
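The traversal described above can be sketched with Python's standard library. This is a minimal breadth-first crawler, not Scrapy code, and it rests on a few assumptions. The caller supplies the fetch function; here a dictionary stands in for a real website, so nothing touches the network. The names bfs_crawl, extract_links, LinkParser and SITE are hypothetical. A seen set prevents the same URL from being queued twice.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkParser(HTMLParser):
    """Collects the href values of <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def extract_links(base_url, html):
    """Return absolute URLs for every link found in html."""
    parser = LinkParser()
    parser.feed(html)
    return [urljoin(base_url, href) for href in parser.links]


def bfs_crawl(entry_url, fetch, max_pages=100):
    """Breadth-first crawl; fetch(url) returns the page HTML or None."""
    queue = deque([entry_url])  # the crawl queue
    seen = {entry_url}          # URLs already queued
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()   # FIFO order gives breadth-first traversal
        html = fetch(url)
        if html is None:
            continue
        visited.append(url)
        for link in extract_links(url, html):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


# A tiny in-memory "website" standing in for real HTTP requests.
SITE = {
    "http://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "http://example.com/a": '<a href="/c">C</a> <a href="/">home</a>',
    "http://example.com/b": '<a href="/c">C</a>',
    "http://example.com/c": "no links here",
}

print(bfs_crawl("http://example.com/", SITE.get))
# ['http://example.com/', 'http://example.com/a', 'http://example.com/b', 'http://example.com/c']
```

If you replace queue.popleft() with queue.pop(), the deque acts as a stack, and the crawl visits pages in a depth-first-style order instead.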

Scrapy is a crawler framework written in pure Python on top of Twisted. To build a crawler that scrapes web content and images, users only need to customize a few modules, which makes it very convenient.

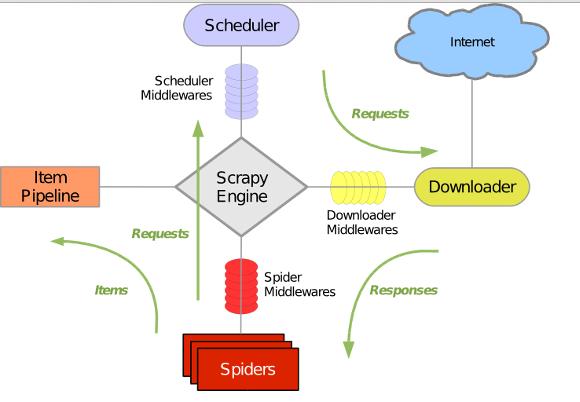

Scrapy uses Twisted, an asynchronous networking library, to handle network communication. Its architecture is clean and offers a variety of middleware hooks, so it can be adapted flexibly to different needs. The overall architecture is shown in the figure:

The green lines show the direction of data flow. Starting from the initial URLs, the Scheduler hands requests to the Downloader. The downloaded pages are then passed to the Spider for analysis. The Spider produces two kinds of results. The first kind is links that need to be crawled further, such as a "next page" link; these go back to the Scheduler. The second kind is data to be saved, which is sent to the Item Pipeline. The pipeline handles post-processing such as detailed analysis, filtering and storage. Various middleware can also be installed along the data flow to perform any additional processing that is needed.
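To make this data flow concrete, here is a toy model in plain Python. It is not Scrapy's engine code, only an illustration under simplifying assumptions: it is synchronous, has no middleware, and replaces downloads with a dictionary lookup. The names run_engine, Request, Item, PAGES and parse_listing are hypothetical. The spider callback yields two kinds of result. New requests go back to the scheduler, and items go to the pipeline.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Request:
    url: str


@dataclass
class Item:
    data: dict


def run_engine(start_urls, download, parse, pipeline):
    """Toy, synchronous model of the data flow (not Scrapy's real engine)."""
    scheduler = deque(Request(url) for url in start_urls)  # Scheduler queue
    seen = set(start_urls)
    while scheduler:
        request = scheduler.popleft()
        response = download(request.url)              # Downloader
        for result in parse(request.url, response):   # Spider
            if isinstance(result, Request):           # more links: back to the Scheduler
                if result.url not in seen:
                    seen.add(result.url)
                    scheduler.append(result)
            else:                                     # scraped data: to the Item Pipeline
                pipeline(result)


# Two fake listing pages; "next" plays the role of a "next page" link.
PAGES = {
    "page1": {"jobs": ["engineer", "designer"], "next": "page2"},
    "page2": {"jobs": ["manager"], "next": None},
}


def parse_listing(url, response):
    for job in response["jobs"]:
        yield Item({"name": job, "source": url})
    if response["next"]:
        yield Request(response["next"])


saved = []
run_engine(["page1"], PAGES.__getitem__, parse_listing, saved.append)
print([item.data["name"] for item in saved])  # ['engineer', 'designer', 'manager']
```

Scrapy itself runs this loop asynchronously on Twisted, so many downloads can be in flight at once. The sequential loop here only shows where each kind of result goes.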

I assume you already have Scrapy installed. If you don't have it installed, you can refer to this article.

In this article, we will learn how to use Scrapy to build a crawler that scrapes content from a specified website. The process has four steps:

1. Create a new Scrapy project

2. Define the Items you want to extract from the web pages

3. Implement a Spider class that crawls URLs and extracts Items

4. Implement an Item Pipeline class that stores the Items

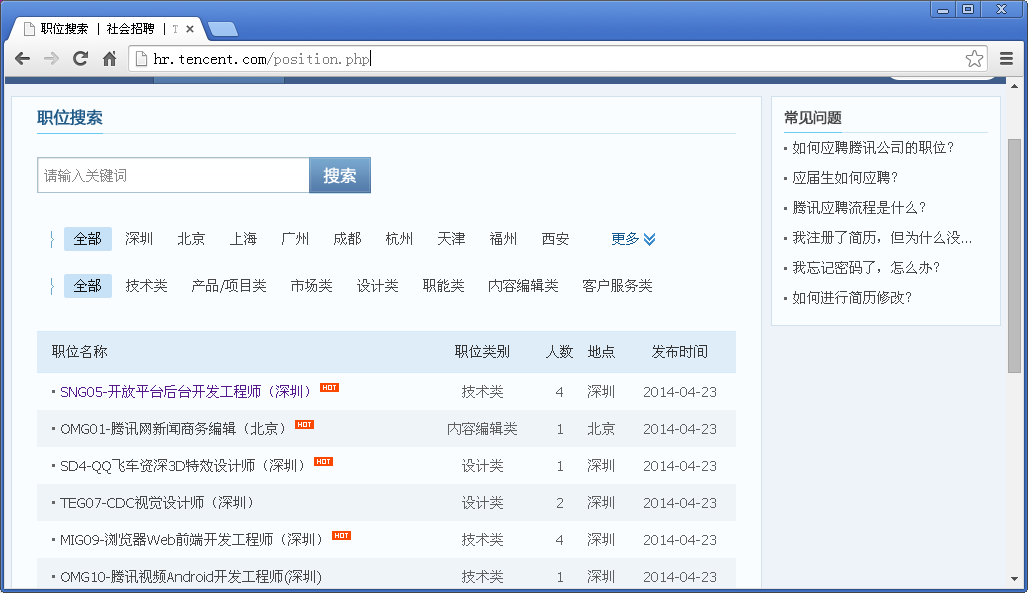

I will use Tencent's official recruitment website as an example.

GitHub source code: https://github.com/maxliaops/scrapy-itzhaopin

Goal: scrape job postings from Tencent's recruitment website and save them in JSON format.

New project

First, create a new project for our crawler. Change into any directory where you keep code, and run:

```
scrapy startproject itzhaopin
```

The final argument, itzhaopin, is the project name. The command creates a new itzhaopin directory in the current directory with the following structure:

```
.
└── itzhaopin
    ├── itzhaopin
    │   ├── __init__.py
    │   ├── items.py
    │   ├── pipelines.py
    │   ├── settings.py
    │   └── spiders
    │       └── __init__.py
    └── scrapy.cfg
```

scrapy.cfg: the project configuration file

items.py: defines the data structures (Items) to be extracted

pipelines.py: defines pipelines that further process the extracted Items, for example by saving them

settings.py: the crawler's configuration file

spiders: the directory where spiders are placed

Define Item

Define the data we want to crawl in items.py:

```python
from scrapy.item import Item, Field


class TencentItem(Item):
    name = Field()           # job title
    catalog = Field()        # job category
    workLocation = Field()   # work location
    recruitNumber = Field()  # number of openings
    detailLink = Field()     # link to the job detail page
    publishTime = Field()    # publish date
```

Implement Spider

A Spider is a Python class; the one in this example inherits from scrapy.contrib.spiders.CrawlSpider. A spider defines three essential members:

name: the spider's name, which identifies it (for example in scrapy crawl)

start_urls: the list of URLs the spider starts crawling from

parse(): the method called to parse each downloaded page from start_urls. It returns further requests to crawl, a list of Items, or both. CrawlSpider implements parse() itself to apply its rules, so a CrawlSpider subclass must not override it. That is why the spider below registers its own callback, parse_item, through a Rule.
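In the spider below, a Rule decides which extracted links to follow. It does this by matching its allow pattern against each link's URL. Scrapy's link extractors test allow patterns with a regular-expression search, so you can check the pattern by hand with Python's re module. The sample URLs here follow the format of the URLs in this article. They are assumptions, not a list of real pages.

```python
import re

# allow pattern copied from the Rule in tencent_spider.py
ALLOW = r"/position.php\?&start=\d{,4}#a"

# Hypothetical URLs shaped like the ones on the listing pages
candidates = [
    "http://hr.tencent.com/position.php?&start=10#a",    # listing page: matches
    "http://hr.tencent.com/position.php?&start=2450#a",  # listing page: matches
    "http://hr.tencent.com/position_detail.php?id=15626&keywords=&tid=0&lid=0",  # detail page: no match
    "http://hr.tencent.com/position.php?&start=10",      # no #a fragment: no match
]

for url in candidates:
    # re.search looks for the pattern anywhere in the URL string
    print(bool(re.search(ALLOW, url)), url)
```

Note that the unescaped . in position.php matches any character, not only a literal dot. Writing it as \. would make the pattern stricter.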

Now create a new spider, tencent_spider.py, in the spiders directory:

```python
import re
import json

from scrapy.selector import Selector

try:
    from scrapy.spider import Spider
except ImportError:
    from scrapy.spider import BaseSpider as Spider

from scrapy.utils.response import get_base_url
from scrapy.utils.url import urljoin_rfc
from scrapy.contrib.spiders import CrawlSpider, Rule
from scrapy.contrib.linkextractors.sgml import SgmlLinkExtractor as sle

from itzhaopin.items import *
from itzhaopin.misc.log import *  # logging helpers such as info(); misc/ is not in the skeleton shown above


class TencentSpider(CrawlSpider):
    name = "tencent"
    allowed_domains = ["tencent.com"]
    start_urls = [
        "http://hr.tencent.com/position.php"
    ]
    rules = [  # rules that decide which URLs to crawl
        Rule(sle(allow=r"/position.php\?&start=\d{,4}#a"), follow=True, callback='parse_item')
    ]

    def parse_item(self, response):
        # Extract data into Items, mainly with XPath and CSS selectors
        items = []
        sel = Selector(response)
        base_url = get_base_url(response)
        # The job table alternates "even" and "odd" row classes; both are parsed the same way
        for row_class in ('even', 'odd'):
            for site in sel.css('table.tablelist tr.%s' % row_class):
                item = TencentItem()
                item['name'] = site.css('.l.square a').xpath('text()').extract()
                relative_url = site.css('.l.square a').xpath('@href').extract()[0]
                item['detailLink'] = urljoin_rfc(base_url, relative_url)
                item['catalog'] = site.css('tr > td:nth-child(2)::text').extract()
                item['workLocation'] = site.css('tr > td:nth-child(4)::text').extract()
                item['recruitNumber'] = site.css('tr > td:nth-child(3)::text').extract()
                item['publishTime'] = site.css('tr > td:nth-child(5)::text').extract()
                items.append(item)
        info('parsed ' + str(response))
        return items

    def _process_request(self, request):
        info('process ' + str(request))
        return request
```

Implement Pipeline

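A pipeline stores the Items returned by the spider, for example by writing them to a file or a database.

process_item() is the only method a pipeline must implement. Scrapy also calls the optional open_spider() and close_spider() methods when the spider starts and finishes. As an example, the following pipeline saves each Item to a JSON file: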

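pipelines.py:

```python
import json
import codecs


class JsonWithEncodingTencentPipeline(object):

    def __init__(self):
        self.file = codecs.open('tencent.json', 'w', encoding='utf-8')

    def process_item(self, item, spider):
        # One JSON object per line; ensure_ascii=False keeps non-ASCII text readable
        line = json.dumps(dict(item), ensure_ascii=False) + "\n"
        self.file.write(line)
        return item

    def close_spider(self, spider):
        # Called by Scrapy when the spider finishes; flushes and closes the file
        self.file.close()
```

We now have a basic working crawler. Scrapy only runs pipelines that are enabled in settings.py, so register this one through the ITEM_PIPELINES setting, for example `ITEM_PIPELINES = {'itzhaopin.pipelines.JsonWithEncodingTencentPipeline': 300}`. The number sets the order in which pipelines run. Then start the spider with: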

```
scrapy crawl tencent
```

When the crawl finishes, the current directory contains a file named tencent.json. It holds the job postings in JSON format, one object per line.

Part of the content is as follows:

```
{"recruitNumber": ["1"], "name": ["SD5-Senior Mobile Game Planning (Shenzhen)"], "detailLink": "http://hr.tencent.com/position_detail.php?id=15626&keywords=&tid=0&lid=0", "publishTime": ["2014-04-25"], "catalog": ["Product/Project Category"], "workLocation": ["Shenzhen"]}
{"recruitNumber": ["1"], "name": ["TEG13-Backend Development Engineer (Shenzhen)"], "detailLink": "http://hr.tencent.com/position_detail.php?id=15666&keywords=&tid=0&lid=0", "publishTime": ["2014-04-25"], "catalog": ["Technology"], "workLocation": ["Shenzhen"]}
{"recruitNumber": ["2"], "name": ["TEG12-Data Center Senior Manager (Shenzhen)"], "detailLink": "http://hr.tencent.com/position_detail.php?id=15698&keywords=&tid=0&lid=0", "publishTime": ["2014-04-25"], "catalog": ["Technical Category"], "workLocation": ["Shenzhen"]}
{"recruitNumber": ["1"], "name": ["GY1-WeChat Pay Brand Planning Manager (Shenzhen)"], "detailLink": "http://hr.tencent.com/position_detail.php?id=15710&keywords=&tid=0&lid=0", "publishTime": ["2014-04-25"], "catalog": ["Market Class"], "workLocation": ["Shenzhen"]}
{"recruitNumber": ["2"], "name": ["SNG06-Backend Development Engineer (Shenzhen)"], "detailLink": "http://hr.tencent.com/position_detail.php?id=15499&keywords=&tid=0&lid=0", "publishTime": ["2014-04-25"], "catalog": ["Technology"], "workLocation": ["Shenzhen"]}
{"recruitNumber": ["2"], "name": ["OMG01-Tencent Fashion Video Planning Editor (Beijing)"], "detailLink": "http://hr.tencent.com/position_detail.php?id=15694&keywords=&tid=0&lid=0", "publishTime": ["2014-04-25"], "catalog": ["Content Editing Class"], "workLocation": ["Beijing"]}
{"recruitNumber": ["1"], "name": ["HY08-QT Client Windows Development Engineer (Shenzhen)"], "detailLink": "http://hr.tencent.com/position_detail.php?id=11378&keywords=&tid=0&lid=0", "publishTime": ["2014-04-25"], "catalog": ["Technology"], "workLocation": ["Shenzhen"]}
{"recruitNumber": ["5"], "name": ["HY1-Mobile Game Test Manager (Shanghai)"], "detailLink": "http://hr.tencent.com/position_detail.php?id=15607&keywords=&tid=0&lid=0", "publishTime": ["2014-04-25"], "catalog": ["Technical"], "workLocation": ["Shanghai"]}
{"recruitNumber": ["1"], "name": ["HY6-Internet Cafe Platform Senior Product Manager (Shenzhen)"], "detailLink": "http://hr.tencent.com/position_detail.php?id=10974&keywords=&tid=0&lid=0", "publishTime": ["2014-04-25"], "catalog": ["Product/Project Category"], "workLocation": ["Shenzhen"]}
{"recruitNumber": ["4"], "name": ["TEG14-Cloud Storage R&D Engineer (Shenzhen)"], "detailLink": "http://hr.tencent.com/position_detail.php?id=15168&keywords=&tid=0&lid=0", "publishTime": ["2014-04-24"], "catalog": ["Technical"], "workLocation": ["Shenzhen"]}
{"recruitNumber": ["1"], "name": ["CB-Compensation Manager (Shenzhen)"], "detailLink": "http://hr.tencent.com/position_detail.php?id=2309&keywords=&tid=0&lid=0", "publishTime": ["2013-11-28"], "catalog": ["Functional Class"], "workLocation": ["Shenzhen"]}
```
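As the output shows, tencent.json is in JSON Lines form (one object per line) rather than a single JSON document. Calling json.load() on the whole file therefore fails once it has more than one line, so the file has to be read line by line. Most values are also one-element lists, because extract() always returns a list. Below is a small post-processing sketch based on those observations. The function names unwrap, parse_jobs and load_jobs are hypothetical. Joining multi-element lists with a space is also an assumption.

```python
import json


def unwrap(value):
    """Join a list produced by extract() into one string; leave other values alone."""
    if isinstance(value, list):
        return " ".join(part.strip() for part in value)
    return value


def parse_jobs(lines):
    """Parse JSON Lines text (one object per line), unwrapping list-valued fields."""
    jobs = []
    for line in lines:
        line = line.strip()
        if not line:  # tolerate blank lines
            continue
        record = json.loads(line)
        jobs.append({key: unwrap(value) for key, value in record.items()})
    return jobs


def load_jobs(path="tencent.json"):
    """Read the file written by the pipeline."""
    with open(path, encoding="utf-8") as f:
        return parse_jobs(f)
```

After running the crawler, load_jobs() returns one dictionary per posting. In each dictionary, a value such as ["Shenzhen"] becomes "Shenzhen", and detailLink is left unchanged.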