python module logging

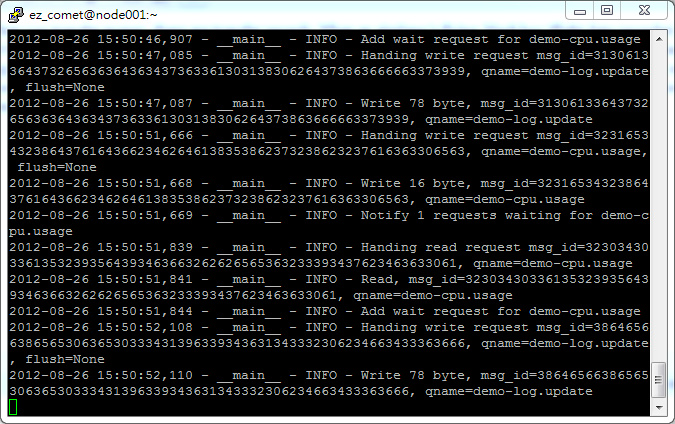

In real life, keeping logs is very important. When a bank transfer is made, there will be a transfer record; during the flight of the aircraft, there will be a black box (flight data recorder) to record everything during the flight. If something goes wrong, people can look at the log data to figure out what happened. For system development, debugging and operation, logging is equally important. Without logging, it would be almost impossible to figure out what happened when your program crashed. For example, when you are writing a server program, logging is very necessary. Shown below is a screenshot of the log file of the EZComet.com server.

After the service crashes, without logs, I have almost no way of knowing what went wrong. Logging is important not only for servers, but also for desktop graphics applications. For example, when your client's PC crashes, you can ask them to send you their log files so you can figure out what the problem is. Believe me, you never know what kind of strange problems may occur in different PC environments. I have received error logs like this before.

2011-08-22 17:52:54,828 - root - ERROR - [Errno 10104] getaddrinfo failed Traceback (most recent call last): File "<string>", line 124, in main File "<string>", line 20, in __init__ File "h:workspaceprojectbuildpyi.win32mrdjoutPYZ1.pyz/wx._core", line 7978, in __init__ File "h:workspaceprojectbuildpyi.win32mrdjoutPYZ1.pyz/wx._core", line 7552, in _BootstrapApp File "<string>", line 84, in OnInit File "h:workspaceprojectbuildpyi.win32mrdjoutPYZ1.pyz/twisted.internet.wxreactor", line 175, in install File "h:workspaceprojectbuildpyi.win32mrdjoutPYZ1.pyz/twisted.internet._threadedselect", line 106, in __init__ File "h:workspaceprojectbuildpyi.win32mrdjoutPYZ1.pyz/twisted.internet.base", line 488, in __init__ File "h:workspaceprojectbuildpyi.win32mrdjoutPYZ1.pyz/twisted.internet.posixbase", line 266, in installWaker File "h:workspaceprojectbuildpyi.win32mrdjoutPYZ1.pyz/twisted.internet.posixbase", line 74, in __init__ File "h:workspaceprojectbuildpyi.win32mrdjoutPYZ1.pyz/socket", line 224, in meth gaierror: [Errno 10104] getaddrinfo failed

I finally discovered that this customer’s PC was infected by a virus, which caused the call to the gethostname function to fail. See, how could you possibly know this if there were no logs to check.

Print output is not a good idea

Although logging is very important, not all developers can use it correctly. I have seen some developers record logs in this way, inserting print statements during the development process, and then removing these statements after the development is completed. Like this:

print 'Start reading database' records = model.read_recrods() print '# records', records print 'Updating record ...' model.update_records(records) print 'done'

This method is useful for simple script-type programs, but if it is a complex system, you'd better not use this method. First of all, you can't leave only extremely important messages in the log file. You will see a lot of message logs. But you won't find any useful information. You have no control over the code other than removing the output statements, but it's very possible that you forgot to remove the unused output. Furthermore, all the information output by print goes to the standard output, which will seriously affect your ability to view other output data from the standard output. Of course, you can also output messages to stderr, but using print is still not a good way to do logging.

Use python’s standard logging module

So, how to record logs correctly? It's actually very simple, using python's standard logging module. Thanks to the python community for making logging a standard module. It's very easy to use and very flexible. You can use the logging system like this:

import logging

logging.basicConfig(level=logging.INFO)

logger = logging.getLogger(__name__)

logger.info('Start reading database')

# read database here

records = {'john': 55, 'tom': 66}

logger.debug('Records: %s', records)

logger.info('Updating records ...')

# update records here

logger.info('Finish updating records')When you run it, you can see:

INFO:__main__:Start reading database INFO:__main__:Updating records ... INFO:__main__:Finish updating records

You may ask how this is different from using print. It has the following advantages:

You can control the level of messages and filter out unimportant messages.

You can decide where to output and how to output.

There are many important gender levels to choose from, debug, info, warning, error and critical. By assigning different levels to the logger or handler, you can only output error messages to a specific log file, or only log debugging information when debugging. Let's change the logger's level to DEBUG and take a look at the output:

logging.basicConfig(level=logging.DEBUG)

The output becomes:

INFO:__main__:Start reading database

DEBUG:__main__:Records: {'john': 55, 'tom': 66}

INFO:__main__:Updating records ...

INFO:__main__:Finish updating recordsAs you can see, after we change the logger's level to DEBUG, the debugging record appears in the output . You can also choose what to do with these messages. For example, you can use FileHandler to write records to a file:

import logging logger = logging.getLogger(__name__) logger.setLevel(logging.INFO) # create a file handler handler = logging.FileHandler('hello.log') handler.setLevel(logging.INFO) # create a logging format formatter = logging.Formatter('%(asctime)s - %(name)s - %(levelname)s - %(message)s') handler.setFormatter(formatter) # add the handlers to the logger logger.addHandler(handler) logger.info('Hello baby')

Output logging at the appropriate level

With the flexible logging module, you can output logging anywhere at the appropriate level and then configure them . So you may ask, what is the appropriate level? Here I will share some of my experiences.

In most cases, you don’t want to read too many details in the log. Therefore, you should only use the DEBUG level during debugging. I only use DEBUG to get detailed debugging information, especially when the data volume is large or the frequency is high, such as the intermediate states of each loop inside the algorithm.

def complex_algorithm(items):

for i, item in enumerate(items):

# do some complex algorithm computation

logger.debug('%s iteration, item=%s', i, item)在处理请求或者服务器状态变化等日常事务中,我会使用 INFO 等级。

def handle_request(request):

logger.info('Handling request %s', request)

# handle request here

result = 'result'

logger.info('Return result: %s', result)

def start_service():

logger.info('Starting service at port %s ...', port)

service.start()

logger.info('Service is started')当发生很重要的事件,但是并不是错误时,我会使用 WARNING 。比如,当用户登录密码错误时,或者连接变慢时。

def authenticate(user_name, password, ip_address):

if user_name != USER_NAME and password != PASSWORD:

logger.warn('Login attempt to %s from IP %s', user_name, ip_address)

return False

# do authentication here有错误发生时肯定会使用 ERROR 等级了。比如抛出异常,IO 操作失败或者连接问题等。

def get_user_by_id(user_id):

user = db.read_user(user_id)

if user is None:

logger.error('Cannot find user with user_id=%s', user_id)

return user

return user我很少使用 CRITICAL 。当一些特别糟糕的事情发生时,你可以使用这个级别来记录。比方说,内存耗尽,磁盘满了或者核危机(希望永远别发生 :S)。

虽然不是非得将 logger 的名称设置为 __name__ ,但是这样做会给我们带来诸多益处。在 python 中,变量 __name__ 的名称就是当前模块的名称。比如,在模块 “foo.bar.my_module” 中调用 logger.getLogger(__name__) 等价于调用logger.getLogger(“foo.bar.my_module”) 。当你需要配置 logger 时,你可以配置到 “foo” 中,这样包 foo 中的所有模块都会使用相同的配置。当你在读日志文件的时候,你就能够明白消息到底来自于哪一个模块。

捕捉异常并使用 traceback 记录它

出问题的时候记录下来是个好习惯,但是如果没有 traceback ,那么它一点儿用也没有。你应该捕获异常并用 traceback 把它们记录下来。比如下面这个例子:

try:

open('/path/to/does/not/exist', 'rb')

except (SystemExit, KeyboardInterrupt):

raise

except Exception, e:

logger.error('Failed to open file', exc_info=True)使用参数 exc_info=true 调用 logger 方法, traceback 会输出到 logger 中。你可以看到下面的结果

ERROR:__main__:Failed to open file

Traceback (most recent call last):

File "example.py", line 6, in <module>

open('/path/to/does/not/exist', 'rb')

IOError: [Errno 2] No such file or directory: '/path/to/does/not/exist'Python 使用logging模块记录日志涉及四个主要类,使用官方文档中的概括最为合适:

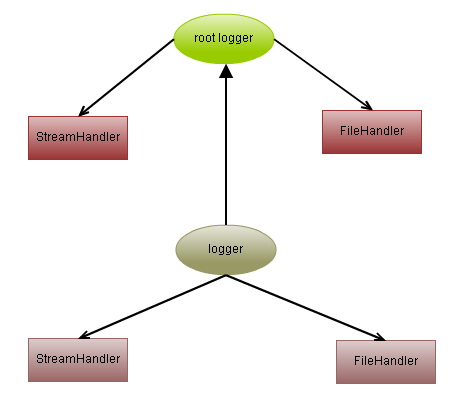

logger提供了应用程序可以直接使用的接口;

handler将(logger创建的)日志记录发送到合适的目的输出;

filter提供了细度设备来决定输出哪条日志记录;

formatter决定日志记录的最终输出格式。

logging模块是在2.3新引进的功能,下面是一些常用的类和模块级函数

模块级函数

logging.getLogger([name]):返回一个logger对象,如果没有指定名字将返回root logger

logging.debug()、logging.info()、logging.warning()、logging.error()、logging.critical():设定root logger的日志级别

logging.basicConfig():用默认Formatter为日志系统建立一个StreamHandler,设置基础配置并加到root logger中

每个程序在输出信息之前都要获得一个Logger。Logger通常对应了程序的模块名,比如聊天工具的图形界面模块可以这样获得它的Logger:

LOG=logging.getLogger(”chat.gui”)

而核心模块可以这样:

LOG=logging.getLogger(”chat.kernel”)

Logger.setLevel(lel):指定最低的日志级别,低于lel的级别将被忽略。debug是最低的内置级别,critical为最高

Logger.addFilter(filt)、Logger.removeFilter(filt):添加或删除指定的filter

Logger.addHandler(hdlr)、Logger.removeHandler(hdlr):增加或删除指定的handler

Logger.debug()、Logger.info()、Logger.warning()、Logger.error()、Logger.critical():可以设置的日志级别

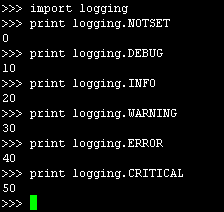

设置logger的level, level有以下几个级别:

NOTSET < DEBUG < INFO < WARNING < ERROR < CRITICAL

如果把looger的级别设置为INFO, 那么小于INFO级别的日志都不输出, 大于等于INFO级别的日志都输出

Handlers

handler对象负责发送相关的信息到指定目的地。Python的日志系统有多种Handler可以使用。有些Handler可以把信息输出到控制台,有些Logger可以把信息输出到文件,还有些 Handler可以把信息发送到网络上。如果觉得不够用,还可以编写自己的Handler。可以通过addHandler()方法添加多个多handler

Handler.setLevel(lel):指定被处理的信息级别,低于lel级别的信息将被忽略

Handler.setFormatter():给这个handler选择一个格式

Handler.addFilter(filt)、Handler.removeFilter(filt):新增或删除一个filter对象

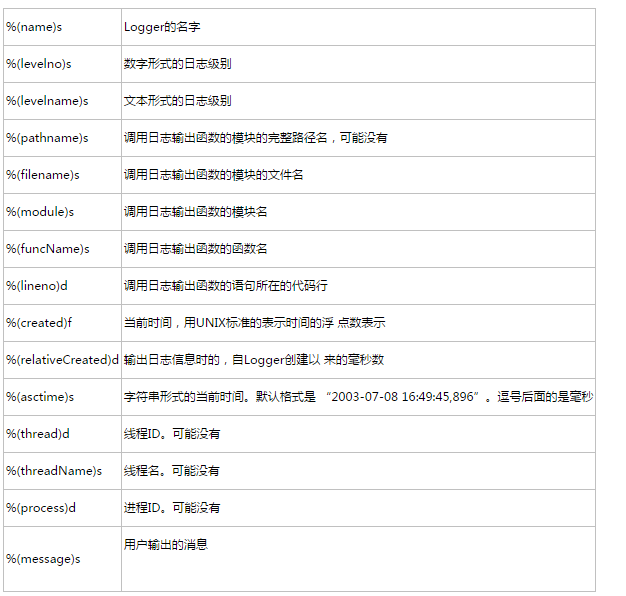

Formatters

Formatter对象设置日志信息最后的规则、结构和内容,默认的时间格式为%Y-%m-%d %H:%M:%S,下面是Formatter常用的一些信息

设置过滤器

细心的朋友一定会发现前文调用logging.getLogger()时参数的格式类似于“A.B.C”。采取这样的格式其实就是为了可以配置过滤器。看一下这段代码:

LOG=logging.getLogger(”chat.gui.statistic”)

console = logging.StreamHandler()

console.setLevel(logging.INFO)

formatter = logging.Formatter(’%(asctime)s %(levelname)s %(message)s’)

console.setFormatter(formatter)

filter=logging.Filter(”chat.gui”)

console.addFilter(filter)

LOG.addHandler(console)

和前面不同的是我们在Handler上添加了一个过滤器。现在我们输出日志信息的时候就会经过过滤器的处理。名为“A.B”的过滤器只让名字带有 “A.B”前缀的Logger输出信息。可以添加多个过滤器,只要有一个过滤器拒绝,日志信息就不会被输出。当然名为“A”前缀的Logger会输出信息。另外,在Logger中也可以添加过滤器。

每个Logger可以附加多个Handler。接下来我们就来介绍一些常用的Handler:

1) logging.StreamHandler

使用这个Handler可以向类似与sys.stdout或者sys.stderr的任何文件对象(file object)输出信息。它的构造函数是:

StreamHandler([strm])

其中strm参数是一个文件对象。默认是sys.stderr

2) logging.FileHandler

和StreamHandler类似,用于向一个文件输出日志信息。不过FileHandler会帮你打开这个文件。它的构造函数是:

FileHandler(filename[,mode])

filename是文件名,必须指定一个文件名。

mode是文件的打开方式。参见Python内置函数open()的用法。默认是’a',即添加到文件末尾。

3) logging.handlers.RotatingFileHandler

这个Handler类似于上面的FileHandler,但是它可以管理文件大小。当文件达到一定大小之后,它会自动将当前日志文件改名,然后创建 一个新的同名日志文件继续输出。比如日志文件是chat.log。当chat.log达到指定的大小之后,RotatingFileHandler自动把 文件改名为chat.log.1。不过,如果chat.log.1已经存在,会先把chat.log.1重命名为chat.log.2。。。最后重新创建 chat.log,继续输出日志信息。它的构造函数是:

RotatingFileHandler( filename[, mode[, maxBytes[, backupCount]]])

其中filename和mode两个参数和FileHandler一样。

maxBytes用于指定日志文件的最大文件大小。如果maxBytes为0,意味着日志文件可以无限大,这时上面描述的重命名过程就不会发生。

backupCount用于指定保留的备份文件的个数。比如,如果指定为2,当上面描述的重命名过程发生时,原有的chat.log.2并不会被更名,而是被删除。

4) logging.handlers.TimedRotatingFileHandler

这个Handler和RotatingFileHandler类似,不过,它没有通过判断文件大小来决定何时重新创建日志文件,而是间隔一定时间就 自动创建新的日志文件。重命名的过程与RotatingFileHandler类似,不过新的文件不是附加数字,而是当前时间。它的构造函数是:

TimedRotatingFileHandler( filename [,when [,interval [,backupCount]]])

其中filename参数和backupCount参数和RotatingFileHandler具有相同的意义。

interval是时间间隔。

when参数是一个字符串。表示时间间隔的单位,不区分大小写。它有以下取值:

S 秒

M 分

H 小时

D 天

W 每星期(interval==0时代表星期一)

midnight 每天凌晨

5) logging.handlers.SocketHandler

6) logging.handlers.DatagramHandler

以上两个Handler类似,都是将日志信息发送到网络。不同的是前者使用TCP协议,后者使用UDP协议。它们的构造函数是:

Handler(host, port)

其中host是主机名,port是端口名

7) logging.handlers.SysLogHandler

8) logging.handlers.NTEventLogHandler

9) logging.handlers.SMTPHandler

10) logging.handlers.MemoryHandler

11) logging.handlers.HTTPHandler

# encoding:utf-8

#import logging

#FORMAT = '%(asctime)-15s %(clientip)s %(user)-8s %(message)s'

#logging.basicConfig(format=FORMAT)

#d = {'clientip': '192.168.0.1', 'user': 'fbloggs'}

#logger = logging.getLogger('tcpserver')

#logger.warning('Protocol problem: %s', 'connection reset', extra=d)

#FORMAT = '%(asctime)-15s %(message)s'

#logging.basicConfig(filename = "C:\\Users\\june\\Desktop\\1.txt", level = logging.DEBUG, filemode = "a", format=FORMAT)

#logging.debug('this is a message')

#logging.debug('test')

#import logging

#import datetime

#

#curDate = datetime.date.today() - datetime.timedelta(days=0)

#logName = 'C:\\Users\\june\\Desktop\\error_%s.log' %curDate

#

#logging.basicConfig(level=logging.INFO,

# format='%(asctime)s %(filename)s[line:%(lineno)d] %(levelname)s %(message)s',

# #datefmt='%a, %d %b %Y %H:%M:%S',

# filename=logName,

# filemode='a')

#

##2013-10-21 03:25:51,509 writeLog.py[line:14] INFO This is info message

##2013-10-21 03:25:51,510 writeLog.py[line:15] WARNING This is warning message

#logging.debug('This is debug message')

#logging.info('This is info message')

#logging.warning('This is warning message')import logging

import logging.config

logging.config.fileConfig("logging.conf")

#create logger

loggerInfo = logging.getLogger("infoLogger")

#"application" code

loggerInfo.debug("debug message")

loggerInfo.info("info message")

loggerInfo.warn("warn message")

loggerInfo.error("error message")

loggerInfo.critical("critical message")

loggerError = logging.getLogger("errorLogger")

loggerError.error("Error: Hello world!")#coding=utf-8

import logging

import datetime

format='%(asctime)s - %(filename)s - [line:%(lineno)d] - %(levelname)s - %(message)s'

curDate = datetime.date.today() - datetime.timedelta(days=0)

infoLogName = r'C:/Users/june/Desktop/info_%s.log' %curDate

errorLogName = r'C:/Users/june/Desktop/error_%s.log' %curDate

formatter = logging.Formatter(format)

infoLogger = logging.getLogger("infoLog")

errorLogger = logging.getLogger("errorLog")

infoLogger.setLevel(logging.INFO)

errorLogger.setLevel(logging.ERROR)

infoHandler = logging.FileHandler(infoLogName, 'a')

infoHandler.setLevel(logging.INFO)

infoHandler.setFormatter(formatter)

errorHandler = logging.FileHandler(errorLogName, 'a')

errorHandler.setLevel(logging.ERROR)

errorHandler.setFormatter(formatter)

testHandler = logging.StreamHandler()

testHandler.setFormatter(formatter)

testHandler.setLevel(logging.ERROR)

infoLogger.addHandler(infoHandler)

infoLogger.addHandler(testHandler)

errorLogger.addHandler(errorHandler)

#infoLogger.debug("debug message")

#infoLogger.info("info message")

#infoLogger.warn("warn message")

# # 下面这行会同时打印在文件和终端上

#infoLogger.error("error message")

#

#errorLogger.error("error message")

#errorLogger.critical("critical message")'''

Created on 2016年8月18日

@author: apple

'''

#-*- coding:utf-8 -*-

#开发出一个日志系统,既要把日志输出到控制台,还要写入日志文件

import logging

import time

import os

import os.path

class Logger():

def __init__(self, log_name, logger_name):

'''

指定保存日志的文件路径,日志级别以及调用文件

将日志 存入到指定的文件中

'''

#设置日志文件名称:time.time()取得当前时间;time.localtime()取得本地时间;time.strftime()格式化日期;

time_str = time.strftime("%Y_%m_%d_%H_%M_%S", time.localtime(time.time()))

logname = time_str + '_' + log_name + '.log'

#设置日志文件所在的路径

log_filedir = 'Log'

if not os.path.isdir(log_filedir):

print("日志文件夹 %s 不存在,开始创建此文件夹" %log_filedir)

os.mkdir('Log')

else:

print("日志文件夹 %s 存在" %log_filedir)

os.chdir('Log')

#创建一个logger以及设置日志级别

#logging有6个日志级别:NOTSET, DEBUG, INFO, WARNING, ERROR, CRITICAL对应的值分别为:0,10,20,30,40,50

#例如:logging.DEBUG和10是等价的表示方法

#可以给日志对象(Logger Instance)设置日志级别,低于该级别的日志消息将会被忽略,也可以给Hanlder设置日志级别

#对于低于该级别的日志消息, Handler也会忽略。

self.logger = logging.getLogger(logger_name)

self.logger.setLevel(logging.DEBUG)

#创建文件handler,用于写入日志文件并设置文件日志级别

file_handler = logging.FileHandler(logname)

file_handler.setLevel(logging.DEBUG)

#创建控制端输出handler,用于输出到控制端并设置输出日志级别

console_handler = logging.StreamHandler()

console_handler.setLevel(logging.DEBUG)

#在控制端handler添加过滤器,将含有chat或者gui的handler信息输出

filter = logging.Filter("chat.gui")

console_handler.addFilter(filter)

#定义handler的输出格式并将格式应用到handler

formatter = logging.Formatter('%(asctime)s-%(name)s-%(levelname)s-%(message)s')

file_handler.setFormatter(formatter)

console_handler.setFormatter(formatter)

#将handler加入到logger

self.logger.addHandler(file_handler)

self.logger.addHandler(console_handler)

self.logger.debug("这个是debug日志信息")

self.logger.info("欢迎大家来到 Python的世界")

#将handler从logger中移除

self.logger.removeHandler(file_handler)

self.logger.removeHandler(console_handler)

if __name__ == '__main__':

print(os.getcwd())

Log = Logger('create_log', "chat.gui.statistic")

# 模块级函数

#

# logging.getLogger([name]):返回一个logger对象,如果没有指定名字将返回root logger

# logging.debug()、logging.info()、logging.warning()、logging.error()、logging.critical():设定root logger的日志级别

# logging.basicConfig():用默认Formatter为日志系统建立一个StreamHandler,设置基础配置并加到root logger中

#

# Loggers

#

# Logger.setLevel(lel):指定最低的日志级别,低于lel的级别将被忽略。debug是最低的内置级别,critical为最高

# Logger.addFilter(filt)、Logger.removeFilter(filt):添加或删除指定的filter

# Logger.addHandler(hdlr)、Logger.removeHandler(hdlr):增加或删除指定的handler

# Logger.debug()、Logger.info()、Logger.warning()、Logger.error()、Logger.critical():可以设置的日志级别

# Handlers

#

# handler对象负责发送相关的信息到指定目的地。可以通过addHandler()方法添加多个多handler

# Handler.setLevel(lel):指定被处理的信息级别,低于lel级别的信息将被忽略

# Handler.setFormatter():给这个handler选择一个格式

# Handler.addFilter(filt)、Handler.removeFilter(filt):新增或删除一个filter对象

# Formatters

#

# Formatter对象设置日志信息最后的规则、结构和内容,默认的时间格式为%Y-%m-%d %H:%M:%S,下面是Formatter常用的一些信息

# %(name)s Logger的名字

#

# %(levelno)s 数字形式的日志级别

#

# %(levelname)s 文本形式的日志级别

#

# %(pathname)s 调用日志输出函数的模块的完整路径名,可能没有

#

# %(filename)s 调用日志输出函数的模块的文件名

#

# %(module)s 调用日志输出函数的模块名

#

# %(funcName)s 调用日志输出函数的函数名

#

# %(lineno)d 调用日志输出函数的语句所在的代码行

#

# %(created)f 当前时间,用UNIX标准的表示时间的浮 点数表示

#

# %(relativeCreated)d 输出日志信息时的,自Logger创建以 来的毫秒数

#

# %(asctime)s 字符串形式的当前时间。默认格式是 “2003-07-08 16:49:45,896”。逗号后面的是毫秒

#

# %(thread)d 线程ID。可能没有

#

# %(threadName)s 线程名。可能没有

#

# %(process)d 进程ID。可能没有

#

# %(message)s 用户输出的消息'''

Created on 2016年8月25日

@author: apple

'''

import logging

logging.basicConfig(level=logging.INFO,

format='%(asctime)s %(name)s %(levelname)s %(message)s',

datefmt='%m-%d %H:%M',

filename='./AutoUpdate.log',

filemode='w')

console = logging.StreamHandler()

console.setLevel(logging.INFO)

formatter = logging.Formatter('%(name)s: %(levelname)-8s %(message)s')

console.setFormatter(formatter)

logging.getLogger('').addHandler(console)

logging.info("hello world!")

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1384

1384

52

52

How to solve the permissions problem encountered when viewing Python version in Linux terminal?

Apr 01, 2025 pm 05:09 PM

How to solve the permissions problem encountered when viewing Python version in Linux terminal?

Apr 01, 2025 pm 05:09 PM

Solution to permission issues when viewing Python version in Linux terminal When you try to view Python version in Linux terminal, enter python...

How to efficiently copy the entire column of one DataFrame into another DataFrame with different structures in Python?

Apr 01, 2025 pm 11:15 PM

How to efficiently copy the entire column of one DataFrame into another DataFrame with different structures in Python?

Apr 01, 2025 pm 11:15 PM

When using Python's pandas library, how to copy whole columns between two DataFrames with different structures is a common problem. Suppose we have two Dats...

How to teach computer novice programming basics in project and problem-driven methods within 10 hours?

Apr 02, 2025 am 07:18 AM

How to teach computer novice programming basics in project and problem-driven methods within 10 hours?

Apr 02, 2025 am 07:18 AM

How to teach computer novice programming basics within 10 hours? If you only have 10 hours to teach computer novice some programming knowledge, what would you choose to teach...

How to avoid being detected by the browser when using Fiddler Everywhere for man-in-the-middle reading?

Apr 02, 2025 am 07:15 AM

How to avoid being detected by the browser when using Fiddler Everywhere for man-in-the-middle reading?

Apr 02, 2025 am 07:15 AM

How to avoid being detected when using FiddlerEverywhere for man-in-the-middle readings When you use FiddlerEverywhere...

What are regular expressions?

Mar 20, 2025 pm 06:25 PM

What are regular expressions?

Mar 20, 2025 pm 06:25 PM

Regular expressions are powerful tools for pattern matching and text manipulation in programming, enhancing efficiency in text processing across various applications.

How does Uvicorn continuously listen for HTTP requests without serving_forever()?

Apr 01, 2025 pm 10:51 PM

How does Uvicorn continuously listen for HTTP requests without serving_forever()?

Apr 01, 2025 pm 10:51 PM

How does Uvicorn continuously listen for HTTP requests? Uvicorn is a lightweight web server based on ASGI. One of its core functions is to listen for HTTP requests and proceed...

What are some popular Python libraries and their uses?

Mar 21, 2025 pm 06:46 PM

What are some popular Python libraries and their uses?

Mar 21, 2025 pm 06:46 PM

The article discusses popular Python libraries like NumPy, Pandas, Matplotlib, Scikit-learn, TensorFlow, Django, Flask, and Requests, detailing their uses in scientific computing, data analysis, visualization, machine learning, web development, and H

How to dynamically create an object through a string and call its methods in Python?

Apr 01, 2025 pm 11:18 PM

How to dynamically create an object through a string and call its methods in Python?

Apr 01, 2025 pm 11:18 PM

In Python, how to dynamically create an object through a string and call its methods? This is a common programming requirement, especially if it needs to be configured or run...