My lab recently needed to collect movie information and was given a large data set containing more than 4,000 movie titles. My task was to write a crawler that fetches the information for each title.

In practice, no crawler is needed at all; a basic grasp of Python is enough.

Prerequisites:

Python3 syntax basics

HTTP network basics

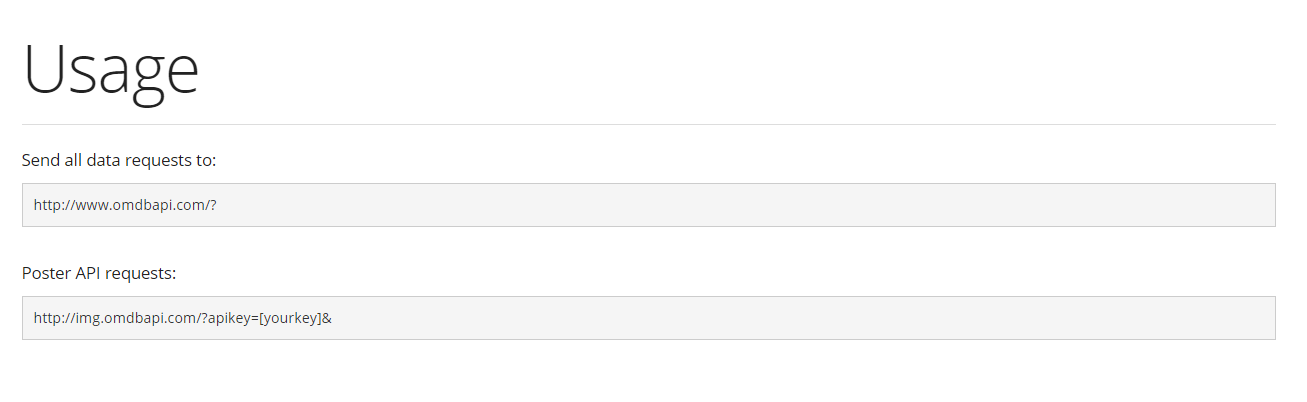

The first step is to choose an API provider. IMDb is the largest movie database, but the OMDb website offers an API of its own, and that API is friendly and easy to use.

http://www.omdbapi.com/

The second step is to determine the format of the URL.
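As a rough illustration of the URL format, the sketch below builds a title lookup URL from the `t` and `apikey` query parameters that the code later in this post uses. The helper name `build_url` is made up for this example, and passing `quote_via=quote` to `urlencode` is just one way to get `%20` for spaces instead of `+`.

```python
from urllib.parse import quote, urlencode

BASE_URL = "http://www.omdbapi.com/"


def build_url(title, api_key):
    """Return a lookup URL for one movie title (hypothetical helper)."""
    # quote_via=quote encodes spaces as %20 instead of the default '+'.
    query = urlencode({"t": title, "apikey": api_key}, quote_via=quote)
    return BASE_URL + "?" + query
```

For example, `build_url("The Matrix", "xxxxx")` gives `http://www.omdbapi.com/?t=The%20Matrix&apikey=xxxxx`, where `xxxxx` is a placeholder key.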

The third step is to understand how to use the basic Requests library.

http://cn.python-requests.org/zh_CN/latest/
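A minimal sketch of the kind of Requests call this post relies on. The helper name `fetch_text` is an assumption for illustration; it takes any object with a Requests-style `get()` method (the `requests` module itself or a `requests.Session`), which also makes it easy to try out without network access.

```python
def fetch_text(http, url, params, timeout=10):
    """Return the response body as text, or None if the request failed.

    `http` is anything with a Requests-style get(), such as the
    `requests` module or a `requests.Session` (hypothetical helper).
    """
    response = http.get(url, params=params, timeout=timeout)
    # Response.ok is True for status codes below 400.
    if not response.ok:
        return None
    return response.text
```

With the real library this would be called as `fetch_text(requests, "http://www.omdbapi.com/", {"t": "The Matrix"})` after `import requests`.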

Why should I use Requests instead of urllib.request?

Because urllib.request is prone to all kinds of weird problems, and I have had enough...

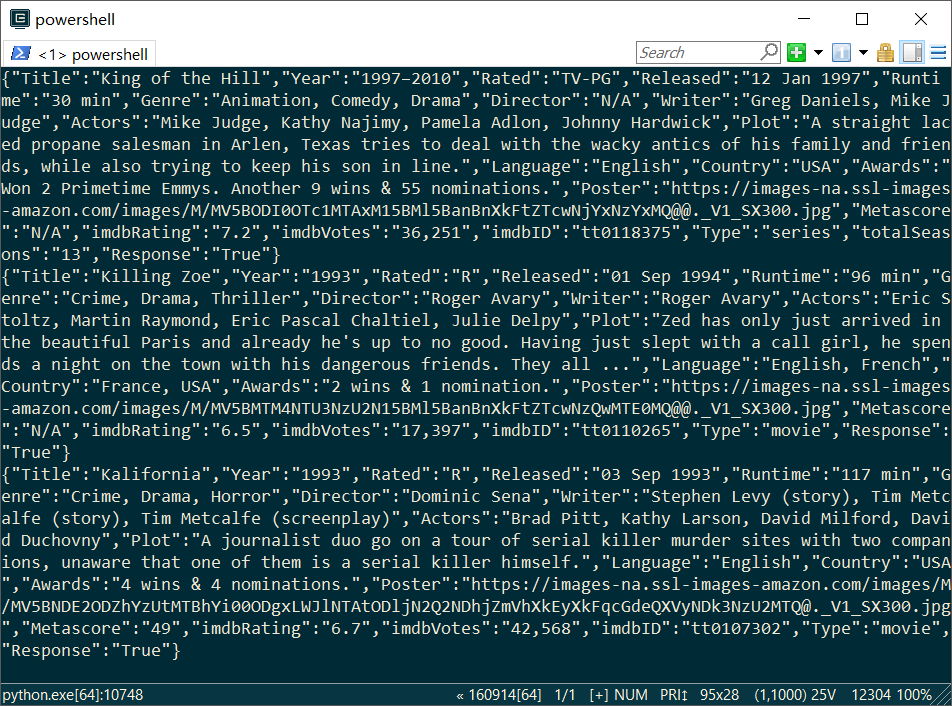

The fourth step is to write Python code.

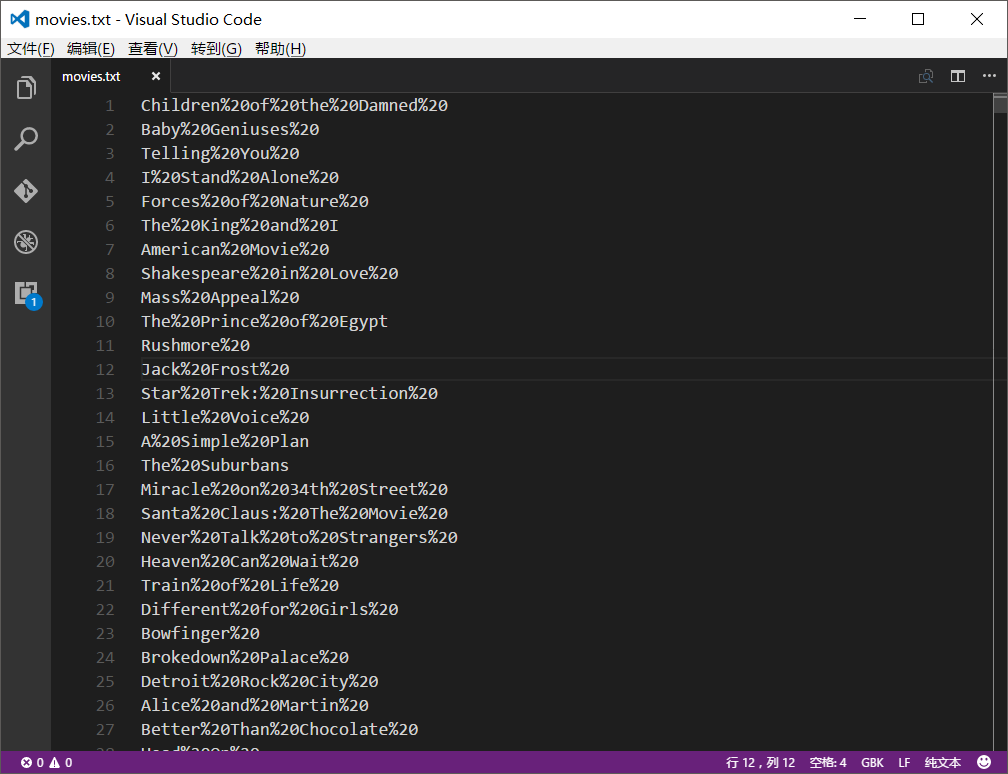

What I want to do is read the file line by line and use the movie title on each line to fetch that movie's information. Because the source file is large, readlines() did not read all the movie titles for me, so I iterate over the file one line at a time instead.

```python
import requests

for line in open("movies.txt"):
    # Strip the trailing '%20' and newline, if present, to get the title.
    title = line.split('%20\n')[0].strip()
    urll = 'http://www.omdbapi.com/?t=' + title
    result = requests.get(urll)
    if result:  # a Response is truthy when the status code is below 400
        text = result.text
        print(text)
        # Append each response as one line of result0.json.
        with open('result0.json', 'a') as p:
            p.write(text)
            p.write('\n')
```

I preprocessed the movie-name file in advance, replacing every space with "%20" so that the titles can go straight into the API URL (otherwise an error is reported). This can be done with Visual Studio Code.

Note: save the file with GBK encoding, because that is the codec Python uses to read it here; otherwise the following error occurs:

UnicodeDecodeError: 'gbk' codec can't decode byte 0xff in position 0: illegal multibyte sequence
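An alternative to re-saving the file, sketched here as an assumption and not as what was actually done for this project, is to tell `open()` which encoding the file really uses. The helper name `read_titles` and the `utf-8` default are illustrative only.

```python
def read_titles(path, encoding="utf-8"):
    """Yield one stripped, non-empty line at a time (hypothetical helper)."""
    # An explicit encoding avoids relying on the platform default,
    # which was GBK in the error shown above.
    with open(path, encoding=encoding) as f:
        for line in f:
            title = line.strip()
            if title:
                yield title
```

Because the function is a generator over the file object, it still reads the file one line at a time.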

The fifth step is optimization and exception handling.

There are three main things to do. The first is to limit the request rate so that the server does not block you.

The second is to obtain an API key (or even use several keys).

The third is exception handling.
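The three points above can be sketched roughly as follows. Everything here is illustrative: `make_key_picker` and `fetch_all` are made-up names, `fetch` stands for whatever function performs the actual request, and catching `OSError` is a design choice that works because the network exceptions raised by Requests derive from it.

```python
import itertools
import time


def make_key_picker(keys):
    """Return a function that hands out keys in round-robin order."""
    pool = itertools.cycle(keys)
    return lambda: next(pool)


def fetch_all(titles, fetch, keys, delay=1.0, sleep=time.sleep):
    """Call fetch(title, key) for each title, pausing between calls.

    `fetch` is a caller-supplied function (hypothetical here).
    Returns (results, failed_titles).
    """
    next_key = make_key_picker(keys)
    results, failed = [], []
    for title in titles:
        try:
            results.append(fetch(title, next_key()))
        except OSError:
            # Network trouble: record the title and move on.
            failed.append(title)
        sleep(delay)  # throttle so the server does not block us
    return results, failed
```

Keeping the failed titles in a list, as opposed to silently skipping them, makes it possible to retry just those titles in a second pass.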

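```python
import requests

key = ['']  # put your API key(s) here

for line in open("movies.txt"):
    try:
        # ……
        pass
    # Requests raises its own exception classes, not the built-in ones.
    except requests.exceptions.Timeout:
        continue
    except UnicodeEncodeError:
        continue
    except requests.exceptions.ConnectionError:
        continue
```

The complete code is posted below: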

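```python
# -*- coding: utf-8 -*-
import time

import requests

# Placeholder API keys; replace them with your own.
key = ['xxxxx', 'yyyyy', 'zzzzz', 'aaaaa', 'bbbbb']
i = 0

for line in open("movies.txt"):
    try:
        # Rotate through the keys so no single key carries every request.
        i = (i + 1) % len(key)
        # Strip the trailing '%20' and newline, if present, to get the title.
        title = line.split('%20\n')[0].strip()
        urll = 'http://www.omdbapi.com/?t=' + title + '&apikey=' + key[i]
        # Without a timeout, requests can wait indefinitely.
        result = requests.get(urll, timeout=10)
        if result:  # a Response is truthy when the status code is below 400
            text = result.text
            print(text)
            with open('result0.json', 'a') as p:
                p.write(text)
                p.write('\n')
        # Pause between requests to avoid being blocked by the server.
        time.sleep(1)
    # Requests raises its own exception classes, not the built-in ones.
    except requests.exceptions.Timeout:
        continue
    except UnicodeEncodeError:
        continue
    except requests.exceptions.ConnectionError:
        continue
```

Next, have a cup of tea and watch your program run!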
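Once the run finishes, `result0.json` holds one response per line. A small sketch for loading it back, assuming each line is either a single JSON document or something that can be skipped; `load_results` is a made-up name.

```python
import json


def load_results(path, encoding=None):
    """Parse a file with one JSON document per line; skip unparsable lines.

    encoding=None means the platform default, matching how the script
    wrote the file (hypothetical helper).
    """
    records = []
    with open(path, encoding=encoding) as f:
        for line in f:
            line = line.strip()
            if not line:
                continue
            try:
                records.append(json.loads(line))
            except json.JSONDecodeError:
                # Not valid JSON (for example an HTML error page); skip it.
                continue
    return records
```

Comparing `len(load_results("result0.json"))` with the number of titles in `movies.txt` gives a quick check on how many lookups produced a parsable response.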