Python + big data computing platform: building the PyODPS architecture

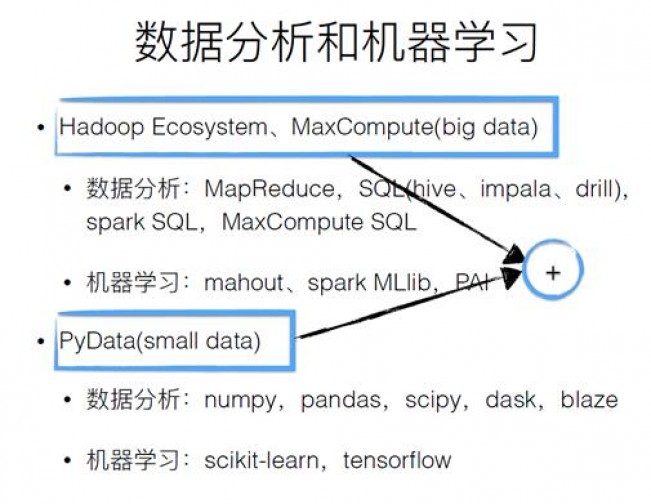

Data analysis and machine learning

Big data is largely built on the Hadoop ecosystem, which is essentially a Java environment. Many people prefer Python and R for data analysis, but these tools usually address small-data or local data processing problems. How can the two be combined to deliver greater value? The figure above shows the existing Hadoop ecosystem and the existing Python environment.

MaxCompute

MaxCompute is a big data platform for offline computing. It provides TB/PB-scale data processing, multi-tenancy, out-of-the-box use, and isolation mechanisms to ensure security. The main analysis tool on MaxCompute is SQL, which is simple, easy to use, and declarative. Tunnel provides a channel for uploading and downloading data that does not need to be scheduled by the SQL engine.

Pandas

Pandas is a data analysis tool built on NumPy. Its most important structure is the DataFrame. It also provides a set of plotting APIs backed by matplotlib, and it interoperates easily with third-party Python libraries.

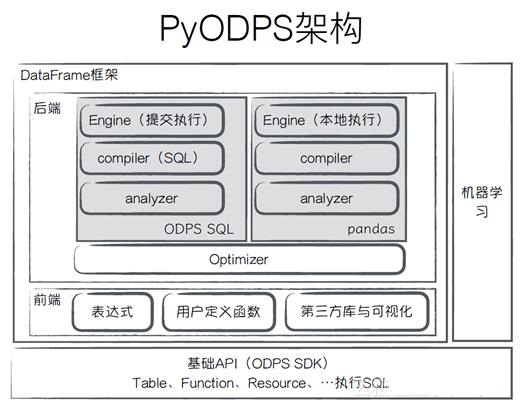

PyODPS architecture

PyODPS uses Python for big data analysis; its architecture is shown in the figure above. The bottom layer is the basic API, which operates on tables, functions, and resources in MaxCompute. Above it sits the DataFrame framework, which consists of two parts. The first is the front end, which defines a set of expression operations: the code written by the user is converted into an expression tree, just as source code in an ordinary programming language is. Users can define custom functions, visualize, and interact with third-party libraries. The second is the backend. At its bottom is the Optimizer, whose role is to optimize the expression tree. For both the ODPS and pandas backends, the tree then passes through the compiler and analyzer and is submitted to the Engine for execution.

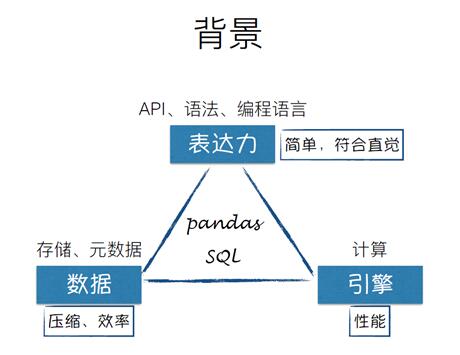

Background

Why do you want to build a DataFrame framework?

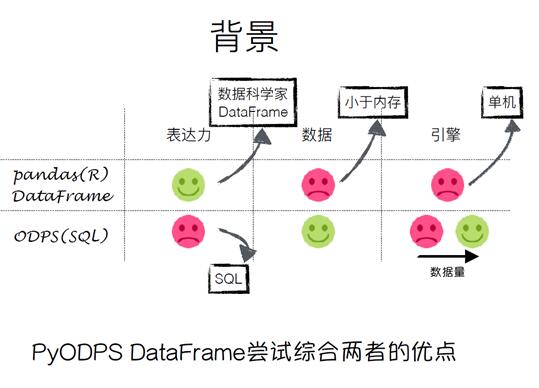

Any big data analysis tool faces questions along three dimensions. Expressiveness: are the API, syntax, and programming language simple and intuitive? Data: are storage and metadata compact and efficient? Engine: is the computing performance sufficient? This leaves two choices: pandas and SQL.

As the figure above shows, pandas is highly expressive, but its data must fit in memory, and its engine runs on a single machine and is limited by that machine's performance. SQL is less expressive, but it can handle large volumes of data. When the data volume is small, the engine offers no advantage; when the data volume is large, the engine becomes a major advantage. The goal of PyODPS is to combine the strengths of both.

PyODPS DataFrame

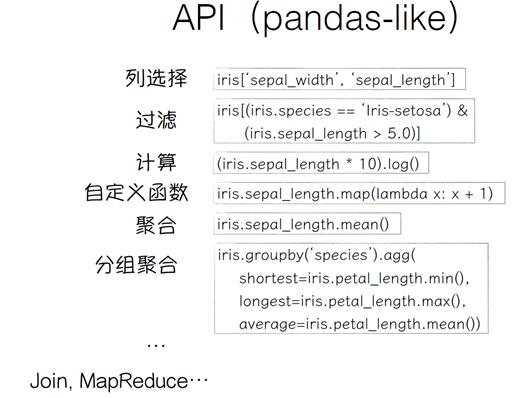

PyODPS DataFrame is written in Python, so you can use Python variables, conditionals, and loops. Its front end defines its own pandas-like syntax for better expressiveness. The backend chooses the execution engine based on the data source; this follows the visitor design pattern and is extensible. Execution is lazy: nothing runs until the user calls a method that triggers immediate execution.
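The lazy behaviour described above can be illustrated with a minimal sketch. It is not PyODPS code: the classes `Expr`, `Column`, `Const`, and `BinOp` and the `execute` method are hypothetical names chosen for illustration. Operators only build tree nodes; a value is computed only when `execute` is called.

```python
from dataclasses import dataclass


class Expr:
    """Base class for nodes of a toy expression tree (hypothetical, not a PyODPS class)."""

    def __add__(self, other):
        # Operators only build a new node; nothing is computed here.
        return BinOp("+", self, _wrap(other))

    def __mul__(self, other):
        return BinOp("*", self, _wrap(other))

    def execute(self, row):
        raise NotImplementedError


@dataclass
class Column(Expr):
    name: str

    def execute(self, row):
        return row[self.name]


@dataclass
class Const(Expr):
    value: object

    def execute(self, row):
        return self.value


@dataclass
class BinOp(Expr):
    op: str
    left: Expr
    right: Expr

    def execute(self, row):
        left, right = self.left.execute(row), self.right.execute(row)
        return left + right if self.op == "+" else left * right


def _wrap(value):
    """Turn plain Python values into Const nodes."""
    return value if isinstance(value, Expr) else Const(value)


# Building the expression does no work; it only records the operations.
expr = Column("petal_length") * 2 + 1

# Evaluation happens only on an explicit call.
result = expr.execute({"petal_length": 1.5})
print(expr)
print(result)
```

Keeping construction and evaluation separate is what gives a backend the chance to inspect and rewrite the whole tree before any data is touched.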

As you can see from the picture above, the syntax is very similar to pandas.

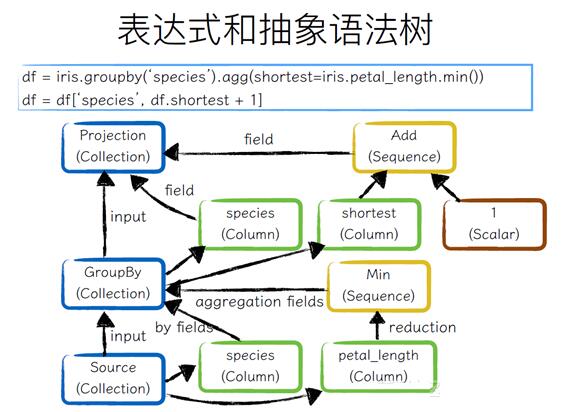

Expressions and abstract syntax trees

As the figure above shows, the user performs a GroupBy on an original Collection and then selects columns. At the bottom is the source Collection. Two fields are taken from it: species is used as the By key of the GroupBy, and petal_length is aggregated to produce the aggregate value. The column selection then takes the species field directly and adds one to the shortest field.
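As a rough textual stand-in for the figure, the sketch below builds the same three-level tree and prints it. The `Node` class, the node names, and the `render` helper are hypothetical; the use of `min` as the aggregate behind the shortest field is an assumption based on the field name.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A toy expression-tree node (hypothetical, for illustration only)."""
    kind: str
    detail: str = ""
    children: list = field(default_factory=list)


def render(node, depth=0):
    """Return an indented, one-node-per-line view of the tree."""
    line = "  " * depth + node.kind + (f"({node.detail})" if node.detail else "")
    return "\n".join([line] + [render(child, depth + 1) for child in node.children])


# Bottom of the tree: the source collection.
source = Node("SourceCollection", "iris")
# Middle: group by species and aggregate petal_length (min is an assumption).
grouped = Node("GroupByCollection", "by=species; shortest=min(petal_length)", [source])
# Top: column selection on the grouped result.
projected = Node("ProjectCollection", "species, shortest + 1", [grouped])

tree_text = render(projected)
print(tree_text)
```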

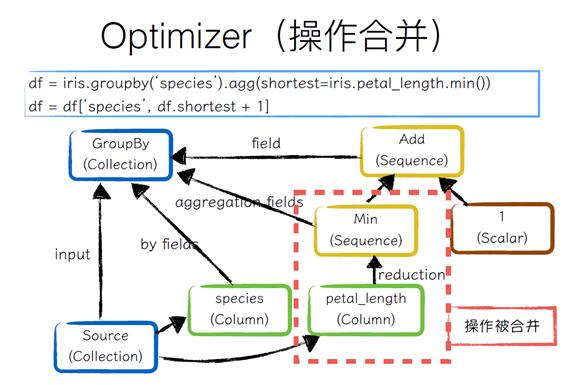

Optimizer (operation merging)

The backend first uses the Optimizer to optimize the expression tree. In the original tree, the GroupBy runs first and the column selection is applied on top of it. With operation merging, the aggregation of petal_length and the subsequent add-one are folded into the GroupBy, so the result is a single GroupBy Collection.
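One way to picture operation merging is the following minimal sketch. It assumes a hypothetical plan format (nested dicts for operators, tuples for expressions) that is not the PyODPS representation, and again assumes `min` as the aggregate. The projection's expressions are rewritten in terms of the aggregates and then attached directly to the group-by node.

```python
def substitute(expr, bindings):
    """Replace ("col", alias) leaves with the expression bound to that alias, if any."""
    if expr[0] == "col":
        return bindings.get(expr[1], expr)
    return (expr[0],) + tuple(
        substitute(part, bindings) if isinstance(part, tuple) else part
        for part in expr[1:]
    )


def merge_project_into_groupby(plan):
    """Fold a projection that sits directly on a group-by into the group-by node."""
    child = plan.get("input")
    if plan["op"] == "project" and child and child["op"] == "groupby":
        fields = {
            alias: substitute(expr, child["aggs"])
            for alias, expr in plan["columns"].items()
        }
        return {"op": "groupby", "by": child["by"], "fields": fields, "input": child["input"]}
    return plan  # nothing to merge


# Hypothetical plan: project(species, shortest + 1) over groupby(species).
plan = {
    "op": "project",
    "columns": {
        "species": ("col", "species"),
        "shortest_plus_one": ("add", ("col", "shortest"), 1),
    },
    "input": {
        "op": "groupby",
        "by": ["species"],
        "aggs": {"shortest": ("min", ("col", "petal_length"))},
        "input": {"op": "source", "table": "iris"},
    },
}

merged = merge_project_into_groupby(plan)
print(merged)
```

After merging there is one operator instead of two, which for a SQL backend would mean one query level instead of a nested subquery.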

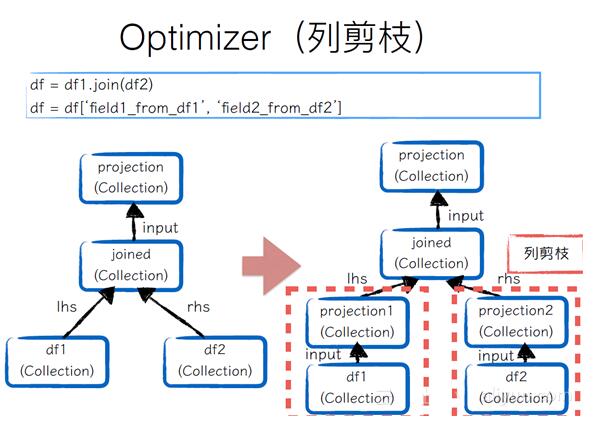

Optimizer (column pruning)

When the user joins two data frames and then retrieves only two columns from the result, submitting the whole process to a big data environment as-is is very inefficient, because not every column is used. The columns below the join should therefore be pruned. For example, if only one field of data frame 1 is used, we only need to select that field and project it into a new Collection; the same applies to data frame 2. This greatly reduces the amount of data that flows through when the two parts are joined.
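The pruning rule can be sketched as a small function. The function name, the column names, and the choice to always keep the join keys alongside the selected columns are assumptions made for this illustration, not details taken from PyODPS.

```python
def prune_join_inputs(left_cols, right_cols, join_keys, selected):
    """Return the columns each join input actually needs.

    A column is kept if the final selection uses it or the join condition needs it.
    """
    needed = set(selected) | set(join_keys)
    left = [col for col in left_cols if col in needed]
    right = [col for col in right_cols if col in needed]
    return left, right


# Hypothetical schemas: two wide tables joined on "id", with two columns selected.
left_cols = ["id", "a", "b", "c"]
right_cols = ["id", "x", "y"]

pruned_left, pruned_right = prune_join_inputs(
    left_cols, right_cols, join_keys=["id"], selected=["a", "x"]
)
print(pruned_left, pruned_right)
```

Each input is then projected to its pruned column list before the join, so the unused columns are never read or shuffled.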

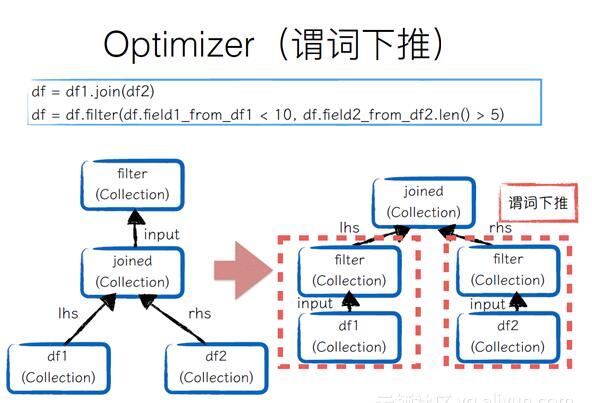

Optimizer (predicate pushdown)

If two data frames are joined and then each is filtered separately, the filters should be pushed down below the join so that they run first, reducing the amount of data fed into the join.
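The effect of predicate pushdown can be checked on a small in-memory example. The sketch below uses plain Python lists of dicts and a hypothetical `hash_join` helper rather than any real engine; the table contents are made up. Filtering before the join gives the same rows as filtering after it, while the join sees far fewer input rows.

```python
def hash_join(left, right, key):
    """Inner-join two lists of dicts on a shared key column."""
    index = {}
    for row in right:
        index.setdefault(row[key], []).append(row)
    return [{**l, **r} for l in left for r in index.get(l[key], [])]


# Made-up data: 6 users and 12 orders.
users = [{"id": i, "age": 20 + i} for i in range(6)]
orders = [{"id": i % 6, "amount": 10 * i} for i in range(12)]

# Filter after the join: all 6 users and all 12 orders go through the join.
late = [
    row for row in hash_join(users, orders, "id")
    if row["age"] > 23 and row["amount"] >= 60
]

# Filters pushed below the join: each input is reduced first.
users_filtered = [u for u in users if u["age"] > 23]
orders_filtered = [o for o in orders if o["amount"] >= 60]
early = hash_join(users_filtered, orders_filtered, "id")

print(len(users_filtered), len(orders_filtered), early == late)
```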

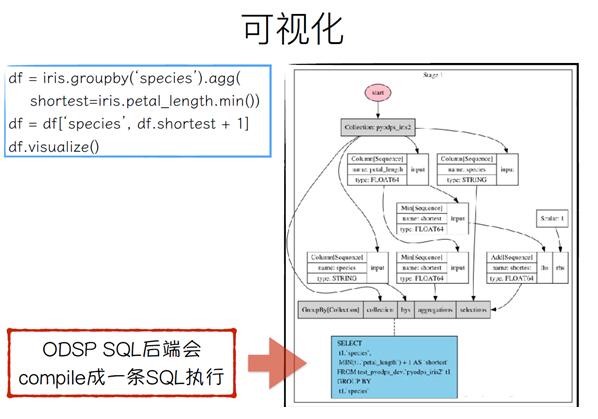

Visualization

PyODPS provides visualize() to make visualization easy for users. As the example on the right shows, the ODPS SQL backend compiles the expression into a single SQL statement for execution.

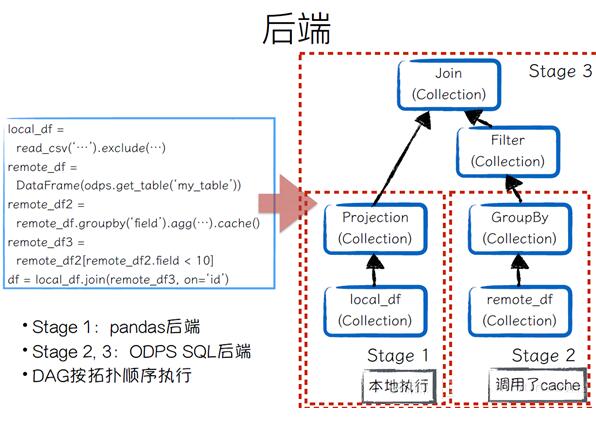

Backend

As the figure above shows, the computing backend is very flexible. Users can even join a pandas data frame with MaxCompute data from a table.

Analyzer

The role of the Analyzer is to rewrite certain operations for specific backends. For example:

Some operations, such as value_counts, are supported natively by pandas, so the pandas backend needs no extra processing. The ODPS SQL backend has no direct equivalent, so the Analyzer rewrites the operation as groupby + sort;

Some operators cannot be implemented with built-in functions when compiling to ODPS SQL; these are rewritten as custom functions.
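The value_counts rewrite mentioned above could look roughly like this. The dict-based plan format, the backend names `"pandas"` and `"odps_sql"`, and the `analyze` function are hypothetical and only illustrate a backend-specific rewrite.

```python
def analyze(node, backend):
    """Rewrite operations that the given backend cannot run directly."""
    if node["op"] == "value_counts" and backend == "odps_sql":
        # No direct SQL equivalent: count per group, then sort by the count.
        grouped = {
            "op": "groupby",
            "by": [node["column"]],
            "aggs": {"count": "count(*)"},
            "input": node["input"],
        }
        return {"op": "sort", "by": "count", "ascending": False, "input": grouped}
    # Other backends (for example pandas) keep the node unchanged.
    return node


value_counts = {
    "op": "value_counts",
    "column": "species",
    "input": {"op": "source", "table": "iris"},
}

for_pandas = analyze(value_counts, "pandas")
for_sql = analyze(value_counts, "odps_sql")
print(for_sql)
```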

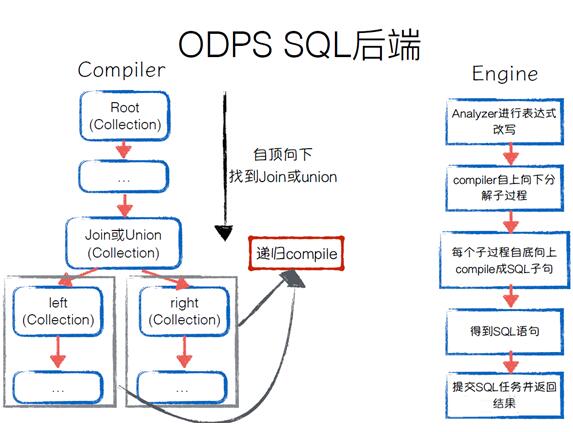

ODPS SQL backend

How does the ODPS SQL backend compile an expression to SQL and then execute it? The compiler traverses the expression tree from top to bottom looking for Join or Union nodes, and compiles each subprocess recursively. When the Engine performs the actual execution, it uses the Analyzer to rewrite the expression tree, compiles subprocesses top-down, and compiles SQL clauses bottom-up. The result is a complete SQL statement, which is submitted, and the task is returned.
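A heavily simplified sketch of recursive compilation is shown below. The plan format, the `compile_sql` function, and the generated alias names are hypothetical, and the output is generic nested SQL meant only to show the recursion; it is not the SQL that PyODPS emits and may not be accepted as-is by a particular SQL dialect.

```python
import itertools


def compile_sql(node, aliases=None):
    """Compile a toy plan into nested SQL, children first."""
    if aliases is None:
        aliases = itertools.count(1)  # generates t1, t2, ... for subqueries
    if node["op"] == "source":
        return f"SELECT * FROM {node['table']}"
    if node["op"] == "join":
        # Each side of a join is a subprocess that is compiled recursively.
        left = compile_sql(node["left"], aliases)
        right = compile_sql(node["right"], aliases)
        l, r = f"t{next(aliases)}", f"t{next(aliases)}"
        key = node["on"]
        return f"SELECT * FROM ({left}) {l} JOIN ({right}) {r} ON {l}.{key} = {r}.{key}"
    child = compile_sql(node["input"], aliases)
    alias = f"t{next(aliases)}"
    if node["op"] == "filter":
        return f"SELECT * FROM ({child}) {alias} WHERE {node['predicate']}"
    if node["op"] == "project":
        return f"SELECT {', '.join(node['columns'])} FROM ({child}) {alias}"
    raise ValueError(f"unsupported operation: {node['op']}")


# Hypothetical plan: select two columns from users joined with filtered orders.
plan = {
    "op": "project",
    "columns": ["name", "amount"],
    "input": {
        "op": "join",
        "on": "id",
        "left": {"op": "source", "table": "users"},
        "right": {
            "op": "filter",
            "predicate": "amount > 100",
            "input": {"op": "source", "table": "orders"},
        },
    },
}

sql = compile_sql(plan)
print(sql)
```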

pandas backend

The pandas backend first visits the expression tree and maps each node to a pandas operation. Once the whole tree has been traversed, a DAG is formed. The Engine executes the DAG in topological order, applying the pandas operations one after another until it obtains the final result. In big data environments, the role of the pandas backend is local debugging; when the data volume is small, pandas can also be used for the computation itself.
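Executing a DAG in topological order can be sketched with the standard library's `graphlib` module (Python 3.9+). To keep the example free of dependencies, plain Python functions on lists stand in for the pandas operations; the node names and the `run` helper are hypothetical.

```python
from graphlib import TopologicalSorter

# Each node maps to (function, names of the nodes it takes as input).
dag = {
    "source": (lambda: [3, 8, 5, 10], []),
    "filtered": (lambda xs: [x for x in xs if x > 4], ["source"]),
    "plus_one": (lambda xs: [x + 1 for x in xs], ["filtered"]),
    "total": (lambda xs: sum(xs), ["plus_one"]),
}


def run(dag):
    """Run every node after all of its inputs and return all results."""
    dependencies = {name: deps for name, (_, deps) in dag.items()}
    results = {}
    for name in TopologicalSorter(dependencies).static_order():
        func, deps = dag[name]
        results[name] = func(*(results[dep] for dep in deps))
    return results


results = run(dag)
print(results["total"])
```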

Difficulties + Pitfalls

Backend compilation errors can easily lose their context. With multiple optimize and analyze passes, it is hard to tell which earlier node visit caused the problem. Solution: keep each module independent and test thoroughly;

Bytecode compatibility: MaxCompute only supports running custom functions on Python 2.7;

SQL execution order.

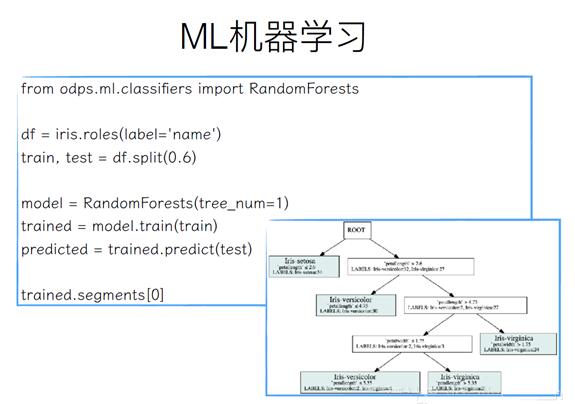

ML Machine Learning

Machine learning takes a data frame as input and produces a data frame as output. For example, given an iris data frame, first mark the name field as the classification field, then call the split method to divide the data into 60% training data and 40% test data. Next, initialize a RandomForests with a decision tree in it, call the train method on the training data, call the predict method to produce the prediction data, and call segments[0] to see the visualized result.

Future plans

Distributed NumPy, with DataFrame running on a distributed NumPy backend;

In-memory computing to improve the interactive experience;

TensorFlow.
