How does Java I/O work under the hood?

This blog post mainly discusses how I/O works at a low level. It is written for readers who want to understand how Java I/O operations map to the machine level and what the hardware does while an application runs. Basic I/O operations, such as reading and writing files through the Java I/O API, are assumed knowledge and are beyond the scope of this article.

Buffer handling and kernel vs. user space

Buffers, and how buffers are handled, are the basis of all I/O. The terms "input" and "output" mean nothing more than moving data into and out of buffers. Keep this in mind at all times. Typically, a process performing an operating system I/O request either drains data from a buffer (a write operation) or fills a buffer with data (a read operation). That is the whole concept of I/O. The mechanisms inside the operating system that perform these transfers can be very complex, but conceptually they are very simple, and we will discuss them briefly in this article.
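Java's NIO `ByteBuffer` makes this fill/drain cycle explicit. The minimal sketch below (the class and method names are illustrative, not from any library) fills a buffer the way a read operation would, calls `flip()`, and then drains it the way a write operation would. It models only the buffer bookkeeping; no system call is involved.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

public class BufferFillDrain {
    // Fill a buffer (what a read does), then drain it (what a write does).
    // Assumes the text fits in the 64-byte buffer.
    static String fillThenDrain(String text) {
        ByteBuffer buffer = ByteBuffer.allocate(64);

        // Fill: put() copies bytes in and advances the buffer's position.
        buffer.put(text.getBytes(StandardCharsets.US_ASCII));

        // Switch from filling to draining: limit = position, position = 0.
        buffer.flip();

        // Drain: copy out every byte between position and limit.
        byte[] out = new byte[buffer.remaining()];
        buffer.get(out);
        return new String(out, StandardCharsets.US_ASCII);
    }

    public static void main(String[] args) {
        System.out.println(fillThenDrain("hello")); // prints "hello"
    }
}
```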

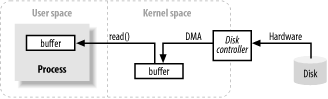

The image above is a simplified "logical" diagram of how block data moves from an external source, such as a disk, into a memory area inside a running process (such as RAM). First, the process asks for its buffer to be filled by making the read() system call. This system call causes the kernel to issue a command to the disk controller hardware to fetch the data from disk. The disk controller writes the data directly into a kernel memory buffer via DMA, without further help from the main CPU. Once the disk controller has finished filling the buffer, the kernel copies the data from the temporary buffer in kernel space into the buffer the process specified when it requested the read() operation.

Note that because the kernel tries to cache and/or prefetch data, the data requested by the process may already be available in kernel space. If so, it is simply copied out to the process. If not, the process is suspended while the kernel reads the data into memory.
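From Java, the read() request described above is typically issued through a channel or stream. The sketch below, a minimal example assuming a small local file (the class and method names are illustrative), asks `FileChannel.read` to fill a process buffer. On Unix-like systems this generally ends in a read()-style system call; whether the kernel serves it from its cache or suspends the thread for a disk transfer is invisible to the caller.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

public class ReadIntoBuffer {
    // Ask the OS to fill 'dst' from the start of 'file'.
    // Returns the number of bytes actually read.
    static int readFully(Path file, ByteBuffer dst) throws IOException {
        try (FileChannel ch = FileChannel.open(file, StandardOpenOption.READ)) {
            int total = 0;
            // A single read may return fewer bytes than requested, so keep
            // going until the buffer is full or end of file (-1) is reached.
            while (dst.hasRemaining()) {
                int n = ch.read(dst);
                if (n < 0) {
                    break;
                }
                total += n;
            }
            return total;
        }
    }

    public static void main(String[] args) throws IOException {
        Path tmp = Files.createTempFile("io-demo", ".txt");
        Files.write(tmp, "kernel to user space".getBytes(StandardCharsets.US_ASCII));
        ByteBuffer buf = ByteBuffer.allocate(6);
        System.out.println(readFully(tmp, buf)); // 6
        Files.delete(tmp);
    }
}
```

Looping matters because a single `read` may return fewer bytes than requested. For a heap buffer like this one, the JDK typically adds its own copy through a temporary native buffer, on top of the kernel-to-user copy.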

Virtual Memory

You have probably heard of virtual memory many times before, but here is a brief review.

All modern operating systems use virtual memory. Virtual memory means using artificial, or virtual, addresses in place of physical (hardware RAM) memory addresses. Virtual addressing has two important advantages:

Multiple virtual addresses can be mapped to the same physical address.

A virtual address space can be larger than the actual available hardware memory.

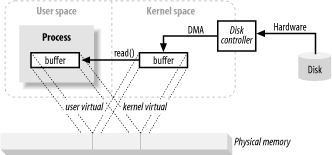

In the discussion above, copying data from kernel space into the final user buffer looks like extra work. Why not tell the disk controller to send the data directly to the buffer in user space? This is made possible by virtual memory, using advantage 1 above.

By mapping a kernel-space address to the same physical address as a virtual address in user space, the DMA hardware (which can only access physical memory addresses) can fill a buffer that is simultaneously visible to both the kernel and the user-space process.

This eliminates the copy between kernel and user space, but requires the kernel and user buffers to share the same page alignment. The buffers must also be multiples of the block size used by the disk controller (usually 512-byte disk sectors). Operating systems divide their memory address spaces into pages, which are fixed-size groups of bytes. These memory pages are always multiples of the disk block size and are usually powers of 2 (which simplifies addressing). Typical memory page sizes are 1024, 2048, and 4096 bytes. Virtual and physical memory page sizes are always the same.
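Java does not let application code choose DMA targets, but memory-mapped files rely on the same virtual-memory idea: user-space virtual addresses are mapped onto physical pages that the operating system also uses for the file's data. A minimal sketch using `FileChannel.map` (the class name is illustrative, and the file is assumed to be non-empty):

```java
import java.io.IOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

public class MappedRead {
    // Map the whole file read-only into this process's address space and
    // return its first byte. Assumes the file is non-empty.
    static byte firstByte(Path file) throws IOException {
        try (FileChannel ch = FileChannel.open(file, StandardOpenOption.READ)) {
            MappedByteBuffer map = ch.map(FileChannel.MapMode.READ_ONLY, 0, ch.size());
            // No explicit read() call here: the first access may cause a page
            // fault, which the OS services by paging the file data in.
            // (The mapping would remain valid even after the channel is closed.)
            return map.get(0);
        }
    }

    public static void main(String[] args) throws IOException {
        Path tmp = Files.createTempFile("mmap-demo", ".txt");
        Files.write(tmp, "ABC".getBytes(StandardCharsets.US_ASCII));
        System.out.println((char) firstByte(tmp)); // A
        Files.delete(tmp);
    }
}
```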

Memory Paging

To support the second advantage of virtual memory (an addressable space larger than physical memory), virtual memory paging (often called swapping) is required. This mechanism relies on the fact that pages of the virtual address space can be persisted to external disk storage, freeing physical memory for other virtual pages. In essence, physical memory acts as a cache for the paging area, which is the space on disk where the contents of memory pages are stored when they are forced out of physical memory.
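The idea that physical memory acts as a cache for the paging area can be illustrated with a toy simulation. The sketch below is a model, not how any particular kernel works: it assumes a fixed number of page frames and evicts the least recently used page, whereas real kernels use various approximations of LRU. The class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;

public class PagingSimulator {
    private final int frames;                                  // physical page frames available
    private final LinkedHashMap<Integer, Boolean> resident =   // pages currently in "RAM"
            new LinkedHashMap<>(16, 0.75f, true);              // access order: eldest = least recently used
    private final List<Integer> pagedOut = new ArrayList<>();  // pages written to the paging area
    private int faults = 0;

    PagingSimulator(int frames) {
        this.frames = frames;
    }

    // Touch a virtual page. Returns true if the access caused a page fault.
    boolean access(int page) {
        if (resident.get(page) != null) {   // get() also refreshes the access order
            return false;
        }
        faults++;
        if (resident.size() == frames) {
            // No free frame: evict the least recently used page to the paging area.
            int victim = resident.keySet().iterator().next();
            resident.remove(victim);
            pagedOut.add(victim);
        }
        resident.put(page, Boolean.TRUE);   // "page in" the requested page
        return true;
    }

    int faults() { return faults; }

    List<Integer> pagedOut() { return pagedOut; }

    public static void main(String[] args) {
        PagingSimulator sim = new PagingSimulator(2);
        for (int page : new int[] {1, 2, 1, 3, 2}) {
            sim.access(page);
        }
        System.out.println(sim.faults() + " faults, paged out " + sim.pagedOut()); // 4 faults, paged out [2, 1]
    }
}
```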

Making memory page sizes multiples of the disk block size allows the kernel to issue commands directly to the disk controller hardware to write memory pages to disk or reload them when needed. It turns out that all disk I/O is done at the page level. This is the only way data ever moves between disk and physical memory in modern paged operating systems.
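Because sector and page sizes are typically powers of two, rounding a transfer size up to whole blocks and checking alignment reduce to simple bit masking. A minimal sketch (the helper names are hypothetical):

```java
public class PageAlignment {
    // Round 'size' up to the next multiple of 'unit' (e.g. a 512-byte sector
    // or a 4096-byte page). Because 'unit' is a power of two, clearing the low
    // bits with a mask replaces division.
    static long roundUp(long size, long unit) {
        if (unit <= 0 || (unit & (unit - 1)) != 0) {
            throw new IllegalArgumentException("unit must be a power of two");
        }
        return (size + unit - 1) & ~(unit - 1);
    }

    // An address is aligned when its low log2(unit) bits are all zero.
    // Assumes 'unit' is a power of two.
    static boolean isAligned(long address, long unit) {
        return (address & (unit - 1)) == 0;
    }

    public static void main(String[] args) {
        System.out.println(roundUp(1000, 512));    // 1024
        System.out.println(roundUp(5000, 4096));   // 8192
        System.out.println(isAligned(8192, 4096)); // true
        System.out.println(isAligned(8200, 4096)); // false
    }
}
```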

Modern CPUs contain a subsystem called the Memory Management Unit (MMU), which logically sits between the CPU and physical memory. It holds the information needed to map virtual addresses to physical memory addresses. When the CPU references a memory location, the MMU determines which page the location resides in (usually by shifting or masking certain bits of the address) and translates that virtual page number into a physical page number (this is done in hardware and is extremely fast). If there is no valid mapping for the virtual page, the MMU raises a page fault, and the operating system handles it, for example by paging the data in from disk.
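The shift-and-mask translation the MMU performs can be sketched in software. This is a toy model assuming 4096-byte pages and a hypothetical single-level page table held in a `HashMap`; real MMUs do this in hardware with multi-level tables and a translation lookaside buffer. It also shows the first advantage of virtual memory: two virtual pages mapped to the same physical page.

```java
import java.util.HashMap;
import java.util.Map;

public class AddressTranslation {
    static final int PAGE_SHIFT = 12;                       // assumes 4096-byte pages
    static final long OFFSET_MASK = (1L << PAGE_SHIFT) - 1; // low 12 bits = offset within page

    // Hypothetical page table: virtual page number -> physical page number.
    private final Map<Long, Long> pageTable = new HashMap<>();

    void map(long virtualPage, long physicalPage) {
        pageTable.put(virtualPage, physicalPage);
    }

    long translate(long virtualAddress) {
        long virtualPage = virtualAddress >>> PAGE_SHIFT; // shift off the offset bits
        long offset = virtualAddress & OFFSET_MASK;       // mask keeps only the offset
        Long physicalPage = pageTable.get(virtualPage);
        if (physicalPage == null) {
            // A real MMU would raise a page fault here for the OS to handle.
            throw new IllegalStateException("page fault: virtual page " + virtualPage);
        }
        return (physicalPage << PAGE_SHIFT) | offset;
    }

    public static void main(String[] args) {
        AddressTranslation mmu = new AddressTranslation();
        mmu.map(2, 7);
        mmu.map(3, 7); // two virtual pages share one physical page
        System.out.println(Long.toHexString(mmu.translate(0x2ABC))); // 7abc
        System.out.println(Long.toHexString(mmu.translate(0x3ABC))); // 7abc
    }
}
```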

File-oriented, block I/O

File I/O always occurs within the context of a file system. File systems and disks are very different things. Disks store data in sectors, which are usually 512 bytes each. A disk is a hardware device that knows nothing about the semantics of files; it simply provides a number of slots where data can be stored. In this respect, disk sectors are similar to memory pages: they all have a uniform size and are addressable as one large array.

A file system, on the other hand, is a higher-level abstraction: a particular method of arranging and interpreting data stored on a disk (or some other random-access, block-oriented device). The code you write almost always interacts with a file system, not with the disk directly. The file system defines abstractions such as file names, paths, files, and file attributes.

A file system organizes (on a hard disk) a sequence of uniformly sized data blocks. Some blocks hold meta-information, such as maps of free blocks, directories, and indexes. Other blocks contain actual file data. The metainformation for an individual file describes which blocks contain the file's data, where the data ends, when it was last updated, and so on. When a user process requests file data, the file system determines exactly where that data is located on disk and then takes action to bring those disk sectors into memory.
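From Java, the metainformation a file system keeps about a file can be read through `java.nio.file.Files.readAttributes`. A minimal sketch (the class name is illustrative) that reads a few basic attributes of a temporary file:

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.attribute.BasicFileAttributes;

public class FileMetadata {
    // Ask the file system for a file's metadata (size, timestamps, type).
    // This typically does not require reading the file's data blocks.
    static BasicFileAttributes attributes(Path file) throws IOException {
        return Files.readAttributes(file, BasicFileAttributes.class);
    }

    public static void main(String[] args) throws IOException {
        Path tmp = Files.createTempFile("meta-demo", ".bin");
        Files.write(tmp, new byte[1234]);
        BasicFileAttributes attrs = attributes(tmp);
        System.out.println("size=" + attrs.size());            // size=1234
        System.out.println("modified=" + attrs.lastModifiedTime());
        System.out.println("regular=" + attrs.isRegularFile()); // regular=true
        Files.delete(tmp);
    }
}
```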

File systems also have a concept of pages, whose size may be the same as the basic memory page size or a multiple of it. Typical file system page sizes range from 2048 to 8192 bytes and are always a multiple of the basic memory page size.
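Java 10 and later expose a related figure through `FileStore.getBlockSize()`. What exactly the platform reports (for example, a fundamental block size or a preferred I/O size) depends on the operating system, so treat this sketch as an approximation of the file system page size rather than a definitive value. The class name is illustrative.

```java
import java.io.IOException;
import java.nio.file.FileStore;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

public class FsBlockSize {
    // Block size of the file store containing 'path' (Java 10+).
    // Returns -1 if the platform does not report it.
    static long blockSize(Path path) throws IOException {
        FileStore store = Files.getFileStore(path);
        try {
            return store.getBlockSize();
        } catch (UnsupportedOperationException e) {
            return -1;
        }
    }

    public static void main(String[] args) throws IOException {
        Path dir = Paths.get(System.getProperty("java.io.tmpdir"));
        System.out.println("block size: " + blockSize(dir));
    }
}
```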

Performing I/O on a paged file system boils down to the following logical steps:

Determine which file system pages (groups of disk sectors) the request spans. The file contents and metadata on disk may be spread across multiple file system pages, and those pages may not be contiguous.

Allocate enough kernel-space memory pages to hold the identified file system pages.

Establish mappings between those memory pages and the file system pages on disk.

Generate a page fault for each of those memory pages.

The virtual memory system traps the page faults and schedules pageins to validate those pages by reading their contents from disk.

Once the pageins complete, the file system parses the raw data to extract the requested file content or attribute information.
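The first two steps amount to simple arithmetic. The sketch below computes which logical file system pages a byte range touches and how many memory pages are needed to hold them. It assumes fixed page sizes (8192-byte file system pages and 4096-byte memory pages in the example) and does not model where those pages live on disk, which is the file system's job. The helper names are hypothetical.

```java
public class PageSpan {
    // Logical file-system pages touched by the byte range [offset, offset + length).
    // Returns {firstPage, lastPage}. Assumes length > 0.
    static long[] pagesSpanned(long offset, long length, long fsPageSize) {
        long first = offset / fsPageSize;
        long last = (offset + length - 1) / fsPageSize;
        return new long[] {first, last};
    }

    // Memory pages needed to hold those file-system pages, assuming the
    // file-system page size is a whole multiple of the memory page size.
    static long memoryPagesNeeded(long offset, long length, long fsPageSize, long memPageSize) {
        long[] span = pagesSpanned(offset, length, fsPageSize);
        long fsPages = span[1] - span[0] + 1;
        return fsPages * (fsPageSize / memPageSize);
    }

    public static void main(String[] args) {
        // A 100-byte read that straddles the boundary between two 8192-byte FS pages.
        long[] span = pagesSpanned(8150, 100, 8192);
        System.out.println(span[0] + ".." + span[1]);                 // 0..1
        System.out.println(memoryPagesNeeded(8150, 100, 8192, 4096)); // 4
    }
}
```

Even a tiny read that crosses a page boundary costs two whole file system pages, which is why the steps above work in pages rather than bytes.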

Note that this file system data is cached like any other memory pages. On subsequent I/O requests, some or all of the file data may still be present in physical memory and can be reused without rereading it from disk.

File Locking

File locking is a mechanism by which one process can prevent other processes from accessing a file, or restrict how they access it. Although the name suggests locking the entire file (which is often done), locking is usually available at a finer granularity: regions of a file are typically locked, with granularity down to the byte level. A lock is associated with a specific file, begins at a specified byte position within that file, and covers a specified range of bytes. This is important because it allows many processes to coordinate access to specific areas of a file without preventing other processes from working elsewhere in the same file.

There are two forms of file locks: shared and exclusive. Multiple shared locks can be in effect for the same file region at the same time. An exclusive lock, on the other hand, requires that no other lock be in effect for the requested region.
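Java exposes byte-range locks through `FileChannel.lock(position, size, shared)`. The minimal sketch below (the class name is illustrative) takes an exclusive lock on bytes 0 to 99 and a shared lock on bytes 100 to 199 of the same file; because the regions do not overlap, both succeed. `FileLock.isShared()` reports whether a lock really is shared, since a platform without shared-lock support may substitute an exclusive one.

```java
import java.io.IOException;
import java.nio.channels.FileChannel;
import java.nio.channels.FileLock;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

public class RegionLocks {
    // Lock two non-overlapping regions of one file and report whether each
    // lock is shared: {exclusiveLock.isShared(), sharedLock.isShared()}.
    static boolean[] lockRegions(Path file) throws IOException {
        // Exclusive locks need a writable channel; shared locks need a readable one.
        try (FileChannel ch = FileChannel.open(file,
                StandardOpenOption.READ, StandardOpenOption.WRITE)) {
            // lock(position, size, shared); regions may extend past end of file.
            try (FileLock exclusive = ch.lock(0, 100, false);
                 FileLock shared = ch.lock(100, 100, true)) {
                return new boolean[] {exclusive.isShared(), shared.isShared()};
            }
        }
    }

    public static void main(String[] args) throws IOException {
        Path tmp = Files.createTempFile("lock-demo", ".dat");
        boolean[] result = lockRegions(tmp);
        System.out.println("exclusive lock shared? " + result[0]); // false
        System.out.println("second lock shared? " + result[1]);
        Files.delete(tmp);
    }
}
```

These locks are held on behalf of the whole JVM, so they coordinate between processes rather than between threads; requesting an overlapping lock from the same JVM throws `OverlappingFileLockException`. Whether locks are advisory or mandatory depends on the operating system.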

Streaming I/O

Not all I/O is block-oriented. There is also stream I/O, which is modeled on a pipe: the bytes of an I/O stream must be accessed sequentially. Common examples of streams include TTY (console) devices, printer ports, and network connections.

Streams are generally, but not necessarily, slower than block devices and are often the source of intermittent input. Most operating systems allow streams to be placed in non-blocking mode, which lets a process check whether input is available on a stream without getting stuck if none is. This lets a process handle input as it arrives and perform other work while the input stream is idle.
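Java's `Pipe` provides a pair of stream-style channels, and the reading end can be switched into non-blocking mode. In this minimal sketch (the class name is illustrative), `read` returns 0 immediately while no input is available instead of suspending the thread, and the loop stands in for a program doing other work until input arrives.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.util.Arrays;

public class NonBlockingPipe {
    // Returns {bytes read before anything was written, bytes read once data arrived}.
    static int[] pollPipe() throws IOException {
        Pipe pipe = Pipe.open();
        try (Pipe.SinkChannel sink = pipe.sink();
             Pipe.SourceChannel source = pipe.source()) {
            source.configureBlocking(false);   // read() must never suspend the thread
            ByteBuffer buf = ByteBuffer.allocate(16);

            int before = source.read(buf);     // no data yet: returns 0 immediately

            sink.write(ByteBuffer.wrap(new byte[] {1, 2, 3}));

            int after;
            while ((after = source.read(buf)) == 0) {
                // Input not there yet; a real program would do other useful work here.
                Thread.yield();
            }
            return new int[] {before, after};
        }
    }

    public static void main(String[] args) throws IOException {
        System.out.println(Arrays.toString(pollPipe()));
    }
}
```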

A step beyond non-blocking mode is readiness selection. It is similar to non-blocking mode (and is often built on top of it), but offloads the work of checking whether a stream is ready to the operating system. The operating system can be told to watch a collection of streams and report back to the process which of them are ready. This capability lets a process multiplex many active streams using common code and a single thread, by acting on the readiness information the operating system returns. Network servers use this technique widely to handle large numbers of network connections, and readiness selection is essential for high-volume scaling.
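In Java, readiness selection is provided by `java.nio.channels.Selector`. The minimal sketch below (the class and method names are illustrative) registers several non-blocking pipe sources with one selector, writes to just one pipe, and asks the selector which channels are ready, all from a single thread.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.util.ArrayList;
import java.util.List;

public class ReadinessSelection {
    // Register 'pipeCount' pipe sources with one Selector, make only pipe
    // 'writeTo' readable, and return the indices the Selector reports as ready.
    static List<Integer> readyPipes(int pipeCount, int writeTo) throws IOException {
        Pipe[] pipes = new Pipe[pipeCount];
        try (Selector selector = Selector.open()) {
            for (int i = 0; i < pipeCount; i++) {
                pipes[i] = Pipe.open();
                Pipe.SourceChannel source = pipes[i].source();
                source.configureBlocking(false);                   // selectable channels must be non-blocking
                source.register(selector, SelectionKey.OP_READ, i); // attach the pipe index
            }
            pipes[writeTo].sink().write(ByteBuffer.wrap(new byte[] {42}));

            // Wait (up to 1 second) until the OS reports at least one ready channel.
            selector.select(1000);

            List<Integer> ready = new ArrayList<>();
            for (SelectionKey key : selector.selectedKeys()) {
                ready.add((Integer) key.attachment());
            }
            selector.selectedKeys().clear(); // a real event loop clears handled keys
            return ready;
        } finally {
            for (Pipe p : pipes) {
                if (p != null) {
                    p.source().close();
                    p.sink().close();
                }
            }
        }
    }

    public static void main(String[] args) throws IOException {
        System.out.println(readyPipes(3, 1));
    }
}
```

A network server follows the same pattern with `SocketChannel`s, looping on `select()` and handling each ready key in turn.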

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1382

1382

52

52

Perfect Number in Java

Aug 30, 2024 pm 04:28 PM

Perfect Number in Java

Aug 30, 2024 pm 04:28 PM

Guide to Perfect Number in Java. Here we discuss the Definition, How to check Perfect number in Java?, examples with code implementation.

Weka in Java

Aug 30, 2024 pm 04:28 PM

Weka in Java

Aug 30, 2024 pm 04:28 PM

Guide to Weka in Java. Here we discuss the Introduction, how to use weka java, the type of platform, and advantages with examples.

Smith Number in Java

Aug 30, 2024 pm 04:28 PM

Smith Number in Java

Aug 30, 2024 pm 04:28 PM

Guide to Smith Number in Java. Here we discuss the Definition, How to check smith number in Java? example with code implementation.

Java Spring Interview Questions

Aug 30, 2024 pm 04:29 PM

Java Spring Interview Questions

Aug 30, 2024 pm 04:29 PM

In this article, we have kept the most asked Java Spring Interview Questions with their detailed answers. So that you can crack the interview.

Break or return from Java 8 stream forEach?

Feb 07, 2025 pm 12:09 PM

Break or return from Java 8 stream forEach?

Feb 07, 2025 pm 12:09 PM

Java 8 introduces the Stream API, providing a powerful and expressive way to process data collections. However, a common question when using Stream is: How to break or return from a forEach operation? Traditional loops allow for early interruption or return, but Stream's forEach method does not directly support this method. This article will explain the reasons and explore alternative methods for implementing premature termination in Stream processing systems. Further reading: Java Stream API improvements Understand Stream forEach The forEach method is a terminal operation that performs one operation on each element in the Stream. Its design intention is

TimeStamp to Date in Java

Aug 30, 2024 pm 04:28 PM

TimeStamp to Date in Java

Aug 30, 2024 pm 04:28 PM

Guide to TimeStamp to Date in Java. Here we also discuss the introduction and how to convert timestamp to date in java along with examples.

Java Program to Find the Volume of Capsule

Feb 07, 2025 am 11:37 AM

Java Program to Find the Volume of Capsule

Feb 07, 2025 am 11:37 AM

Capsules are three-dimensional geometric figures, composed of a cylinder and a hemisphere at both ends. The volume of the capsule can be calculated by adding the volume of the cylinder and the volume of the hemisphere at both ends. This tutorial will discuss how to calculate the volume of a given capsule in Java using different methods. Capsule volume formula The formula for capsule volume is as follows: Capsule volume = Cylindrical volume Volume Two hemisphere volume in, r: The radius of the hemisphere. h: The height of the cylinder (excluding the hemisphere). Example 1 enter Radius = 5 units Height = 10 units Output Volume = 1570.8 cubic units explain Calculate volume using formula: Volume = π × r2 × h (4

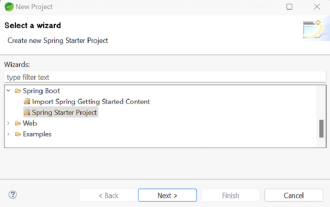

How to Run Your First Spring Boot Application in Spring Tool Suite?

Feb 07, 2025 pm 12:11 PM

How to Run Your First Spring Boot Application in Spring Tool Suite?

Feb 07, 2025 pm 12:11 PM

Spring Boot simplifies the creation of robust, scalable, and production-ready Java applications, revolutionizing Java development. Its "convention over configuration" approach, inherent to the Spring ecosystem, minimizes manual setup, allo