Java Queue usage summary

Queue Overview

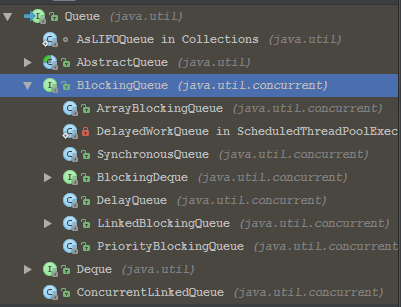

As shown in the figure, JDK provides 2 sets of implementations for concurrent queues, one is a high-performance non-blocking queue represented by ConcurrentLinkedQueue, and the other is a blocking queue represented by the BlockingQueue interface. Both inherit from Queue. A queue using a blocking algorithm can be implemented with one lock (the same lock is used for enqueueing and dequeuing) or two locks (different locks are used for enqueuing and dequeuing). Non-blocking implementation can use a loop. CAS is implemented in a way that we will analyze one by one below.

ConcurrentLinkedQueue

A queue suitable for high concurrency scenarios. It achieves high performance under high concurrency through a lock-free method (CAS+volatile). Generally, the performance of ConcurrentLinkedQueue is better than BlockingQueue.

It is an unbounded thread-safe queue based on link nodes. It follows the first-in, first-out principle. The head is the first to be added, and the tail is the most recently added. Null elements are not allowed to be added.

Note that add()/offer() are both methods of adding elements, there is no difference here; poll()/peek() are methods of removing the head element. The difference is that poll will delete elements, but peek will not.

It is important to note that due to its non-blocking nature, unlike other ordinary collections, the method of obtaining the SIZE of the queue does not cost constant time, but O(N), so we should avoid using size as much as possible () method, you can consider using isEmpty() instead.

Although the CAS+VOLATILE mechanism is used to avoid locks, what we need to understand is that this only ensures the safety of a single operation, such as peek(), but if you want to ensure the safety of multiple operations, you need to use the lock mechanism to achieve synchronization Effect.

BlockingQueue API

enqueue:

offer(E e): If the queue is not full, return true immediately; if the queue is full, return false immediately--> No blocking

put(E e): If the queue is full, it will block until the array is not full or the thread is interrupted -->Blocking

offer(E e, long timeout, TimeUnit unit): Insert an element at the end of the queue, if When the array is full, it will wait until the waiting time times out

Dequeue:

poll(): non-blocking to take data, return immediately

take(): blocking to take data

poll(long timeout, TimeUnit unit): Poll with a certain timeout to get data

ArrayBlockingQueue

An array-based blocking queue implementation, which maintains a fixed-length array internally to cache the data objects in the queue , since there is only one lock object (ReentrantLock) inside ArrayBlockingQueue, reading and writing are not separated, which means that production and consumption cannot be completely parallel. Since the length needs to be defined, it is also called a bounded queue.

LinkedBlockingQueue

A blocking queue implementation based on linked list. Similar to ArrayBlockingQueue, it also maintains a data buffer queue (composed of linked list) internally.

The reason why LinkedBlockingQueue processes concurrent data more efficiently than ArrayBlockingQueue is because the internal implementation uses 2 locks, which means that the entry into the queue and the exit from the queue are locked separately, that is, the reading and writing are separated, so that the producers and consumers can be fully reached Parallel.

No need to define the length, also called unbounded queue. Of course, when the length is not defined, you need to pay attention to the speed of the producer and the speed of the consumer, because the queue length is Integer.MAX_VALUE by default.

SynchronousQueue

A queue without buffering, the data produced by the producer will be directly obtained and consumed by the consumer. It is a lightweight blocking queue. Because it does not have capacity, in terms of usage, it can only be blocked by one thread fetching elements, waiting for another thread to put an element into the queue, and then it will be fetched immediately by the waiting thread. Go, in fact, it implements lightweight single element exchange between threads.

PriorityBlockingQueue

Priority-based blocking queue (the priority is determined by the Compator object passed in by the constructor, that is, the object passed in the queue must implement the Comparable interface).

When implementing PriorityBlockingQueue, the internal lock for controlling thread synchronization uses a fair lock, which is also an unbounded queue.

In layman's terms, it's not a first-in-first-out queue, but whoever has the lower priority gets out first. So you can think about it, whether every add/offer will be sorted? Do we need to sort them all according to priority? In fact, you can take a rough look at the add/take method and you will understand the design idea of PriorityBlockingQueue: when adding, no sorting process is performed. When taking, just select the one with the smallest priority and take it out. This avoids It takes time to sort when adding, but it saves time when taking, because it is not completely sorted, but only an element with a low priority is selected.

DelayQueue

Queue with delay time, the element in it can only be obtained from the queue when the specified delay time expires. The elements in the queue must implement the Delayed interface and there is no size limit. Essentially, it is implemented with the help of PriorityBlockingQueue, with delay time as the priority. There are many application scenarios for delay queues, such as removing cached data that has expired, processing task timeouts, closing idle connections, etc.

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

In Java remote debugging, how to correctly obtain constant values on remote servers?

Apr 19, 2025 pm 01:54 PM

In Java remote debugging, how to correctly obtain constant values on remote servers?

Apr 19, 2025 pm 01:54 PM

Questions and Answers about constant acquisition in Java Remote Debugging When using Java for remote debugging, many developers may encounter some difficult phenomena. It...

Why does the Python script not be found when submitting a PyFlink job on YARN?

Apr 19, 2025 pm 02:06 PM

Why does the Python script not be found when submitting a PyFlink job on YARN?

Apr 19, 2025 pm 02:06 PM

Analysis of the reason why Python script cannot be found when submitting a PyFlink job on YARN When you try to submit a PyFlink job through YARN, you may encounter...

How to dynamically modify the savePath parameter of @Excel annotation in easypoi when project starts in Java?

Apr 19, 2025 pm 02:09 PM

How to dynamically modify the savePath parameter of @Excel annotation in easypoi when project starts in Java?

Apr 19, 2025 pm 02:09 PM

How to dynamically configure the parameters of entity class annotations in Java During the development process, we often encounter the need to dynamically configure the annotation parameters according to different environments...

How to choose Java project management tools when learning back-end development?

Apr 19, 2025 pm 02:15 PM

How to choose Java project management tools when learning back-end development?

Apr 19, 2025 pm 02:15 PM

Confused with choosing Java project management tools for beginners. For those who are just beginning to learn backend development, choosing the right project management tools is crucial...

When Tomcat loads Spring-Web modules, does the SPI mechanism really destroy the visibility principle of Java class loaders?

Apr 19, 2025 pm 02:18 PM

When Tomcat loads Spring-Web modules, does the SPI mechanism really destroy the visibility principle of Java class loaders?

Apr 19, 2025 pm 02:18 PM

Analysis of class loading behavior of SPI mechanism when Tomcat loads Spring-Web modules. Tomcat is used to discover and use the Servle provided by Spring-Web when loading Spring-Web modules...

What is the reason why the browser does not respond after the WebSocket server returns 401? How to solve it?

Apr 19, 2025 pm 02:21 PM

What is the reason why the browser does not respond after the WebSocket server returns 401? How to solve it?

Apr 19, 2025 pm 02:21 PM

The browser's unresponsive method after the WebSocket server returns 401. When using Netty to develop a WebSocket server, you often encounter the need to verify the token. �...

Ultimate consistency in distributed systems: how to apply and how to compensate for data inconsistencies?

Apr 19, 2025 pm 02:24 PM

Ultimate consistency in distributed systems: how to apply and how to compensate for data inconsistencies?

Apr 19, 2025 pm 02:24 PM

Exploring the application of ultimate consistency in distributed systems Distributed transaction processing has always been a problem in distributed system architecture. To solve the problem...

How does node.next = node; in Java AQS source code help with garbage collection?

Apr 19, 2025 pm 02:27 PM

How does node.next = node; in Java AQS source code help with garbage collection?

Apr 19, 2025 pm 02:27 PM

cancelAcquire method in JavaAQS source code: node.next=node;...