High-performance web development: why should we reduce the number of requests, and how do we reduce them?

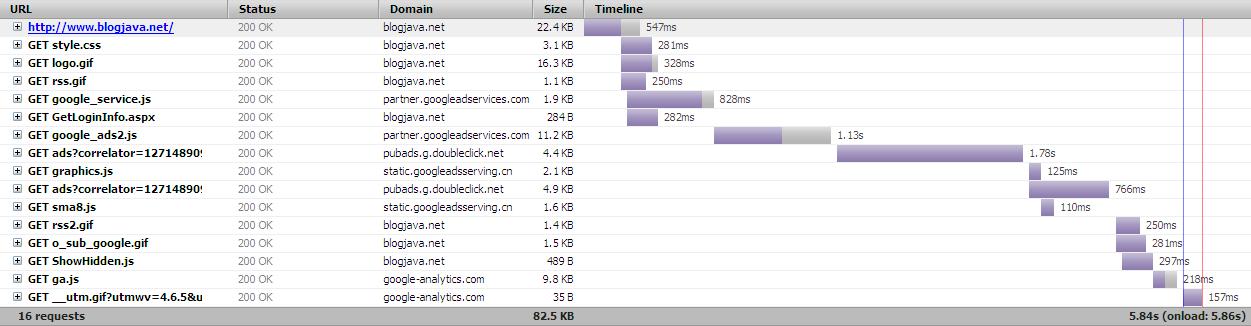

Dec 16, 2016 03:36 PM

The amount of data in HTTP request headers

Let’s first analyze the request headers to see what additional data each request carries. The following are the request headers captured for a request to Google:

Host www.google.com.hk

User-Agent Mozilla/5.0 (Windows; U; Windows NT 5.2; en-US; rv:1.9.2.3) Gecko/20100401 Firefox/3.6.3 GTBDFff GTB7.0

Accept text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8

Accept-Language zh-cn,en-us;q=0.7,en;q=0.3

Accept-Encoding gzip,deflate

Accept-Charset ISO-8859-1,utf-8;q=0.7,*;q=0.7

Keep-Alive 115

Proxy-Connection keep-alive

The response headers returned:

Date Sat, 17 Apr 2010 08:18:18 GMT

Expires -1

Cache-Control private, max-age=0

Content-Type text/html; charset=UTF-8

Set-Cookie PREF=ID=b94a24e8e90a0f50:NW=1:TM=1271492298:LM=1271492298:S=JH7CxsIx48Zoo8Nn; expires=Mon, 16-Apr-2012 08:18:18 GMT; path=/; domain=.google.com.hk

Set-Cookie NID=33=EJVyLQBv2CSgpXQTq8DLIT2JQ4aCAE9YKkU2x-h4hVw_ATrGx7njA69UUBMbzVHVnkAOe_jlGGzOoXhQACSFDP1i53C8hWjRTJd0vYtRNWhGYGv491mwbngkT6LCYbvg; expires=Sun, 17-Oct-2010 08:18:18 GMT; path=/; domain=.google.com.hk; HttpOnly

Content-Encoding gzip

Server gws

Content-Length 4344

The request header sent here is about 420 bytes, and the returned response header is about 600 bytes.

As you can see, every request carries some extra information with it (and this request does not even include a cookie). When the requested resource is very small, such as an icon of less than 1 KB, the header data may be larger than the icon itself.

So the more requests there are, the more data is transmitted over the network, and the slower the page loads.

In fact, the extra data carried by each request is the smaller problem. After all, the amount of data a request can carry is limited.
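To make the header overhead concrete, here is a small back-of-the-envelope sketch. The 420 and 600 byte figures are the approximate sizes quoted above; the icon count and icon size are hypothetical numbers chosen only for illustration.

```python
# Approximate header sizes quoted in the article (bytes).
REQUEST_HEADER_BYTES = 420
RESPONSE_HEADER_BYTES = 600


def header_overhead(body_bytes: int, requests: int = 1) -> dict:
    """Return header bytes, body bytes and the header share of all traffic."""
    header_bytes = (REQUEST_HEADER_BYTES + RESPONSE_HEADER_BYTES) * requests
    total = header_bytes + body_bytes
    return {
        "header_bytes": header_bytes,
        "body_bytes": body_bytes,
        "header_share": header_bytes / total,
    }


# Hypothetical example: twenty 500-byte icons fetched one by one...
separate = header_overhead(body_bytes=20 * 500, requests=20)
# ...versus the same bytes fetched as a single merged resource.
merged = header_overhead(body_bytes=20 * 500, requests=1)

print(f"separate: {separate['header_bytes']} header bytes ({separate['header_share']:.0%} of traffic)")
print(f"merged:   {merged['header_bytes']} header bytes ({merged['header_share']:.0%} of traffic)")
```

With these assumed sizes, the headers outweigh the icons themselves when each icon is requested separately.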

Overhead of HTTP connections

Compared with the extra data in the request headers, the overhead of the HTTP connection is more serious. Let’s first look at the stages between the user entering a URL and the content being downloaded to the client:

1. Domain name resolution

2. Open TCP connection

3. Send request

4. Waiting (mainly including network delay and server processing time)

5. Downloading resources
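The five stages above can be measured roughly with a raw socket. The following is only a sketch under simplifying assumptions: plain HTTP (no TLS), a single HTTP/1.0 GET, and no redirects. The function name `time_request` and its return keys are hypothetical, chosen for this example.

```python
import socket
import time
from urllib.parse import urlsplit


def time_request(url: str, timeout: float = 10.0) -> dict:
    """Time each stage of one plain-HTTP GET request, in seconds."""
    parts = urlsplit(url)
    host = parts.hostname
    port = parts.port or 80
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query

    t0 = time.perf_counter()
    # 1. Domain name resolution
    info = socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)[0]
    family, socktype, proto, _canonname, address = info
    t1 = time.perf_counter()

    sock = socket.socket(family, socktype, proto)
    sock.settimeout(timeout)
    try:
        # 2. Open TCP connection
        sock.connect(address)
        t2 = time.perf_counter()

        # 3. Send request
        request = f"GET {path} HTTP/1.0\r\nHost: {parts.netloc}\r\nConnection: close\r\n\r\n"
        sock.sendall(request.encode("ascii"))
        t3 = time.perf_counter()

        # 4. Waiting: until the first bytes of the response arrive
        first = sock.recv(4096)
        t4 = time.perf_counter()

        # 5. Downloading: read until the server closes the connection
        size = len(first)
        while chunk := sock.recv(4096):
            size += len(chunk)
        t5 = time.perf_counter()
    finally:
        sock.close()

    return {
        "dns": t1 - t0,
        "connect": t2 - t1,
        "send": t3 - t2,
        "wait": t4 - t3,
        "download": t5 - t4,
        "bytes": size,  # headers plus body as received
    }
```

Calling `time_request("http://example.com/")` returns one timing per stage, which makes it easy to compare the waiting time with the download time for a small resource.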

Many people may think that most of the time for each request is spent in the download phase.

You may be surprised when you look at the picture above: the time spent in the waiting stage is far longer than the actual download time. The graph above tells us:

1. Most of the time spent on each request goes to the other stages, not to downloading the resource.

2. No matter how small the resource is, the other stages still take a lot of time; only the download stage gets shorter (see the 6th resource in the picture above, which is only 284 bytes).

Given these two observations, how should we optimize? By reducing the number of requests, which cuts both the cost of the other stages and the amount of data transferred over the network.
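A very rough model illustrates why fewer requests help. It assumes every request pays a fixed overhead (DNS, connect, send, wait) and that the browser runs a limited number of requests in parallel. All default values below are hypothetical, not measurements.

```python
import math


def estimated_load_time(
    requests: int,
    total_bytes: int,
    overhead_s: float = 0.2,           # assumed fixed cost per request, seconds
    bandwidth_bytes_s: float = 1_000_000,  # assumed download speed
    parallel: int = 6,                 # assumed parallel connections
) -> float:
    """Estimate load time: one overhead per round of parallel requests plus raw transfer time."""
    rounds = math.ceil(requests / parallel)
    return rounds * overhead_s + total_bytes / bandwidth_bytes_s


# The same 100 KB delivered as 30 small files or as 1 merged file.
many = estimated_load_time(requests=30, total_bytes=100_000)
one = estimated_load_time(requests=1, total_bytes=100_000)
print(f"30 requests: {many:.2f}s, 1 request: {one:.2f}s")
```

In this model the transfer time is identical in both cases; only the per-request overhead changes.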

How to reduce the number of requests

1. Merge files

Merging files means combining many JS files into one file and many CSS files into one file. Many people already use this method, so I will not describe it in detail here.

I recommend just one merging tool: YUI Compressor, which is provided by Yahoo.

http://developer.yahoo.com/yui/compressor/
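As an illustration of the merging step only (not of YUI Compressor itself, which also minifies), here is a minimal sketch that concatenates several files into one. The function name `merge_files` is hypothetical.

```python
from pathlib import Path


def merge_files(sources: list[Path], target: Path) -> str:
    """Concatenate text files in the given order, write them to target, return the merged text."""
    parts = []
    for src in sources:
        text = src.read_text(encoding="utf-8")
        # Label each part and end it with a newline so that
        # adjacent files cannot run together on one line.
        parts.append(f"/* {src.name} */\n{text.rstrip()}\n")
    merged = "\n".join(parts)
    target.write_text(merged, encoding="utf-8")
    return merged
```

The `/* ... */` label is valid in both JS and CSS, so the same helper can be used once for the scripts and once for the style sheets. The order of `sources` matters, since later scripts may depend on earlier ones.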

2. Merge images

This uses CSS sprites, which display different images by controlling the position of the background image. This technique is also widely used, so I won’t describe it in detail. I recommend an online tool for merging pictures: http://csssprites.com/
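The idea behind sprites can be sketched by generating the CSS rules for a merged image. The class naming scheme, the file name `sprite.png`, and the icon coordinates are assumptions made for this example.

```python
def sprite_css(sheet_url: str, icons: dict[str, tuple[int, int, int, int]]) -> str:
    """Build one CSS rule per icon; each icon is (x, y, width, height) in pixels within the sheet."""
    rules = []
    for name, (x, y, width, height) in icons.items():
        rules.append(
            f".icon-{name} {{\n"
            # Shift the sheet left/up so the wanted icon shows through the box.
            f"  background: url({sheet_url}) no-repeat {-x}px {-y}px;\n"
            f"  width: {width}px;\n"
            f"  height: {height}px;\n"
            f"}}"
        )
    return "\n".join(rules)


# Two hypothetical 16x16 icons placed side by side in one image.
print(sprite_css("sprite.png", {"home": (0, 0, 16, 16), "mail": (16, 0, 16, 16)}))
```

Both icons are served by a single request for `sprite.png`; only the `background-position` offsets differ between the rules.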

3. Merge JS and CSS into one file

The first method above only covers merging several JS files into one JS file and several CSS files into one CSS file. How do you merge CSS and JS into a single file? See my other article:

http://www.blogjava.net/BearRui/archive/2010/04/18/combin_css_js.html

4. Use image maps

An image map merges multiple pictures into one picture and then uses the HTML map element to define clickable regions on it.
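In HTML, an image map is normally written as a `<map>` element with `<area>` children, referenced from the image through its `usemap` attribute. A minimal sketch that generates such markup follows; the function name, file name, and coordinates are hypothetical.

```python
from html import escape


def image_map(image_url: str, map_name: str, areas: list) -> str:
    """Return <img> and <map> markup; each area is (label, href, (left, top, right, bottom))."""
    lines = [
        f'<img src="{escape(image_url)}" usemap="#{escape(map_name)}" alt="">',
        f'<map name="{escape(map_name)}">',
    ]
    for label, href, (left, top, right, bottom) in areas:
        lines.append(
            f'  <area shape="rect" coords="{left},{top},{right},{bottom}" '
            f'href="{escape(href)}" alt="{escape(label)}">'
        )
    lines.append("</map>")
    return "\n".join(lines)


# A hypothetical navigation bar: one image, two clickable regions.
print(image_map("nav.png", "nav", [
    ("Home", "/", (0, 0, 50, 20)),
    ("Mail", "/mail", (50, 0, 100, 20)),
]))
```

The navigation bar then costs one image request instead of one request per button.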

All of the above methods have pros and cons, and you can choose different ones for different situations. For example, embedding images with data URIs reduces the number of requests but increases the page size.

That is why Microsoft’s Bing search embeds images with data URIs on a user’s first visit and then lazily loads the real images in the background. Subsequent visits use the cached images directly instead of the data URIs.
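The size trade-off of data URIs is easy to see in a short sketch. The helper name `to_data_uri` is hypothetical, and the bytes used below are placeholders rather than a real image.

```python
import base64


def to_data_uri(data: bytes, mime_type: str = "image/png") -> str:
    """Encode raw bytes as a base64 data URI that can be placed in src or url()."""
    encoded = base64.b64encode(data).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


# 284 placeholder bytes standing in for a small icon.
icon = bytes(range(256)) + bytes(28)
uri = to_data_uri(icon)
print(f"raw: {len(icon)} bytes, embedded: {len(uri)} characters")
```

Base64 turns every 3 bytes into 4 characters, so the embedded form is about a third larger than the raw file, which is the page-size cost mentioned above.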
