SQL Server high-concurrency and big data storage solutions

As the number of users grows and daily activity and peak load climb sharply, database performance comes under heavy pressure. This article shares the database optimization plan used on a real platform with a peak of 100,000+. Comments and discussion are welcome.

Case: Game platform.

1. Solve high concurrency

How the server maintains and handles connections when the number of client connections peaks is not discussed here. When many write requests reach the database at once, several tables have to be inserted into, and some of those tables store tens of millions of rows per day. As the data accumulates, the traditional approach of writing synchronously is clearly not viable. In our experiments, asynchronous insertion improved throughput considerably, at the cost of some real-time freshness when reading the data.

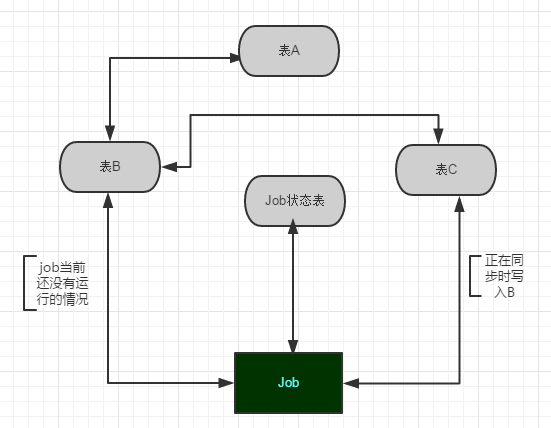

There are many ways to write asynchronously. The current approach uses a scheduled job to move data from a temporary table into the real table at regular intervals (5 min, 10 min, and so on, depending on requirements).

1. The original table A is the table that reads actually use.

2. Create tables B and C with the same structure as A to stage data in transit. The synchronization path is C->B->A.

3. Create the job Job1 that synchronizes the data, plus a table that records Job1's running status. The most important step during synchronization is to check Job1's current status: if B is currently being synchronized to A, data arriving from the server is stored in C and then imported into B, and that batch is moved to A the next time the job runs. This flow is shown in Figure 1.

At the same time, for safety and easier troubleshooting, a stored procedure that logs job activity for the entire database instance should check job execution results at a shorter interval than the jobs themselves. If a job fails abnormally, the relevant people should be notified promptly through another channel, for example by writing to an email/SMS table that a TCP notification program reads and sends from on a schedule.
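As a rough sketch of that monitoring idea, the snippet below scans a job run log for failures that have not yet been reported and queues one message per channel in an outbox table for a separate sender to pick up. The table names `job_run_log` and `notify_outbox`, their columns, and the channel names are hypothetical, and `sqlite3` again stands in for SQL Server.

```python
import sqlite3

def setup_monitoring(conn):
    # Hypothetical log of job executions; alerted = 1 once a failure has been queued.
    conn.execute("""CREATE TABLE job_run_log (
        id INTEGER PRIMARY KEY, job TEXT, succeeded INTEGER,
        message TEXT, alerted INTEGER DEFAULT 0)""")
    # Hypothetical outbox that a separate notification program polls and sends from.
    conn.execute("""CREATE TABLE notify_outbox (
        id INTEGER PRIMARY KEY, channel TEXT, body TEXT, sent INTEGER DEFAULT 0)""")

def queue_failure_alerts(conn, channels=("email", "sms")):
    # Find failed runs that have not been reported yet.
    failed = conn.execute(
        "SELECT id, job, message FROM job_run_log "
        "WHERE succeeded = 0 AND alerted = 0 ORDER BY id"
    ).fetchall()
    for run_id, job, message in failed:
        for channel in channels:
            conn.execute(
                "INSERT INTO notify_outbox (channel, body) VALUES (?, ?)",
                (channel, f"{job} failed: {message}"),
            )
        # Flag the run so the next check does not queue it again.
        conn.execute("UPDATE job_run_log SET alerted = 1 WHERE id = ?", (run_id,))
    conn.commit()
    return len(failed)
```

Keeping the check separate from the sender means a slow or failing mail/SMS gateway cannot block the check itself.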

Note: if a table receives dozens of gigabytes per day and also has to serve queries (partitioning is covered below), one of the best strategies is the following.

Synchronize B to multiple servers so that they share the query load and reduce resource contention. The resources of the whole database are limited. For example, an insert takes intent locks on the table and page and an exclusive lock on the row it locates through the clustered index. SQL Server chooses lock granularity according to the amount of data involved and needs memory to maintain those locks, which leads to competition for resources. Reads and writes should therefore be separated as far as possible, split either by business model or by fixed rules. In a platform project, the priority is to make sure data can always be inserted.

Queries over big data are unavoidable and always consume a lot of resources. For bulk deletes, switch to deleting in a loop of small batches (for example 2,000 rows at a time) so that a single operation does not hang the entire database and cause unpredictable bugs. In practice this is effective and feasible; the only cost is some storage space. Columns that hold large amounts of data can also be split out into new tables according to query needs. All of this should be tuned to each business scenario: aim for a solution that fits, not one that is flashy.
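The batched delete can be sketched like this. It is an illustration under stated assumptions, not the author's code: the table `events` and column `created_day` are made up for the example, and it runs against `sqlite3`. On SQL Server the same loop is commonly written with `DELETE TOP (2000) ...` inside a `WHILE` loop that stops when `@@ROWCOUNT` is 0.

```python
import sqlite3

def delete_in_batches(conn, cutoff, batch_size=2000):
    """Delete rows older than `cutoff` in small batches, committing after each one."""
    total = 0
    batches = 0
    while True:
        cur = conn.execute(
            "DELETE FROM events WHERE rowid IN ("
            " SELECT rowid FROM events WHERE created_day < ? LIMIT ?)",
            (cutoff, batch_size),
        )
        # Committing per batch keeps each transaction short, so locks are
        # released between batches instead of being held for the whole purge.
        conn.commit()
        if cur.rowcount == 0:
            break
        total += cur.rowcount
        batches += 1
    return total, batches
```

The function returns the number of rows deleted and the number of non-empty batches, which is handy for logging how long a purge took.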

2. Solve the storage problem

When a single table grows by dozens of gigabytes every day, the storage scheme has to be improved. Here is the approach I use to keep the system running as the data volume soars, illustrated with an example from my own environment:

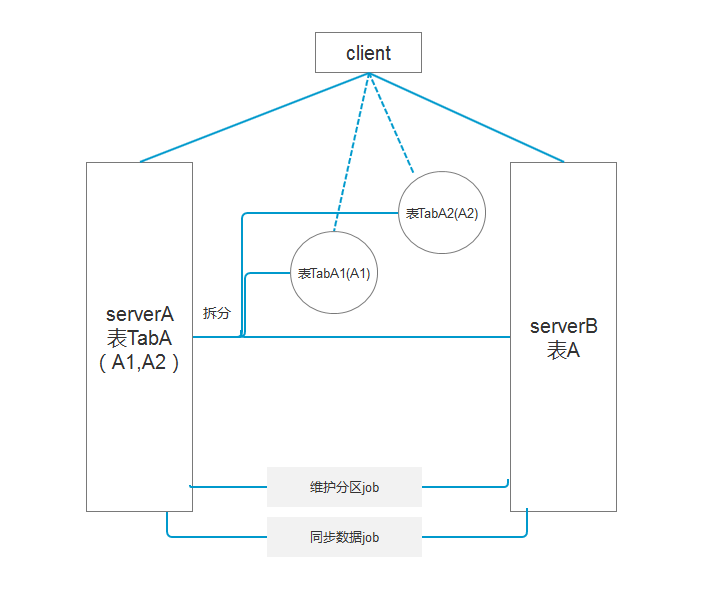

The existing table A grows by 30 GB per day and is loaded through asynchronous data synchronization. Some tables cannot have their data purged. After partitioning, you can also spread the partitions across filegroups and place the filegroups on different disks to reduce I/O contention and keep the existing resources running normally. Now add the requirement to retain 5 days of historical data:

1. Use a job to generate the partition scheme from a partition function, for example partitioning on userid or a time column;

2. Move the table onto the partition scheme. After partitioning, queries can quickly locate the relevant partition through the corresponding index;

3. Use a job that merges partitions to transfer partition data that is no longer needed into a table with the same structure and indexes, and then clear the data in that table.

This is shown in Figure 2.
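To make the 5-day retention concrete, here is a small sketch of the decision the maintenance job has to make on each run, assuming one partition per day and counting today as one of the five retained days (both are assumptions; the article does not specify them). It only computes the plan. On SQL Server the corresponding actions would typically be `ALTER TABLE ... SWITCH PARTITION` to move old data out, and `ALTER PARTITION FUNCTION ... MERGE RANGE` / `SPLIT RANGE` to remove old boundaries and add new ones.

```python
from datetime import date, timedelta

def plan_sliding_window(existing_days, today, retain_days=5):
    """Decide which daily partitions to keep, purge, and pre-create."""
    # Oldest day that still falls inside the retention window (today counts as day 1).
    oldest_kept = today - timedelta(days=retain_days - 1)
    keep = sorted(d for d in existing_days if d >= oldest_kept)
    purge = sorted(d for d in existing_days if d < oldest_kept)
    # Pre-create tomorrow's partition so incoming rows always have a home.
    tomorrow = today + timedelta(days=1)
    add = [] if tomorrow in existing_days else [tomorrow]
    return {"keep": keep, "purge": purge, "add": add}
```

For example, with partitions for January 1 to 8 and `today` set to January 8, the plan keeps January 4 to 8, purges January 1 to 3, and adds a partition for January 9.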

Capture long-running queries with SQL query tracing, and use the built-in stored procedure sp_lock or the views sys.dm_tran_locks and dblockinfo to view the type and granularity of the locks held on the current instance.

Once you have located the specific query or stored procedure, apply a fix targeted at that exact problem.

