Talking about website performance technology through 12306.cn

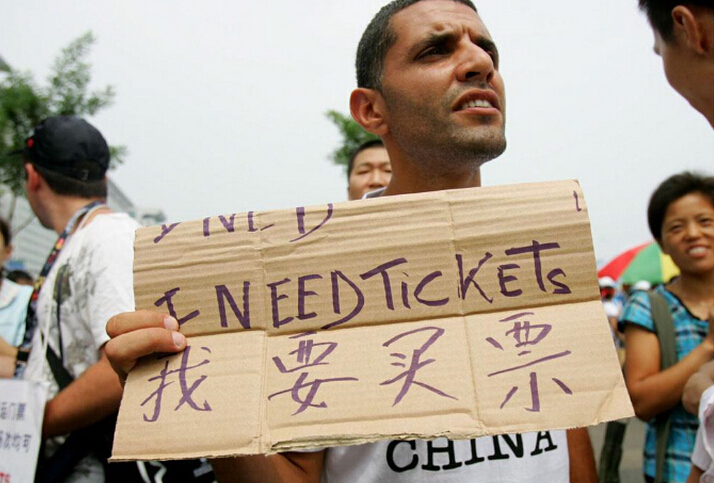

As the Spring Festival approaches, tickets on 12306.cn sell out within seconds of release, and people all over the country are complaining. Let's discuss the performance and implementation of the 12306 website. We will only discuss performance issues, not UI, user experience, or functional matters such as separating payment from ticket purchase and ordering.

Business

No technology can be separated from business needs, so before explaining the performance issues, I want to talk about the business.

1. Some people may compare this system with QQ or online games, but I think they are different. When users log in to QQ or play an online game, they mostly access their own data. A ticket booking system, by contrast, accesses centralized ticket inventory data. Don't assume ticketing is the same just because online games and QQ work: the back-end load of online games and QQ is still simpler than that of an e-commerce system.

2. Some people say that booking train tickets during the Spring Festival is like a flash sale on a website. It is indeed very similar, but if you think beyond the surface, you will find some differences. Train ticketing involves a large number of queries. More importantly, placing an order requires many consistency operations on the database. Every segment of a ticket from the starting station to the terminal must stay consistent. Buyers also have many choices of routes, trains, and times, and they keep changing how they order. A flash sale, by contrast, just sells directly, without so many queries or consistency issues.

Moreover, a flash sale can be designed to accept only the first N users' requests, without touching any back-end data and simply logging each user's order. For this kind of business, you only need to keep the available quantity in an in-memory cache, and the quantity can also be distributed: for 100 products, put 10 on each of 10 servers. No database operations are needed during the sale. Once enough orders are collected, stop the sale and write to the database in batches. Besides, flash sales involve only a few items.

Train tickets are not that simple. During the Spring Festival travel season, almost every ticket is in high demand, and people come from all over the country. There are also transfers, which require handling the inventory of several lines at once. Just imagine how difficult that is. (Taobao's Double Eleven has only 3 million users, while train ticketing has tens of millions or even hundreds of millions of users at the same instant.)

3. Some people compare this system with the Olympic ticketing system. I think it is still different. The Olympic ticketing system also collapsed as soon as it went online, but the Olympics used a lottery, so there was no first-come, first-served. Moreover, the draw happens afterwards. The system only needs to collect applications beforehand and does not need to guarantee data consistency at that time. There are no locks, and it is easy to scale horizontally.

4. The ticket booking system should be very similar to an e-commerce order system. Both need to handle inventory in three steps: 1) occupy the inventory, 2) pay (optional), and 3) deduct the inventory. This requires consistency checks, which means data must be locked under concurrency. B2C e-commerce companies basically do this asynchronously. The order you place is not processed immediately but later. Only when it is processed successfully does the system send you a confirmation email saying the order succeeded. I believe many readers have received emails saying an order was unsuccessful. This shows that data consistency is a bottleneck under concurrency.

5. The railway ticketing business is very unusual. Tickets are released suddenly, and some are far too few to go around, so everyone rushes to grab them, a business with Chinese characteristics. When tickets are released, millions or even tens of millions of people rush to query and place orders. Within a few dozen minutes, a website can receive tens of millions of visits. That is frightening. It is said that 12306's peak traffic is 1 billion PV, concentrated between 8 a.m. and 10 a.m., with tens of millions of PV per second at the peak.
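To make the flash-sale comparison in item 2 concrete, here is a minimal single-process sketch of the approach it describes. Each server holds its own slice of the stock (10 of 100 items), accepts only the requests that fit, and logs them in memory. When the sale ends, everything is written to the database in one batch. `FlashSaleNode` and `flush_to_db` are hypothetical names, and a plain list stands in for the database.

```python
class FlashSaleNode:
    """One front-end server holding its own slice of the flash-sale stock."""

    def __init__(self, node_id, quota):
        self.node_id = node_id
        self.remaining = quota      # this node's share, e.g. 10 of 100 items
        self.order_log = []         # orders are only logged, not written to a DB

    def try_buy(self, user_id):
        # Accept only while this node still has quota; no database access here.
        # (Single-threaded sketch: a real node would decrement atomically.)
        if self.remaining <= 0:
            return False
        self.remaining -= 1
        self.order_log.append(user_id)
        return True


def flush_to_db(nodes, database):
    """After the sale ends, write every node's accepted orders in one batch."""
    batch = [(n.node_id, user) for n in nodes for user in n.order_log]
    database.extend(batch)          # stand-in for a single bulk INSERT
    for n in nodes:
        n.order_log.clear()
    return len(batch)


# 100 items spread as 10 per server across 10 servers.
nodes = [FlashSaleNode(i, quota=10) for i in range(10)]
accepted = 0
for user in range(250):             # 250 users spread round-robin over the servers
    if nodes[user % len(nodes)].try_buy(user):
        accepted += 1

database = []
written = flush_to_db(nodes, database)
print(accepted, written)  # → 100 100
```

No database is touched until the sale is over, which is exactly what train tickets cannot afford: a buyer needs to know immediately whether the seat is really theirs.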

A few more words:

Inventory is a nightmare for B2C, and inventory management is quite complicated. If you don't believe it, ask any traditional or e-commerce retailer how hard it is for them to manage inventory. Otherwise, there wouldn't be so many people asking about Vanke's inventory. (You can also read the biography of Steve Jobs to learn why Tim Cook became Apple's CEO: mainly because he solved Apple's inventory-turnover problem.)
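Item 4 above breaks an order into three steps: occupy the inventory, pay (optional), and deduct the inventory. The sketch below shows that sequence under a lock. It assumes a single process in which a `threading.Lock` stands in for the database lock a real system would take. The `Inventory` class and its methods are hypothetical.

```python
import threading


class Inventory:
    """Tracks available and reserved units for one item under a single lock."""

    def __init__(self, stock):
        self.available = stock
        self.reserved = {}               # order_id -> quantity on hold
        self._lock = threading.Lock()

    def reserve(self, order_id, qty):
        # Step 1: occupy inventory. The lock makes check-and-decrement atomic.
        with self._lock:
            if qty > self.available:
                return False
            self.available -= qty
            self.reserved[order_id] = qty
            return True

    def confirm(self, order_id):
        # Step 3 (after optional payment): the hold becomes a permanent deduction.
        with self._lock:
            return self.reserved.pop(order_id, None) is not None

    def release(self, order_id):
        # Payment failed or timed out: return the held units to the pool.
        with self._lock:
            qty = self.reserved.pop(order_id, 0)
            self.available += qty
            return qty > 0


inv = Inventory(stock=50)
results = []


def buyer(i):
    results.append(inv.reserve(f"order-{i}", 1))


threads = [threading.Thread(target=buyer, args=(i,)) for i in range(200)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(sum(results), inv.available)  # → 50 0
```

The lock is what makes "check, then decrement" safe under concurrency. It is also exactly where requests serialize, which is why consistency becomes the bottleneck.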

For a website, a high page-browsing load is easy to handle. Query load is harder, but it can still be handled by caching query results. The hardest is the load of placing orders, because orders touch inventory. That is why order placement is basically done asynchronously. During last year's Double 11, Taobao handled about 600,000 orders per hour, while JD.com could support only 400,000 orders per day (even worse than 12306). Five years ago, Amazon could handle 700,000 orders per hour. Clearly, placing orders is not as high-performance an operation as we might think.
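Converting the figures quoted above to per-second rates makes the point concrete. This is plain arithmetic on the numbers in the text, nothing more:

```python
SECONDS_PER_HOUR = 3600
SECONDS_PER_DAY = 86_400

# Order volumes quoted in the text, normalized to orders per second.
orders_per_second = {
    "Taobao": 600_000 / SECONDS_PER_HOUR,   # Double 11, per hour
    "JD.com": 400_000 / SECONDS_PER_DAY,    # per day
    "Amazon": 700_000 / SECONDS_PER_HOUR,   # five years ago, per hour
}
for site, rate in orders_per_second.items():
    print(f"{site}: {rate:.1f} orders/s")
# → Taobao: 166.7 orders/s
# → JD.com: 4.6 orders/s
# → Amazon: 194.4 orders/s
```

Even the best of these is a few hundred orders per second. That is roughly two to three orders of magnitude below the 100,000 static requests per second discussed next.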

Taobao is much simpler than B2C websites because it has no warehouses. Unlike a B2C site, it does not need to update and query the inventory of the same product across N warehouses. When an order is placed, a B2C website must find a warehouse that is close to the user and has stock, which takes a lot of computation. Imagine you buy a book in Beijing and the Beijing warehouse is out of stock. The book must be transferred from a nearby warehouse, so the system checks whether Shenyang or Xi'an has it. If neither does, it looks at the Jiangsu warehouse, and so on. Taobao has none of this. Each merchant has its own inventory, which is just a number. Because inventory is distributed among merchants, it scales well.
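The warehouse search described above can be sketched as a nearest-first scan. This is a simplified illustration: the distances and stock levels are made up. A real B2C system would also weigh shipping cost, split shipments, and stock already promised to in-flight orders.

```python
from dataclasses import dataclass


@dataclass
class Warehouse:
    city: str
    distance_km: float   # distance from the customer (illustrative values)
    stock: int


def pick_warehouse(warehouses, qty):
    """Return the nearest warehouse that can fill the whole order, or None."""
    for wh in sorted(warehouses, key=lambda w: w.distance_km):
        if wh.stock >= qty:
            return wh
    return None


warehouses = [
    Warehouse("Beijing", 0, 0),        # local warehouse is out of stock
    Warehouse("Shenyang", 700, 0),
    Warehouse("Xi'an", 1100, 2),
    Warehouse("Jiangsu", 1200, 10),
]
print(pick_warehouse(warehouses, 1).city)  # → Xi'an
```

Every order pays for this scan, and under concurrency each candidate warehouse's stock must also be locked while it is checked.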

Data consistency is the real performance bottleneck. Some people say nginx can handle 100,000 static requests per second, and I don't doubt it. But those are only static requests. In theory, there is no problem as long as the bandwidth and I/O are strong enough, the server has enough computing power, and it can sustain the establishment of 100,000 concurrent TCP connections. Once data consistency is involved, however, 100,000 becomes a purely theoretical value that can never be reached.

I have said all this to show, from a business perspective, how unusual the Spring Festival railway ticket booking business truly is.

Front-end performance optimization technology

There are many common techniques for solving performance problems, and I list them below. I believe that if the 12306 website used them, its performance would improve qualitatively.

1. Front-end load balancing

DNS load balancing (usually done by the router, which redirects according to routing load) can distribute user requests evenly across multiple web servers. This reduces the request load on each web server. Because HTTP requests are short-lived jobs, a very simple load balancer is enough. Ideally, a CDN lets users connect to the nearest server (a CDN usually comes with distributed storage). (For more on load balancing, see "Back-end system load balancing".)
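As a toy illustration of spreading requests evenly, the sketch below hands out servers in rotation. This is roughly what DNS round-robin does when it rotates the order of the A records it returns. The class name is hypothetical, and the addresses come from the 192.0.2.0/24 documentation range.

```python
from collections import Counter
from itertools import cycle


class RoundRobinResolver:
    """Toy DNS-style balancer: each lookup returns the next server in turn."""

    def __init__(self, servers):
        self._next = cycle(servers)

    def resolve(self, hostname):
        # The hostname is ignored in this toy; a real resolver maps names to pools.
        return next(self._next)


resolver = RoundRobinResolver(["192.0.2.10", "192.0.2.11", "192.0.2.12"])
assignments = Counter(resolver.resolve("www.12306.cn") for _ in range(9))
print(assignments)  # each of the three servers receives 3 of the 9 lookups
```

Rotation balances request counts, not actual work, which is fine for short HTTP requests but not for the back end, as discussed later.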

2. Reduce the number of front-end connections

I took a look at 12306.cn. Opening the homepage requires more than 60 HTTP connections, and the ticket booking page makes more than 70 HTTP requests. Modern browsers issue these requests concurrently. (The number of concurrent connections per page is limited, but you can't stop users from opening multiple pages. Also, a back-end TCP connection is not released immediately when the front end disconnects.) So with only 1 million users there could be 60 million connections. After the first visit the browser cache reduces this number, but even at 20% it is still about 12 million connections, which is far too many. A login-and-query page should not need that many. Combine the JS into one file and the CSS into one file, and put the icons into one image displayed piece by piece with CSS. Keep the number of connections to a minimum.

3. Reduce web page size and increase bandwidth

Not every company dares to offer image services, because images consume a lot of bandwidth. In today's broadband era, it is hard to imagine the dial-up days, when people were afraid to put images on their pages (browsing on mobile phones is still like that). I checked the total size of the files the 12306 homepage downloads: about 900 KB. If you have visited before, the browser caches most of it, and you only need to download about 10 KB. But consider an extreme case: 1 million users visit at the same time, all for the first time, and each needs to download about 1 MB. If everything must be delivered within 120 seconds, the required bandwidth is 1M × 1 MB × 8 bits ÷ 120 s ≈ 66.7 Gbps. That is staggering. So I estimate that on that day 12306's main bottleneck was network bandwidth, which is why you may have seen no response at all. Later, the browser cache saved 12306 a lot of bandwidth, so the load immediately shifted to the back end, and the back-end data-processing bottleneck was immediately exposed. That is why you saw so many HTTP 500 errors: the back-end servers were overwhelmed.
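The bandwidth estimate above, spelled out. It uses decimal units (1 MB = 10^6 bytes); with binary units the figure comes out slightly higher:

```python
users = 1_000_000          # simultaneous first-time visitors
page_bytes = 1_000_000     # about 1 MB each (the homepage is about 900 KB)
window_s = 120             # every page must be delivered within 120 seconds

bits_per_second = users * page_bytes * 8 / window_s
print(f"{bits_per_second / 1e9:.1f} Gbps")  # → 66.7 Gbps
```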

4. Staticization of front-end pages

Turn pages and data that rarely change into static files, and gzip them. Another extreme trick is to put these static pages under /dev/shm, which is memory-backed. Files are then read straight from memory, which avoids expensive disk I/O. nginx's sendfile feature lets these static files be transferred directly inside the kernel, which can greatly improve performance.
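A minimal sketch of preparing pre-compressed static files. It assumes they are then served from a memory-backed directory such as /dev/shm. nginx's optional `gzip_static` module can serve an existing `.gz` file instead of compressing on every request. The function name and directory layout here are hypothetical, and the demo uses throwaway temporary directories.

```python
import gzip
import shutil
import tempfile
from pathlib import Path


def precompress(src_dir, dest_dir):
    """Copy static files into dest_dir and write a .gz copy next to each one."""
    src, dest = Path(src_dir), Path(dest_dir)
    written = []
    for path in src.rglob("*"):
        if not path.is_file():
            continue
        target = dest / path.relative_to(src)
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.copyfile(path, target)                       # plain copy
        with open(path, "rb") as f_in, gzip.open(f"{target}.gz", "wb") as f_out:
            shutil.copyfileobj(f_in, f_out)                 # compressed copy
        written.append(target)
    return written


# Demo in throwaway directories; on Linux the destination might be /dev/shm/static.
demo_src = Path(tempfile.mkdtemp())
demo_dst = Path(tempfile.mkdtemp())
(demo_src / "css").mkdir()
(demo_src / "css" / "site.css").write_text("body{margin:0}")
copied = precompress(demo_src, demo_dst)
print([str(p.relative_to(demo_dst)) for p in copied])
```

Compressing once at deploy time moves the CPU cost out of the request path, so each request is just a memory read.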

5. Optimize queries

Many people run the same query, so you can use a reverse proxy to merge these concurrent, identical queries. This is mainly implemented with query-result caching. The first query goes to the database, and the result is put in the cache. Subsequent queries read directly from the cache. Hash each query to form the cache key, and use NoSQL technology to implement this optimization. (The same technique can also be used for static pages.)
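The merge-identical-queries idea can be sketched as a cache keyed by a hash of the query. The first caller for a key runs the query, and concurrent callers wait for its result instead of hitting the database. This is an in-process illustration: a dict stands in for the NoSQL store, and `QueryCache`, `slow_db_query`, and the SQL text are hypothetical. Entries never expire here; the caching issues below deal with that.

```python
import hashlib
import threading
import time


class QueryCache:
    """Caches query results and merges concurrent identical queries."""

    def __init__(self, backend):
        self._backend = backend      # callable: query string -> result
        self._results = {}           # hash(query) -> cached result
        self._inflight = {}          # hash(query) -> Event for a running query
        self._lock = threading.Lock()

    @staticmethod
    def _key(query):
        return hashlib.sha256(query.encode("utf-8")).hexdigest()

    def get(self, query):
        key = self._key(query)
        with self._lock:
            if key in self._results:             # cache hit
                return self._results[key]
            event = self._inflight.get(key)
            is_leader = event is None
            if is_leader:                        # first caller will run the query
                event = threading.Event()
                self._inflight[key] = event
        if not is_leader:
            event.wait()                         # wait for the leader's result
            with self._lock:
                return self._results.get(key)    # None if the leader failed
        try:
            result = self._backend(query)
            with self._lock:
                self._results[key] = result
            return result
        finally:
            with self._lock:
                del self._inflight[key]
            event.set()                          # wake up all waiting callers


calls = []


def slow_db_query(sql):
    calls.append(sql)          # record each real database hit
    time.sleep(0.1)            # simulate an expensive query
    return f"result of {sql}"


cache = QueryCache(slow_db_query)
query = "SELECT seats FROM trains WHERE origin='Beijing' AND dest='Shanghai'"
answers = []
workers = [threading.Thread(target=lambda: answers.append(cache.get(query)))
           for _ in range(20)]
for w in workers:
    w.start()
for w in workers:
    w.join()
print(len(calls), len(answers))  # → 1 20
```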

For ticket-availability queries, I personally think the site could simply show "available" or "sold out" instead of exact numbers. This would greatly simplify the system and improve performance. It would take query load off the database, so the database could better serve people who are placing orders.

6. Caching issues

Caches can hold dynamic pages as well as query data. Caching usually raises several problems:

1) Cache updates, also called cache and database synchronization. There are two main methods. One is expiry: after a timeout, the cache entry is invalidated and the data is queried again. The other is notification: whenever the back end changes, it tells the front end to update. The former is simpler to implement but less real-time. The latter is more complex but more real-time.

2) Cache paging. Memory may not be enough, so some inactive data must be swapped out. This is very similar to an operating system's memory paging and swapping. FIFO, LRU, and LFU are classic paging algorithms. For more, see Wikipedia's article on cache algorithms.

3) Cache rebuilding and persistence. The cache lives in memory, and systems always need maintenance, so the cache will be lost at some point. When it is lost, it must be rebuilt. If the data volume is large, rebuilding is very slow and affects the production environment. Cache persistence therefore also needs to be considered.

Many powerful NoSQL stores handle all three of these caching problems.
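The first two issues, expiry-based updates and paging, can be illustrated together. The small cache below expires entries after a TTL and evicts the least recently used entry when full. This is a sketch, not what any particular NoSQL product does internally. The injectable `clock` exists only to make the demo deterministic, and `invalidate` is where a back-end change notification would hook in. Keys and values are hypothetical.

```python
import time
from collections import OrderedDict


class TTLLRUCache:
    """Entries expire after ttl_seconds; the least recently used is evicted when full."""

    def __init__(self, capacity, ttl_seconds, clock=time.monotonic):
        self.capacity = capacity
        self.ttl = ttl_seconds
        self.clock = clock
        self._data = OrderedDict()   # key -> (value, expires_at), oldest use first

    def get(self, key):
        item = self._data.get(key)
        if item is None:
            return None
        value, expires_at = item
        if self.clock() >= expires_at:
            del self._data[key]      # expired: caller must re-read the database
            return None
        self._data.move_to_end(key)  # mark as most recently used
        return value

    def put(self, key, value):
        self._data[key] = (value, self.clock() + self.ttl)
        self._data.move_to_end(key)
        while len(self._data) > self.capacity:
            self._data.popitem(last=False)   # evict the least recently used entry

    def invalidate(self, key):
        self._data.pop(key, None)    # called when the back end reports a change


now = [0.0]
cache = TTLLRUCache(capacity=2, ttl_seconds=30, clock=lambda: now[0])
cache.put("G1", "available")
cache.put("G2", "sold out")
cache.get("G1")                  # G1 becomes the most recently used entry
cache.put("G3", "available")     # over capacity: G2 is evicted
evicted = cache.get("G2")        # → None
still_there = cache.get("G1")    # → "available"
now[0] = 31.0                    # advance past the 30-second TTL
expired = cache.get("G3")        # → None
```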

Back-end performance optimization technology

We discussed front-end performance optimization above, so the front end may no longer be the bottleneck. The performance problem then moves to the back-end data. Here are some common back-end performance optimization techniques.

1. Data redundancy

Data redundancy means storing redundant copies of data in the database to avoid table joins, which are relatively expensive operations. The price is data consistency, and the risk is fairly high. Many people find NoSQL faster precisely because the data is redundant, but this poses a big risk to data consistency. It has to be analyzed case by case for each business. (Note: it is easy to port a relational database to NoSQL, but hard to move from NoSQL back to a relational database.)
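Here is a small illustration of the trade-off, using an in-memory SQLite database with hypothetical tables. The redundant column saves a join, but an update that touches only the master table leaves the copy stale.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.executescript("""
    -- Normalized: the station name lives only in `stations`.
    CREATE TABLE stations (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE tickets (id INTEGER PRIMARY KEY, station_id INTEGER);
    -- Redundant: the name is copied into every ticket row.
    CREATE TABLE tickets_flat (id INTEGER PRIMARY KEY, station_id INTEGER,
                               station_name TEXT);
    INSERT INTO stations VALUES (1, 'Station A');
    INSERT INTO tickets VALUES (100, 1);
    INSERT INTO tickets_flat VALUES (100, 1, 'Station A');
""")

JOIN_SQL = ("SELECT s.name FROM tickets t JOIN stations s ON s.id = t.station_id "
            "WHERE t.id = 100")
FLAT_SQL = "SELECT station_name FROM tickets_flat WHERE id = 100"   # no join

before = (db.execute(JOIN_SQL).fetchone()[0], db.execute(FLAT_SQL).fetchone()[0])

# Updating only the master table: the redundant copy is now inconsistent.
db.execute("UPDATE stations SET name = 'Station A (renamed)' WHERE id = 1")
after = (db.execute(JOIN_SQL).fetchone()[0], db.execute(FLAT_SQL).fetchone()[0])
print(before, after)
# → ('Station A', 'Station A') ('Station A (renamed)', 'Station A')
```

Every write path must therefore remember to update all copies, which is the consistency risk the paragraph warns about.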

2. Data Mirroring

Almost all mainstream databases support mirroring, that is, replication. The benefit of mirroring is load balancing: the load of one database is spread evenly across several machines while data consistency is preserved (for example, via Oracle's SCN). Most importantly, it also provides high availability. If one machine fails, another keeps serving.

Keeping mirrored data consistent can be complicated, so we can also partition a single item's data. This means spreading the inventory of a best-selling product across different servers. For example, if a best-selling product has 10,000 units in stock, we can set up 10 servers with 1,000 units each, just like B2C warehouses.
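Here is a sketch of splitting one hot product's stock across servers. It assumes each shard would live on its own server with its own lock; in this demo they are just list entries in one process. The fallback to other shards when one runs dry is an addition of this sketch, analogous to transferring goods from a nearby warehouse. `ShardedStock` is a hypothetical name.

```python
import random


class ShardedStock:
    """One product's stock split into per-server shards."""

    def __init__(self, total, shards):
        base, extra = divmod(total, shards)
        # e.g. 10,000 units over 10 shards -> 1,000 each; leftovers go to the first shards
        self.shards = [base + (1 if i < extra else 0) for i in range(shards)]

    def take(self, qty=1, rng=random):
        # Start at a random shard to spread contention, then fall back to the others.
        n = len(self.shards)
        start = rng.randrange(n)
        for offset in range(n):
            i = (start + offset) % n
            if self.shards[i] >= qty:
                self.shards[i] -= qty
                return i          # index of the shard that served the request
        return None               # no shard can serve this quantity: sold out

    def remaining(self):
        return sum(self.shards)


stock = ShardedStock(10_000, 10)
initial = list(stock.shards)
served = [stock.take() for _ in range(10_005)]
sold = sum(s is not None for s in served)
print(sold, stock.remaining())  # → 10000 0
```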

3. Data Partition

One problem that data mirroring cannot solve is that a table with too many records makes database operations too slow. So we partition the data. There are many ways to do this; in general they are:

1) Classify the data by some logic. For example, a train ticket booking system can split data by railway bureau, by train type, by originating station, by destination, and so on. In short, one table is split into several tables that have the same fields but hold different categories of data. These tables can then live on different machines and share the load.

2) Split the data by fields, that is, partition the table vertically. For example, put rarely changing data in one table and frequently changing data in several other tables, turning one table into several with 1-to-1 relationships. This reduces the number of fields per table and improves performance to some extent. Also, with many fields a single record may be stored across different pages, which hurts read and write performance. The downside is a lot of complicated control logic.

3) Even partitioning. The first method does not necessarily distribute data evenly, so one category may still hold a lot of data. There is therefore also the approach of splitting tables evenly by primary-key ID range.

4) Partitioning the same data. This was mentioned under data mirroring: the inventory of one product is split across different servers. For example, 10,000 units can be split across 10 servers with 1,000 each, and requests are then load-balanced among them.

Each of these partitioning methods has pros and cons, and the first is the most commonly used. Once data is partitioned, you need one or more schedulers to tell your front-end programs where to find the data. Partitioning the train ticket data by province and city would bring a meaningful, qualitative improvement to the performance of the 12306 system.
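The scheduler that tells the front end where data lives can be as simple as a lookup function. This sketch combines method 1) (one database per railway bureau) with method 3) (primary-key ranges within a bureau). The bureau names, database names, and range boundaries are all hypothetical.

```python
from bisect import bisect_right

# Method 1): one database per railway bureau (hypothetical names).
BUREAU_TO_DB = {"Beijing": "db-bj", "Shanghai": "db-sh", "Guangzhou": "db-gz"}

# Method 3): within a bureau, order tables are split by primary-key range.
RANGE_STARTS = [0, 1_000_000, 2_000_000]         # first order ID of each range
RANGE_TABLES = ["orders_0", "orders_1", "orders_2"]  # the last range is open-ended


def locate(bureau, order_id):
    """Tell the front end which database and table hold a given order."""
    db = BUREAU_TO_DB[bureau]
    idx = bisect_right(RANGE_STARTS, order_id) - 1
    if idx < 0:
        raise ValueError(f"order id {order_id} is below the first range")
    return db, RANGE_TABLES[idx]


print(locate("Beijing", 1_000_000))  # → ('db-bj', 'orders_1')
```

Keeping the mapping in one small function (or service) means partitions can be added by changing the tables above rather than every caller.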

4. Back-end system load balancing

As mentioned, data partitioning reduces load to some extent, but it cannot reduce the load on hot-selling items. For train tickets, these are the tickets on main lines between big cities. Spreading that load requires data mirroring, and mirroring in turn requires load balancing. On the back end, a router-style load balancer is hard to use, because it balances traffic, and traffic does not reflect how busy a server is. We therefore need a task distribution system that can also monitor the load of each server.

The task distribution server faces several difficulties:

Load is complex to measure. What counts as busy? High CPU? High disk I/O? High memory usage? High concurrency? A high paging rate? You may need to consider them all. This information is sent to the task allocator, which selects the least-loaded server to do the processing.

The task distribution server must queue tasks and must not lose any, so the queue also needs to be persisted. Tasks can also be assigned to compute servers in batches.

What if the task distribution server dies? High-availability techniques such as Live-Standby or failover are required. We also need to consider how the persisted task queue is transferred to other servers.
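Here is a minimal sketch of choosing the least-loaded server from reported metrics. How to combine the metrics is an assumption. In this sketch, each metric is normalized to 0..1 and combined with hypothetical fixed weights, and servers marked dead are skipped.

```python
from dataclasses import dataclass

# Hypothetical weights; a real system would tune these against measurements.
WEIGHTS = {"cpu": 0.4, "disk_io": 0.2, "memory": 0.2, "concurrency": 0.1, "paging": 0.1}


@dataclass
class ServerLoad:
    name: str
    cpu: float          # every metric normalized to 0.0 (idle) .. 1.0 (saturated)
    disk_io: float
    memory: float
    concurrency: float
    paging: float
    alive: bool = True

    def score(self):
        # Weighted sum of all metrics; lower means less busy.
        return sum(getattr(self, metric) * w for metric, w in WEIGHTS.items())


def pick_server(reports):
    """Choose the live server with the lowest combined load score."""
    live = [r for r in reports if r.alive]
    if not live:
        raise RuntimeError("no compute servers available")
    return min(live, key=ServerLoad.score)


a = ServerLoad("a", cpu=0.9, disk_io=0.1, memory=0.1, concurrency=0.1, paging=0.1)
b = ServerLoad("b", cpu=0.2, disk_io=0.9, memory=0.2, concurrency=0.2, paging=0.2)
c = ServerLoad("c", cpu=0.1, disk_io=0.1, memory=0.1, concurrency=0.1, paging=0.1,
               alive=False)
chosen = pick_server([a, b, c])
print(chosen.name)  # → b
```

Server "c" would have the lowest score, but it is reported dead, so the allocator skips it. This is the failover concern from the list above in miniature.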

I have seen many systems allocate tasks statically: some use hashing, and some simply use round-robin. Neither is good enough. First, static allocation cannot balance load well. Second, its fatal flaw is that if a compute server crashes or we need to add a new one, the allocator has to know about it. In addition, the hash has to be recalculated (consistent hashing can partially solve this problem).
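For reference, this is a compact consistent-hashing ring with virtual nodes. It shows the property that matters here: when a server is removed, only the keys that lived on it move, and every other key keeps its server. The node names are hypothetical. MD5 is used only as a stable hash function, not for security.

```python
import bisect
import hashlib


def _hash(key):
    # Stable 64-bit ring position (Python's built-in hash() is salted per process).
    return int.from_bytes(hashlib.md5(key.encode("utf-8")).digest()[:8], "big")


class ConsistentHashRing:
    def __init__(self, nodes=(), replicas=100):
        self.replicas = replicas      # virtual nodes per server smooth the spread
        self._ring = []               # sorted list of (position, node)
        for node in nodes:
            self.add(node)

    def add(self, node):
        for i in range(self.replicas):
            bisect.insort(self._ring, (_hash(f"{node}#{i}"), node))

    def remove(self, node):
        self._ring = [(pos, n) for pos, n in self._ring if n != node]

    def lookup(self, key):
        if not self._ring:
            raise LookupError("ring is empty")
        # First virtual node clockwise from the key's position, wrapping around.
        idx = bisect.bisect(self._ring, (_hash(key), ""))
        return self._ring[idx % len(self._ring)][1]


ring = ConsistentHashRing(["compute-1", "compute-2", "compute-3"])
keys = [f"task-{i}" for i in range(1000)]
before = {k: ring.lookup(k) for k in keys}
ring.remove("compute-2")              # a compute server crashes
after = {k: ring.lookup(k) for k in keys}
moved = [k for k in keys if before[k] != after[k]]
print(len(moved), "of", len(keys), "keys moved")
```

With a plain `hash(key) % N`, removing one of three servers would remap most keys. Here only compute-2's share moves, although the allocator still has to learn about the change.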

Another approach is preemptive (pull-based) load balancing. Downstream compute servers fetch tasks from the task server, so each compute server decides for itself whether it wants more work. This simplifies the system and lets us add or remove compute servers in real time. The only downside is added complexity when some tasks can only be processed on a particular server. Overall, though, this may be the better form of load balancing.
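The pull model can be sketched with Python's thread-safe `queue.Queue` standing in for the task server's queue. Each worker fetches a task only when it is free, so busier workers simply take fewer tasks. A new worker can join just by starting to call `get()`. The worker names and the squaring "work" are placeholders.

```python
import queue
import threading


def worker(name, tasks, results):
    """A compute server that pulls a task only when it is ready for one."""
    while True:
        task = tasks.get()                 # blocks until work is available
        if task is None:                   # sentinel: shut this worker down
            tasks.task_done()
            return
        results.put((name, task, task * task))  # placeholder for real work
        tasks.task_done()


tasks = queue.Queue()      # stands in for the (persistent) task server queue
results = queue.Queue()
names = ["compute-1", "compute-2", "compute-3"]
threads = [threading.Thread(target=worker, args=(n, tasks, results)) for n in names]
for t in threads:
    t.start()

for job in range(30):
    tasks.put(job)
for _ in threads:
    tasks.put(None)        # one stop signal per worker, queued after all jobs
for t in threads:
    t.join()

outputs = []
while not results.empty():
    outputs.append(results.get())
print(len(outputs))  # → 30
```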

5. Asynchrony, throttling, and batch processing

Asynchrony, throttling, and batch processing all require queuing the incoming concurrent requests.

In business terms, asynchrony generally means collecting requests and processing them later. Technically, each handler can run in parallel and scale horizontally. However, asynchrony raises several technical issues: a) returning the callee's result involves communication between processes or threads; b) if the program needs to roll back, the rollback becomes complicated; c) asynchrony usually comes with multiple threads or processes, which makes concurrency control troublesome; d) many asynchronous systems use messaging, and lost or out-of-order messages can be complex problems.

Throttling does not actually improve performance. It mainly prevents the system from being overwhelmed by traffic beyond its capacity, so it is really a protection mechanism. Throttling is generally used for systems you cannot control, such as a bank's system connected to your website.
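Throttling can be implemented in several ways, and the article does not name one. A token bucket is a common choice, sketched below with an injectable clock for a deterministic demo. Requests are admitted while tokens remain, and tokens refill at a fixed rate. The rate and capacity values are hypothetical.

```python
import time


class TokenBucket:
    """Admits about `rate` requests per second on average, with bursts up to `capacity`."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity
        self.last = clock()

    def allow(self):
        now = self.clock()
        # Refill in proportion to the time elapsed, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False     # caller should queue or reject the request


now = [0.0]
bucket = TokenBucket(rate=5, capacity=5, clock=lambda: now[0])
burst = [bucket.allow() for _ in range(8)]   # first 5 pass, then 3 are refused
now[0] = 1.0                                 # one second later: 5 new tokens
later = [bucket.allow() for _ in range(6)]
print(burst.count(True), later.count(True))  # → 5 5
```

Placed in front of, say, the bank payment interface, this keeps calls within what the external system can absorb, no matter how large the surge on 12306's side.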

Batch processing means handling a group of basically identical requests together. For example, if many people buy the same product at the same time, there is no need to write to the database once per purchase. A certain number of requests can be collected and written in one operation. This technique has many applications, such as saving network bandwidth. The MTU (Maximum Transmission Unit) is 1500 bytes on Ethernet and more than 4000 bytes on optical fiber. A packet that does not fill the MTU wastes bandwidth, because the network card driver can only read efficiently block by block. So when sending over the network, we collect enough data before doing network I/O, which is also a form of batching. The enemy of batching is low traffic, so batch systems usually set two thresholds: job volume and timeout. As soon as either condition is met, the batch is submitted for processing.
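The two-threshold rule above (job volume or timeout, whichever comes first) can be sketched as follows. The names are hypothetical. A real system would call `poll()` from a timer so that a half-full batch still goes out when traffic is low. The `flush` callable would be, for example, one bulk database write.

```python
import time


class Batcher:
    """Collects requests and flushes when the batch is full or too old."""

    def __init__(self, flush, max_items, max_wait_s, clock=time.monotonic):
        self.flush = flush            # callable that receives one list per batch
        self.max_items = max_items    # threshold 1: job volume
        self.max_wait_s = max_wait_s  # threshold 2: timeout
        self.clock = clock
        self.items = []
        self.first_at = None          # arrival time of the oldest pending item

    def add(self, item):
        if not self.items:
            self.first_at = self.clock()
        self.items.append(item)
        self.poll()

    def poll(self):
        """Flush if either threshold is met; call periodically on low traffic."""
        if not self.items:
            return
        full = len(self.items) >= self.max_items
        stale = self.clock() - self.first_at >= self.max_wait_s
        if full or stale:
            batch, self.items = self.items, []
            self.flush(batch)


now = [0.0]
batches = []
batcher = Batcher(batches.append, max_items=3, max_wait_s=2.0, clock=lambda: now[0])
for request in range(7):
    batcher.add(request)        # 0-2 and 3-5 flush on size; 6 is left waiting
now[0] = 1.0
batcher.poll()                  # only 1 s old: not flushed yet
pending_at_1s = list(batcher.items)
now[0] = 2.5
batcher.poll()                  # older than 2 s: flushed on timeout
print(batches)  # → [[0, 1, 2], [3, 4, 5], [6]]
```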

So wherever there is asynchrony, there is usually a throttling mechanism and a queue. Wherever there is a queue, there is persistence, and the system usually uses batching as well.

The "queuing system" designed by Yunfeng uses exactly this technique, and it is very similar to an e-commerce order system. The system has received your ticket purchase request but has not actually processed it yet. It throttles this large volume of requests according to its own processing capacity and processes them bit by bit. Once processing is complete, it can send an email or text message telling the user that they can now actually purchase tickets.

Here I would like to discuss Yunfeng's queuing system from the perspective of business and user needs. It seems to solve the problem technically, but it may still have business and user-facing problems that are worth thinking about deeply:

1) Queue DoS attacks. First, is this queue just a simple queue? That is not good enough, because we cannot eliminate scalpers, and a simple ticket_id is vulnerable to DoS attacks. For example, I could request N ticket_ids, enter the purchase process, and never buy. Each one ties the system up for half an hour, so I could easily keep people who really want tickets from buying them for days. Some say users should queue with their ID cards, so that purchases must be made with the same ID. This still cannot eliminate scalpers or account dealers, because they can register N accounts to queue and simply not buy. At that point scalpers only need to do one thing: make the website unusable for ordinary people, so that users can only buy through them.

2) Queue consistency. Do operations on this queue require locks? If there are locks, performance will not improve. Imagine 1 million people asking you to assign them queue positions at the same time: the queue itself becomes the performance bottleneck. You certainly cannot make it perform better than the database, so things could end up worse than now. Fighting over the database and fighting over the queue are essentially the same thing.

3) Queue waiting time. Is half an hour enough to buy a ticket? Too much? What if the user happens to be offline at that moment? If the time is short, users who cannot finish in time will complain. If it is long, the people waiting in line will complain. This approach may run into many problems in practice. Besides, half an hour is far too long to be realistic. Take 15 minutes instead: there are 10 million users, and only 10,000 can be admitted at a time. Each group of 10,000 needs 15 minutes to finish. Processing all 10 million users would take 1000 × 15 min = 15,000 min = 250 hours, about 10.4 days, and the trains would long since have departed. (I'm not talking nonsense. According to experts from the Ministry of Railways, an average of 1 million orders per day have been placed over the past few days, so processing 10 million users would take ten days. This calculation may be a bit simplistic. I just want to say that in such a low-throughput system, queuing may not solve the business problem.)

4) Queue distribution. Is there only one queue in this queuing system? That is not good enough. If the people admitted all want the same type of ticket on the same train (say, sleepers on one train), they are still fighting over tickets. The system's load may then still concentrate on one server. The better way is to queue users according to their needs, that is, by origin and destination. Then there can be multiple queues, and multiple queues can scale horizontally. This solves the performance problem, but not the problem of users waiting in line for a long time.
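The waiting-time estimate in point 3) works out as follows. This is just the article's own arithmetic:

```python
import math

users = 10_000_000          # people in the queue
admitted_at_once = 10_000   # users allowed in at any time
minutes_per_group = 15      # time each admitted group needs to finish

groups = math.ceil(users / admitted_at_once)     # 1,000 groups
total_minutes = groups * minutes_per_group       # 15,000 minutes
hours = total_minutes / 60
print(f"{hours:.0f} hours, about {hours / 24:.1f} days")  # → 250 hours, about 10.4 days
```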

I think we can definitely learn from online shopping. When users queue (place an order), collect their information and the tickets they want, and let them set purchase priorities. For example: if there is no sleeper on train A, buy a sleeper on train B; if that is unavailable too, buy a hard seat; and so on. The user pays the required amount up front, and the system then processes the order fully automatically and asynchronously. It notifies the user by text message or email whether the purchase succeeded or not. This saves half an hour of user interaction and speeds up processing automatically. It also lets the system merge identical purchase requests for batch processing, which reduces the number of database operations. Best of all, the system knows what the queued users need. It can optimize the queues and distribute users across different queues. Much like Amazon's wish list, it also gives the Ministry of Railways data it can analyze to coordinate and adjust train schedules. (Finally, the queuing (ordering) system must still store its data in the database or otherwise persist it, not just keep it in memory. Otherwise, you will be blamed when a machine goes down.)
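Here is a minimal sketch of the prepaid, priority-ordered ordering idea. It assumes everything fits in memory, although the article rightly insists that the real queue must be persisted. Orders with identical preference lists are grouped, so each group costs one inventory update per choice instead of one per user. Trains, seat classes, users, and counts are hypothetical.

```python
from collections import defaultdict


def process(orders, seats):
    """Allocate prepaid orders offline, trying each user's choices in priority order.

    orders: list of (user, [choice, ...]) in arrival order
    seats:  dict mapping choice -> remaining seats (mutated in place)
    Returns {user: choice or None}; None means sold out (refund and notify).
    """
    # Merge identical requests; groups keep the order of their first arrival.
    groups = defaultdict(list)
    for user, prefs in orders:
        groups[tuple(prefs)].append(user)

    outcome = {}
    for prefs, users in groups.items():
        pending = users
        for choice in prefs:
            # One inventory update per (group, choice), not one per user.
            n = min(seats.get(choice, 0), len(pending))
            if n:
                seats[choice] -= n
                for user in pending[:n]:
                    outcome[user] = choice
                pending = pending[n:]
        for user in pending:
            outcome[user] = None
    return outcome


seats = {("A", "sleeper"): 1, ("B", "sleeper"): 1, ("B", "hard seat"): 5}
fallback = [("A", "sleeper"), ("B", "sleeper"), ("B", "hard seat")]
orders = [("user1", fallback), ("user2", fallback), ("user3", fallback),
          ("user4", [("A", "sleeper")])]
result = process(orders, seats)
print(result)
```

Because users submit their whole fallback plan up front, no one has to sit in front of the screen retrying, and the allocation loop touches inventory far less often than per-click purchases would.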

Summary

Having written this much, let me summarize:

0) However you design it, your system must be able to scale horizontally with ease. In other words, every link in your entire data flow must scale horizontally. Then, when your system has performance problems, "add 30 times more servers" will not be a laughable suggestion.

1) The techniques above cannot be adopted overnight. Without long-term accumulated experience, it is basically hopeless. As we have seen, whichever technique you use introduces some complexity, and design is always a trade-off.

2) Centralized ticket sales are hard to handle. The techniques above could improve the performance of the ticket booking system by hundreds of times. Building sub-sites in each province and city that sell tickets separately is the best way to qualitatively improve the performance of the existing system.

3) The business model of grabbing tickets on the eve of the Spring Festival travel season, when supply falls far short of demand, is quite abnormal. It makes tens of millions or even hundreds of millions of people log in at 8 a.m. and grab tickets at the same moment. This business model is the most abnormal of the abnormal, and it guarantees that whatever the railways do, they will be criticized.

4) It is a pity to build such a large system for just one or two weeks of use and leave it idle for the rest of the year. Only the railways could do such a thing.
