Detailed explanation of MySQL database optimization

A mature database architecture is not built with high availability, high scalability, and similar characteristics from day one; its infrastructure is improved gradually as the number of users grows. This post covers the problems a MySQL database runs into over its development life cycle and the optimizations that address them. Setting the front-end application aside for now, the life cycle breaks down roughly into the following five stages:

1. Database table design

After a project is approved, the development team builds it according to the product team's requirements, and part of a development engineer's job is designing the table structure. For a database this is very important: a poor design directly hurts access speed and user experience. Many factors come into play, such as slow queries, inefficient SQL statements, improper indexing, and database contention (deadlocks). Teams with test engineers can run stress tests and find bugs. On teams without them, most developers do not think hard about whether the schema is sound in the early stage; they focus on implementing and shipping features as quickly as possible. Once the project reaches a certain level of traffic, the hidden problems surface, and by then they are much harder to fix.

2. Database deployment

This is where the operations engineer comes in. Traffic in the early stage of a project is usually modest, so a single-server deployment is enough, handling QPS (queries per second) of around 1,500. For high availability, MySQL master-slave replication combined with Keepalived can provide dual-machine hot backup. Common clustering software includes Keepalived and Heartbeat.

Dual-machine hot backup blog post: http://www.php.cn/

3. Database performance optimization

If MySQL is deployed on an ordinary server and database processing slows down as concurrent connections grow while hardware resources are still plentiful, it is time to look at the software side. How can database performance be maximized? On the one hand, multiple MySQL instances can run on a single server to make full use of its capacity. On the other hand, the database itself can be optimized: the default configurations of the operating system and of the database are often conservative and limit database performance, so they can be adjusted to handle as many connections as possible.

Optimization happens at the following three levels:

3.1 Database configuration optimization

There are two commonly used storage engines in MySQL. MyISAM does not support transactions, offers fast reads, and uses table-level locking. InnoDB supports transactions (ACID) and row-level locking, and is designed for maximum performance when processing large volumes of data.

Table lock: low overhead, coarse locking granularity, high probability of lock conflicts, and relatively low concurrency.

Row lock: high overhead, fine locking granularity, low probability of lock conflicts, and relatively high concurrency.

Why do table locks and row locks exist? Mainly to ensure data integrity. For example, if one user is modifying a table and other users want to modify it too, they must wait until the first user finishes; that is what table locks and row locks do (with row locks, users only wait when they touch the same rows). Without them, multiple users modifying a table at the same time would inevitably cause data conflicts or anomalies.
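The difference in granularity can be illustrated with a small Python analogy. This is only a sketch of the locking idea, not how MyISAM or InnoDB actually implement locks, and the class and function names are hypothetical. A "table lock" is a single lock guarding every row, so any two writers wait for each other; "row locks" give each row its own lock, so writers only wait when they touch the same row, at the cost of creating and managing many more locks.

```python
import threading


class TableLockedTable:
    """One lock for the whole table: coarse granularity, low overhead."""

    def __init__(self, n_rows: int):
        self.rows = [0] * n_rows
        self.lock = threading.Lock()

    def increment(self, row_id: int) -> None:
        with self.lock:  # a writer on any row blocks writers on every row
            self.rows[row_id] += 1


class RowLockedTable:
    """One lock per row: fine granularity, more locks to create and manage."""

    def __init__(self, n_rows: int):
        self.rows = [0] * n_rows
        self.locks = [threading.Lock() for _ in range(n_rows)]

    def increment(self, row_id: int) -> None:
        with self.locks[row_id]:  # only writers on the same row wait
            self.rows[row_id] += 1


def run(table, n_threads: int = 8, per_thread: int = 1000) -> int:
    """Have several threads update rows concurrently; return the total count."""

    def worker(offset: int) -> None:
        for i in range(per_thread):
            table.increment((offset + i) % len(table.rows))

    threads = [threading.Thread(target=worker, args=(t,)) for t in range(n_threads)]
    for th in threads:
        th.start()
    for th in threads:
        th.join()
    return sum(table.rows)


print(run(TableLockedTable(4)))  # 8000
print(run(RowLockedTable(4)))    # 8000
```

Both versions keep the data consistent (no update is lost); they differ only in how much concurrent work is forced to wait.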

Based on the above, InnoDB is the best choice, and it is the default storage engine in MySQL 5.5 and later. Each storage engine has many associated parameters; the ones that most affect database performance are listed below.

Default values of common (engine-independent) parameters:

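max_connections = 151     # maximum number of simultaneous connections; size it so that peak usage is about 80% of this limit
sort_buffer_size = 2M     # sort buffer size; only used by ORDER BY and GROUP BY; can be raised to 16M
query_cache_limit = 1M    # only results of 1M or less are cached, so large results do not flush the cache pool
query_cache_size = 16M    # query cache for SELECT results; an identical SELECT is answered straight from the cache; can be increased in multiples
open_files_limit = 1024   # open file limit; if Open_files (show global status like 'open_files') reaches or exceeds this value, clients cannot connect or the server hangs

Note that the query cache was deprecated in MySQL 5.7.20 and removed in MySQL 8.0, so the two query_cache_* settings apply only to older versions.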
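The 80% guideline for max_connections can be checked by comparing the peak number of connections actually used, which MySQL reports in the Max_used_connections status variable, with the configured limit. A minimal Python sketch, assuming both numbers have already been read from SHOW GLOBAL STATUS and SHOW VARIABLES; the function names and sample readings are hypothetical:

```python
def connection_usage_pct(max_used_connections: int, max_connections: int) -> float:
    """Peak connection usage as a percentage of max_connections."""
    if max_connections <= 0:
        raise ValueError("max_connections must be positive")
    return 100.0 * max_used_connections / max_connections


def suggested_max_connections(max_used_connections: int, target_pct: int = 80) -> int:
    """Smallest max_connections that keeps peak usage at or below target_pct percent."""
    if not 0 < target_pct <= 100:
        raise ValueError("target_pct must be in (0, 100]")
    # Integer ceiling division, so the result is never rounded below the target.
    return -(-max_used_connections * 100 // target_pct)


# Hypothetical readings: the default limit of 151 with a peak of 140 connections.
print(f"{connection_usage_pct(140, 151):.1f}%")  # 92.7%
print(suggested_max_connections(140))            # 175
```

Integer arithmetic is used for the suggestion so that values like 400 / 0.8 cannot be nudged off by floating-point rounding.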

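Default values of MyISAM parameters:

key_buffer_size = 16M    # index cache size; usually 30-40% of physical memory
read_buffer_size = 128K  # read buffer size; 16M or 32M recommended

Default values of InnoDB parameters:

innodb_buffer_pool_size = 128M       # data and index buffer size; usually 60-70% of physical memory
innodb_buffer_pool_instances = 1     # number of buffer pool instances; 4 or 8 recommended
innodb_flush_log_at_trx_commit = 1   # key parameter:
#   0 = the log is written and flushed to disk about once per second; a crash can lose about 1 second of transactions
#   1 = the log is written and flushed to disk at every transaction commit; safest, but heavy I/O, since each commit waits for the log write
#   2 = the log is written to the OS cache at every commit and flushed to disk about once per second; fast, and transactions
#       are lost only if the operating system or server fails. Recommended where data-safety requirements are not very high;
#       the performance gain is noticeable.
innodb_file_per_table = OFF          # OFF = shared tablespace, whose ibdata file keeps growing and costs some I/O performance.
#   Enabling per-table tablespaces is recommended: each table's data and indexes live in their own tablespace file,
#   so a single table can be moved between databases. (ON by default since MySQL 5.6.6.)
innodb_log_buffer_size = 8M          # log buffer size; since the log is flushed at least once per second, more than 16M is rarely needed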
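The 60-70% guideline for innodb_buffer_pool_size is easy to turn into a concrete number. The Python sketch below assumes a dedicated database server and picks 65% of RAM, rounded down to whole 128M chunks (128M is the default innodb_buffer_pool_chunk_size in MySQL 5.7.5 and later; MySQL may still adjust the final size to a multiple of chunk size × innodb_buffer_pool_instances). The function names and the 65% midpoint are assumptions for illustration.

```python
MIB = 1024 * 1024
GIB = 1024 * MIB


def suggest_buffer_pool(ram_bytes: int, fraction_pct: int = 65,
                        chunk_bytes: int = 128 * MIB) -> int:
    """fraction_pct percent of RAM, rounded down to whole chunks (at least one)."""
    if not 0 < fraction_pct < 100:
        raise ValueError("fraction_pct must be between 0 and 100")
    raw = ram_bytes * fraction_pct // 100
    return max(chunk_bytes, raw // chunk_bytes * chunk_bytes)


def my_cnf_snippet(ram_bytes: int, instances: int = 8) -> str:
    """Render the InnoDB settings discussed above as a my.cnf fragment."""
    size_mib = suggest_buffer_pool(ram_bytes) // MIB
    return "\n".join([
        "[mysqld]",
        f"innodb_buffer_pool_size = {size_mib}M",
        f"innodb_buffer_pool_instances = {instances}",
        "innodb_file_per_table = ON",
    ])


print(my_cnf_snippet(32 * GIB))
# [mysqld]
# innodb_buffer_pool_size = 21248M
# innodb_buffer_pool_instances = 8
# innodb_file_per_table = ON
```

innodb_flush_log_at_trx_commit is deliberately left out of the generated fragment, since choosing 2 over 1 is a durability trade-off rather than a sizing calculation.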

3.2 System kernel optimization

Most MySQL servers run on Linux, so some operating system parameters also affect MySQL performance. The following are suitable Linux kernel tunings.

net.ipv4.tcp_fin_timeout = 30          # how long an orphaned connection stays in FIN_WAIT_2; default 60 s
net.ipv4.tcp_tw_reuse = 1              # 1 = allow TIME_WAIT sockets to be reused for new outgoing TCP connections; 0 = off
net.ipv4.tcp_tw_recycle = 1            # 1 = fast recycling of TIME_WAIT sockets; 0 = off. Caution: breaks clients behind NAT; removed in Linux 4.12
net.ipv4.tcp_max_tw_buckets = 4096     # maximum number of TIME_WAIT sockets; beyond this the kernel destroys the excess ones and logs a warning
net.ipv4.tcp_max_syn_backlog = 4096    # maximum SYN queue length; a longer queue holds more pending connections

On Linux, if a process tries to open more file handles than the default limit of 1024, it fails with a "Too many open files" error, so the open-file limit must be raised.

Add the following lines to /etc/security/limits.conf (* means all users; a specific user can be named instead). They take effect after a reboot:

# vi /etc/security/limits.conf
* soft nofile 65535
* hard nofile 65535

To apply the limit immediately in the current shell:

# ulimit -SHn 65535
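To check which open-file limit a process actually runs with, and to raise its soft limit as far as the hard limit allows (roughly what an unprivileged `ulimit -Sn` can do; raising the hard limit itself needs root), Python's standard resource module can be used. A minimal sketch for Linux/Unix; the function name is hypothetical, and mysqld itself is additionally governed by its own open_files_limit setting.

```python
import resource


def raise_nofile_limit(target: int = 65535) -> tuple:
    """Raise this process's soft RLIMIT_NOFILE toward `target`.

    Without privileges the soft limit can only go up to the current hard
    limit, so the new value is capped there. Returns (soft, hard) afterwards.
    """
    soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    new_soft = target if hard == resource.RLIM_INFINITY else min(target, hard)
    # Never "raise" an unlimited soft limit down to a finite number.
    if soft != resource.RLIM_INFINITY and new_soft > soft:
        try:
            resource.setrlimit(resource.RLIMIT_NOFILE, (new_soft, hard))
        except (ValueError, OSError):
            pass  # some platforms cap the limit lower; keep the current value
    return resource.getrlimit(resource.RLIMIT_NOFILE)


soft, hard = raise_nofile_limit()
print(f"open files: soft={soft} hard={hard}")
```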

3.3 Hardware configuration

Add physical memory to improve file system performance. The Linux kernel allocates cache areas (system cache and data cache) from memory to hold hot data. Thanks to the file system's delayed-write mechanism, data is synchronized to disk only when certain conditions are met (for example, the cache reaches a certain percentage or the sync command is run). In other words, the more physical memory, the larger the cache and the more data it holds. Of course, some cached data will be lost if the server fails.

Replace SAS hard drives with SSDs and change the RAID level to RAID 1+0, which offers better read/write performance (IOPS) than RAID 1 and RAID 5. After all, the main pressure on a database comes from disk I/O.

4. Database architecture expansion

As business volume grows, a single database server can no longer meet demand, and it is time to add machines and build a cluster. The main idea is to spread the load of a single database, get past the disk I/O bottleneck, keep hot data in a cache, and reduce how often disk I/O is needed.

4.1 Master-slave replication and read-write separation

In production, most database operations are reads, so we deploy a one-master, multi-slave architecture: the master handles writes and is protected by dual-machine hot backup, while multiple slaves sit behind a load balancer and handle reads. Mainstream load balancers include LVS, HAProxy, and Nginx. How is read/write separation achieved? Most companies implement it in application code, which is more efficient. The other approach uses a proxy program, which is less common in enterprises; common proxies include MySQL Proxy and Amoeba. With this cluster architecture, the database's capacity for high concurrency increases greatly and the single-machine bottleneck is removed. If one slave can handle 2,000 QPS, five slaves can handle 10,000 QPS, and the database also scales out horizontally with ease.
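Read/write separation in application code can be as simple as inspecting each statement before choosing a server. A minimal Python sketch, assuming servers are identified by plain host strings (a real application would keep a connection pool per server); the class name and host names are hypothetical. It sends reads to the slaves in round-robin order and everything else to the master:

```python
import itertools


class ReadWriteRouter:
    """Pick a server for each SQL statement: writes to the master, reads to slaves."""

    READ_VERBS = {"SELECT", "SHOW"}

    def __init__(self, master: str, slaves: list):
        self.master = master
        # Round-robin over the slaves; fall back to the master if there are none.
        self._slaves = itertools.cycle(slaves or [master])

    def route(self, sql: str) -> str:
        words = sql.split()
        verb = words[0].upper() if words else ""
        normalized = " ".join(words).upper()
        # SELECT ... FOR UPDATE takes locks, so it must run on the master.
        if verb in self.READ_VERBS and "FOR UPDATE" not in normalized:
            return next(self._slaves)
        return self.master  # INSERT, UPDATE, DELETE, DDL and anything unrecognized


router = ReadWriteRouter("db-master:3306", ["db-slave1:3306", "db-slave2:3306"])
print(router.route("SELECT * FROM users WHERE id = 1"))          # db-slave1:3306
print(router.route("UPDATE users SET name = 'a' WHERE id = 1"))  # db-master:3306
print(router.route("select count(*) from orders"))               # db-slave2:3306
```

Replication to the slaves is asynchronous, so a read that must see a write made moments earlier (or any read inside a transaction) should also go to the master; this sketch does not handle that case.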

Sometimes an application is so write-heavy that a single server's write performance cannot meet business needs. Running two masters, however, leads to data inconsistency: different users of the application may hit different masters, and concurrent updates cause conflicts or inconsistencies between the two databases. With a single database, MySQL's storage engines use table locks and row locks to ensure data integrity. How can this be solved with multiple masters? One option is MySQL-MMM (Master-Master Replication Manager for MySQL), a Perl-based set of tools for managing master-master replication. Its biggest advantage is that only one database accepts writes at any given time, which effectively ensures data consistency.

4.2 Add cache

Add a cache layer in front of the database to keep hot data in memory. If the requested data is already in the cache, the result is returned from memory without querying the database, which improves read performance. Caches can be local or distributed. A local cache stores data in the server's own memory or files and is fast; a distributed cache can hold massive amounts of data and is easy to scale out. The mainstream distributed cache systems are memcached and redis. Memcached has stable performance and, because data lives in memory, it is very fast, reaching about 80,000 QPS. If you need data persistence, use redis, whose performance is no lower than memcached's.

Working process: the application first looks up the data in the cache. On a hit, it returns the cached result; on a miss, it queries the database, stores the result in the cache, and then returns it.
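This read path is commonly called cache-aside. A minimal Python sketch, assuming an in-process dict with expiry times stands in for memcached or redis and a caller-supplied function stands in for the database query; CacheAside and fake_db are hypothetical names. After a write to the database, the corresponding key is invalidated so the next read reloads it.

```python
import time
from typing import Any, Callable, Dict, Tuple


class CacheAside:
    """Check the cache first; on a miss, load from the database and cache the result."""

    def __init__(self, load_from_db: Callable[[str], Any], ttl_seconds: float = 60.0):
        self._load = load_from_db
        self._ttl = ttl_seconds
        self._store: Dict[str, Tuple[float, Any]] = {}  # key -> (expires_at, value)
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> Any:
        entry = self._store.get(key)
        if entry is not None and entry[0] > time.monotonic():
            self.hits += 1
            return entry[1]  # hit: the database is not touched
        self.misses += 1
        value = self._load(key)  # miss: query the database
        self._store[key] = (time.monotonic() + self._ttl, value)
        return value

    def invalidate(self, key: str) -> None:
        """Call after writing `key` to the database so readers do not see stale data."""
        self._store.pop(key, None)


db_calls = []


def fake_db(key: str) -> str:
    """Hypothetical stand-in for a SELECT against MySQL."""
    db_calls.append(key)
    return f"row-for-{key}"


cache = CacheAside(fake_db, ttl_seconds=30)
cache.get("user:1")  # miss: loads from the "database"
cache.get("user:1")  # hit: served from the cache
print(cache.hits, cache.misses, len(db_calls))  # 1 1 1
```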

4.3 Database splitting

Database splitting divides tables into different databases by business, such as separate web, bbs, and blog databases. If business volume is large, each resulting database can also use a master-slave architecture to further avoid overloading a single database.

4.4 Table splitting

As data grows, a single table may reach millions of rows, and queries and inserts on it take too long. How can the pressure on that one table be relieved? Consider splitting it into several smaller tables to reduce the load on each and improve processing efficiency. This is called table splitting.

Table splitting is fairly cumbersome: you must modify the SQL statements in the application code and create the additional tables by hand. A relatively simple alternative is the MERGE storage engine (which works only with identical MyISAM tables). After the split, the program operates on a master table that stores no data itself; it only holds the relationship to the sub-tables and how data is updated. The master table spreads the load across the small tables according to each query, improving concurrency and disk I/O performance.

Table splitting comes in two forms, vertical and horizontal:

Vertical split: split a table with many columns into several tables to address table width. You can move infrequently used columns into a separate table, move large columns into a separate table, or group closely related columns into one table.

Horizontal split: split a table into several tables with the same structure to address a single table holding too much data.
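A horizontal split needs a rule for which table a given row lives in. One common choice, sketched here in Python, is to take the sharding key modulo the number of tables; the naming scheme (orders_0 through orders_3) and the function name are hypothetical. String keys are hashed with CRC32 so the mapping is identical in every process, which Python's built-in hash() does not guarantee for strings.

```python
import zlib
from typing import Union


def shard_table(base: str, key: Union[int, str], n_tables: int) -> str:
    """Return the name of the sub-table that holds `key`."""
    if n_tables <= 0:
        raise ValueError("n_tables must be positive")
    # CRC32 is stable across runs, unlike hash() on str with hash randomization.
    h = key if isinstance(key, int) else zlib.crc32(key.encode("utf-8"))
    return f"{base}_{h % n_tables}"


table = shard_table("orders", 10007, 4)
print(table)  # orders_3
# The application then targets that table, e.g. with a parameterized query:
sql = f"SELECT * FROM {table} WHERE user_id = %s"
```

Because the modulus is baked into the mapping, changing the number of tables later moves most rows, so it is worth choosing n_tables with future growth in mind.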

4.5 Partition

Partitioning divides a table's data into multiple blocks, which can live on one disk or on different disks. After partitioning it is still logically a single table, but its data is spread across multiple locations, so several disks can serve different requests at the same time. This improves disk I/O performance and is relatively simple to implement.

Note: caching, database splitting, table splitting, and partitioning are mainly implemented by programmers.

5. Database maintenance

Database maintenance is the main job of operations engineers or DBAs, covering performance monitoring, performance analysis, performance tuning, database backup and recovery, and so on.

5.1 Key indicators of performance status

QPS (Queries Per Second): the number of queries the database handles per second

TPS (Transactions Per Second): the number of transactions the database handles per second

SHOW STATUS reports the server's running state in more than 300 status variables. Several of them let us calculate QPS and TPS:

Uptime: how long the server has been running, in seconds

Questions: the number of statements clients have sent to the server

Com_select: the number of SELECT statements executed

Com_insert: the number of inserts

Com_delete: the number of deletes

Com_update: the number of updates

Com_commit: the number of commits

Com_rollback: the number of rollbacks

QPS can then be calculated from Questions:

mysql> show global status like 'Questions';
mysql> show global status like 'Uptime';

QPS = Questions / Uptime

TPS can be calculated from Com_commit and Com_rollback:

mysql> show global status like 'Com_commit';
mysql> show global status like 'Com_rollback';
mysql> show global status like 'Uptime';

TPS = (Com_commit + Com_rollback) / Uptime
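Both formulas are simple ratios of cumulative counters. A minimal Python sketch, assuming the status values have already been fetched into a dict (for example from SHOW GLOBAL STATUS through any MySQL client library); the function names and the numbers below are made up for illustration. Because Questions and the Com_* counters accumulate from server start, these are averages over the whole uptime and will hide short peaks.

```python
from typing import Mapping


def average_qps(status: Mapping[str, int]) -> float:
    """QPS = Questions / Uptime (average since server start)."""
    return status["Questions"] / status["Uptime"]


def average_tps(status: Mapping[str, int]) -> float:
    """TPS = (Com_commit + Com_rollback) / Uptime (average since server start)."""
    return (status["Com_commit"] + status["Com_rollback"]) / status["Uptime"]


# Hypothetical values, shaped like the output of SHOW GLOBAL STATUS.
status = {"Questions": 1_800_000, "Uptime": 3_600,
          "Com_commit": 360_000, "Com_rollback": 3_600}
print(average_qps(status))  # 500.0
print(average_tps(status))  # 101.0
```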

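Another method calculates QPS from Com_select, Com_insert, Com_delete, and Com_update:

mysql> show global status where Variable_name in('com_select','com_insert','com_delete','com_update');

Wait one second and run the statement again. Subtract each variable's first value from its second value; the sum of these differences is the QPS.

TPS calculation:

mysql> show global status where Variable_name in('com_insert','com_delete','com_update');

TPS excludes queries, so only the insert, delete, and update counters are needed; compute their differences the same way.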
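The interval method can be sketched in Python as follows. read_status is a hypothetical callable that runs SHOW GLOBAL STATUS and returns the variables as a dict; the counter names are the ones listed above, and the snapshot values are made up. Measuring the elapsed time instead of assuming exactly one second keeps the result honest if the status query itself is slow.

```python
import time
from typing import Callable, Mapping, Tuple

QPS_VARS = ("Com_select", "Com_insert", "Com_delete", "Com_update")
TPS_VARS = ("Com_insert", "Com_delete", "Com_update")


def rate(before: Mapping[str, int], after: Mapping[str, int],
         names: Tuple[str, ...], seconds: float) -> float:
    """Total increase of the named counters, per second."""
    return sum(after[n] - before[n] for n in names) / seconds


def sample(read_status: Callable[[], Mapping[str, int]],
           interval: float = 1.0) -> Tuple[float, float]:
    """Take two snapshots about `interval` seconds apart; return (qps, tps)."""
    first = read_status()
    start = time.monotonic()
    time.sleep(interval)
    second = read_status()
    elapsed = time.monotonic() - start  # measured, so a slow status read is accounted for
    return rate(first, second, QPS_VARS, elapsed), rate(first, second, TPS_VARS, elapsed)


# Two hypothetical snapshots taken one second apart.
before = {"Com_select": 1000, "Com_insert": 200, "Com_delete": 10, "Com_update": 90}
after = {"Com_select": 1450, "Com_insert": 240, "Com_delete": 12, "Com_update": 98}
print(rate(before, after, QPS_VARS, 1.0))  # 500.0
print(rate(before, after, TPS_VARS, 1.0))  # 50.0
```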

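According to tests of these two methods by other users, Questions gives more accurate results when the database contains mostly MyISAM tables, while the Com_* method is more accurate when it contains mostly InnoDB tables.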

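5.2 Enable the slow query log

Enable MySQL's slow query log to find out which SQL statements are slow. Variables set with SET are lost when the service restarts; add the parameters to my.cnf to make them permanent.

mysql> set global slow_query_log=on;                                    # enable the slow query log
mysql> set global slow_query_log_file='/var/log/mysql/mysql-slow.log';  # location of the slow query log file
mysql> set global log_queries_not_using_indexes=on;                    # also log queries that do not use indexes
mysql> set global long_query_time=1;                                    # only log queries that take longer than 1 s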

To analyze the slow query log, you can use mysqldumpslow, which ships with MySQL; its analysis is fairly basic.

# mysqldumpslow -t 3 /var/log/mysql/mysql-slow.log    # show the top 3 slowest queries
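To see what such a summary involves, here is a simplified Python analogue: it reads a slow query log, pairs each "# Query_time:" header with the SQL that follows it, and lists the individual slowest statements. It assumes the default slow log text format and, unlike mysqldumpslow, does not group similar queries, compute averages, or filter server banner lines written after a restart; the function names are hypothetical.

```python
import re
import sys
from typing import Iterable, List, Tuple

QUERY_TIME_RE = re.compile(r"^# Query_time: ([\d.]+)")


def parse_slow_log(lines: Iterable[str]) -> List[Tuple[float, str]]:
    """Return (query_time_seconds, sql) for each entry in a slow query log."""
    entries: List[Tuple[float, str]] = []
    current_time = None
    sql_lines: List[str] = []

    def flush() -> None:
        if current_time is not None and sql_lines:
            entries.append((current_time, " ".join(sql_lines)))

    for raw in lines:
        line = raw.strip()
        match = QUERY_TIME_RE.match(line)
        if match:
            flush()  # finish the previous entry
            current_time = float(match.group(1))
            sql_lines = []
        elif line.startswith("#") or current_time is None or not line:
            continue  # "# Time:", "# User@Host:", file header, blank lines
        elif line.startswith("SET timestamp=") or line.lower().startswith("use "):
            continue  # bookkeeping statements written by the server
        else:
            sql_lines.append(line)
    flush()
    return entries


def slowest(entries: List[Tuple[float, str]], n: int = 3) -> List[Tuple[float, str]]:
    """The n entries with the longest Query_time."""
    return sorted(entries, key=lambda e: e[0], reverse=True)[:n]


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], encoding="utf-8", errors="replace") as f:
        for seconds, sql in slowest(parse_slow_log(f)):
            print(f"{seconds:9.3f}s  {sql}")
```

Usage: pass the log path as the only argument, e.g. the /var/log/mysql/mysql-slow.log file configured above.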

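You can also use Percona's pt-query-digest tool, which offers comprehensive log analysis and can process slow logs, binlogs, and general logs.

Analyze the slow query log:

pt-query-digest /var/log/mysql/mysql-slow.log

Analyze a binlog (convert it to text with mysqlbinlog first):

mysqlbinlog mysql-bin.000001 > mysql-bin.000001.sql
pt-query-digest --type=binlog mysql-bin.000001.sql

Analyze the general log:

pt-query-digest --type=genlog localhost.log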

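5.3 Database backup

Backing up the database is the most basic and most important task; neglect it and the consequences can be severe. But large databases, often hundreds of GB, take a long time to back up, so an efficient backup strategy is needed, and large databases generally use incremental backups. Common backup tools include mysqldump, mysqlhotcopy, and xtrabackup. mysqldump suits small databases: it makes logical backups, so both backup and restore take a long time. mysqlhotcopy and xtrabackup make physical backups, which are fast to back up and restore and can copy a running database without stopping the service. xtrabackup is recommended because it supports incremental backups (mysqlhotcopy handles only MyISAM and ARCHIVE tables and was removed in MySQL 5.7).

Blog post on using the Xtrabackup backup tool: http://www.php.cn/

5.4 Database repair

Sometimes a sudden power loss or abnormal shutdown corrupts MySQL tables so their data can no longer be read. MySQL ships with two tools that can repair them: myisamchk and mysqlcheck.

myisamchk: repairs MyISAM tables only, and the database must be stopped.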

Common parameters:

-f --force: force repair, overwriting old temporary files; generally not used

-r --recover: recovery mode

-q --quick: quick recovery

-a --analyze: analyze the table

-o --safe-recover: old recovery mode; if -r cannot repair the table, try this option

-F --fast: only check tables that were not closed properly

Quickly repair the weibo database:

# cd /var/lib/mysql/weibo

# myisamchk -r -q *.MYI

mysqlcheck: works with both MyISAM and InnoDB tables and does not require stopping the database (InnoDB does not support REPAIR TABLE, though, so the -r option only repairs engines such as MyISAM). To repair a single table, add the table name after the database name, separated by a space.

Common parameters:

-A --all-databases: check all databases

-r --repair: repair tables

-c --check: check tables (the default)

-a --analyze: analyze tables

-o --optimize: optimize tables

-q --quick: the fastest check or repair method

-F --fast: only check tables that were not closed properly

Quickly repair the weibo database:

mysqlcheck -r -q -uroot -p123 weibo

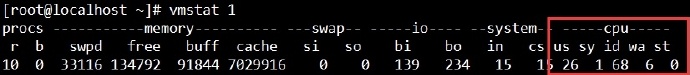

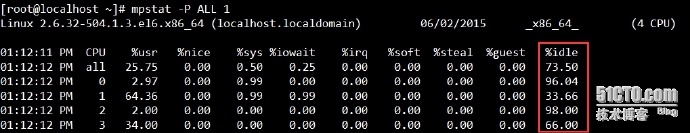

5.5 Checking CPU and I/O performance

To check CPU performance: the -P parameter selects which CPUs to report; ALL shows every CPU, or you can give a single CPU number.

To check I/O performance: the -m parameter displays values in MB (the default is KB). In the output:

%util: when it reaches 100%, the device's I/O is very busy.

await: the average time an I/O request takes, including time spent waiting in the queue; it directly affects read latency.

I/O limit: IOPS (r/s + w/s), generally around 1,200. (IOPS is the number of read and write I/O operations per second.)
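Given the r/s, w/s, and %util columns from the I/O statistics output, the IOPS figure and how close it is to the limit can be computed directly. A minimal Python sketch; the 1,200 ceiling is the rough figure quoted above rather than a property of any particular disk, and the function names and sample values are hypothetical.

```python
def iops(r_per_s: float, w_per_s: float) -> float:
    """IOPS as defined above: reads per second plus writes per second."""
    return r_per_s + w_per_s


def io_report(r_per_s: float, w_per_s: float, util_pct: float,
              iops_limit: float = 1200.0) -> dict:
    """Compare one I/O sample against an assumed IOPS ceiling."""
    total = iops(r_per_s, w_per_s)
    return {
        "iops": total,
        "pct_of_limit": 100.0 * total / iops_limit,
        "busy": util_pct >= 100.0,  # %util at 100% means the device is saturated
    }


# Hypothetical sample: r/s=650, w/s=250, %util=82
print(io_report(650, 250, 82.0))
# {'iops': 900, 'pct_of_limit': 75.0, 'busy': False}
```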

I/O bandwidth: in sequential read/write mode, the theoretical value is about 300 MB/s for a SAS hard disk and about 600 MB/s for an SSD.

These are the main optimization approaches I have summarized over three years of using MySQL. My knowledge is limited and this is not comprehensive, but it basically covers the database needs of small and medium-sized companies. Because of the limits of relational databases' original design, large companies such as BAT (Baidu, Alibaba, Tencent) that put massive amounts of data into relational databases cannot get good performance for massive-data queries and analysis. That is why NoSQL has become popular: non-relational databases handle large data volumes with high performance and make up for some shortcomings of relational databases. Gradually, most companies have moved some business data into NoSQL databases such as MongoDB and HBase, use distributed file systems such as HDFS and GFS for storage, and use Hadoop, Spark, Storm, and similar tools for massive-data computation and analysis. These are cutting-edge technologies related to operations and key areas to study on the storage side. Keep at it, everyone! If any blogger has a better optimization plan, please share it.

