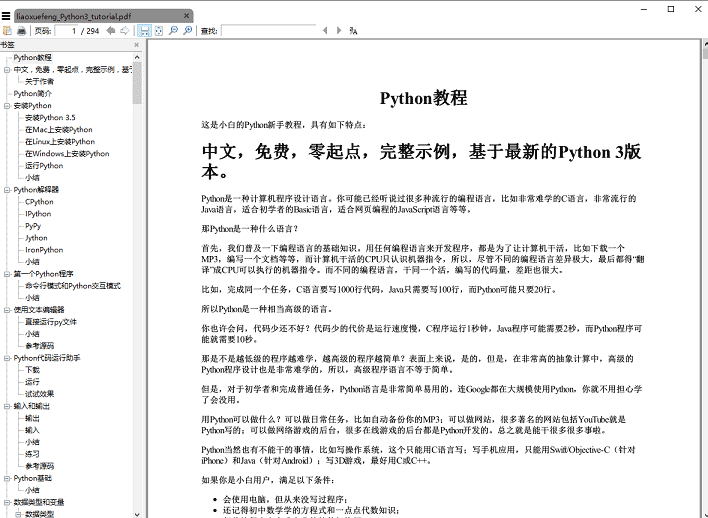

Python crawler implementation tutorial converted into PDF e-book


This article shares the method and code for using a Python crawler to convert "Liao Xuefeng's Python Tutorial" into a PDF. Readers who need it can use it as a reference.

Few languages make writing a crawler as easy as Python does. The Python community provides a dazzling number of crawler tools, and with libraries that can be used directly you can write a crawler in minutes. Today's goal is to write a crawler that downloads Liao Xuefeng's Python tutorial and turns it into a PDF e-book for offline reading.

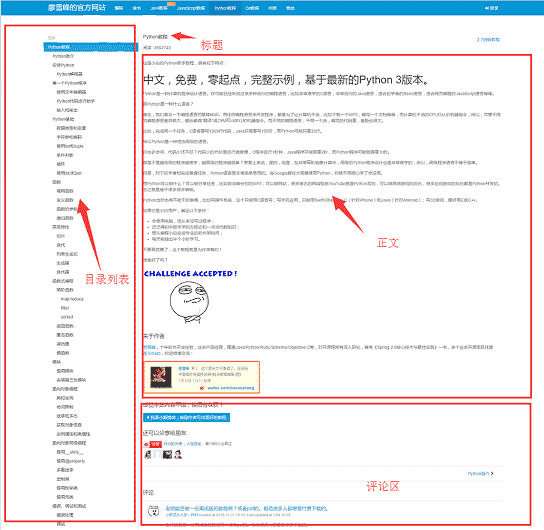

Before writing the crawler, let's analyze the page structure of the website. The left side of the page is the table of contents of the tutorial, and each URL in it corresponds to one article on the right. The upper right holds the article title and the middle holds the article body. The body is what we care about: the data to crawl is the body of every page. Below the body is the user comment area, which is of no use to us and can be ignored.

Tool preparation

Once the basic structure of the website is clear, you can prepare the packages the crawler depends on. requests and beautifulsoup are the two workhorses of crawling: requests handles the network requests and beautifulsoup manipulates the HTML data. With these two we can work quickly and do not need a crawler framework such as scrapy, which for a small program is like killing a chicken with a sledgehammer. Since the HTML files are to be converted to PDF, a library for that is needed as well. wkhtmltopdf is a very good tool that converts HTML to PDF on multiple platforms, and pdfkit is the Python wrapper for wkhtmltopdf. First install the following dependency packages. Note that the Beautiful Soup package on PyPI is named beautifulsoup4, and that the code in this article uses the html5lib parser, which is installed separately.

    pip install requests
    pip install beautifulsoup4
    pip install html5lib
    pip install pdfkit

Install wkhtmltopdf

On Windows, download the stable version from the wkhtmltopdf official website and install it. After installation, add the program's executable directory to the system PATH environment variable. Otherwise pdfkit cannot find wkhtmltopdf and reports the error "No wkhtmltopdf executable found". On Ubuntu and CentOS it can be installed directly from the command line:

    $ sudo apt-get install wkhtmltopdf  # ubuntu
    $ sudo yum install wkhtmltopdf      # centos
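Before running the crawler it can help to confirm that the executable really is reachable. The following is a minimal sketch using only the standard library; the helper name `find_wkhtmltopdf` is made up for illustration and is not part of pdfkit.

```python
import shutil


def find_wkhtmltopdf(name="wkhtmltopdf"):
    """Return the full path of the executable, or None if it is not on PATH."""
    return shutil.which(name)


if __name__ == "__main__":
    path = find_wkhtmltopdf()
    if path is None:
        print("wkhtmltopdf was not found on PATH; pdfkit will not be able to convert")
    else:
        print("wkhtmltopdf found at", path)
```

If the executable is installed somewhere that is not on PATH, pdfkit can also be given the location explicitly by building `pdfkit.configuration(wkhtmltopdf=path)` and passing it as the `configuration` argument of `pdfkit.from_file`.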

Crawler implementation

With everything ready you can start coding, but it is worth sorting out the approach first. The purpose of the program is to save the HTML body of every URL locally, and then use pdfkit to convert these files into a single PDF file. Let's split the task: first save the HTML body of one URL locally, then find all the URLs and perform the same operation on each.

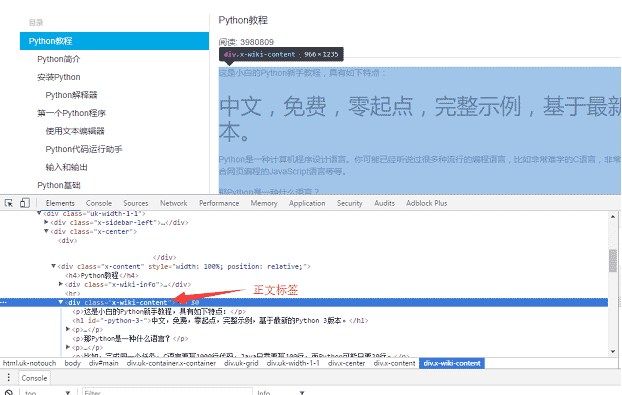

Open the page in Chrome and press F12 to find the tag that holds the body of the page. It is the element carrying the class x-wiki-content, and its content is the body of the article. After loading the whole page with requests, you can use beautifulsoup to work on the HTML DOM and extract the body.

The implementation is as follows: use the soup.find_all function to find the body tag, then save its content to the file a.html.

def parse_url_to_html(url):

response = requests.get(url)

soup = BeautifulSoup(response.content, "html5lib")

body = soup.find_all(class_="x-wiki-content")[0]

html = str(body)

with open("a.html", 'wb') as f:

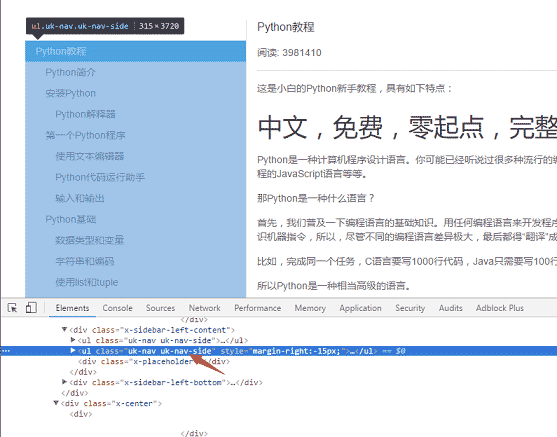

f.write(html)The second step is to parse out all the URLs on the left side of the page. Use the same method to find the left menu label <ul >

    import pdfkit


    def get_url_list():
        """Return the URLs of all articles listed in the table of contents."""
        response = requests.get("http://www.liaoxuefeng.com/wiki/0014316089557264a6b348958f449949df42a6d3a2e542c000")
        soup = BeautifulSoup(response.content, "html5lib")
        # The second "uk-nav uk-nav-side" element is the tutorial menu
        menu_tag = soup.find_all(class_="uk-nav uk-nav-side")[1]
        urls = []
        for li in menu_tag.find_all("li"):
            url = "http://www.liaoxuefeng.com" + li.a.get('href')
            urls.append(url)
        return urls


    def save_pdf(htmls, file_name):
        """Convert all the HTML files into one PDF file named file_name."""
        options = {
            'page-size': 'Letter',
            'encoding': "UTF-8",
            'custom-header': [
                ('Accept-Encoding', 'gzip')
            ]
        }
        pdfkit.from_file(htmls, file_name, options=options)
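The pieces above still have to be tied together: one HTML file per URL, a single conversion, and cleanup afterwards. The following is a rough sketch of such a driver, not the author's code. The name `build_pdf` and its two callable parameters are hypothetical: `fetch_body_html(url)` is assumed to return the body HTML as a string, and `convert(paths, output_path)` is assumed to turn a list of HTML files into one PDF.

```python
import os
import tempfile


def build_pdf(urls, fetch_body_html, convert, output_path):
    """Write one HTML file per URL, convert them together, then clean up.

    fetch_body_html and convert are supplied by the caller (hypothetical
    stand-ins for the fetching and pdfkit steps described in the article).
    Returns the number of pages written.
    """
    # The temporary directory and every file in it are removed on exit,
    # even if fetching or converting raises an exception.
    with tempfile.TemporaryDirectory(prefix="crawl_") as tmp_dir:
        paths = []
        for index, url in enumerate(urls):
            # Zero-padded names keep the files in table-of-contents order
            path = os.path.join(tmp_dir, "{:03d}.html".format(index))
            with open(path, "w", encoding="utf-8") as f:
                f.write(fetch_body_html(url))
            paths.append(path)
        convert(paths, output_path)
    return len(paths)
```

With the functions from this article, `convert` could for instance be `lambda paths, out: pdfkit.from_file(paths, out, options=options)`, since `pdfkit.from_file` accepts a list of input files. Passing the two steps in as parameters also makes it possible to try the driver without network access.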

Summary

The total amount of code adds up to fewer than 50 lines. But wait: the code given above omits some details. For example, it does not show how to get the title of the article. The img tags in the body use relative paths, so to display the images properly in the PDF you need to change the relative paths to absolute paths. The temporary HTML files that were saved must also be deleted.
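As an illustration of the image-path detail, here is one possible way to rewrite relative img paths before saving the HTML. It is a simplified sketch using a regular expression and the standard library's `urljoin`; the function name `absolutize_img_src` is made up for this example, and a regex will not cover every HTML variation (unquoted attributes, for instance).

```python
import re
from urllib.parse import urljoin

# Matches the src attribute of an <img> tag: prefix, quote character, value
IMG_SRC = re.compile(r'(<img\b[^>]*?\ssrc=)(["\'])(.*?)\2', re.IGNORECASE)


def absolutize_img_src(html, base_url):
    """Return html with every quoted <img src> resolved against base_url."""
    def repl(match):
        prefix, quote, src = match.group(1), match.group(2), match.group(3)
        # urljoin leaves URLs that are already absolute unchanged
        return prefix + quote + urljoin(base_url, src) + quote
    return IMG_SRC.sub(repl, html)
```

The function would be applied to the body string before it is written to disk, with the page's own URL as `base_url`.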
