Developing an Onmyoji mini program from 0 to 1 in 24 hours

Preface

Everyone who plays Onmyoji knows that seal missions refresh twice a day, at 5 a.m. and 6 p.m. The most annoying part of doing them each time is finding the dungeons and mystery clues for the various monsters. Onmyoji offers NetEase Genie for some data queries, but the experience is so poor that most people resort to search engines to look up monster distribution and mystery clues.

Using a search engine every time is very inconvenient, so I decided to write a mini program for querying the distribution of Onmyoji monsters, aiming to make lookups faster and leave more time for farming dog food and Yuhun.

I happened to have two free days last weekend, so I got started right away.

1. Concept and design

1.1 Concept

1. The main function of the mini program is querying, so the homepage should be as concise as a search engine's, and a search box is definitely needed;

2. The homepage shows popular searches, and the most-searched shikigami are cached;

3. Search supports exact matching or single-character matching;

4. Clicking a search result jumps directly to the shikigami details page;

5. The shikigami details page should include the shikigami's illustration, name, rarity, and haunted locations, with the locations sorted from most to fewest monsters;

6. Add data error reporting and suggestions;

7. Support the user's personal search history;

8. The mini program was finally named Shikigami Hunter after considering its functions (this was actually decided after development was finished).
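The article does not say how the back end implements exact and single-character matching. The following is only a minimal sketch under the assumption that "single-character matching" means a plain substring test, with exact matches ranked first. The function name `search` and the in-memory list are hypothetical; the shikigami names are taken from the sample data later in this article.

```python
def search(names, query):
    """Return names matching the query: exact matches first, then substring matches."""
    query = query.strip()
    if not query:
        return []
    # Exact matches are ranked ahead of partial matches.
    exact = [name for name in names if name == query]
    # A single character (or any fragment) matches every name that contains it.
    partial = [name for name in names if name != query and query in name]
    return exact + partial


if __name__ == "__main__":
    shikigami = ["天邪鬼绿", "提灯小僧", "灯笼鬼", "九命猫"]
    print(search(shikigami, "灯"))
    print(search(shikigami, "九命猫"))
```

In a real service the same idea would more likely be a database query than a list scan; the list version only shows the ranking rule.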

1.2 Design

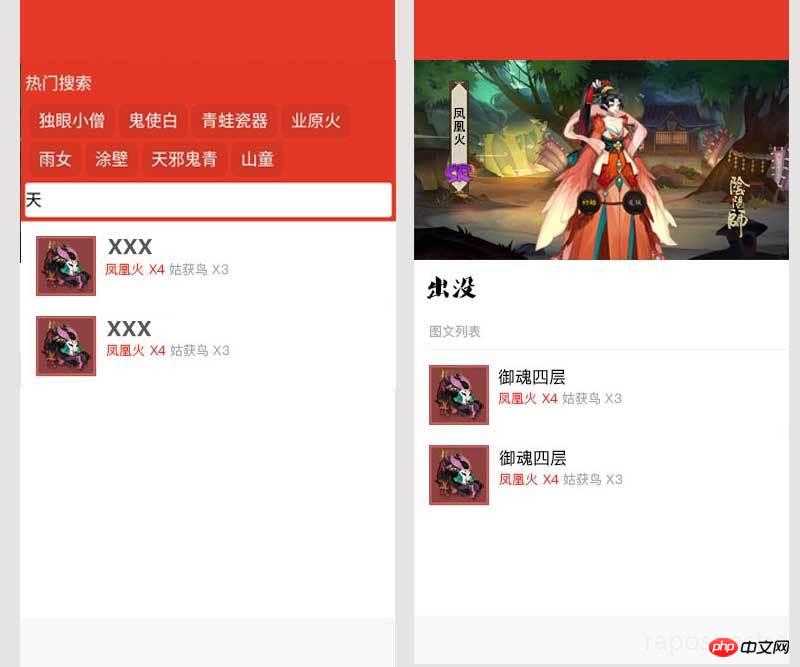

After the idea was completed, the author began to design a sketch using my half-assed PS level, which probably looked like this:

Well, just design the main homepage and details page, and then you can start thinking about how to do it!

1.3 Technical Architecture

1. The front end is, of course, a WeChat mini program;

2. The back end uses Django to provide RESTful API services;

3. Popular searches are currently cached with Redis as the cache server; personal search history uses the local storage provided by the WeChat mini program;

4. Shikigami distribution information is crawled and cleaned by a crawler, formatted as JSON, and manually checked before being stored in the database;

5. Shikigami pictures and icons are crawled directly from official sources;

6. Pictures and icons that cannot be crawled are made by hand;

7. Mini programs require HTTPS connections, which I happened to have set up before (see my free HTTPS deployment guide).

With these preparations done, formal development can begin.

2. API service development

I have done Django API development often, so I have a fairly complete solution; see django-simple-serializer.

The reason this step took 5 hours is that 4 of them went into adding support to django-simple-serializer for the through attribute of Django's ManyToManyField.

In short, the through feature lets you add extra fields or attributes to the intermediate table of a many-to-many relationship. For example, the many-to-many relationship between monster dungeons and monsters needs a count field that stores how many of each monster appear in each dungeon.
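To make the idea of an intermediate table with an extra field concrete, here is a minimal sketch that uses Python's built-in sqlite3 module instead of Django, so it runs without any project setup. The table names, column names, and demo rows are all hypothetical, and the column `monster_count` plays the role of the count field described above. This is not the author's actual schema.

```python
import sqlite3

SCHEMA = """
CREATE TABLE monster (id INTEGER PRIMARY KEY, name TEXT UNIQUE NOT NULL);
CREATE TABLE dungeon (id INTEGER PRIMARY KEY, name TEXT UNIQUE NOT NULL);
-- Intermediate table: the many-to-many link plus one extra column.
CREATE TABLE dungeon_monster (
    dungeon_id INTEGER NOT NULL REFERENCES dungeon(id),
    monster_id INTEGER NOT NULL REFERENCES monster(id),
    monster_count INTEGER NOT NULL,
    PRIMARY KEY (dungeon_id, monster_id)
);
"""


def build_demo_db():
    """Create an in-memory database with a few made-up rows."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany("INSERT INTO monster (id, name) VALUES (?, ?)",
                     [(1, "monster_a"), (2, "monster_b")])
    conn.executemany("INSERT INTO dungeon (id, name) VALUES (?, ?)",
                     [(1, "dungeon_1"), (2, "dungeon_2")])
    conn.executemany("INSERT INTO dungeon_monster VALUES (?, ?, ?)",
                     [(1, 1, 2), (2, 1, 5), (2, 2, 1)])
    return conn


def dungeons_for(conn, monster_name):
    """List (dungeon, count) pairs for a monster, most monsters first."""
    cursor = conn.execute(
        "SELECT d.name, dm.monster_count "
        "FROM dungeon_monster AS dm "
        "JOIN dungeon AS d ON d.id = dm.dungeon_id "
        "JOIN monster AS m ON m.id = dm.monster_id "
        "WHERE m.name = ? "
        "ORDER BY dm.monster_count DESC, d.name",
        (monster_name,),
    )
    return cursor.fetchall()


if __name__ == "__main__":
    print(dungeons_for(build_demo_db(), "monster_a"))
```

In Django, the same shape would be declared by pointing a ManyToManyField's through argument at a model that holds the two foreign keys and the extra field; the serializer then has to read that extra field from the intermediate model, which is the support that had to be added.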

With through support in place, building the API was quick. There are five main interfaces:

1. Search interface;

2. Shikigami details interface;

3. Shikigami dungeon interface;

4. Popular search interface;

5. Feedback interface;

After writing the interfaces, add some mock data for testing.

3. Front-end development

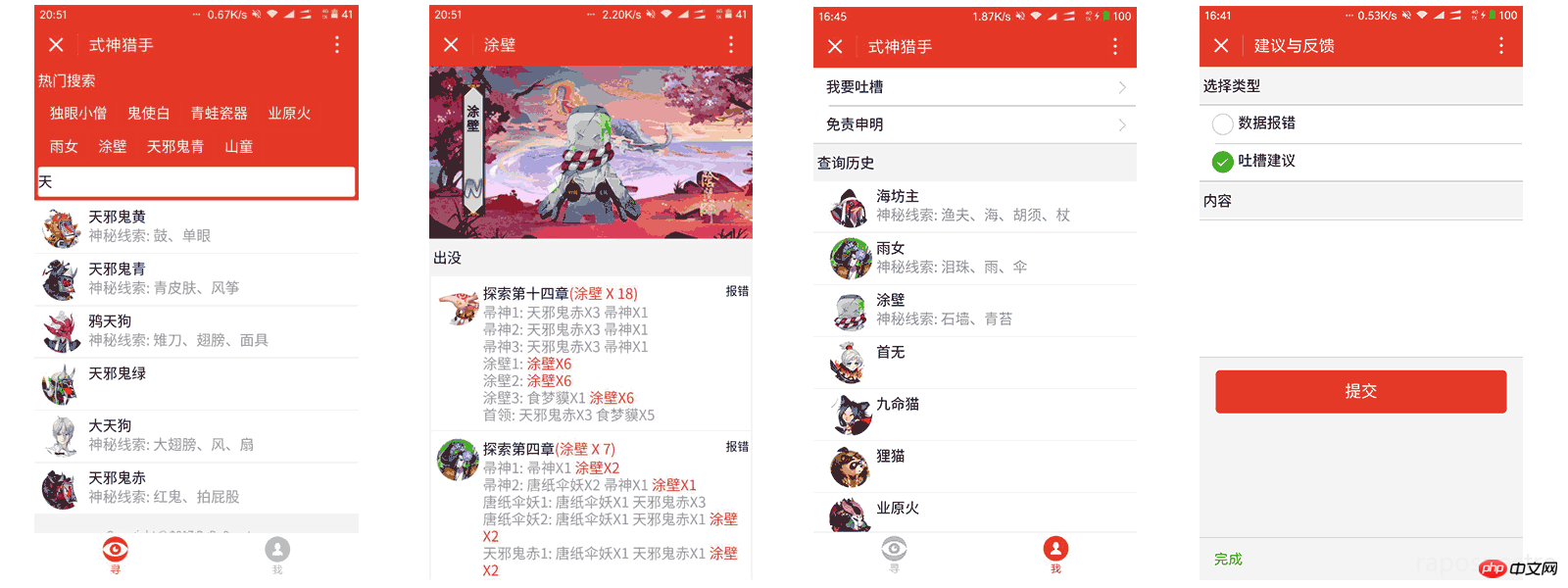

The front-end took the longest time. On the one hand, the author is really a back-end engineer, and the front-end is a half-way monk. On the other hand, the mini program has some pitfalls. Of course, the most important thing is to adjust the interface effect all the time, which takes a lot of time. The overall experience of writing a mini program is exactly the same as writing vue.js, except that some html tags cannot be used. Instead, you need to write according to the components officially provided by the mini program. Here are some feelings That is, the component design idea of the mini program itself should be based on React, and the syntax should be based on vue.js. Finally, after the front-end development is completed, it is mainly divided into these pages: 1. Home page (search page); 2. Shikigami details page; 3. My page (mainly for search history, disclaimer, etc.); 4. Feedback interface; 5. Statement interface (Why do you need this interface? Because all Pictures and some resources are directly grabbed from the official resources of Onmyoji, so it needs to be stated here that they are only used on a non-profit basis, and any messy copyrights still belong to Onmyoji).Hey, the ugly daughter-in-law has to meet her parents-in-law sooner or later, so I have to put the finally developed interface diagram here

I won't go into the introduction and basics of WeChat mini programs here. I believe developers who are interested in them will have no trouble writing a simple demo on their own. I will mainly talk about the pitfalls I ran into during development:

3.1 background-image attribute

When writing the shikigami details page, the background-image property was needed in two places to set a background image. Everything displayed normally in the WeChat developer tools, but nothing showed up when debugging on a real device. It turned out that background-image in a mini program does not support referencing local resources on a real device. There are two solutions:

1. Use network images: considering the size of the background image, I gave up on this option;

2. Encode the image with base64.

The background-image property in CSS normally supports base64. This solution amounts to encoding the image as a base64 string and storing that string directly. It is used like this:

background-image: url(data:image/image-format;base64,XXXX);

Here image-format is the format of the image itself, and XXXX is the base64 encoding of the image. This is really a disguised way of referencing a local resource. The advantage is fewer image requests; the disadvantage is that it increases the size of the CSS file and is less tidy.

In the end I chose the second method, mainly because the image size and the resulting growth of the wxss file were within an acceptable range.
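Producing the base64 string by hand is tedious, so a small helper can generate the whole declaration. The sketch below uses only the Python standard library; the function name `to_background_rule` is hypothetical, and the eight sample bytes are just the standard PNG file signature standing in for a real image file.

```python
import base64


def to_background_rule(image_bytes, image_format):
    """Build a background-image declaration that embeds the image as base64."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return "background-image: url(data:image/{0};base64,{1});".format(
        image_format, encoded)


if __name__ == "__main__":
    # For a real image, read the bytes from disk instead, for example:
    # with open("background.png", "rb") as f: data = f.read()
    png_signature = b"\x89PNG\r\n\x1a\n"
    print(to_background_rule(png_signature, "png"))
```

The printed line can be pasted into the wxss file. Base64 output is roughly one third larger than the input bytes, which is why this approach only suits small images.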

3.2 template

Mini programs support templates, but note that a template has its own scope and can only use the data passed in through data.

In addition, the relevant data needs to be destructured when it is passed in. Inside the template it is then accessed directly as {{ xxxx }}, not as {{ item.xxx }} the way it would be in a loop.

About destructuring:

<template is="xxx" data="{{...object}}"/>

The three dots (...) are the destructuring operation.

Generally a template is placed in a separate template file for other files to call, rather than written directly in an ordinary wxml file. For example, my directory looks like this:

├── app.js
├── app.json
├── app.wxss
├── pages
│   ├── feedback
│   ├── index
│   ├── my
│   ├── onmyoji
│   ├── statement
│   └── template
│       ├── template.js
│       ├── template.json
│       ├── template.wxml
│       └── template.wxss
├── static
└── utils

To call the template from another file, just import it:

<import src="../template/template.wxml" />

Then, wherever the template is needed:

<template is="xxx" data="{{...object}}"/>

Here I ran into another problem. Styles written in the template's own wxss file have no effect; they need to be written in the wxss of the file that calls the template. For example, if the my page uses the template, the corresponding styles need to be written in my/my.wxss.

4. Crawling image resources

Shikigami icons and images are basically all available on the official Onmyoji website, and making them myself is not realistic, so I simply wrote a crawler to download them and save them to my own CDN.

Both large and small pictures can be found at http://yys.163.com/shishen/index.html. At first I considered crawling the web page and extracting the data with Beautiful Soup. Then I found that the shikigami data is loaded asynchronously, which makes things even simpler. Analyzing the page led me to https://g37simulator.webapp.163.com/get_heroid_list, which directly returns the shikigami information as JSON, so a crawler is easy to write:

# coding: utf-8
# Updated to Python 3 syntax; the big/ and icon/ directories must already exist.
import json
import urllib.request

import requests
from xpinyin import Pinyin

url = "https://g37simulator.webapp.163.com/get_heroid_list?callback=jQuery11130959811888616583_1487429691764&rarity=0&page=1&per_page=200&_=1487429691765"

# The endpoint returns JSONP, so keep only the text between the first "(" and the last ")".
result = requests.get(url).text
result = result[result.index('(') + 1:result.rindex(')')]
json_data = json.loads(result)
hellspawn_list = json_data['data']

p = Pinyin()
for k, v in hellspawn_list.items():
    # Use the pinyin of the shikigami name as the file name.
    file_name = p.get_pinyin(v.get('name'), '')
    print('id: {0} name: {1}'.format(k, v.get('name')))
    # Download the large illustration.
    big_url = "https://yys.res.netease.com/pc/zt/20161108171335/data/shishen_big/{0}.png".format(k)
    urllib.request.urlretrieve(big_url, filename='big/{0}@big.png'.format(file_name))
    # Download the small avatar icon.
    avatar_url = "https://yys.res.netease.com/pc/gw/20160929201016/data/shishen/{0}.png".format(k)
    urllib.request.urlretrieve(avatar_url, filename='icon/{0}@icon.png'.format(file_name))

However, after crawling the data I found a problem. NetEase's official pictures are all uncompressed, high-definition large images. For someone as broke as me, putting such large images on a CDN would bankrupt me within two days, so the pictures need to be batch converted to a size that is not too large but still viewable. Photoshop's batch processing can handle this.

1. Open Photoshop and open one of the crawled pictures;

2. Select "Window" on the menu bar, then select "Actions";

3. In the "Actions" panel, create a new action;

4. Click the round record button to start recording the action;

5. Process the picture as usual and save it in a web format;

6. Click the square stop button to stop recording;

7. On the menu bar, select File > Automate > Batch, choose the action you just recorded, and configure the input and output folders;

8. Click OK;

Wait for the batch to finish; there should be enough time to farm some Yuhun in the meantime. Then upload all the resulting pictures to the static resource server. That completes the image processing.

5. Shikigami data crawling (4 hours)

Shikigami distribution data on the Internet is rather messy and contains many errors, so after careful consideration I decided on a semi-manual, semi-automatic approach. The crawled data is output as JSON:

{
  "scene_name": "探索第一章",
  "team_list": [{
    "name": "天邪鬼绿1",
    "index": 1,
    "monsters": [{
      "name": "天邪鬼绿",
      "count": 1
    },{
      "name": "提灯小僧",
      "count": 2
    }]
  },{
    "name": "天邪鬼绿2",
    "index": 2,
    "monsters": [{
      "name": "天邪鬼绿",
      "count": 1
    },{
      "name": "提灯小僧",
      "count": 2
    }]
  },{
    "name": "提灯小僧1",
    "index": 3,
    "monsters": [{
      "name": "天邪鬼绿",
      "count": 2
    },{
      "name": "提灯小僧",
      "count": 1
    }]
  },{
    "name": "提灯小僧2",
    "index": 4,
    "monsters": [{
      "name": "灯笼鬼",
      "count": 2
    },{
      "name": "提灯小僧",
      "count": 1
    }]
  },{
    "name": "首领",
    "index": 5,
    "monsters": [{
      "name": "九命猫",
      "count": 3
    }]
  }]
}

Then I manually check it again. Of course, there will still be omissions, so the data error reporting function is very important.

The actual coding in this part took perhaps a little over half an hour; the rest of the time went into checking the data.

After all the checks are complete, write a script to import the JSON directly into the database. Once it is verified, use Fabric to publish it to the production server for testing.
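The import script itself is not shown in the article. As a rough sketch of its first half, the code below flattens one scene in the JSON format above into rows, one per monster per team, which is the shape an intermediate table with a count column would need. The function name `flatten_scene` and the shortened sample are hypothetical, and the actual database write is left out.

```python
import json

# A shortened copy of the crawled format shown above.
SAMPLE = """
{
  "scene_name": "探索第一章",
  "team_list": [
    {"name": "天邪鬼绿1", "index": 1,
     "monsters": [{"name": "天邪鬼绿", "count": 1},
                  {"name": "提灯小僧", "count": 2}]},
    {"name": "首领", "index": 5,
     "monsters": [{"name": "九命猫", "count": 3}]}
  ]
}
"""


def flatten_scene(scene):
    """Turn one scene dict into (scene, team index, team, monster, count) rows."""
    rows = []
    for team in scene["team_list"]:
        for monster in team["monsters"]:
            rows.append((scene["scene_name"], team["index"], team["name"],
                         monster["name"], monster["count"]))
    return rows


if __name__ == "__main__":
    for row in flatten_scene(json.loads(SAMPLE)):
        print(row)
```

Flattening first also makes the manual check easier, because each row can be compared against the game one line at a time before anything is written to the database.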

6. Test

The last step is basically trying it out on a phone to catch errors, tweaking some effects, turning off debug mode, and submitting for review.

I have to say the mini program team reviews very quickly: the review passed on Monday afternoon, and I promptly went live.

Final result:
