A brief discussion on what Hadoop is and its learning route

Hadoop implements a distributed file system, the Hadoop Distributed File System (HDFS). HDFS is highly fault-tolerant and is designed to be deployed on low-cost hardware. It provides high-throughput access to application data, which makes it suitable for applications with large datasets. HDFS relaxes some POSIX requirements so that data in the file system can be accessed as streams.

The core design of the Hadoop framework is HDFS plus MapReduce: HDFS provides storage for massive data, and MapReduce provides computation over that data. In a word, Hadoop is storage plus computation.

The name Hadoop is not an abbreviation but a made-up name. The project's creator, Doug Cutting, explained where it came from: "The name my kid gave a stuffed yellow elephant."

Hadoop is a distributed computing platform that users can easily set up and use. Users can readily develop and run applications that process massive amounts of data on Hadoop. Its main advantages are:

1. High reliability: Hadoop's ability to store and process data bit by bit can be trusted.

2. High scalability: Hadoop distributes data and computing tasks across clusters of available computers, and these clusters can easily be expanded to thousands of nodes.

3. Efficiency: Hadoop can move data dynamically between nodes and keep the load on each node balanced, so processing is very fast.

4. High fault tolerance: Hadoop automatically keeps multiple copies of data and automatically reassigns failed tasks.

5. Low cost: Compared with all-in-one appliances, commercial data warehouses, and data marts such as QlikView and Yonghong Z-Suite, Hadoop is open source, so a project's software costs are greatly reduced.
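To make the fault-tolerance point (item 4) concrete, here is a minimal Python sketch of reassigning a failed task to another node. It is not Hadoop's scheduler: the function `run_with_retries`, the node names, the `run` callback, and the default of four attempts are all hypothetical, chosen only for illustration. Real Hadoop also retries failed tasks up to a configurable number of attempts.

```python
from typing import Callable, Dict, List

def run_with_retries(tasks: List[str], nodes: List[str],
                     run: Callable[[str, str], bool],
                     max_attempts: int = 4) -> Dict[str, str]:
    """Run every task, moving a failed attempt to the next node.

    `run(task, node)` is a hypothetical callback that returns True if the
    task succeeded on that node. Returns a map of task -> node that finished it.
    """
    if not nodes:
        raise ValueError("no nodes available")
    finished: Dict[str, str] = {}
    for i, task in enumerate(tasks):
        for attempt in range(max_attempts):
            # Start at a different node per task; each retry moves one node over.
            node = nodes[(i + attempt) % len(nodes)]
            if run(task, node):
                finished[task] = node
                break
        else:
            # Every attempt failed: this sketch gives up on the whole run.
            raise RuntimeError(f"{task} failed after {max_attempts} attempts")
    return finished

# Usage: node-b is down, so any task that lands there is retried elsewhere.
result = run_with_retries(["t0", "t1", "t2"], ["node-a", "node-b", "node-c"],
                          run=lambda task, node: node != "node-b")
print(result)  # {'t0': 'node-a', 't1': 'node-c', 't2': 'node-c'}
```

Task `t1` first lands on the failed `node-b` and is transparently moved to `node-c`, which is the behavior item 4 describes at a much larger scale.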

Hadoop's framework is written in Java, so it is well suited to run on Linux production platforms. Applications on Hadoop can also be written in other languages, such as C++.

The significance of Hadoop for big data processing

Hadoop's wide use in big data processing comes from its natural advantages in data extraction, transformation, and loading (ETL). Hadoop's distributed architecture places the big data processing engine as close to the storage as possible, which suits batch operations such as ETL, because the results of such batch operations can go directly to storage. Hadoop's MapReduce breaks a single task into pieces, sends the fragments (Map) to multiple nodes, and then loads the results (Reduce) into the data warehouse as a single data set.
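The Map/Reduce split described above can be sketched with the classic word-count example, written in the style of Hadoop Streaming, which lets mappers and reducers be any program that reads lines from standard input and writes tab-separated key/value lines to standard output. The function names `mapper`, `reducer`, and `run_locally` are illustrative; `run_locally` only imitates the framework by sorting the mapper output before reducing, standing in for Hadoop's shuffle-and-sort phase.

```python
from itertools import groupby
from typing import Iterable, Iterator, List

def mapper(lines: Iterable[str]) -> Iterator[str]:
    """Map phase: emit one 'word<TAB>1' record for every word in the input."""
    for line in lines:
        for word in line.split():
            yield f"{word.lower()}\t1"

def reducer(sorted_records: Iterable[str]) -> Iterator[str]:
    """Reduce phase: sum the counts for each word.

    Relies on the input being sorted by key so that equal words are adjacent,
    which is what the shuffle-and-sort step provides.
    """
    pairs = (record.rstrip("\n").split("\t", 1) for record in sorted_records)
    for word, group in groupby(pairs, key=lambda kv: kv[0]):
        total = sum(int(count) for _, count in group)
        yield f"{word}\t{total}"

def run_locally(lines: Iterable[str]) -> List[str]:
    """Local stand-in for the framework: map, sort (shuffle), then reduce."""
    return list(reducer(sorted(mapper(lines))))

print(run_locally(["the cat sat", "The dog sat"]))
# ['cat\t1', 'dog\t1', 'sat\t2', 'the\t2']
```

On a real cluster the mapper and reducer would be separate scripts passed to the Hadoop Streaming jar through its `-mapper` and `-reducer` options, and the sort between them would be done by Hadoop rather than by the scripts.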

The PHP Chinese website's Hadoop learning route:

1. Hadoop Common: the module at the bottom of the Hadoop stack, providing utilities for the Hadoop subprojects, such as configuration file handling and logging.

2. HDFS: a distributed file system that provides high-throughput access to application data. To external clients, HDFS looks like a traditional hierarchical file system: files can be created, deleted, moved, renamed, and so on. However, the architecture of HDFS is built on a specific set of nodes (see Figure 1), a consequence of its own characteristics. These nodes include the NameNode (only one), which provides metadata services inside HDFS, and DataNodes, which provide storage blocks for HDFS. Because only one NameNode exists, it is a single point of failure, which is a drawback of HDFS (Hadoop 2.x later added NameNode high availability to address this). Files stored in HDFS are divided into blocks, and these blocks are then replicated to multiple computers (DataNodes). This is very different from a traditional RAID architecture. The block size (typically 64 MB in Hadoop 1.x; the default became 128 MB in Hadoop 2.x) and the number of replicas are determined by the client when the file is created. The NameNode controls all file operations. All communication within HDFS is based on the standard TCP/IP protocol.

3. MapReduce: a software framework for distributed processing of massive data sets on computing clusters.

4. Avro: an RPC project led by Doug Cutting, mainly responsible for data serialization. It is somewhat similar to Google's Protocol Buffers (protobuf) and Facebook's Thrift. Avro is planned to be used for Hadoop's RPC, making Hadoop's RPC communication faster and its data structures more compact.

5. Hive: like CloudBase, a set of software built on the Hadoop distributed computing platform that provides SQL data warehouse functionality. It simplifies summarization and ad hoc querying of massive data stored in Hadoop. Hive provides an SQL-based query language (QL), which is very convenient to use.

6. HBase: an open-source, scalable, distributed database built on the Hadoop Distributed File System. It is based on a column-oriented storage model and supports storing structured data in large tables.

7. Pig: an advanced data-flow language and execution framework for parallel computing. Its SQL-like language is a high-level query language built on MapReduce: it compiles operations into the Map and Reduce stages of the MapReduce model, and users can define their own functions.

8. ZooKeeper: an open-source implementation of Google's Chubby. It is a reliable coordination system for large-scale distributed systems, providing configuration maintenance, naming services, distributed synchronization, group services, and more. ZooKeeper's goal is to encapsulate complex, error-prone key services and give users a simple, easy-to-use interface and a system with efficient performance and stable functions.

9. Chukwa: a data collection system, contributed by Yahoo, for managing large-scale distributed systems.

10. Cassandra: a scalable multi-master database with no single point of failure.

11. Mahout: a scalable machine learning and data mining library.
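How HDFS (item 2) divides a file into blocks and replicates them can be sketched in a few lines of Python. This is a simplified model under stated assumptions: the 64 MB block size is the figure quoted above, the replication factor of 3 matches HDFS's usual default, and the round-robin `place_replicas` policy is a hypothetical stand-in. In real HDFS the NameNode chooses the DataNodes using a rack-aware placement policy.

```python
from dataclasses import dataclass
from typing import Dict, List

MB = 1024 * 1024
BLOCK_SIZE = 64 * MB  # block size quoted in the text (Hadoop 1.x default)

@dataclass
class Block:
    index: int   # position of the block within the file
    offset: int  # byte offset where the block starts
    length: int  # bytes in the block; the last block may be shorter

def split_into_blocks(file_size: int, block_size: int = BLOCK_SIZE) -> List[Block]:
    """Cut a file of `file_size` bytes into fixed-size blocks."""
    blocks: List[Block] = []
    offset = 0
    while offset < file_size:
        length = min(block_size, file_size - offset)
        blocks.append(Block(len(blocks), offset, length))
        offset += length
    return blocks

def place_replicas(blocks: List[Block], datanodes: List[str],
                   replication: int = 3) -> Dict[int, List[str]]:
    """Hypothetical round-robin placement: each block goes to `replication`
    distinct DataNodes, starting one node further along for each block."""
    if replication > len(datanodes):
        raise ValueError("replication factor exceeds the number of DataNodes")
    n = len(datanodes)
    return {
        b.index: [datanodes[(b.index + r) % n] for r in range(replication)]
        for b in blocks
    }

blocks = split_into_blocks(150 * MB)
print([b.length // MB for b in blocks])  # [64, 64, 22]
print(place_replicas(blocks, ["dn1", "dn2", "dn3", "dn4"]))
# {0: ['dn1', 'dn2', 'dn3'], 1: ['dn2', 'dn3', 'dn4'], 2: ['dn3', 'dn4', 'dn1']}
```

Because each block lives on three different DataNodes in this sketch, losing any single DataNode still leaves two copies of every block, which is the property behind the fault tolerance described earlier.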

The initial design goals of Hadoop were high reliability, high scalability, high fault tolerance, and efficiency. These inherent design advantages made Hadoop favored by many large companies as soon as it appeared, and also attracted widespread attention from the research community. Hadoop technology is now widely used in the Internet field.


The above is the detailed content of A brief discussion on what Hadoop is and its learning route. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1389

1389

52

52

Java Errors: Hadoop Errors, How to Handle and Avoid

Jun 24, 2023 pm 01:06 PM

Java Errors: Hadoop Errors, How to Handle and Avoid

Jun 24, 2023 pm 01:06 PM

Java Errors: Hadoop Errors, How to Handle and Avoid When using Hadoop to process big data, you often encounter some Java exception errors, which may affect the execution of tasks and cause data processing to fail. This article will introduce some common Hadoop errors and provide ways to deal with and avoid them. Java.lang.OutOfMemoryErrorOutOfMemoryError is an error caused by insufficient memory of the Java virtual machine. When Hadoop is

How to learn PHP development?

Jun 12, 2023 am 08:09 AM

How to learn PHP development?

Jun 12, 2023 am 08:09 AM

With the development of the Internet, the demand for dynamic web pages is increasing. As a mainstream programming language, PHP is widely used in web development. So, for beginners, how to learn PHP development? 1. Understand the basic knowledge of PHP. PHP is a scripting language that can be directly embedded in HTML code and parsed and run through a web server. Therefore, before learning PHP, you can first understand the basics of front-end technologies such as HTML, CSS, and JavaScript to better understand the operation of PHP.

Using Hadoop and HBase in Beego for big data storage and querying

Jun 22, 2023 am 10:21 AM

Using Hadoop and HBase in Beego for big data storage and querying

Jun 22, 2023 am 10:21 AM

With the advent of the big data era, data processing and storage have become more and more important, and how to efficiently manage and analyze large amounts of data has become a challenge for enterprises. Hadoop and HBase, two projects of the Apache Foundation, provide a solution for big data storage and analysis. This article will introduce how to use Hadoop and HBase in Beego for big data storage and query. 1. Introduction to Hadoop and HBase Hadoop is an open source distributed storage and computing system that can

How to use PHP and Hadoop for big data processing

Jun 19, 2023 pm 02:24 PM

How to use PHP and Hadoop for big data processing

Jun 19, 2023 pm 02:24 PM

As the amount of data continues to increase, traditional data processing methods can no longer handle the challenges brought by the big data era. Hadoop is an open source distributed computing framework that solves the performance bottleneck problem caused by single-node servers in big data processing through distributed storage and processing of large amounts of data. PHP is a scripting language that is widely used in web development and has the advantages of rapid development and easy maintenance. This article will introduce how to use PHP and Hadoop for big data processing. What is HadoopHadoop is

Explore the application of Java in the field of big data: understanding of Hadoop, Spark, Kafka and other technology stacks

Dec 26, 2023 pm 02:57 PM

Explore the application of Java in the field of big data: understanding of Hadoop, Spark, Kafka and other technology stacks

Dec 26, 2023 pm 02:57 PM

Java big data technology stack: Understand the application of Java in the field of big data, such as Hadoop, Spark, Kafka, etc. As the amount of data continues to increase, big data technology has become a hot topic in today's Internet era. In the field of big data, we often hear the names of Hadoop, Spark, Kafka and other technologies. These technologies play a vital role, and Java, as a widely used programming language, also plays a huge role in the field of big data. This article will focus on the application of Java in large

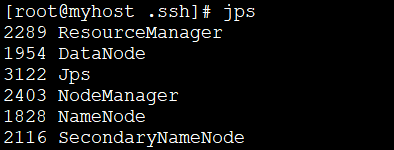

How to install Hadoop in linux

May 18, 2023 pm 08:19 PM

How to install Hadoop in linux

May 18, 2023 pm 08:19 PM

1: Install JDK1. Execute the following command to download the JDK1.8 installation package. wget--no-check-certificatehttps://repo.huaweicloud.com/java/jdk/8u151-b12/jdk-8u151-linux-x64.tar.gz2. Execute the following command to decompress the downloaded JDK1.8 installation package. tar-zxvfjdk-8u151-linux-x64.tar.gz3. Move and rename the JDK package. mvjdk1.8.0_151//usr/java84. Configure Java environment variables. echo'

Use PHP to achieve large-scale data processing: Hadoop, Spark, Flink, etc.

May 11, 2023 pm 04:13 PM

Use PHP to achieve large-scale data processing: Hadoop, Spark, Flink, etc.

May 11, 2023 pm 04:13 PM

As the amount of data continues to increase, large-scale data processing has become a problem that enterprises must face and solve. Traditional relational databases can no longer meet this demand. For the storage and analysis of large-scale data, distributed computing platforms such as Hadoop, Spark, and Flink have become the best choices. In the selection process of data processing tools, PHP is becoming more and more popular among developers as a language that is easy to develop and maintain. In this article, we will explore how to leverage PHP for large-scale data processing and how

Detailed explanation of Python advanced learning route

Jun 10, 2023 am 10:46 AM

Detailed explanation of Python advanced learning route

Jun 10, 2023 am 10:46 AM

Python is a powerful programming language that has become one of the most popular languages in many fields. From simple scripting to complex web applications and scientific calculations, Python can do it all. This article will introduce the advanced learning route of Python and provide a clear learning path to help you master the advanced skills of Python programming. Improving basic knowledge Before entering advanced learning of Python, we need to review the basic knowledge of Python. This includes Python syntax, data