Character encoding in Python is a well-worn topic, and plenty of articles have been written about it. Some merely repeat what others have said, while others go into real depth. Recently I watched a teaching video from a well-known training institution that covered the subject again, and the explanation was still unsatisfying, so I decided to write this article. I want to organize what I know, and I hope it helps others as well.

The default encoding of Python2 is ASCII, which cannot recognize Chinese characters, and the character encoding needs to be specified explicitly; the default encoding of Python3 is Unicode, which can recognize Chinese characters.

I believe you have seen explanations of "Chinese processing in Python" like the one above in many articles, and that you felt you understood it the first time you read it. But after a while, as related problems keep coming up, you may find that you do not understand it as clearly as you thought. Once we know what the "default encoding" mentioned above really is, the meaning of that sentence becomes much clearer.

It should be noted that "what is character encoding" and "the development process of character encoding" are not the topics discussed in this section. For these contents, you can refer to my previous << >>.

A character is not the same thing as a byte. Characters are symbols that humans can recognize, and to be saved in computer storage they must be represented by bytes that the computer can handle. A character usually has several possible representations, and different representations use different numbers of bytes. These representations are what we call character encodings. For example, the letters A-Z can be represented in ASCII (one byte each), in a two-byte Unicode form such as UCS-2 (two bytes each), or in UTF-8 (one byte each). The role of a character encoding is to convert human-readable characters into machine-readable bytes, and back again.
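To make the byte counts concrete, here is a small Python 3 sketch that encodes the same characters with several encodings. It assumes the two-byte Unicode form mentioned above can be stood in for by the `utf-16-le` codec (chosen so that no byte-order mark is added); that reading is an assumption, and the helper name `byte_length` is made up for illustration.

```python
# Compare how many bytes the same character needs under different encodings.
samples = ["A", "中"]
encodings = ["ascii", "utf-16-le", "utf-8", "gbk"]


def byte_length(char, encoding):
    """Return the number of bytes `char` occupies in `encoding`,
    or None if the encoding cannot represent it."""
    try:
        return len(char.encode(encoding))
    except UnicodeEncodeError:
        return None


for char in samples:
    for encoding in encodings:
        print(char, encoding, byte_length(char, encoding))
```

The same character can take one, two, or three bytes depending on the encoding, and ASCII cannot represent 中 at all.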

Unicode is the real string, while character encodings such as ASCII, UTF-8, and GBK describe byte strings. This is why Python's official documentation often uses phrases such as "Unicode string" and "translating a Unicode string into a sequence of bytes".

The code we write lives in a file, and characters are stored in files as bytes, so it is understandable that a string defined in a file gets treated as a byte string. But what we need is a string, not a byte string. A good programming language should distinguish strictly between the two and support both well. Java does this so well that before I learned Python and PHP I never had to think about these issues, which programmers should not have to handle. Unfortunately, many programming languages blur "string" and "byte string" and use byte strings as strings. PHP and Python2 are both languages of this kind. The operation that illustrates the problem best is taking the length of a string that contains Chinese characters:

The length of a string should be the number of characters it contains, whether they are Chinese or English.

The length of the corresponding byte string depends on the character encoding used to encode it (for example, in UTF-8 a Chinese character takes 3 bytes, while in GBK it takes 2 bytes).

Note: the default character encoding of the Windows cmd terminal is GBK, so each Chinese character typed in cmd is represented by two bytes.

>>> # Python2

>>> a = 'Hello,中国' # byte string; length is the number of bytes = len('Hello,')+len('中国') = 6+2*2 = 10

>>> b = u'Hello,中国' # string; length is the number of characters = len('Hello,')+len('中国') = 6+2 = 8

>>> c = unicode(a, 'gbk') # b is really shorthand for c: both decode a GBK-encoded byte string into a Unicode string

>>>

>>> print(type(a), len(a))

(<type 'str'>, 10)

>>> print(type(b), len(b))

(<type 'unicode'>, 8)

>>> print(type(c), len(c))

(<type 'unicode'>, 8)

String support in Python3 has changed substantially; the details are covered below.

First, some background: Unicode is also a mapping between characters and numbers, but the numbers are called code points. A code point is simply an integer, conventionally written in hexadecimal (for example, U+4E2D for 中).
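The mapping between characters and code points can be inspected directly in Python 3 with the built-in `ord()` and `chr()` functions. The sketch below is only an illustration; the variable names are arbitrary.

```python
# A code point is an integer; ord() and chr() convert between a character
# and its code point.
code_point = ord("中")
print(code_point)        # decimal form of the code point
print(hex(code_point))   # hexadecimal form, as used in the U+XXXX notation
print(chr(0x4E2D))       # back from the code point to the character

# A Python 3 str is a sequence of code points, so len() counts characters,
# not bytes.
text = "Hello,中国"
code_points = [ord(ch) for ch in text]
print(len(text), code_points)
```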

The official Python documentation has this description of the relationship between Unicode strings, byte strings and encoding:

A Unicode string is a sequence of code points, which are numbers from 0 to 0x10FFFF (1,114,111 in decimal). This sequence of code points has to be represented in storage (both memory and disk) as a set of bytes (values from 0 to 255), and the rules for translating a Unicode string into a sequence of bytes are called an encoding.

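"Encoding" here does not mean a character set. It means the encoding process, together with the rules that process uses to map Unicode code points to bytes. The mapping does not have to be a simple one-to-one mapping, so an encoding does not have to handle every possible Unicode character. For example:

The rules for converting a Unicode string to ASCII are simple. For each code point:

If the code point is < 128, each byte has the same value as the code point.

If the code point is >= 128, the Unicode string cannot be represented in this encoding (Python raises a UnicodeEncodeError exception in this case).

Converting a Unicode string to UTF-8 uses the following rules:

If the code point is < 128, it is represented by the corresponding byte value (the same as for ASCII).

If the code point is >= 128, it is turned into a sequence of 2, 3, or 4 bytes, where each byte in the sequence is between 128 and 255.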
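Both sets of rules can be checked with a few lines of Python 3. This is a minimal sketch; `encode_or_error` is a hypothetical helper written for this illustration, not part of any library.

```python
def encode_or_error(text, encoding):
    """Encode `text`; return the bytes, or the exception class name on failure."""
    try:
        return text.encode(encoding)
    except UnicodeEncodeError as exc:
        return type(exc).__name__


# ASCII: code points below 128 map to one byte each, anything else is rejected.
print(encode_or_error("abc", "ascii"))
print(encode_or_error("中", "ascii"))

# UTF-8: one byte below 128, otherwise 2 to 4 bytes, each between 128 and 255.
for ch in ["A", "\u00e9", "中", "\U0001F600"]:
    encoded = ch.encode("utf-8")
    print(hex(ord(ch)), len(encoded), list(encoded))
```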

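In short:

Encoding (encode): the process and rules for converting a Unicode string (its code points) into a byte string in a specific character encoding.

Decoding (decode): the process and rules for converting a byte string in a specific character encoding into the corresponding Unicode string (its code points).

Both encoding and decoding therefore depend on one key factor: the specific character encoding. The same character encoded with different character encodings will in most cases produce different byte values and a different number of bytes, and vice versa.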
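A short Python 3 round trip shows the two directions side by side. This is a sketch for illustration only, and the variable names are arbitrary.

```python
text = "中国"

# encode: str -> bytes, the result depends on the chosen character encoding
utf8_bytes = text.encode("utf-8")
gbk_bytes = text.encode("gbk")
print(utf8_bytes, len(utf8_bytes))
print(gbk_bytes, len(gbk_bytes))

# decode: bytes -> str, using the same encoding that produced the bytes
from_utf8 = utf8_bytes.decode("utf-8")
from_gbk = gbk_bytes.decode("gbk")
print(from_utf8 == text, from_gbk == text)
```

The two byte strings differ in both value and length, yet each decodes back to the same string when the matching encoding is used.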

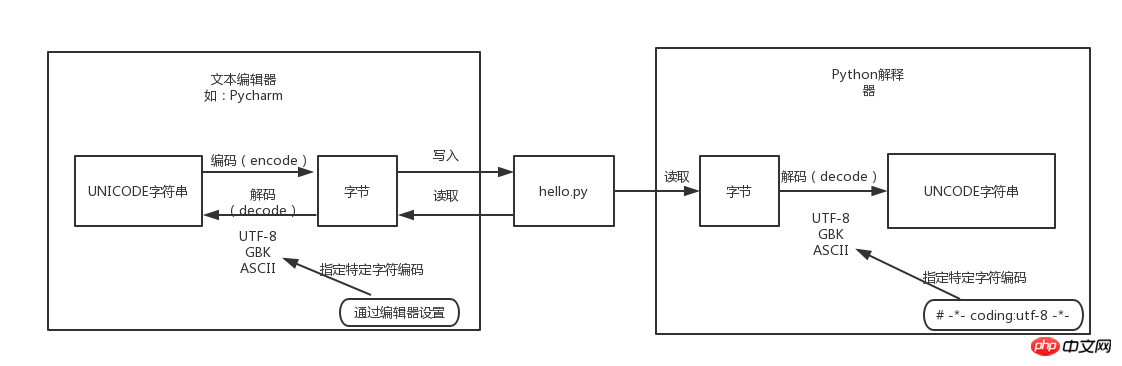

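As we all know, files on disk are stored in binary form, and text files are stored as bytes in some specific encoding. The character encoding of a source code file is chosen by the editor. For example, when we write Python in PyCharm we usually set the project encoding and file encoding to UTF-8, so the Python code is converted to UTF-8 bytes (the encode step) when it is saved to disk. When the code is executed, the Python interpreter reads the bytes from the file and has to convert them into a Unicode string (the decode step) before doing anything else.

As explained above, this conversion (decoding) requires us to state which character encoding the bytes in the file use. Only then can the interpreter find the code points in the Unicode table that those bytes correspond to. The way to declare the encoding is familiar to everyone: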

# -*- coding:utf-8 -*-
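Python 3's standard library exposes this declaration lookup through `tokenize.detect_encoding`, which makes it easy to see what the interpreter would infer from the first lines of a file. The sketch below feeds it in-memory bytes instead of a real file; `declared_encoding` is a hypothetical wrapper written for this illustration.

```python
import io
import tokenize


def declared_encoding(source_bytes):
    """Return the encoding Python 3 would use to decode these source bytes."""
    readline = io.BytesIO(source_bytes).readline
    encoding, _first_lines = tokenize.detect_encoding(readline)
    return encoding


print(declared_encoding(b"# -*- coding:utf-8 -*-\nx = 1\n"))
print(declared_encoding(b"# -*- coding: gbk -*-\nx = 1\n"))
print(declared_encoding(b"x = 1\n"))  # no declaration present
```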

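So, if we do not declare a character encoding at the top of the code file, which encoding does the Python interpreter use to convert the bytes it reads from the file into Unicode code points? Just as many programs ship with default options, the Python interpreter needs a built-in default character encoding to handle this case, and this is the "default encoding" mentioned at the start of the article. The so-called Chinese character problem in Python can therefore be summed up in one sentence: when the bytes cannot be converted using the default character encoding, a decoding error occurs (a UnicodeDecodeError, or a SyntaxError when the bytes come from a source file).

The Python2 and Python3 interpreters use different default encodings, which we can read with sys.getdefaultencoding(). Strictly speaking, this function reports the default used for implicit conversions between byte strings and Unicode strings; the default for source files is defined separately (PEP 263 for Python2, PEP 3120 for Python3), but the two values coincide in each version: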

>>> # Python2

>>> import sys

>>> sys.getdefaultencoding()

'ascii'

>>> # Python3

>>> import sys

>>> sys.getdefaultencoding()

'utf-8'

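So for Python2, when the interpreter reads the bytes of Chinese characters and tries to decode them, it first checks whether the top of the code file declares which character encoding the bytes in the file use. If nothing is declared, it falls back to the default encoding "ASCII", decoding fails, and the following error is raised: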

SyntaxError: Non-ASCII character '\xc4' in file xxx.py on line 11, but no encoding declared; see http://python.org/dev/peps/pep-0263/ for details

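For Python3 the process is the same, except that the interpreter uses "UTF-8" as the default encoding. That does not mean Chinese text will always work. For example, when developing on Windows, the Python project and its code files may use the default GBK encoding, which means the code file is saved to disk as GBK bytes. When the Python3 interpreter runs that file and tries to decode it as UTF-8, decoding fails in the same way, with the following error: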

SyntaxError: Non-UTF-8 code starting with '\xc4' in file xxx.py on line 11, but no encoding declared; see http://python.org/dev/peps/pep-0263/ for details
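Both failures can be reproduced without touching the interpreter's source loader. The Python 3 sketch below does not invoke the real source-decoding machinery; it simply decodes one line of source text, saved as GBK bytes, with each candidate encoding. `try_decode` and the sample line are made up for illustration.

```python
# One line of source code, as an editor configured for GBK would write it to disk.
source_line = "name = '中国'"
bytes_on_disk = source_line.encode("gbk")


def try_decode(data, encoding):
    """Return the decoded text, or None when `data` is not valid in `encoding`."""
    try:
        return data.decode(encoding)
    except UnicodeDecodeError:
        return None


print(try_decode(bytes_on_disk, "ascii"))  # the Python2 default
print(try_decode(bytes_on_disk, "utf-8"))  # the Python3 default
print(try_decode(bytes_on_disk, "gbk"))    # the encoding actually used on disk
```

Only the last call recovers the original line, which is why the declaration at the top of the file has to match the encoding the editor used when saving.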

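After creating a project, first confirm that its character encoding is set to UTF-8.

To stay compatible with both Python2 and Python3, declare the character encoding at the top of the code: # -*- coding:utf-8 -*-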

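In fact, the improvement to string support in Python3 goes beyond a change of default encoding. Strings were reimplemented with built-in Unicode support, and in this respect Python is now as good as Java. Let's look at how string support differs between Python2 and Python3:

In Python2, string support is provided by the following three classes:

class basestring(object)

class str(basestring)

class unicode(basestring)

Running help(str) and help(bytes) shows the definition of the str class in both cases. This confirms that str in Python2 is a byte string, while the unicode object added later is the real string.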

#!/usr/bin/env python

# -*- coding:utf-8 -*-

a = '你好'

b = u'你好'

print(type(a), len(a))

print(type(b), len(b))

Output:

(<type 'str'>, 6)

(<type 'unicode'>, 2)

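Python3 simplifies the class hierarchy behind its string support: the unicode class is gone and a bytes class has been added. On the surface, you can think of str and unicode as having been merged into one in Python3.

class bytes(object)

class str(object)

In reality, Python3 recognized the earlier mistake and now makes a clear distinction between strings and bytes. str in Python3 is a real string, and bytes are represented by the separate bytes class. In other words, what you define by default in Python3 is a string with built-in Unicode support, which takes much of the burden of string handling off the programmer.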

#!/usr/bin/env python

# -*- coding:utf-8 -*-

a = '你好'

b = u'你好'

c = '你好'.encode('gbk')

print(type(a), len(a))

print(type(b), len(b))

print(type(c), len(c))

Output:

<class 'str'> 2

<class 'str'> 2

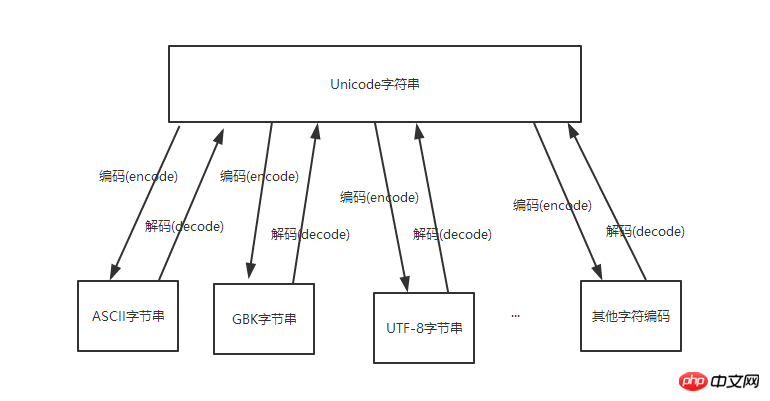

<class 'bytes'> 4上面提到,UNICODE字符串可以与任意字符编码的字节进行相互转换,如图:

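This naturally raises a question: can bytes in different character encodings be converted into one another through Unicode? The answer is yes.

In Python2, where a plain string literal is a byte string: byte string --> decode('original character encoding') --> Unicode string --> encode('new character encoding') --> byte string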

#!/usr/bin/env python

# -*- coding:utf-8 -*-

utf_8_a = '我爱中国'

gbk_a = utf_8_a.decode('utf-8').encode('gbk')

print(gbk_a.decode('gbk'))

Output:

我爱中国

In Python3, where a string literal is already a Unicode string: string --> encode('new character encoding') --> byte string

#!/usr/bin/env python

# -*- coding:utf-8 -*-

utf_8_a = '我爱中国'

gbk_a = utf_8_a.encode('gbk')

print(gbk_a.decode('gbk'))

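Output:

我爱中国

Finally, note that Unicode is not the Youdao dictionary or Google Translate, and it cannot translate Chinese into English. A correct conversion between character encodings only changes the byte representation of a character; the character itself should not change. For that reason, not every conversion between character encodings is meaningful. What does this mean in practice? When "中国" in GBK is converted to UTF-8, it simply goes from being represented by 4 bytes to being represented by 6 bytes. It should still display as "中国", not turn into "你好" or "China".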
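The GBK to UTF-8 case can be verified in Python 3 with a small helper. This is a sketch under the assumption that the input bytes really are valid in the source encoding; `transcode` is a hypothetical name, not a standard-library function.

```python
def transcode(data, source_encoding, target_encoding):
    """Re-encode bytes from one character encoding to another via a Unicode str."""
    return data.decode(source_encoding).encode(target_encoding)


gbk_bytes = "中国".encode("gbk")
utf8_bytes = transcode(gbk_bytes, "gbk", "utf-8")

print(len(gbk_bytes), len(utf8_bytes))  # the byte counts differ
print(utf8_bytes.decode("utf-8"))       # the characters are unchanged
```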
